Figure 03 Brings Helix Home and Robots to Real Work

Figure’s 03 humanoid and its Helix vision‑language‑action system stepped out of the hype cycle in October 2025. With safer hands, faster perception, and BotQ‑scale manufacturing, real pilots in logistics and early home trials point to practical jobs and falling costs.

The quiet turning point for embodied agents

For a decade, household humanoids have been a promise that always seemed two product cycles away. October 2025 feels different. Figure announced its 03 robot and began showing Helix, its vision‑language‑action system, moving from lab reels to real deployments. Logistics pilots now run for hours without video cuts, and home alpha tests have started with carefully screened participants. Taken together, these are the first credible signs that embodied agents are shifting from hype to deployment.

Early in the month, the company pulled the wraps off 03 and laid out why this generation matters: a redesigned sensor stack, slimmer hands with tactile pads, and a training pipeline built for repetition at scale. In on‑site reporting, the 03 launch was framed as a bid for mass production and safe home use, not a one‑off engineering showpiece. See the context around the October Figure 03 launch.

What changed under the hood

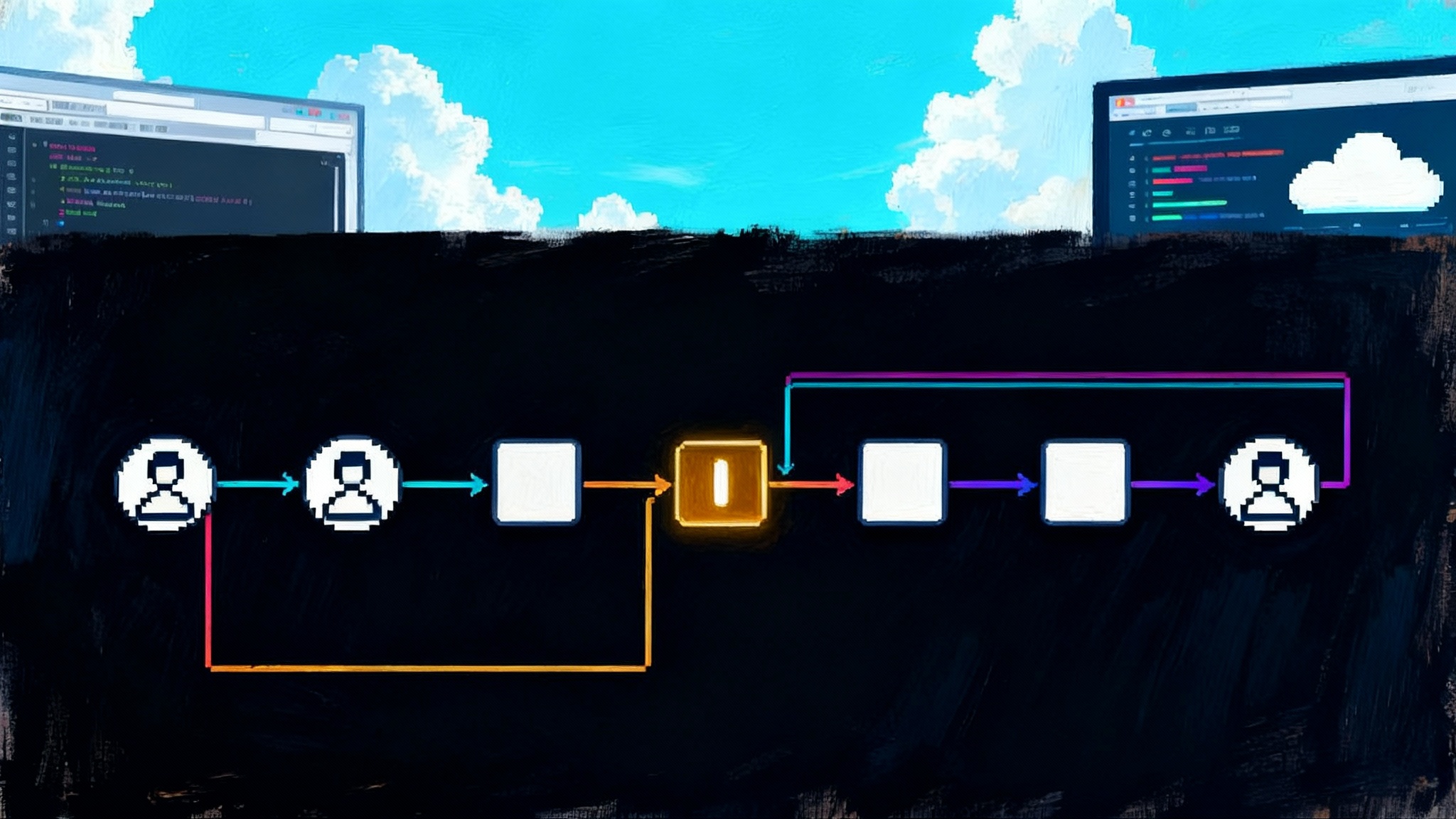

Helix is a vision‑language‑action (VLA) model, which means it fuses perception, instruction following, and motor control. The architecture uses two cooperating systems plus a safety reflex layer. Think of it like this:

- System 2 plans and reasons. It digests a camera stream and your instruction, builds a compact description of the scene, and breaks the task into steps. You might say, “Load the dishwasher and clear the table.” System 2 identifies dishes, silverware, and obstacles, then proposes subgoals.

- System 1 handles reflex‑speed control. It turns those subgoals into precise trajectories for wrists, fingers, and torso at hundreds of updates per second. When a plate starts to slip, System 1 adjusts the grasp before you notice.

- A low‑level safety layer manages balance and collision response. If the robot is nudged while carrying a glass, it prioritizes stability and compliant motion so the spill is contained and the robot does not topple.
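The layering described above can be sketched in a few lines. This is a minimal illustration, not Figure's implementation: the names `Subgoal`, `plan`, `reflex_command`, `safety_clamp`, and `control_step`, along with every numeric value, are hypothetical. The point it shows is ordering, where the planner proposes, the reflex layer adjusts, and the safety layer always has the last word.

```python
from dataclasses import dataclass


@dataclass
class Subgoal:
    name: str
    target_speed: float  # metres per second requested by the planner (made-up unit choice)


def plan(instruction: str) -> list[Subgoal]:
    """System 2 stand-in: split an instruction into ordered subgoals."""
    steps = [part.strip(" .") for part in instruction.split(" and ")]
    return [Subgoal(name=s, target_speed=1.5) for s in steps if s]


def reflex_command(subgoal: Subgoal, slip_detected: bool) -> float:
    """System 1 stand-in: slow the motion sharply while a grasp is slipping."""
    return subgoal.target_speed * (0.25 if slip_detected else 1.0)


def safety_clamp(speed: float, max_speed: float = 1.0) -> float:
    """Safety layer stand-in: bound the final command to a fixed range."""
    return max(0.0, min(speed, max_speed))


def control_step(subgoal: Subgoal, slip_detected: bool) -> float:
    # The clamp wraps the reflex output, so no planner value reaches the
    # actuators without passing through it.
    return safety_clamp(reflex_command(subgoal, slip_detected))
```

Calling `plan("Load the dishwasher and clear the table.")` yields two subgoals, and `control_step` never returns more than the clamp's `max_speed`, whatever the planner requested.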

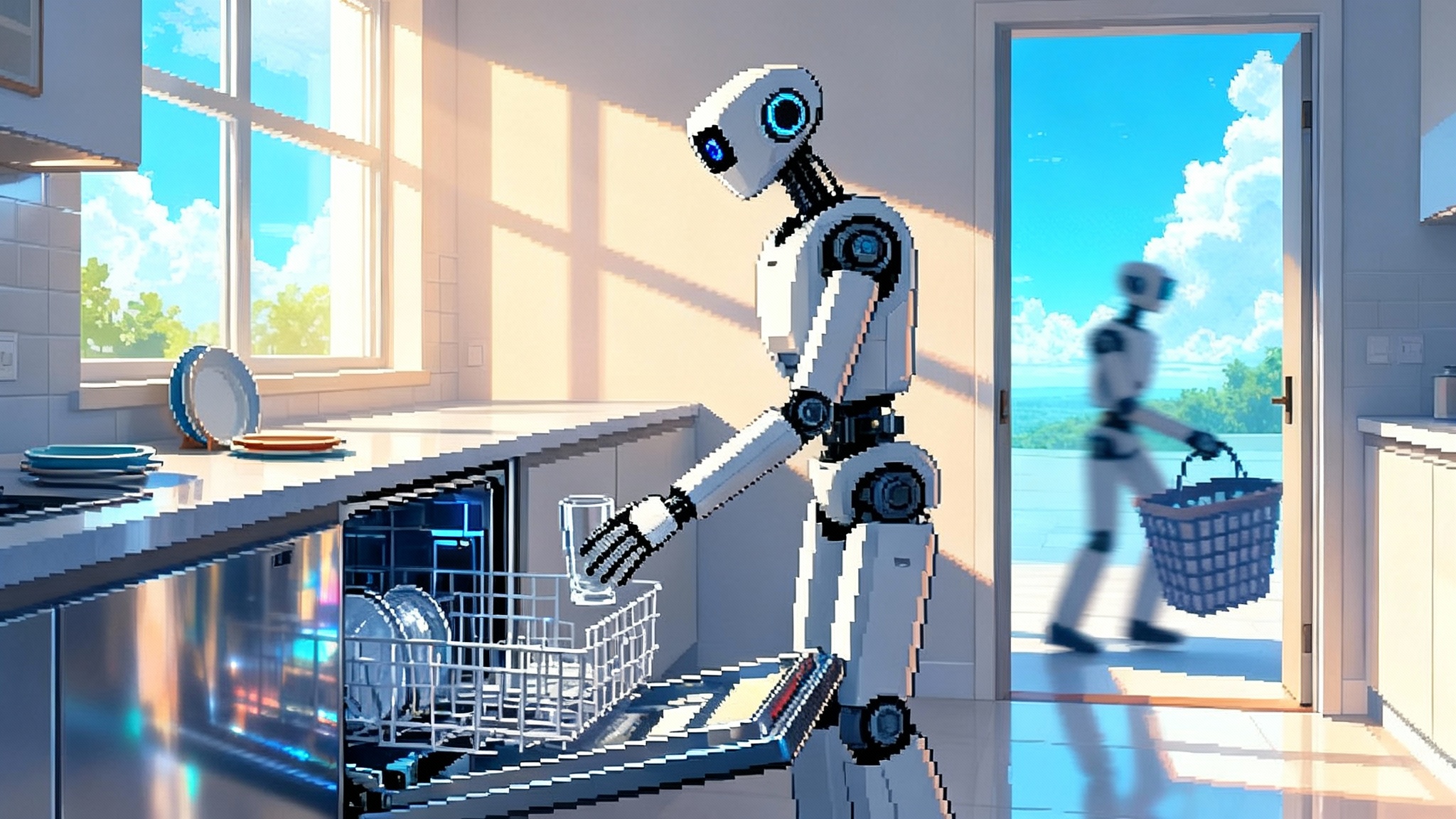

The payoff is not a single headline demo but a qualitative change in behavior. 03 does not just execute prewritten routines. It strings together micro‑skills it learned in one setting and adapts them to another. In a kitchen, the robot can identify unknown items, orient them, and place them where they belong. In a warehouse, the same stack of capabilities rotates irregular parcels to face the barcode scanner and nests soft mailers without crushing them. This modular skill transfer mirrors the modular turn for real agents.

Hands and eyes make the difference

Dexterous manipulation has always been the bottleneck between cool robot videos and useful help at home. The 03’s hands are where Helix meets hardware. The design adds palm cameras and tactile pads in the fingers so the robot can do what people do intuitively: explore an object as it grasps it. That is crucial for towels, grocery bags, crumpled paper, pill bottles with child‑proof caps, and everything else that does not behave like a rigid cube.

Consider how you fold a bath towel. You pinch a corner, trace an edge by feel, correct mid‑motion when the fabric sags, then smooth the fold. Without tactile feedback and a camera close to the contact patch, a robot cannot perform these corrections. With them, the robot treats the task as a stream of tiny state updates: edge found, slip detected, pressure eased, new grasp point chosen. The same skill stack lets it slide a plate into a dishwasher rack without tapping the prongs, or rotate a paper cup to read a logo without crushing it.

The head and chest camera suite also changed. Wider fields of view, higher frame rates, and lower latency reduce the frequency of blind spots that force a robot to pause, look, and try again. That combination, plus better temporal fusion in Helix, explains why the latest logistics clips run at higher throughput, with fewer resets and less operator intervention.

From pilots to jobs: Helix in the wild

Figure’s logistics pilots are an honest test because throughput, error rates, and rework costs show up on a balance sheet. The most telling clips are not the fastest sequences but the slow saves where a package is caught mid‑slip and the robot recovers without hitting a safety stop. In parallel, the company began home alpha tests with a small group of curated households. The tasks look familiar on purpose: loading dishwashers, clearing tables, moving laundry, putting away groceries. These are the kinds of chores that expose messy edge cases and force rapid iteration on both software and hardware. If you are tracking where agents escape the lab, compare this to local agents in practice.

A pattern is emerging. The same skill primitives transfer across settings with little hand‑holding. Grasp classification, orientation finding, placement nudging, and visual scanning all generalize. That is the practical advantage of a vision‑language‑action model over a bag of handcrafted routines. It does not mean magic or full autonomy. It does mean fewer brittle scripts and more graceful degradation when something goes wrong.

BotQ‑scale manufacturing resets the cost curve

Robots became the computer of the future once already. The first wave lost to the spreadsheet of reality. Machined parts were slow to make, assembly was artisanal, and every unit looked a little different. The 03 generation is built for tooling. Part counts are down, tolerance stacks are tighter, and parts that once consumed days on a milling machine now pop out of steel molds in seconds. That is what Figure means by a manufacturing system designed for robots rather than prototypes.

The keystone is BotQ, the company’s dedicated production facility. The public plan is to start around five figures of units per year and grow toward six figures as tooling amortizes and the supply chain matures. Independent coverage summarizes an initial capacity target near twelve thousand units a year and a multiyear ramp to one hundred thousand, along with vertical integration for actuators, hands, batteries, and major structures. See the outline of BotQ production capacity goals.

Why that matters: cost and reliability improve in lockstep with volume. Tooling spreads its cost over thousands of units. Automated tests catch variance early. Subassemblies become swappable modules with tracked provenance. In simple terms, you stop paying for craftspeople to build robots and start paying for a factory to print them. The result is not just a lower unit price. It is stability in the field because every robot behaves like the last one. At fleet scale, over‑the‑air learning also benefits from the hybrid LLM era shift.
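The amortization argument reduces to simple arithmetic. The sketch below uses the two annual volumes quoted above (twelve thousand and one hundred thousand units); the tooling and per-unit figures are placeholders chosen only to make the arithmetic visible, not reported numbers.

```python
def unit_cost(tooling_cost: float, variable_cost: float, units: int) -> float:
    """Per-unit cost when a fixed tooling spend is spread over `units` robots."""
    if units <= 0:
        raise ValueError("units must be positive")
    return tooling_cost / units + variable_cost


# Placeholder inputs (assumptions, not Figure data).
TOOLING = 60_000_000.0   # one-time molds, fixtures, test rigs
VARIABLE = 20_000.0      # materials and assembly per robot

early = unit_cost(TOOLING, VARIABLE, 12_000)    # 5,000 tooling share + 20,000
later = unit_cost(TOOLING, VARIABLE, 100_000)   # 600 tooling share + 20,000
```

With these placeholders the tooling share per robot falls from 5,000 to 600 as volume grows. In practice the variable cost would likely fall too as the supply chain matures, which this sketch deliberately ignores.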

A second‑order effect is data. Once hundreds of similar robots are doing similar work, new skills and bug fixes become over‑the‑air updates rather than bespoke patches. One home teaches a useful edge case, then the fleet learns it. The value of each additional deployment rises because it sharpens the entire model, not just a single unit.

The first real use cases

The question everyone asks is simple: what will these robots do first that is worth paying for? The early answers break into two groups.

Consumer tasks that save a half hour each day:

- Dishwashing helper: pick, scrape, rinse, rack, and run. If the robot fails, the failure mode is a misplaced fork, not a safety event.

- Laundry staging: sort, transfer loads, move folded items to drawers. Folding remains a showpiece, but even partial automation moves the needle.

- Kitchen reset: put away groceries, clear counters, return items to their common locations. The memory feature demonstrated around objects and locations is directly useful here.

Enterprise tasks that convert to clear productivity:

- Logistics kitting and sortation: rotate irregulars to face scanners, de‑nest poly mailers, handle mixed bins at stable cycle times.

- Back‑of‑house retail: unload cages, face shelves, close gaps, and rebuild end caps during off hours when the sales floor is empty.

- Light manufacturing assistance: presentation of parts to workers, insertion of fasteners where tolerances and ergonomics frustrate traditional arms.

- Hospitality and facilities: turn rooms, collect linens, restock supplies, push carts, and operate elevators with vision‑based button pressing.

These jobs share two traits. First, the environment is semi‑structured. That lets Helix reuse the same set of micro‑skills all day. Second, the payback math is not exotic. A robot that reliably saves one to two labor hours per shift at low supervision can justify a monthly operating lease priced like a commercial appliance rather than a science project.
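To make the payback math concrete, here is a rough break-even sketch. Only the range of one to two labor hours saved per shift comes from the text; the shift count, hourly rate, and supervision cost are assumptions for illustration, and `break_even_lease` is a hypothetical helper name.

```python
def break_even_lease(hours_saved_per_shift: float,
                     shifts_per_month: int,
                     loaded_hourly_rate: float,
                     monthly_supervision_cost: float = 0.0) -> float:
    """Largest monthly lease that the labor savings would fully cover."""
    labor_value = hours_saved_per_shift * shifts_per_month * loaded_hourly_rate
    return labor_value - monthly_supervision_cost


# Assumed inputs: 60 shifts a month, a 30.0 per hour loaded labor rate,
# and 300.0 a month of human supervision time.
low = break_even_lease(1.0, 60, 30.0, 300.0)    # one hour saved per shift
high = break_even_lease(2.0, 60, 30.0, 300.0)   # two hours saved per shift
```

Under these assumptions the lease breaks even somewhere between 1,500 and 3,300 per month. The result is most sensitive to how many shifts per month the robot actually works.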

How the VLA plus hands unlock fine manipulation

Three capabilities turn chore demos into dependable work.

- Tactile micro‑planning: With fingertip sensors registering tiny forces, Helix learns the same habit people do. Lighten pressure the moment a cup begins to deform. Increase pressure when friction drops during a rotation. This is a feedback loop, not a fixed grip.

- Contact‑close vision: Palm cameras give the model useful pixels at the point of contact. That is the difference between blindly exploring for a grab point and seeing the crease you need to pinch.

- Reflex envelopes: System 1 is allowed to make millisecond decisions within bounded safety envelopes. System 2 sets the goal, but the reflex layer is free to prevent a tap from turning into a topple.

The combination cuts retries and resets, which is what separates a good video from a good product. In a kitchen, that means fewer clangs and more clean placements. In a warehouse, it means labels facing scanners the first time.
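The tactile feedback loop can be illustrated with a toy controller. This is a hand-written rule under assumed thresholds and units, whereas Helix is described as learning this behavior rather than following fixed rules. The function name `adjust_grip` and all parameter values are hypothetical.

```python
def adjust_grip(force: float, deformation: float, slip: float,
                deform_limit: float = 0.5, slip_limit: float = 0.2,
                step: float = 0.25,
                min_force: float = 0.25, max_force: float = 5.0) -> float:
    """Return the next grip force given the latest fingertip readings.

    Deformation is checked first: protecting the object takes priority
    over holding it more firmly.
    """
    if deformation > deform_limit:
        force -= step          # the cup is starting to crush, so ease off
    elif slip > slip_limit:
        force += step          # friction dropped mid-rotation, so squeeze
    # Keep the command inside a bounded envelope either way.
    return max(min_force, min(force, max_force))
```

Run once per control tick, this turns the grip into a loop over sensor readings instead of a fixed setpoint, which is the distinction the first bullet draws.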

Guardrails that unlock 2026 adoption

Robotics adoption accelerates when everyone involved knows how the system will behave on its worst day. Here is a concrete playbook for 2026 that puts guardrails where they do the most good.

- Certify the hardware for human spaces. For home use, treat UL 3300 as a baseline and add fire, shock, pinch, and stability testing that assumes unsupervised operation near children and pets. For workplaces, align with the updated ANSI/A3 R15.06, the U.S. adoption of ISO 10218, with collaborative limits from ISO/TS 15066. The goal is not a maze of rules. It is one clear dossier per deployment that an insurer can underwrite.

- Enforce speed and force limits by default. Set conservative limits for free‑space speed and contact force in domestic modes, with visible indicators when limits change. In commercial settings, tie higher limits to machine‑readable presence detection and opt‑in training for staff.

- Require a three‑layer control stack. Prove that high‑level planning can never bypass posture control and reflex safety. Demand formal tests that show recovery from slips, trips, and light collisions without leaving the safe envelope.

- Put privacy on a budget. In homes, default to on‑device processing for audio and video when a task allows it. If data leaves the house for learning, require household‑level controls: visible recording status, session expiration, a retention ceiling measured in days, and a one‑button purge. In enterprises, treat recorded footage and logs as sensitive operations data and rotate keys on a schedule.

- Log like an aircraft. Every unit should keep tamper‑resistant event logs with time, state, commanded actions, and contact forces. Mandate incident reporting to a neutral registry when a safety envelope is exceeded. This makes recalls surgical rather than broad.

- Update like a safety system. Over‑the‑air updates should be double‑signed, staged, and reversible. Treat any model update as a potential change to behavior at the contact surface and require a brief post‑update validation routine.

None of these steps slow down progress. They speed it up by making deployments predictable, insurable, and repeatable.
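One common way to approach the aircraft-style logging item is a hash chain, where each record commits to the one before it. The sketch below is an illustration of that general technique, not a description of how any vendor logs. The field names are assumptions, and a hash chain only makes edits detectable: real tamper resistance would also need signing and protected storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first record


def _digest(prev_hash: str, event: dict) -> str:
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_event(log: list, event: dict) -> dict:
    """Append an event whose hash covers the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    record = {"event": event, "prev": prev_hash, "hash": _digest(prev_hash, event)}
    log.append(record)
    return record


def verify(log: list) -> bool:
    """Recompute the chain; any edited or reordered record breaks it."""
    prev_hash = GENESIS
    for record in log:
        if record["prev"] != prev_hash or record["hash"] != _digest(prev_hash, record["event"]):
            return False
        prev_hash = record["hash"]
    return True


log = []
append_event(log, {"t": 1.0, "state": "carrying", "action": "lift", "contact_force_n": 4.2})
append_event(log, {"t": 1.1, "state": "carrying", "action": "hold", "contact_force_n": 9.7})
```

Changing the recorded contact force in the first record after the fact makes `verify(log)` return `False`, which is the property an incident investigator would rely on.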

How to buy and how to pilot

If you are a consumer pilot household or an enterprise operator, turn the abstract promise of embodied agents into concrete milestones.

- Define the job. Choose 3 to 5 tasks with daily repetition and a measurable outcome, like dishwasher cycles completed, parcels oriented, or carts restocked.

- Measure interventions. Count how often a human touches the task and why. Interventions per hour matters more than headline speed.

- Track recoveries. Note where the robot saves a near miss without help. This is a leading indicator of future autonomy.

- Set guardrails. Write a simple operating envelope for each task: allowed speeds, contact forces, and zones where the robot must stop if a person enters.

- Close the loop. Feed edge cases back to the vendor weekly and expect a cadence of updates. Your pilot is most valuable when it generates model improvements that benefit the fleet.
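The operating envelope in the guardrails step can be written down as data instead of prose, which makes it easier to review and to hand to a vendor. A minimal sketch follows; the class, the field names, and the numeric limits are all hypothetical and are not drawn from any standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Envelope:
    task: str
    max_speed_mps: float          # allowed free-space speed
    max_contact_force_n: float    # allowed contact force


def allowed_action(env: Envelope, speed_mps: float,
                   contact_force_n: float, person_in_stop_zone: bool) -> str:
    """Classify a proposed motion against the task's envelope."""
    if person_in_stop_zone:
        return "stop"             # the zone rule outranks everything else
    if speed_mps > env.max_speed_mps or contact_force_n > env.max_contact_force_n:
        return "limit"            # scale the motion back inside the envelope
    return "proceed"


# Illustrative limits only.
dishwasher = Envelope(task="load dishwasher", max_speed_mps=0.5, max_contact_force_n=20.0)
```

Writing one such record per task also gives the pilot a natural place to log which rule triggered each intervention.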

What to watch in 2026

Three numbers will tell us if embodied agents have turned the corner.

- Cost per useful hour. Not list price, but the fully loaded cost divided by hours of successful task execution. Watch for a steady drop as BotQ ramps.

- Interventions per shift. Expect this to fall as Helix learns and as hardware tweaks reduce retries. Averaging fewer than one intervention per hour across a shift is the first big threshold.

- Mean time between safety stops. This metric captures how well the reflex layer and the environment work together. Longer gaps between stops mean higher trust and smoother operations.

If those numbers improve in both homes and warehouses, we will know the curve is bending the right way.
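For anyone tracking these numbers in a pilot, the three metrics are straightforward to compute from basic logs. The sketch below uses my own function names and example inputs, with made-up figures, and follows the definitions given in the list above.

```python
import math


def cost_per_useful_hour(fully_loaded_cost: float, successful_task_hours: float) -> float:
    """Fully loaded cost divided by hours of successful task execution."""
    if successful_task_hours <= 0:
        raise ValueError("successful_task_hours must be positive")
    return fully_loaded_cost / successful_task_hours


def interventions_per_hour(interventions: int, shift_hours: float) -> float:
    """Human touches per hour, averaged over one shift."""
    if shift_hours <= 0:
        raise ValueError("shift_hours must be positive")
    return interventions / shift_hours


def mean_time_between_safety_stops(operating_hours: float, safety_stops: int) -> float:
    """Operating hours per safety stop; infinite if no stop was recorded."""
    if safety_stops == 0:
        return math.inf
    return operating_hours / safety_stops


# Example inputs, invented for illustration.
cph = cost_per_useful_hour(3000.0, 150.0)          # 20.0 per useful hour
iph = interventions_per_hour(6, 8.0)               # 0.75, under the one-per-hour threshold
mtbss = mean_time_between_safety_stops(40.0, 4)    # 10.0 hours between stops
```

Tracking all three together matters because each can be gamed alone: slowing the robot down improves the safety-stop figure while worsening cost per useful hour.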

The bottom line

For years, embodied agents were a story about what would be possible if three things arrived at once: a generalist brain that can plan and act, a hand and sensor stack that can handle the messiness of the real world, and a factory that makes identical robots at scale. October 2025 is the first time all three showed up in the same product plan.

The work ahead is still real. Safety has to move from slideware to certification. Privacy has to be simple enough for families and facilities teams to manage. Reliability has to reach the point where a dishwasher cycle ends more often with clean cutlery than with a pause and a human sigh. But the path is visible. The 03 generation and Helix look like the first credible way to put embodied intelligence to work not just in videos but in daily life.

If 2025 was the reveal, 2026 will be the accountability year. That is good news. It means robots are leaving the hype cycle and entering the service economy, one plate, one parcel, and one well‑designed handoff at a time.