Private PC agents arrive as Intel's AI Assistant Builder debuts

Intel’s AI Assistant Builder turns modern AI PCs into private, fast agents that run on the laptop, not the cloud. Here is what it does, why local matters for security and latency, and a 12‑month plan to ship real workflows.

The on-device agent moment has arrived

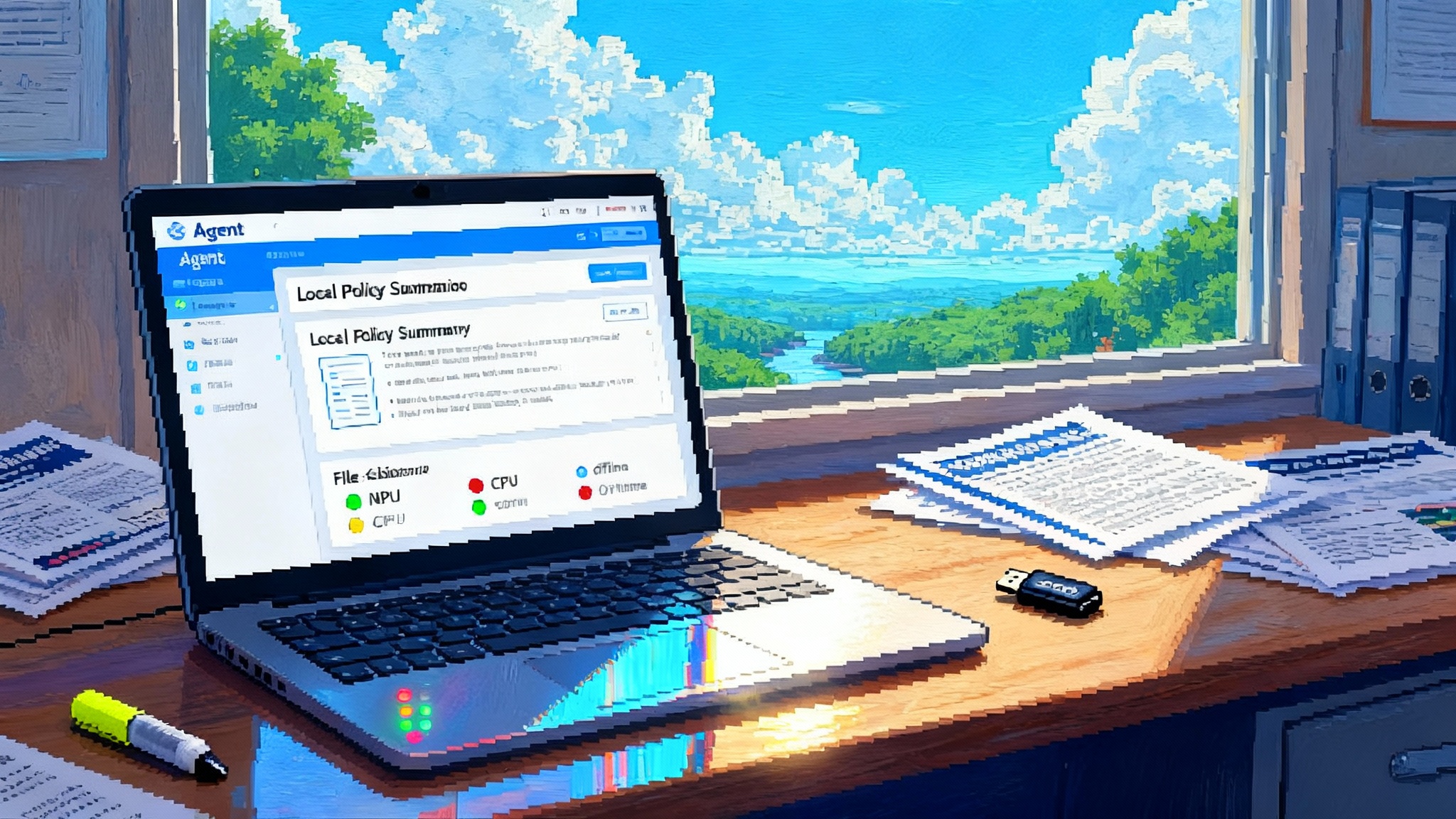

In May 2025, Intel moved a long-running idea from whiteboards into everyday PCs by releasing the Intel AI Assistant Builder public beta. The headline is simple and practical: with a recent Intel Core Ultra system, you can build and run private assistants that live on the machine, respond within a few hundred milliseconds on short tasks, and keep sensitive data inside the device. No cloud contract required. For information workers, field technicians, and compliance teams who hesitate to send documents to remote servers, that is a turning point.

This release also lands in the middle of an AI PC surge. Research firms expect a step change in the installed base of machines that include a neural processing unit, a dedicated accelerator for on-device inference. Gartner projects that tens of millions of AI PCs will ship in 2025 and that nearly a third of the market will be AI capable by year end, a shift that recasts the PC from a thin client to a local AI runtime. See Gartner 2025 AI PC forecast for the scale of the change.

Together, the hardware trend and Intel’s builder tool put quietly powerful agents on the desk. The implication is not another chat window. It is task-level autonomy tied to local files, local applications, and local rules. For security leaders, this evolution fits the controls outlined in Agent Security Stack Goes Mainstream.

Why local agents are the next practical step for enterprise AI

Cloud models are excellent for heavy reasoning and large context, but three forces push enterprises toward on-device agents for day-to-day work.

- Privacy and control. A legal team handling privileged documents or a research team working with pre-publication data wants guarantees that content stays inside the firewall and preferably on the device. An assistant that never uploads files can enforce that boundary by design.

- Low latency. Waiting for a remote model to parse a spreadsheet or summarize a forty-page policy creates friction. With a small language model accelerated by a neural processing unit, responses feel instant and interaction loops tighten.

- Resilience. Agents that run locally keep working through outages, airplane mode, and hotel Wi-Fi. For field service teams and satellite offices, this is not a nice-to-have. It is critical.

The point is not to replace the cloud. It is to flip the default. Let the laptop handle the fast, private work and escalate to the cloud for rare or heavy tasks. That hybrid pattern is robust, cost-aware, and easier to govern. It also aligns with how Reasoning LLMs in production are moving from think to action.

What Intel’s AI Assistant Builder actually does

Intel’s builder offers a ready path for teams that want to get hands-on without assembling a stack from scratch. Here is the practical picture.

- Local first by default. It packages assistants that run entirely on the device, using the neural processing unit for language and vision models where available. If a device lacks the right accelerator, it can fall back to the graphics processor or the CPU.

- Model flexibility. You can pick a small language model tuned for local inference, including models quantized to int8 or int4. Quantization reduces memory footprint and increases speed, often with little accuracy loss for many tasks, although int4 in particular should be checked against your own evaluation set.

- Document and image flows. The builder supports common knowledge flows such as summarizing documents, answering questions about a set of files, or describing images. Under the hood it uses retrieval augmented generation to ground answers in local content.

- Packaging and distribution. Teams can export a configured assistant and share it with colleagues. That makes pilots and departmental rollouts straightforward.

Crucially, the tool is not a walled garden. Developers can swap in their own models, connect to local vector stores, and wire up desktop or line-of-business tools. Think of it as a starter kit that respects enterprise constraints. The approach pairs well with patterns in The Open Agent Stack Arrives.
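
The accelerator fallback described above (neural processing unit first, then graphics processor, then CPU) can be sketched in a few lines. This is a minimal illustration, not the builder's actual logic: `pick_device` and `DEFAULT_PREFERENCE` are hypothetical names, and the list of available devices is assumed to come from whatever runtime you use (for example, OpenVINO exposes one through `Core().available_devices`).

```python
from typing import Iterable, Sequence

# Assumed preference order, taken from the fallback described in the text.
DEFAULT_PREFERENCE = ("NPU", "GPU", "CPU")


def pick_device(available: Iterable[str],
                preference: Sequence[str] = DEFAULT_PREFERENCE) -> str:
    """Return the first available device that matches the preference order.

    Runtimes sometimes report indexed names such as "GPU.0", so the match
    is on the device family prefix rather than the exact string.
    """
    names = list(available)
    for family in preference:
        for name in names:
            if name == family or name.startswith(family + "."):
                return name
    raise RuntimeError(f"no supported device among {names!r}")
```

Calling `pick_device(["CPU", "GPU.0"])` on a machine without a neural processing unit would select `"GPU.0"`, and a CPU-only machine would fall through to `"CPU"`.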

The developer playbook for local agents on AI PCs

If you are a developer or an architect, here is a concrete plan to build agents that feel fast, respect policy, and ship on schedule.

1) Choose SLMs that align with the neural processing unit

- Start with task clarity. Agents that redact, classify, summarize, or fill structured forms thrive on SLMs in the one to seven billion parameter range. Use larger models only when necessary.

- Quantize early. Convert to int8 first, measure, then try int4 for speed and memory savings. Validate output quality against a task-specific evaluation set, not general benchmarks.

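- Optimize for responsiveness across many short requests, not peak tokens per second. Many enterprise tasks require short, frequent generations, so time to first token often matters more than sustained decode speed. Tune batch size and context length to minimize cold starts and cache misses.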

- Exploit the neural processing unit where it helps most. Offload transformer attention and feed-forward blocks to the neural unit and keep control code on the CPU. Use the vendor’s runtime to avoid custom kernels.

- Build a small library of blessed models. Maintain two to three models per task class such as summarization, classification, and tool use. Version them and store conversion scripts in the repository so engineers can reproduce builds.
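
To see why quantizing early pays off, it helps to run the arithmetic on weight memory. The sketch below is a back-of-the-envelope estimate only: `weight_memory_gb` is a hypothetical helper, and it counts weights alone, ignoring the KV cache, activations, and the per-group scales that real quantized formats add.

```python
# Bits stored per weight at each precision level.
BITS_PER_WEIGHT = {"fp16": 16, "int8": 8, "int4": 4}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate weight storage in gigabytes (10**9 bytes).

    Weights only: runtime overhead such as the KV cache is not included.
    """
    bits = BITS_PER_WEIGHT[precision]
    return num_params * bits / 8 / 1e9


if __name__ == "__main__":
    # A seven billion parameter model, the top of the range suggested above.
    for precision in ("fp16", "int8", "int4"):
        print(precision, weight_memory_gb(7e9, precision), "GB")
```

For a seven billion parameter model this works out to roughly 14 GB at fp16, 7 GB at int8, and 3.5 GB at int4, which is the difference between crowding a 16 GB laptop and leaving room for the user's other applications.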

2) Implement on-device tool use with tight scopes

- Prefer callable, auditable tools. Expose a catalog such as file search, spreadsheet queries, calendar access, clipboard read, and redactor. Keep function signatures simple and log all calls locally with hashes of inputs and outputs.

- Use an allow list at the OS boundary. When the agent asks to open an application or modify a file, route that request through a policy layer that checks file paths, process names, and time windows. Deny by default.

- Design for interruption and resumption. Local agents often run alongside other tasks. Use an event loop that can be paused and resumed and write state checkpoints to a local store.

- Keep a human confirmation pattern. For any tool that changes data or sends messages, require an approval step with a clear diff and a one-click accept.
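
The scoping rules in this step can be combined into one small policy layer. What follows is a sketch under stated assumptions rather than a reference design: `ToolPolicy`, `digest`, the tool names, and the three decision strings are all hypothetical, and a production layer would also check process names and time windows as described above.

```python
import hashlib
import json
from dataclasses import dataclass, field
from pathlib import Path


def digest(value) -> str:
    """SHA-256 of a JSON-serializable value, so logs never hold raw content."""
    payload = json.dumps(value, sort_keys=True, default=str).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class ToolPolicy:
    allowed_roots: tuple      # directories the agent may touch
    read_tools: frozenset     # tools that may run without approval
    write_tools: frozenset    # tools that require human approval
    audit_log: list = field(default_factory=list)

    def decide(self, tool: str, path: str, approved: bool = False) -> str:
        # resolve() collapses ".." so traversal cannot escape an allowed root.
        target = Path(path).resolve()
        in_scope = any(
            target.is_relative_to(Path(root).resolve())
            for root in self.allowed_roots
        )
        if not in_scope:
            return "deny"
        if tool in self.read_tools:
            return "allow"
        if tool in self.write_tools:
            return "allow" if approved else "needs_approval"
        return "deny"  # unknown tools are denied by default

    def record(self, tool: str, inputs, output) -> None:
        """Append a local audit entry holding hashes, not raw data."""
        self.audit_log.append({
            "tool": tool,
            "input_sha256": digest(inputs),
            "output_sha256": digest(output),
        })
```

The design choice worth noting is that every branch that is not explicitly allowed ends in a denial, so adding a new tool requires a deliberate policy change rather than a code path that happens to work.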

3) Add guardrails that do not depend on connectivity

- Input and output filters. Run lightweight classifiers locally to block sensitive prompts or mask personally identifiable information before it ever touches the model.

- Policy grounding. Before generation, retrieve local policy snippets and include them in the prompt as binding constraints. After generation, run a rule checker to detect deviations and regenerate with stricter instructions if needed.

- Deterministic fallbacks. For high-risk tasks, add a non-learned pathway such as a template-driven summary or a regular expression redactor.

- Secure storage. Encrypt local caches and vector indexes at rest. Bind keys to the device’s secure enclave or OS keystore.
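
A deterministic fallback of the kind mentioned above can be as plain as a regular expression redactor that runs before any text reaches the model. The patterns here are illustrative assumptions only (an email shape and a US Social Security number shape), and `redact` and `PATTERNS` are hypothetical names; a real deployment would need a reviewed pattern set for its own jurisdiction and data types.

```python
import re

# Illustrative patterns only; not a complete PII catalog.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace each match with a [LABEL] placeholder.

    Returns the masked text and a per-label count of replacements, which
    can be written to the local log without exposing the original values.
    """
    counts = {}
    for label, pattern in PATTERNS.items():
        text, replaced = pattern.subn(f"[{label}]", text)
        counts[label] = replaced
    return text, counts
```

Because the function involves no model and no network call, it behaves the same way offline and gives auditors something they can read line by line.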

4) Orchestrate agents like desktop applications, not web services

- Event-driven architecture. Treat file saves, window focus changes, and system idle time as events. Let the agent subscribe to these and react, rather than polling continuously.

- Resource budgets. Cap memory, token count, and tool call frequency per minute. Local machines vary widely. A simple budget system prevents runaway processes on older laptops.

- Observability without a server. Write structured logs, timelines of tool calls, and evaluation scores to a local folder. Provide a one-click bundle for users to share with support.

- Versioning and rollback. Package each agent as a versioned application. Keep the last two versions installed so users can roll back instantly if a new build misbehaves.
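
The resource budget idea can be illustrated with a sliding-window limiter on tool calls. This is one possible sketch, not a prescribed mechanism: `CallBudget` is a hypothetical class, and the injectable `clock` parameter is an assumption added so the behavior can be tested without waiting a real minute.

```python
import time
from collections import deque


class CallBudget:
    """Allow at most `max_calls` within any rolling `window_seconds`."""

    def __init__(self, max_calls: int, window_seconds: float = 60.0,
                 clock=time.monotonic):
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self._clock = clock
        self._timestamps = deque()

    def try_acquire(self) -> bool:
        """Return True and record the call if the budget allows it."""
        now = self._clock()
        # Drop calls that have aged out of the window.
        while self._timestamps and now - self._timestamps[0] >= self.window_seconds:
            self._timestamps.popleft()
        if len(self._timestamps) >= self.max_calls:
            return False
        self._timestamps.append(now)
        return True
```

An agent loop would call `try_acquire()` before each tool invocation and pause or degrade gracefully when it returns `False`. The same shape works for token counts if each call records a weight instead of a single timestamp.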

Three high-ROI use cases you can ship this year

Local agents shine where privacy, latency, and offline resilience line up with a clear task. Here are three patterns that return value fast.

1) Compliance copilot for regulated teams

What it does: scans local folders and network drives for regulated content, classifies documents by policy, flags risky phrases, drafts disclosures, and prepares audit packets. Runs with read-only access by default and a human approval step for any changes.

Why it works locally: no document leaves the device. The agent can work through full files with no upload size cap because it streams chunks from disk rather than from a remote upload, although each chunk still has to fit the model’s context window. Latency is low, so reviewers can iterate quickly on redlines and disclosures.
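
Streaming a file in chunks is straightforward to sketch. The generator below is a minimal example under assumptions: `stream_chunks` is a hypothetical name, chunk sizes are measured in characters rather than model tokens, and the small overlap between chunks is one common way to avoid cutting a sentence's context in half.

```python
import io
from typing import Iterator, TextIO


def stream_chunks(handle: TextIO, chunk_chars: int = 2000,
                  overlap: int = 200) -> Iterator[str]:
    """Yield overlapping text chunks without loading the whole file.

    Each chunk after the first starts with the last `overlap` characters
    of the previous chunk.
    """
    if not 0 <= overlap < chunk_chars:
        raise ValueError("overlap must be >= 0 and smaller than chunk_chars")
    carry = ""
    while True:
        fresh = handle.read(chunk_chars - len(carry))
        if not fresh:
            break
        chunk = carry + fresh
        yield chunk
        carry = chunk[-overlap:] if overlap else ""


if __name__ == "__main__":
    # In practice the handle would come from open(path, encoding="utf-8").
    sample = io.StringIO("abcdefghij")
    print(list(stream_chunks(sample, chunk_chars=4, overlap=1)))
```

Each yielded chunk can then be classified or summarized on its own, with results merged afterwards, so file size is bounded by disk rather than by the context window.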

How to measure value: time saved per review cycle, number of detected issues caught before filing, and reduction in external compliance tooling costs.

What to build first: a classifier plus a redaction tool, then a templated letter generator that cites local policy paragraphs.

2) Offline field-service assistant

What it does: helps technicians repair equipment by indexing service manuals, parts catalogs, and past work orders on the device. Generates step-by-step checklists, fills return-to-service forms, and suggests probable faults from recent error codes. Syncs deltas when connectivity returns.

Why it works locally: job sites often have weak connectivity. With a local index and a small vision model, the agent can process photos of components, match them to manuals, and guide troubleshooting without a network.

How to measure value: first-time fix rate, mean time to repair, and truck rolls avoided. Track how often the assistant resolves a case without supervisor escalation.

What to build first: a document packager that bundles manuals into a local index and a checklist generator that outputs a printable plan.
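
A first version of the local index does not need a vector database. The sketch below uses plain term counts and cosine similarity as a stand-in for the embedding-based retrieval a real assistant would likely use; `LocalIndex`, `tokenize`, and `cosine` are hypothetical names, and the manual snippets in the usage note are invented for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split on non-alphanumeric characters."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    if dot == 0:
        return 0.0
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)


class LocalIndex:
    """In-memory index that maps document ids to term counts."""

    def __init__(self):
        self.docs = {}

    def add(self, doc_id: str, text: str) -> None:
        self.docs[doc_id] = Counter(tokenize(text))

    def search(self, query: str, top_k: int = 3) -> list[str]:
        """Return up to `top_k` document ids, best match first."""
        query_vec = Counter(tokenize(query))
        scored = []
        for doc_id, vec in self.docs.items():
            score = cosine(query_vec, vec)
            if score > 0:
                scored.append((score, doc_id))
        scored.sort(key=lambda pair: (-pair[0], pair[1]))
        return [doc_id for _, doc_id in scored[:top_k]]
```

Indexing a pump manual that mentions error code E42 and then searching for "error E42 pump" would surface that manual ahead of unrelated ones. Swapping `Counter` vectors for embeddings later changes the scoring function but not the interface.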

3) Secure data-room assistant for transactions

What it does: lives inside a locked-down virtual desktop that hosts a due-diligence data room. Summarizes binders, compares versions, extracts key financials, and produces questions for sellers. Produces nothing that includes raw sensitive data unless the user approves a reveal.

Why it works locally: deal rooms often ban outbound connections. An on-device agent can run in the same environment as the files, respecting the same controls and audit trails.

How to measure value: hours saved per associate during diligence, fewer missed items in quality of earnings checks, and faster issue routing to subject matter experts.

What to build first: a compare tool for draft and final exhibits, plus a structured extractor for term sheets and covenants.
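
The compare tool can start from Python's standard `difflib` module. This is a minimal sketch that assumes the exhibits have already been extracted to plain text; `compare_versions` is a hypothetical helper, and the sample clause in the usage note is invented.

```python
import difflib


def compare_versions(draft: str, final: str, context: int = 1) -> list[str]:
    """Return unified-diff lines between two text versions.

    Lines starting with "-" exist only in the draft, and lines starting
    with "+" exist only in the final. Identical inputs yield an empty list.
    """
    return list(difflib.unified_diff(
        draft.splitlines(),
        final.splitlines(),
        fromfile="draft",
        tofile="final",
        n=context,
        lineterm="",
    ))
```

Given a draft containing `Rate: 4%` and a final containing `Rate: 5%`, the result includes the lines `-Rate: 4%` and `+Rate: 5%`, which is the kind of clear diff a reviewer can approve or question at a glance.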

A 12-month roadmap to ship agentic desktop apps without the cloud

This plan assumes a small platform team that partners with security and a few pilot departments. Adjust the milestones to your environment. The goal is a production-quality agent that runs locally on AI PCs and scales to thousands of endpoints.

Months 1 to 3: Prove the core loop

- Pick one high-ROI use case from the list above.

- Hardware baseline. Standardize on one or two AI PC configurations with a neural processing unit and at least 32 GB of memory for developer machines and 16 GB for pilot users.

- Model selection. Compare two SLMs at the same quantization level on a task-specific dataset. Evaluate speed, accuracy, and failure modes.

- Tool catalog v0. One read-only tool for search, one write tool that requires human approval. Keep everything logged locally.

- Guardrails v0. Add a simple redaction filter and a policy grounding step that inserts snippets into prompts.

- Developer experience. Create a reproducible build that converts models, packs assets, and produces a signed installer. Include a toggle to switch between the neural unit and CPU for debugging.

- Success criteria. Define three numeric targets such as sub-300 millisecond time to first token for short outputs, 90 percent precision on the top three label classes, and a crash-free hour under a synthetic load test.
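
Numeric targets are only useful if they are checked the same way every time. The sketch below shows one way to score a pilot run against the latency and precision targets named above. The function names are hypothetical, and using the 95th percentile (nearest-rank method) for the latency check is an assumption; the text does not say which statistic to use.

```python
import math


def precision(y_true: list, y_pred: list, label) -> float:
    """Share of predictions for `label` that were correct."""
    hits = [truth for truth, pred in zip(y_true, y_pred) if pred == label]
    if not hits:
        return 0.0
    return sum(1 for truth in hits if truth == label) / len(hits)


def percentile(values: list, pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def meets_targets(latencies_ms: list, y_true: list, y_pred: list,
                  labels: list, max_latency_ms: float = 300.0,
                  min_precision: float = 0.90) -> bool:
    """True if p95 latency and per-label precision both hit their targets."""
    latency_ok = percentile(latencies_ms, 95) < max_latency_ms
    precision_ok = all(
        precision(y_true, y_pred, label) >= min_precision for label in labels
    )
    return latency_ok and precision_ok
```

Running `meets_targets` in the nightly build turns the success criteria into a pass or fail signal instead of a slide.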

Months 4 to 6: Pilot with real users and tighten safety

- Expand to 50 to 100 pilot users in one department.

- Offline awareness. Show a simple indicator and queue outbound actions for later sync.

- Security review. Bind local caches to the OS keystore, encrypt vector indexes at rest, and ensure logs exclude raw sensitive data by default.

- Approval flows. Present clear diffs before any write action and capture approvals with a timestamp and local signature.

- Evaluation harness. Collect opt-in usage metrics such as completion time and manual edits. Replay a rolling test set nightly to catch regressions.

- Support runway. Build a one-click export that bundles logs and anonymized traces for troubleshooting.

- Governance checkpoints. Agree on what the agent can do without a human in the loop. Put those permissions in a policy file that ships with the app and can be audited.
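
The offline-awareness item in this phase comes down to a queue that holds outbound actions until the network returns. Here is a minimal in-memory sketch: `OutboundQueue` is a hypothetical class, the `send` and `is_online` callables are assumptions standing in for your real sync client and connectivity check, and a production version would persist the queue to encrypted local storage.

```python
from collections import deque


class OutboundQueue:
    """Hold outbound actions while offline and send them in order later."""

    def __init__(self, send, is_online):
        self._send = send            # callable that delivers one action
        self._is_online = is_online  # callable returning True when connected
        self._pending = deque()

    @property
    def pending_count(self) -> int:
        return len(self._pending)

    def flush(self) -> int:
        """Send queued actions oldest first; return how many were sent."""
        sent = 0
        while self._pending and self._is_online():
            try:
                self._send(self._pending[0])
            except ConnectionError:
                break  # keep the action queued and retry on the next flush
            self._pending.popleft()
            sent += 1
        return sent

    def submit(self, action: dict) -> str:
        """Queue an action, try to deliver, and report "sent" or "queued"."""
        self._pending.append(action)
        self.flush()
        return "sent" if not self._pending else "queued"
```

Because `submit` always enqueues before flushing, a new action can never jump ahead of older ones that are still waiting, which keeps the sync order predictable for audit purposes. The `pending_count` property is a natural source for the offline indicator.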

Months 7 to 9: Scale and integrate with the desktop

- Endpoint distribution. Push the agent through the enterprise software catalog with automatic updates and a safe rollback path.

- Model hardening. Freeze model versions for a quarter and backport fixes. Maintain conversion scripts and reference metrics for each revision.

- Selective multi-agent orchestration. Pair a fast classifier with a slower planner that runs on edge cases. Keep control logic simple and observable.

- Desktop hooks. Add file system watchers for designated folders, context menu entries for summarize or classify, and a quick action hotkey.

- Performance budgets. Enforce per-device budgets for memory and tokens. Show a status panel so power users can tune behavior.

- Training and internal marketing. Record short clips that demonstrate real workflows with sensitive content handled locally.
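
The selective multi-agent item in this phase can be as simple as a confidence-gated cascade. The sketch below assumes the fast classifier returns a label with a confidence score and that the slow planner returns a label only. The `cascade` function, both callables, and the 0.8 threshold are hypothetical and would need tuning on your own data.

```python
from typing import Callable, Tuple


def cascade(text: str,
            fast: Callable[[str], Tuple[str, float]],
            slow: Callable[[str], str],
            threshold: float = 0.8) -> Tuple[str, str]:
    """Use the fast model when it is confident, else defer to the slow one.

    Returns the chosen label and which path produced it, so the route
    taken can be logged and observed.
    """
    label, confidence = fast(text)
    if confidence >= threshold:
        return label, "fast"
    return slow(text), "slow"
```

Returning the path alongside the label keeps the control logic observable: a local log of how often the slow path fires shows whether the threshold is set sensibly.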

Months 10 to 12: Production hardening and wider rollout

- Threat modeling and chaos drills. Test prompt injection on local files, simulate corrupted indexes, and unplug the network mid-task. The agent should fail safely and recover.

- Accessibility and internationalization. Support screen readers, keyboard navigation, and required languages for your workforce.

- Compliance evidence. Produce a living package that documents models, policies, approvals, and tests.

- Cost review. Compare time saved and vendor fees avoided to the cost of hardware upgrades and maintenance.

- Finalize a cross-department template. Distill a pattern others can apply: a small language model, a handful of tools, one policy file, a local index, and a signed installer.

What about the cloud and big models

Local agents are not a rejection of hosted models. They are a boundary. For heavy reasoning, large-context synthesis, or tasks that benefit from global knowledge, call out to a hosted model through a broker with clear consent. Redact anything sensitive before the request leaves the machine, and cache inputs and outputs locally. Make escalation explicit so users understand when data leaves the machine and why.
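
An escalation broker along these lines fits in a short class. This is a sketch under assumptions, not a vendor API: `EscalationBroker` is a hypothetical name, and the `redact` and `call_hosted_model` callables are placeholders for your own redaction step and hosted-model client.

```python
import hashlib


class EscalationBroker:
    """Gate hosted-model calls behind consent, redaction, and a local cache."""

    def __init__(self, redact, call_hosted_model):
        self._redact = redact          # callable: str -> redacted str
        self._call = call_hosted_model # callable: str -> answer str
        self._cache = {}
        self.outbound_log = []         # hashes of prompts that left the machine

    def ask(self, prompt: str, user_consented: bool) -> str:
        if not user_consented:
            raise PermissionError("escalation requires explicit user consent")
        # Redact first so the raw prompt never reaches the network or cache key.
        safe_prompt = self._redact(prompt)
        key = hashlib.sha256(safe_prompt.encode("utf-8")).hexdigest()
        if key not in self._cache:
            self.outbound_log.append(key)
            self._cache[key] = self._call(safe_prompt)
        return self._cache[key]
```

The `outbound_log` gives users and auditors a local record of every request that actually left the device, while repeated questions are answered from the cache without a second network call.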

The bottom line for leaders

If you have been waiting for a practical way to put agents in front of employees without rewriting your data governance playbook, 2025 is your window. The installed base of AI PCs is rising, model runtimes are mature enough to run fast on the neural processing unit, and Intel’s builder has lowered the setup cost. The opportunity is to move routine expert work to trusted local assistants and keep scarce human attention for the last ten percent of judgment.

Start small, pick a measurable workflow, and ship something your colleagues can try without a tutorial. The agent that quietly reads the company’s own documents and answers in a few hundred milliseconds is the one that sticks.