OpenAI AgentKit turns demos into production-grade agents

OpenAI’s AgentKit bundles Agent Builder, ChatKit, Evals, and reinforcement fine-tuning into a versioned pipeline you can trust. Here is why it matters, what shipped, and a practical one-week plan to go live with a monitored agent.

The news, and why it matters now

OpenAI introduced AgentKit on October 6, 2025, and it is more than a feature drop. It is a blueprint for turning agents from flashy demos into dependable applications. The package combines Agent Builder for visual workflow design, ChatKit for embeddable conversation interfaces, expanded Evals for measurement, and reinforcement fine-tuning for performance gains. Together they form a production pipeline where agents can be versioned, tested, deployed, and monitored as cleanly as any modern service. See the launch details in OpenAI’s announcement of AgentKit on October 6, 2025.

If last year was the era of improvisational agent demos, this quarter is about shipping software you can sign off on with a change request. AgentKit’s promise is simple. Less glue code. Fewer one-off dashboards. A shared vocabulary between product, engineering, legal, and security. You can trace an agent run, roll back a workflow version, prove that guardrails are in place, and embed the experience in your app without rebuilding the front end each time.

From fragmented stacks to a single pipeline

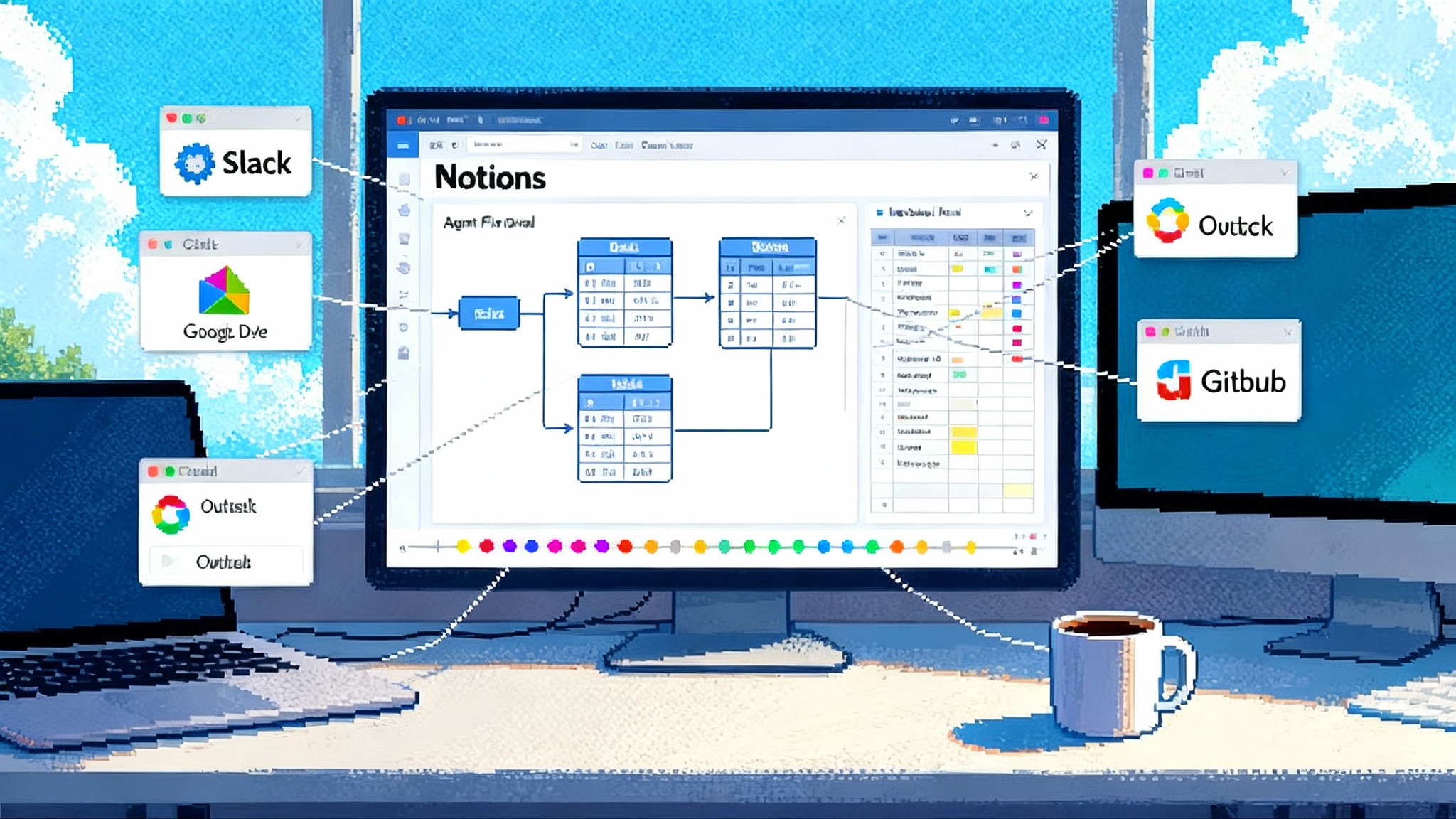

Until now, most teams glued together a stack that looked like this: a prompt or two in a notebook, a custom orchestration script, a couple of bare-bones connectors to internal systems, a hand-rolled chat widget, and a spreadsheet of ad hoc tests. Versioning was handwritten. Evaluation was manual. The moment the use case expanded to multiple tools or teams, the setup sagged.

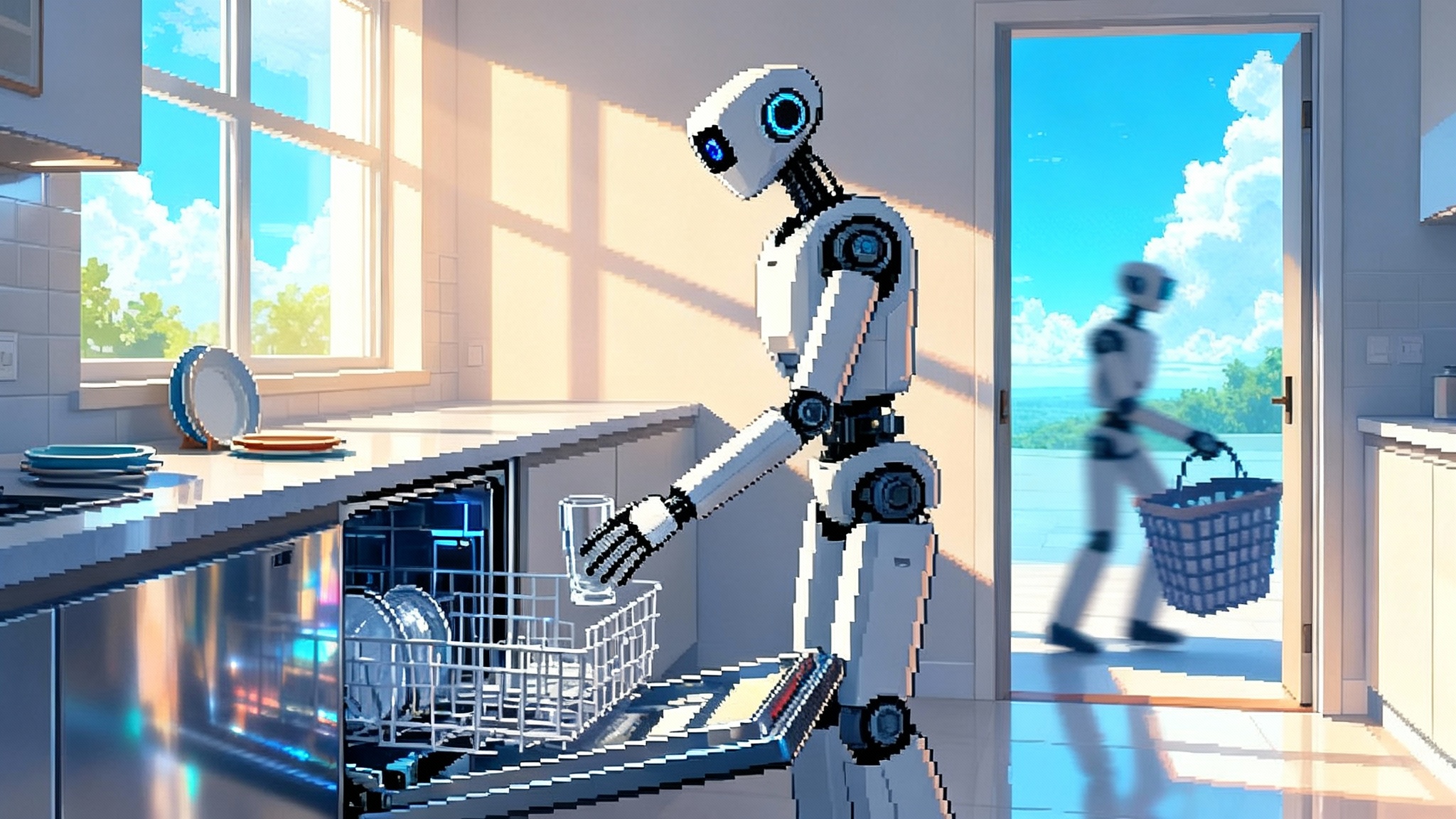

AgentKit replaces that sprawl with a path that mirrors how production software grows up:

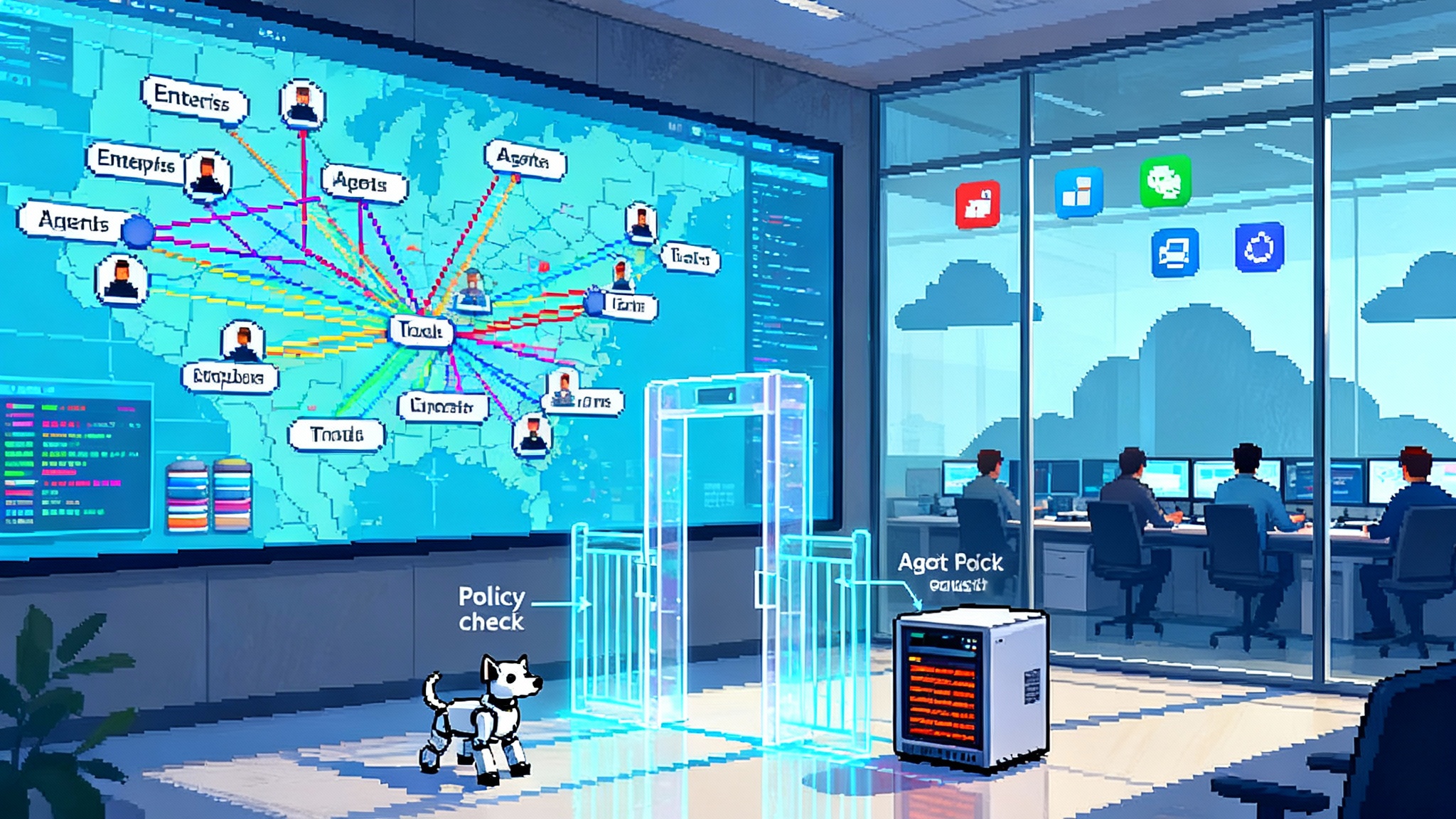

- Design in Agent Builder. Create multi-step logic on a visual canvas, wire tools and data sources, and keep versions. Product can point at the graph and ask clear questions. Engineering can diff versions and enforce review. Legal and security can validate guardrails in the same place, aligning with the priorities in our coverage of the agent security stack this fall.

- Embed with ChatKit. Ship a flexible chat interface that handles streaming, threading, and state so you do not rebuild chat from scratch. Brand it, theme it, and focus on behavior, not UI plumbing.

- Measure with Evals. Treat quality like a first-class artifact. Use datasets, trace grading, and prompt optimization to catch regressions. Add third-party models in evals when you need comparisons.

- Improve with reinforcement fine-tuning. When prompts hit a ceiling, RFT lets you push specific behaviors that matter in your domain, complementing the push toward reasoning LLMs in production.

The result is a production-grade feedback loop. Define. Ship. Measure. Improve. Repeat.

What shipped, concretely

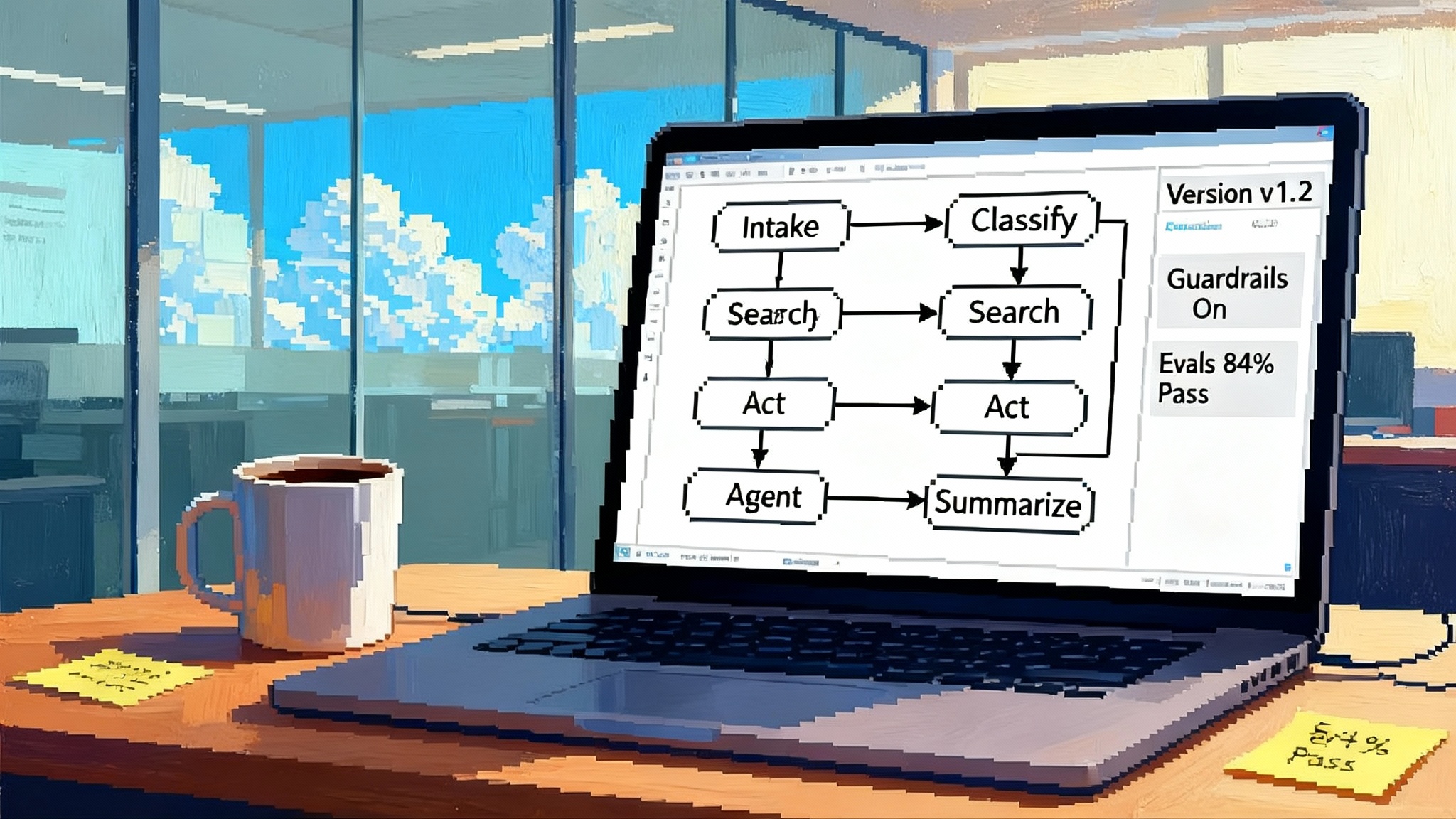

- Agent Builder: A visual canvas for composing multi-agent flows with versioning and guardrails. Templates cover common patterns. You can run preview executions inline and attach evals to specific paths.

- ChatKit: A drop-in chat experience that you can embed in web or mobile clients. It takes care of streaming, structured tool output, and showing the model’s reasoning traces where appropriate.

- Evals upgrades: Datasets for repeatable tests, trace grading for end-to-end workflows, automated prompt optimization, and support for evaluating third-party models inside the same platform.

- Reinforcement fine-tuning: General availability on o4-mini and a private beta for GPT-5. New options include custom tool-call training and custom graders so you can reward exactly what matters in your use case.

- Connector Registry: For enterprises with a Global Admin Console, a central place to manage connectors like Google Drive, SharePoint, Teams, and internal systems.

Pricing and rollout in plain terms

There is no new ticket at the door. AgentKit components are included with standard API model pricing. ChatKit and the new Evals capabilities are generally available today. Agent Builder is in beta. Connector Registry is starting a beta rollout to select API, ChatGPT Enterprise, and Edu customers that have a Global Admin Console. OpenAI also signals that a Workflows API and options to deploy agents directly to ChatGPT are planned.

What this means for planning: you can prototype with full measurement this quarter without waiting for a new contract. If your organization needs Connector Registry for governance, you will request the beta via your admin path. If you want reinforcement fine-tuning on GPT-5, you will need the private beta; otherwise start with o4-mini and move up when available.

Why this is different from past attempts

Most agent frameworks asked you to be both playwright and stage manager. You wrote the playbook and you built the lighting rig, the set, and the ticketing system. AgentKit’s split frees you to focus on the script and the cast.

- Separation of concerns: Agent Builder owns workflow design and versioning. ChatKit owns the user surface and real-time state. Evals owns quality. RFT owns performance. The edges between them are explicit. That reduces untracked behavior.

- Versioned everything: You can pin a version of an agent and evolve it without breaking the running instance. When a regression appears, you can roll back quickly and explain precisely what changed.

- Evaluation-first: Rather than a bookmarked list of manual test prompts, you store datasets and graders. You can run regressions on every change. This builds trust with compliance and with customers. You are not asking them to believe a demo; you are showing a score that improves week over week.

- Production ergonomics: Tool use, tracing, connectors, and security reviews happen in one place. That means less latency from decision to deployment.
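
The pin-and-roll-back behavior in the "Versioned everything" point can be pictured with a small in-memory registry. This is only a sketch and not AgentKit's API: `VersionRegistry` and its methods are hypothetical names, and a real setup would rely on the versioning that Agent Builder provides.

```python
class VersionRegistry:
    """Hypothetical registry: stores workflow versions and tracks the pinned one."""

    def __init__(self):
        self._versions = {}  # version label -> workflow definition
        self._history = []   # promoted versions, oldest first

    def register(self, version, definition):
        # Versions are immutable once registered, so a diff always means a new label.
        if version in self._versions:
            raise ValueError(f"version {version!r} already exists")
        self._versions[version] = definition

    def promote(self, version):
        # Pin a registered version as the one serving traffic.
        if version not in self._versions:
            raise KeyError(f"unknown version {version!r}")
        self._history.append(version)

    def rollback(self):
        # Drop the current pin and return to the previously promoted version.
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()
        return self._history[-1]

    @property
    def pinned(self):
        return self._history[-1] if self._history else None
```

Because the promotion history is kept, a rollback is a single step and the audit answer to "what changed" is the diff between two registered definitions.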

A one-week path to a real, monitored agent

You can stand up a scoped, production-monitored agent in five business days. Here is a playbook that balances ambition with risk.

Day 1: Choose one narrow outcome and define success

- Pick a workflow with bounded scope and real impact. Examples: triage inbound support emails and propose first replies; draft procurement intake forms and route to the right approver; generate first-pass research summaries with citations for a sales team.

- Write down hard constraints. Response time target. Maximum tool-call budget per run. Data sources allowed. Redlines for safety and tone.

- Create a tiny eval dataset, 25 to 50 cases from real history. Include tricky edge cases and add a pass-fail rubric. This becomes your north star.
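
One way to write the Day 1 artifacts down is as plain data, so they can be reviewed and reused later. The sketch below is an assumption about shape, not a required format: `Constraints`, `EvalCase`, `passes`, the field names, and the example values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Constraints:
    """Hard limits agreed on Day 1 (placeholder values)."""
    max_latency_s: float = 8.0
    max_tool_calls: int = 5
    allowed_sources: frozenset = frozenset({"tickets", "kb"})


@dataclass(frozen=True)
class EvalCase:
    """One case from real history plus its pass-fail rubric."""
    case_id: str
    input_text: str
    must_include: tuple = ()      # phrases a passing reply contains
    must_not_include: tuple = ()  # redlines for safety and tone
    is_edge_case: bool = False


def passes(case: EvalCase, reply: str) -> bool:
    """Pass only if every required phrase appears and no redline phrase does."""
    text = reply.lower()
    has_required = all(p.lower() in text for p in case.must_include)
    hits_redline = any(p.lower() in text for p in case.must_not_include)
    return has_required and not hits_redline


# Two illustrative cases; a real set would hold 25 to 50 drawn from history.
DATASET = [
    EvalCase("refund-001", "Where is my refund?", must_include=("refund",)),
    EvalCase("legal-002", "I will sue you.",
             must_not_include=("guarantee",), is_edge_case=True),
]
```

A phrase-matching rubric is deliberately crude. It is enough to start, and it can be swapped for a model-based grader once the dataset has proven useful.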

Day 2: Connect data and tools, then sketch the flow

- In Agent Builder, create the first graph: intake, classify, fetch context, reason, act, and summarize. Keep it minimal. One tool per node.

- Add connectors you are allowed to use. Start with read-only where possible. Ensure audit logs are on.

- Enable baseline guardrails. Mask personally identifiable information, flag sensitive terms, and set per-tool rate limits.
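
To make the baseline guardrails concrete, here is a minimal sketch of masking and term flagging applied to text before it reaches a model or a log. It assumes simple regular expressions are acceptable as a first pass; the patterns, `mask_pii`, `flag_sensitive`, and the term list are illustrative, not the guardrails that Agent Builder ships.

```python
import re

# Loose patterns: good enough for a first pass, not a complete PII detector.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\b\d{3}[-. ]\d{3}[-. ]\d{4}\b")  # US-style numbers only

# Hypothetical terms that should route a conversation to review.
SENSITIVE_TERMS = ("lawsuit", "chargeback")


def mask_pii(text: str) -> str:
    """Replace email addresses and phone numbers with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def flag_sensitive(text: str) -> list:
    """Return the sensitive terms found in the text, case-insensitively."""
    lowered = text.lower()
    return [term for term in SENSITIVE_TERMS if term in lowered]
```

For example, `mask_pii("Mail jane.doe@example.com or call 555-123-4567.")` returns `"Mail [EMAIL] or call [PHONE]."`.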

Day 3: Wire ChatKit and ship an internal preview

- Embed ChatKit into your internal web app behind a feature flag. Keep the interface honest about model uncertainty. Show traces to internal testers to build understanding.

- Invite five to ten users. Capture qualitative feedback and obvious failure modes. Do not try to fix every complaint. Note patterns.

Day 4: Build evals and close the loop

- Turn the Day 1 dataset into a formal eval. Add trace grading for at least one end-to-end run. Set a minimum acceptable score and institute a block on deployment if it is not met.

- Add automated prompt optimization to explore improvements within your constraints. Keep the best candidate as a new version and lock it.

- Start recording basic metrics: success rate, average latency, tool-call error rate, and eval pass rate. These become your dashboard.
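
The deployment block from Day 4 reduces to a small check that a release script can call. The sketch below assumes eval results arrive as a list of pass-fail booleans and that 0.9 is the agreed bar; both the threshold and the function names are placeholders.

```python
def eval_pass_rate(results: list) -> float:
    """Share of eval cases that passed."""
    if not results:
        raise ValueError("no eval results to score")
    return sum(results) / len(results)


def may_deploy(results: list, min_pass_rate: float = 0.9) -> bool:
    """Gate: allow promotion only when the pass rate meets the minimum."""
    return eval_pass_rate(results) >= min_pass_rate
```

Raising an error on an empty result set is intentional: a run that produced no scores should block a release rather than pass it silently.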

Day 5: Tighten the workflow and prepare to go live

- Remove any unnecessary tool calls that add cost without improving outcomes. Replace fragile prompts with structured steps. Add a feedback capture in ChatKit.

- If you hit a ceiling with prompt tuning, test a small reinforcement fine-tuning run on o4-mini using your annotated interactions. Reward correct tool use, factual grounding, and concise tone. Keep it small. The goal is directional improvement, not perfection.

- Run evals again. If you clear the bar and latency is within target, prepare a limited pilot launch to 5 percent of traffic with instant rollback.
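
A limited pilot needs a stable way to decide who sees the agent. One common approach, sketched here under the assumption that each request carries a user identifier, is to hash that identifier into one of 100 buckets. The function name `in_pilot` is hypothetical.

```python
import hashlib


def in_pilot(user_id: str, percent: int) -> bool:
    """Deterministically place a user in the pilot for a given traffic share."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    return bucket < percent
```

Each user always lands in the same bucket, so anyone included at 5 percent stays included when the share rises to 20 percent, and setting the share to 0 acts as the instant rollback.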

Weekend buffer or Days 6 and 7: Pilot, monitor, iterate

- Pilot with real users. Monitor the dashboard every hour on day one, then twice daily. Investigate traces for failures and label them for the next RFT batch.

- If the agent misroutes or hallucinates, use guardrails to block the specific pattern. Add examples to the eval dataset so it does not regress.

- If the pilot holds for 48 hours with stable metrics, ramp to 20 percent. Keep your version pin so you can roll back.

By Monday morning, you will have a working, monitored agent, a living eval suite, and a plan to improve. None of this requires bespoke infrastructure.

What developers can ship this quarter

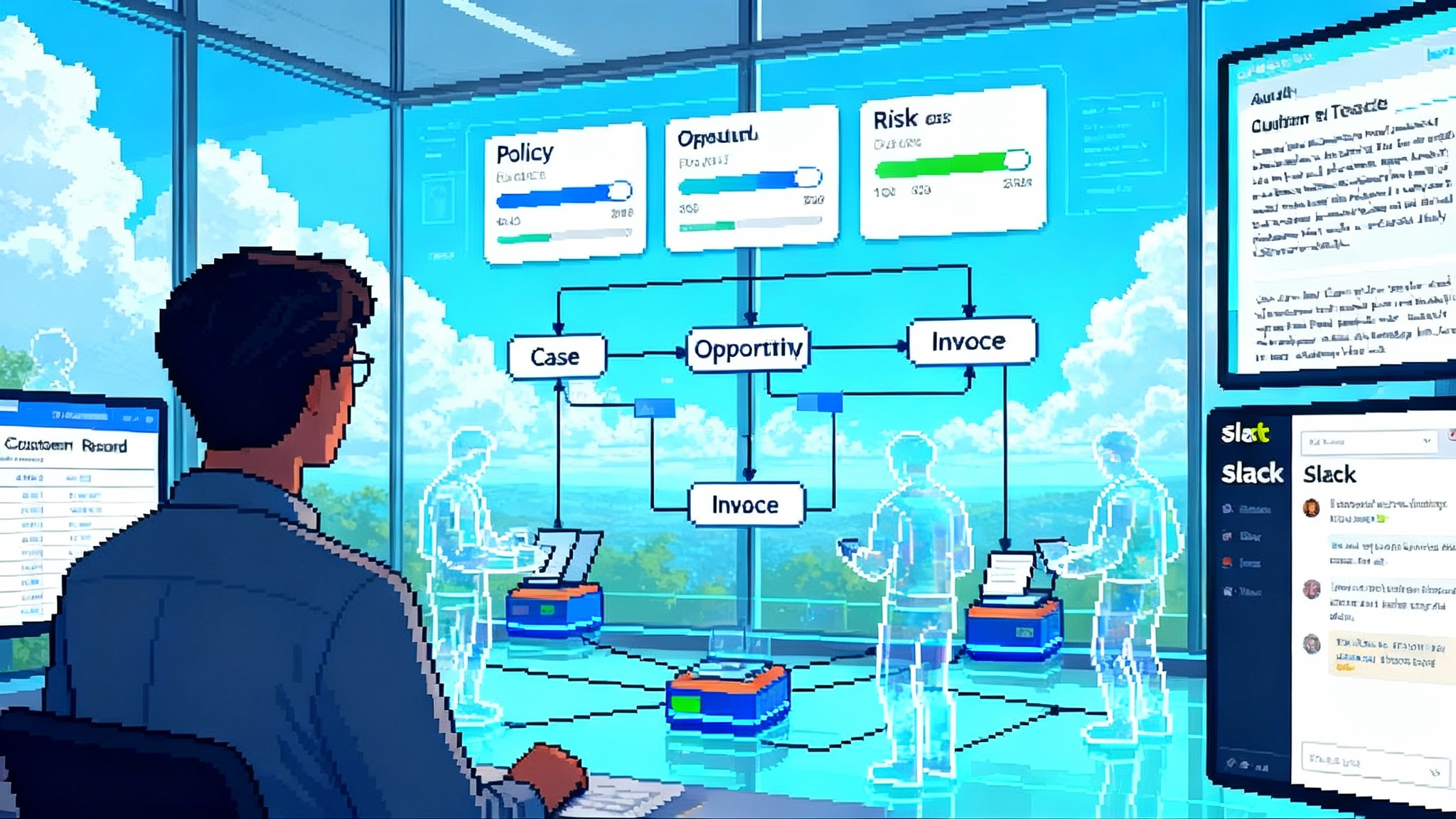

- Support triage and drafting: Pull context from tickets and knowledge bases, draft a first reply, and route edge cases to a human. Evals track tone and factual grounding. ChatKit captures user satisfaction and flags unsure responses.

- Sales research and personalization: Create a research agent that drafts a one-paragraph brief with public facts and a single personalization based on CRM data. Evals check for accuracy and compliance. Reinforcement fine-tuning rewards short, high-signal output.

- Procurement or finance assistants: Create an intake agent that standardizes vendor details, classifies risk, and fills a request form. Guardrails mask sensitive identifiers.

- Internal knowledge assistant: Use connectors to index drive folders and wikis, then answer questions with citations. Evals track the ratio of supported claims to unsupported ones. Reinforcement fine-tuning teaches better tool timing.

For teams in regulated environments, the Connector Registry plus versioned workflows will make audits materially easier. For startups, the biggest unlock is not cost. It is cycle time. You can reach a trustworthy baseline in days rather than months, then iterate weekly.

How the pieces fit together, with examples

- Agent Builder as the control room: Think of it like a production storyboard. The nodes are your shots, the edges are transitions, and the versions are your cuts. Teams at Ramp and LY Corporation emphasized that they went from blank canvas to working agents in hours, not quarters. The value is shared visibility. Everyone can point to the same map.

- ChatKit as the stage: It handles the hard parts of a modern chat experience. Streaming, threading, partial results, and a place to show uncertainty. Canva and HubSpot reported fast integrations for support agents that their users can interact with directly inside existing products.

- Evals as the scoreboard: Quality is not a vibe. With datasets, trace grading, and automated prompt optimization, you capture high-value failures, assign clear graders, and enforce quality gates. Leaders can watch the score move rather than flip between screenshots.

- Reinforcement fine-tuning as the accelerator: When you want an agent to use tools with discipline or follow a house style, RFT gives you a game plan. Start with o4-mini for speed. Use custom graders to encode what good means in your application. When the GPT-5 beta opens, you can carry forward the same grading philosophy.
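
To illustrate what "encode what good means" can look like, here is a sketch of a scoring function that rewards correct tool use, grounding, and concision. It is not the grader format that OpenAI's fine-tuning API expects; the `Run` record, the weights, and the word limit are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Run:
    """Hypothetical summary of one annotated agent run."""
    tool_calls: list       # tools the agent actually called, in order
    expected_tools: list   # tools a reviewer says it should have called
    claims_total: int      # factual claims in the reply
    claims_supported: int  # claims backed by a retrieved source
    reply_words: int


def grade(run: Run, max_words: int = 120) -> float:
    """Score a run in [0, 1]: 40% tool use, 40% grounding, 20% concision."""
    tool_score = 1.0 if run.tool_calls == run.expected_tools else 0.0
    # A reply with no claims has nothing unsupported, so it scores full marks here.
    grounding = run.claims_supported / run.claims_total if run.claims_total else 1.0
    # Replies over the limit lose credit in proportion to their length.
    concise = 1.0 if run.reply_words <= max_words else max_words / run.reply_words
    return round(0.4 * tool_score + 0.4 * grounding + 0.2 * concise, 3)
```

Keeping the grader as one small, readable function makes it easier to carry the same grading philosophy from one model to the next.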

Early signals from the field

OpenAI says that since the Responses API and Agents SDK debuted earlier in the year, customers have built end-to-end agents in support, sales, and research. Klarna reports a support agent that handles the majority of tickets. Clay cites a step change in outbound growth from a sales agent. While anecdotes are not guarantees, they show that the pipeline can handle production weight when scoped carefully and tracked with real evals.

Practical caveats and how to manage them

- Hallucination risk does not vanish. Mitigate with retrieval, strict graders that penalize unsupported claims, and a refusal fallback that hands off to a human.

- Tool timing is hard. If an agent calls the wrong tool at the wrong time, cost and confusion spike. Use RFT to reinforce correct sequences, and add per-node rate limits.

- Governance matters. Use Connector Registry and version pins so sensitive data sources cannot drift. Require approvals for version promotions.

- Latency stays front of mind. Trace grading helps find slow steps. Coalesce tools and cache results. Budget time per node.

- Do not skip the first eval dataset. Even a small set teaches the platform how to reward or penalize behavior. Expand it weekly.
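
The latency advice above, budgeting time per node and caching results, can be sketched in a few lines. The node names, budget values, and `fetch_context` stand-in below are hypothetical; in practice the timings would come from your traces.

```python
from functools import lru_cache

# Placeholder per-node time budgets in seconds.
NODE_BUDGET_S = {"classify": 0.5, "fetch_context": 2.0, "reason": 4.0}

# Counts real lookups so the effect of the cache is visible.
CALLS = {"count": 0}


def over_budget(timings: dict) -> list:
    """Return the nodes whose measured time exceeded their budget."""
    return [
        node for node, seconds in timings.items()
        if seconds > NODE_BUDGET_S.get(node, float("inf"))
    ]


@lru_cache(maxsize=256)
def fetch_context(ticket_id: str) -> str:
    """Stand-in for a slow tool call; repeat lookups are served from the cache."""
    CALLS["count"] += 1
    return f"context for {ticket_id}"
```

Nodes without a budget are never flagged, which keeps the check quiet until a budget has been agreed for them.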

Ecosystem ripple effects to watch

- For startups: Customer-facing agents will shift from differentiator to table stakes. The moat moves from model access to datasets, evals, and operational cadence. If you sell to enterprises, offer a contract addendum that spells out your eval gates and rollback plans.

- For enterprises: Expect a consolidation of shadow agent projects into a governed platform. Security teams will prefer tools that surface traces and graders. Procurement will ask for version histories and quality metrics before approving expansion.

- For platforms that compete with OpenAI: Expect fast follower features. Differentiation will come from connectors, governance depth, and cost controls rather than raw model claims, a trend we explore in our piece on the open agent stack.

What to do this week if you are a CTO or product lead

- Pick one high-ROI workflow and commit to the one-week plan above. Put a success rate target, a refusal rate target, and a cost cap in writing.

- Create an eval owner and a versioning owner. It is not enough to have a project manager. You need stewards for quality and change control.

- Start on o4-mini for RFT if you need it. Request GPT-5 RFT beta if your use case depends on complex tool choreography.

- If you rely on internal systems, ask your admin about the Global Admin Console and Connector Registry beta. Plan your governance review now.

- For reporting, build a single dashboard with success rate, latency, cost per task, eval pass rate, and top three failure modes. Share it weekly.
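
The single dashboard described above is a handful of aggregates over run records. As a sketch, assuming each run is logged with the fields shown (the `RunRecord` shape and the `weekly_dashboard` name are hypothetical), the numbers can be computed like this:

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class RunRecord:
    """Hypothetical log entry for one completed agent task."""
    succeeded: bool
    latency_s: float
    cost_usd: float
    eval_passed: bool
    failure_mode: Optional[str] = None  # label assigned during trace review


def weekly_dashboard(runs: list) -> dict:
    """Aggregate the five numbers to share each week."""
    if not runs:
        raise ValueError("no runs recorded")
    n = len(runs)
    failures = Counter(r.failure_mode for r in runs if r.failure_mode)
    return {
        "success_rate": sum(r.succeeded for r in runs) / n,
        "avg_latency_s": sum(r.latency_s for r in runs) / n,
        "cost_per_task_usd": sum(r.cost_usd for r in runs) / n,
        "eval_pass_rate": sum(r.eval_passed for r in runs) / n,
        "top_failure_modes": failures.most_common(3),
    }
```

Averages hide tail latency, so a percentile can be added later; the point of the first version is that everyone reads the same five numbers.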

Where to learn more

If you want a concise overview of the surface area and examples that match the pipeline described here, OpenAI’s platform page for agents is a helpful second stop. It shows how Agent Builder, ChatKit, built-in tools, and Evals line up across the product. Start with the OpenAI API Agents overview and map it to your own stack.

The bottom line

AgentKit turns agents into software you can manage. The shift is not about a spectacular demo. It is about measurable progress that compounds. With a versioned canvas, an embeddable surface, a scoreboard, and a way to teach the model what you value, you can pick one job and make it reliable. Do that each week and you will ship more this quarter than you shipped all year. The tools are ready. The next move is yours.