The New Agent App Stores: Databricks and Microsoft 365

Late 2025 turned data clouds and office suites into the shelves for AI agents. Databricks embeds frontier models in Agent Bricks, while Microsoft 365 lights up an Agent Store with governance and audit controls.

Breaking: the distribution layer for enterprise agents just snapped into place

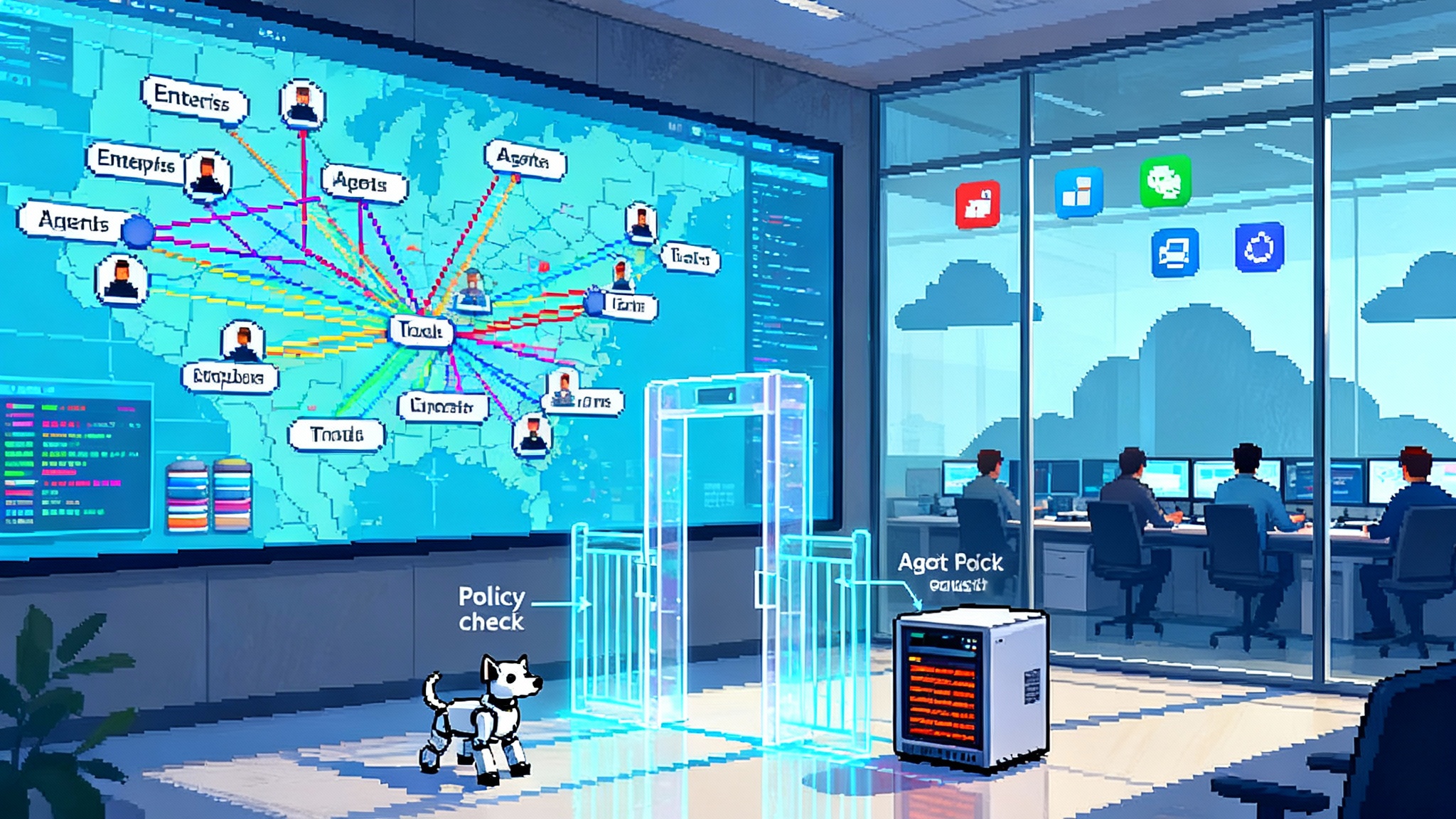

Two moves from late September through October 2025 quietly redrew the map for enterprise AI. First, Databricks and OpenAI struck a multi-year deal that makes OpenAI’s frontier models native inside Databricks’ Agent Bricks, the company’s production framework for building and running agents on governed enterprise data. The partnership, valued at roughly $100 million, positions GPT-5 as a flagship option for more than 20,000 Databricks customers and, more importantly, puts frontier models where the data already lives. That is a change in distribution, not just an addition to the model menu. It shortens the path from dataset to deployed agent by removing middleware hops and procurement friction, and it signals that the data platform itself is becoming an app store for agents. See the coverage from Reuters on September 25, 2025.

Then, in October, Microsoft expanded what it began earlier in 2025 by lighting up the Microsoft 365 Agent Store with first-party agents and administrator controls that address two of the biggest blockers to broad deployment: lifecycle governance and auditability. Admins can now reassign ownership when an agent’s creator leaves or changes roles, and they can export an inventory of agents with useful metadata for compliance reviews and security checks. Microsoft’s intent is plain: turn the office suite into the place where workers discover, pin, and manage agents in the flow of Word, Excel, Outlook, and Teams. Microsoft summarized these governance additions in its October 2025 roundup of updates to Copilot and the Copilot Control System.

If the 2010s were about mobile app stores capturing distribution for software, late 2025 marks the moment when enterprise agent distribution shifts to the two places companies already trust: their data clouds and their productivity suites.

Why this matters: data gravity and workflow gravity beat everything

Enterprise agents do two things that traditional apps do not. They read and reason over data that has permissions and lineage, and they act inside daily tools where people already work. Those two forces determine where agents will be made and where they will be found.

- Data gravity: Databricks is where petabytes of structured and unstructured data already sit with catalogs, lineage, and governance. Bringing frontier models into Agent Bricks means a healthcare payer can build a prior authorization agent without copying protected health information into a separate inference environment. Finance teams can build reconciliation agents that never leave governed tables. Agents inherit controls like data masking and access policies automatically. This is not just convenient. It is the difference between a pilot and something auditors will approve.

- Workflow gravity: Microsoft 365 is where daily decisions live. If the Agent Store sits one click from documents, spreadsheets, mail, and chats, employees can install and use agents without switching context. That turns discovery into habit. It also makes distribution measurable through the same analytics that already track app adoption inside the suite.

For deeper context on cross stack coordination, see how standards are enabling A2A and MCP cross stack teams.

From build then beg to shelf placement by default

Until now, most enterprises rolled out agents the hard way. Teams would prototype with a hosted model, build glue logic to reach enterprise data, patch together identity and secret handling, and then begin the long march through security review, procurement, and desktop distribution. Even when a pilot succeeded, getting it in front of thousands of users was a separate project.

The new pattern flips this:

-

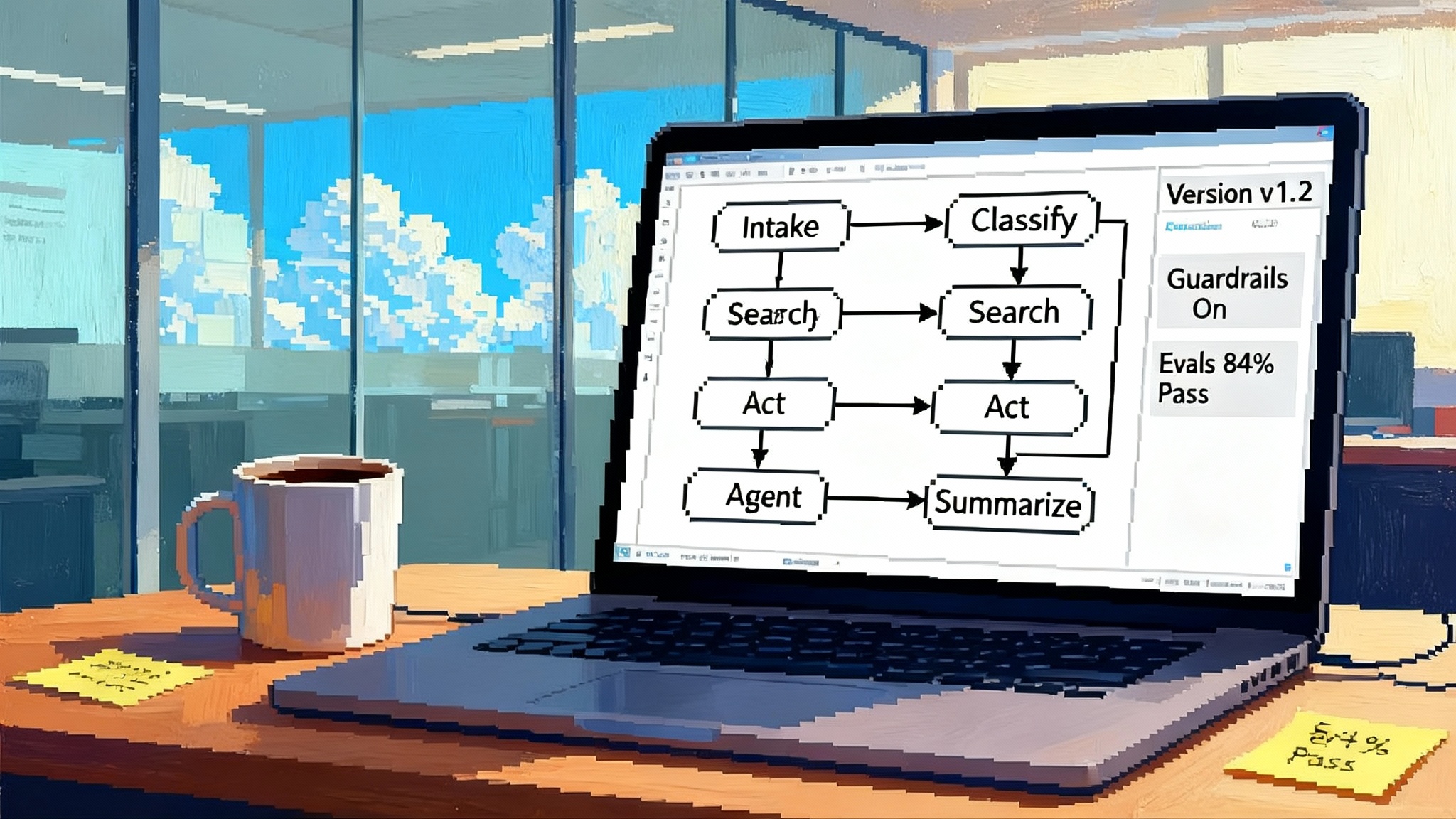

Build in the data platform where the model is first class. With Agent Bricks, you select a frontier model, point to governed data, and use built in evaluation to measure accuracy on task specific benchmarks. You do not negotiate a new model contract or ship data copies around the cloud. See how productionization tightens with OpenAI AgentKit production agents.

-

Distribute inside the suite where work happens. With the 365 Agent Store, an agent appears beside the tools employees already use. Admins can block, approve, or deploy agents at the tenant or group level and can prove who owns what.

This looks mundane on paper. In practice it removes entire steps that used to kill momentum between prototype and production.

Pricing will converge around two patterns

There are many ways to price AI. These two moves will collapse choices into seat uplift in the suite and governed consumption in the data plane.

- Seat uplift in the suite: Microsoft is steadily absorbing more first-party agents into the base Microsoft 365 Copilot experience. The company has also introduced credit capacity packs and broader controls through the Copilot Control System, including the ability to apply prepaid credits to certain agent capabilities. The effect is to make useful agents feel included, while keeping power users and specialized scenarios on a predictable credit budget. Procurement understands seats and credits. Finance teams can allocate them like they allocate storage or meeting licenses.

- Governed consumption in the data plane: With OpenAI models inside Databricks, usage rolls up under familiar Databricks cost centers and rate cards. Teams will meter agent training, evaluation, and inference inside the same workspace budgets they use for queries and notebooks. Expect tighter cost reports that link agent runs to data assets and teams, because both live in the same platform.

What to do: if you run procurement or FinOps, model two curves for 2026. Curve one is a seat-heavy baseline where the majority of knowledge workers have access to general agents with pooled credits. Curve two is a consumption-heavy baseline for data teams and operations functions that run agents against high-volume datasets. Compare the total cost of each curve at your target adoption levels. Then set thresholds where a use case moves from the suite to the data plane for cost reasons.
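One way to make the threshold concrete is a small cost model. The sketch below is illustrative only: every price, the credits-per-run figure, and the fixed monthly overhead are hypothetical placeholders, not Microsoft or Databricks rates. It also simplifies by comparing only the marginal cost of a single use case, on the assumption that seat licenses are paid either way.

```python
from typing import Optional


def suite_cost(runs: int, credits_per_run: float, price_per_credit: float) -> float:
    """Marginal monthly cost of a use case that draws on pooled suite credits."""
    return runs * credits_per_run * price_per_credit


def data_plane_cost(runs: int, fixed_monthly: float, cost_per_run: float) -> float:
    """Monthly cost of the same use case metered in the data plane."""
    return fixed_monthly + runs * cost_per_run


def crossover_runs(
    credits_per_run: float,
    price_per_credit: float,
    fixed_monthly: float,
    cost_per_run: float,
) -> Optional[float]:
    """Monthly run volume above which the data plane becomes cheaper.

    Returns None when the suite is never more expensive per run, in which
    case cost alone gives no reason to move the use case.
    """
    suite_per_run = credits_per_run * price_per_credit
    if suite_per_run <= cost_per_run:
        return None
    return fixed_monthly / (suite_per_run - cost_per_run)


if __name__ == "__main__":
    # Hypothetical inputs; replace with figures from your own contracts.
    params = dict(credits_per_run=2.0, price_per_credit=0.01,
                  fixed_monthly=300.0, cost_per_run=0.005)
    print("crossover (runs/month):", crossover_runs(**params))
    for runs in (5_000, 20_000, 50_000):
        suite = suite_cost(runs, params["credits_per_run"], params["price_per_credit"])
        plane = data_plane_cost(runs, params["fixed_monthly"], params["cost_per_run"])
        print(runs, round(suite, 2), round(plane, 2))
```

A use case that sits well below the crossover volume stays in the suite; one that is forecast to exceed it becomes a candidate for the data plane, subject to the governance checks discussed elsewhere in this piece.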

Compliance and governance: the blockers are finally getting fixed

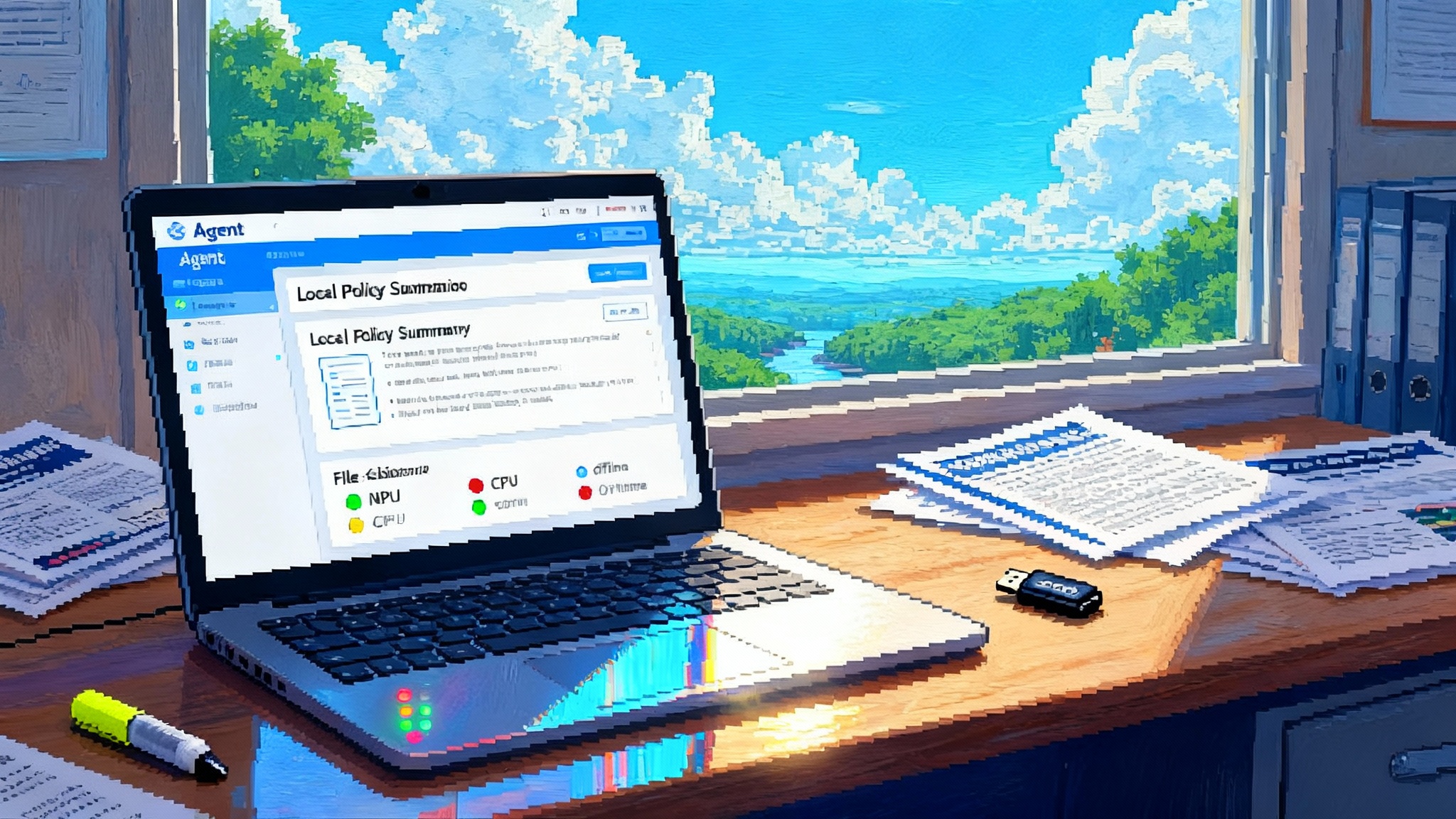

Two boring but necessary features matter a lot: ownership reassignment and inventory export.

- Ownership reassignment fixes orphaned agents. Before October, a surprising number of organizations paused agent pilots because the creator might leave, be reassigned, or simply get too busy. When that happened, there was no clean way to transfer control. Now admins can reassign ownership to a licensed user and remove the original owner’s access. That is agent lifecycle 101 and it finally exists inside the suite.

- Agent inventory export turns discovery into audit. Admins can export a list of agents with metadata like creator, sensitivity level, and capabilities. That lets compliance teams sample agents, check if any use nonapproved regions, and link agents to business owners. Combined with Microsoft’s roadmap for Graph inventory endpoints, the export makes it possible to reconcile what the console shows with what scripts and logs say.

Why this changes adoption: regulated industries tend to block anything they cannot list, own, and prove. A simple test applies in banks, hospitals, and utilities. Can we list every agent, name its owner, show where its data comes from, prove it obeys permissions, and transfer it if someone leaves? After the October updates, the answer is moving toward yes. That unlocks budgets. For the security angle, compare with the patterns in Agent Security Stack.

What to do: appoint a temporary Agent Registrar. This is a role that every large organization should run for the next year. The Registrar maintains the inventory, enforces the owner of record, ensures every agent has a decommission plan, and integrates export feeds with your configuration management database. Treat it like device management for software that can think and act.
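A Registrar's monthly review could start with a script like the one below. This is a sketch under stated assumptions: the CSV layout, the column names (`agent_name`, `owner`, `owner_active`, `region`, `sensitivity`), and the region labels are invented for illustration and will not match the real export, so map them to whatever your tenant actually produces.

```python
import csv
import io
from typing import List, Set, Tuple

# Hypothetical export; the real file will have different columns.
SAMPLE_EXPORT = """agent_name,owner,owner_active,region,sensitivity
Invoice Helper,alice@example.com,true,eu-west,confidential
Meeting Notes,,true,us-east,general
Claims Triage,bob@example.com,false,ap-south,confidential
"""

# Hypothetical allow-list of regions.
APPROVED_REGIONS = {"eu-west", "us-east"}


def audit_inventory(csv_text: str, approved_regions: Set[str]) -> List[Tuple[str, str]]:
    """Return (agent_name, issue) pairs for agents that need Registrar action."""
    findings: List[Tuple[str, str]] = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        name = row["agent_name"]
        if not row["owner"].strip():
            findings.append((name, "no owner of record"))
        elif row["owner_active"].strip().lower() != "true":
            findings.append((name, "owner inactive, reassign ownership"))
        if row["region"].strip() not in approved_regions:
            findings.append((name, "runs in a nonapproved region"))
    return findings


if __name__ == "__main__":
    for agent, issue in audit_inventory(SAMPLE_EXPORT, APPROVED_REGIONS):
        print(f"{agent}: {issue}")
```

The same findings list can be pushed into a ticketing queue or a configuration management database, which keeps the inventory from becoming a report nobody acts on.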

Developer ecosystems: from one-off bots to managed catalogs

Developers will build in two places and publish to two shelves.

- Build in Databricks when the data is the product. Agent Bricks includes built-in evaluation harnesses and tuning workflows that let teams measure accuracy on domain tasks. Because models and data share a platform, developers can iterate quickly and still satisfy governance. Expect more template agents for extraction, classification, and orchestration that use Unity Catalog lineage and access policies by default.

- Build in Copilot Studio when the workflow is the product. Microsoft is turning Copilot Studio into a low-code hub that supports multi-agent orchestration, bring-your-own-model, and integration through the Model Context Protocol. Developers and power users can package agents that operate directly inside Outlook, Teams, Excel, and SharePoint. Publishing to the Agent Store surfaces those agents where end users already are.

The shift from ad hoc bots to cataloged agents means discoverability, versioning, and lifecycle hooks become standard. The store becomes not only a shelf but a set of policies.

What to do: define two submission checklists. For the data plane, require evaluation results that show accuracy on company benchmarks, evidence of permission inheritance, and a rollback plan. For the suite, require a security review for external actions, a clear permission prompt, and a plan for how the agent explains its sources to users in context.
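The two checklists can live as data rather than as a document, so a submission is rejected automatically when evidence is missing. The sketch below is one hypothetical encoding; the item keys are shorthand for the requirements named above, not fields from any Databricks or Microsoft API.

```python
from typing import Dict, List

# Item keys are illustrative shorthand for the requirements in the text.
CHECKLISTS: Dict[str, List[str]] = {
    "data_plane": [
        "evaluation_results",               # accuracy on company benchmarks
        "permission_inheritance_evidence",  # proof the agent inherits data permissions
        "rollback_plan",
    ],
    "suite": [
        "external_action_security_review",
        "permission_prompt",
        "source_explanation_plan",          # how the agent explains its sources in context
    ],
}


def missing_items(venue: str, submitted: Dict[str, bool]) -> List[str]:
    """List the checklist items a submission has not yet satisfied."""
    if venue not in CHECKLISTS:
        raise ValueError(f"unknown venue: {venue!r}")
    return [item for item in CHECKLISTS[venue] if not submitted.get(item, False)]


if __name__ == "__main__":
    submission = {"permission_prompt": True}
    print(missing_items("suite", submission))
```

An empty result means the submission can proceed to review; anything else goes back to the author with the list of gaps.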

The new distribution power dynamics

The mobile era taught us that control of distribution shapes the market. Agent distribution will follow a similar pattern, but with two gatekeepers that are already embedded in enterprise stacks.

- Databricks as the data-native store: By embedding frontier models, Databricks reduces switching costs for model providers and makes model choice an in-platform decision. That means procurement cycles will start to favor whoever already hosts the data and evaluation tooling. If an analytics platform can deliver comparable quality to a standalone inference vendor, the analytics platform wins by default because it saves time and audits better.

- Microsoft 365 as the work-native store: Because the Agent Store sits beside documents and meetings, it will drive category formation. If Microsoft promotes Researcher-type and Analyst-type agents as first-party patterns, partners will build inside those patterns and enterprises will pilot them faster because they look familiar in the client apps.

This is not a vendor lock-in argument. It is a distribution reality. When a platform makes the default path smoother, most teams take it.

How bundling accelerates real deployments in 2026

Bundling is not about free features. It is a supply chain for confidence.

- Reduced decision load: When core agents arrive inside the suite at a known seat price and a known credit envelope, business units stop arguing about whether to try them and start arguing about what to use them for. That single change moves projects from slideware to experiments.

- Fewer integration points: Agents in the data plane that inherit permissions and logging from existing catalogs are easier to approve. Security can reuse pattern reviews. Audit can reuse existing evidence. IT can reuse identity patterns. Less novelty means faster yes.

- Easier measurement: Because agents in both platforms ride existing analytics, leaders can show adoption, time saved, and quality figures in the same dashboards they use for app usage. That makes quarterly reviews less speculative and more operational.

Expect the following milestones in 2026 if these trends hold:

- Every enterprise stands up a tenant-wide agent inventory and begins monthly reviews with security and compliance.

- At least one revenue-adjacent or risk-critical agent moves from pilot to production in the data plane. Examples include invoice exception handling, claims triage, or supply chain anomaly response.

- The suite hosts a standard set of departmental agents that are discoverable in the Agent Store and backed by shared credits. Examples include project summarizers, meeting coordinators, and first-line support triage.

- Partners and internal teams shift from building bespoke bots to shipping agent packages that pass the submission checklists and ride the store channels.

Winners, risks, and the pragmatic playbook

Winners

- Platforms that reduce switching and audit friction will pull ahead. Databricks wins because agents sit on the data under governance. Microsoft wins because agents sit in front of users at work.

- Teams with a clear procurement model win. Groups that standardize on seat plus credits in the suite and metered consumption in the data plane will move faster because they can approve and scale without renegotiation.

Risks

- Default bias is real. Stores can nudge teams toward first-party agents even when a third party is better. Counter this with an internal rule: every agent category must evaluate at least two options against a benchmark before defaulting to first party.

- Hidden spend can creep in. Credits and consumption are easy to overlook when pilots multiply. Solve this with spend guards at the environment level and monthly variance reviews that tie agent cost to business outcomes.

- Shadow agents will appear. The easier it gets to build agents, the more likely someone ships one without review. The Agent Registrar role and the export inventory reduce this, but only if you act on the data.

Pragmatic playbook for the next 90 days

- Pick your default home per use case. Data-heavy and regulated workflows default to the data plane with Agent Bricks. Personal productivity and team collaboration default to the 365 Agent Store. Document that decision rule so teams stop debating the venue.

- Stand up a basic evaluation harness. Choose two or three tasks that matter, like summarizing a claims note or drafting a customer response with consented knowledge sources. Record accuracy, latency, and cost for each candidate agent and model. Rerun it monthly.

- Create a two-tier governance checklist. Tier A for agents that read sensitive data or can trigger external actions. Tier B for read-mostly helpers. Publish both checklists along with examples of pass and fail. This turns governance from mystery into workflow.

- Pre-agree the cost model. Decide how many credits to pool for the suite and what consumption budgets to set in data workspaces. Communicate how teams request increases and what evidence is required.

- Pilot one cross-functional agent. Choose a process that spans at least two departments, for example onboarding a new vendor. Measure end-to-end time and error rates before and after. Use the result to justify the next wave.
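The evaluation harness in the playbook does not need to be elaborate to be useful. The sketch below is a minimal version, not the harness built into Agent Bricks. It makes several assumptions: each candidate agent is a plain Python callable, scoring is exact match on a label (which suits triage or classification tasks but not free-form summaries), and cost is a flat hypothetical price per call. The two stub agents and the test cases are invented for illustration.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class EvalResult:
    candidate: str
    accuracy: float       # fraction of cases answered correctly
    avg_latency_s: float  # mean wall-clock seconds per call
    total_cost: float     # flat per-call price times number of calls


def evaluate(
    name: str,
    agent: Callable[[str], str],
    cases: List[Tuple[str, str]],
    cost_per_call: float,
) -> EvalResult:
    """Run one candidate over (prompt, expected_label) cases and record the basics."""
    if not cases:
        raise ValueError("need at least one test case")
    correct = 0
    elapsed = 0.0
    for prompt, expected in cases:
        start = time.perf_counter()
        answer = agent(prompt)
        elapsed += time.perf_counter() - start
        if answer.strip().lower() == expected.strip().lower():
            correct += 1
    n = len(cases)
    return EvalResult(name, correct / n, elapsed / n, cost_per_call * n)


# Hypothetical stand-ins for real agents.
def keyword_agent(prompt: str) -> str:
    text = prompt.lower()
    return "urgent" if "pain" in text or "bleeding" in text else "routine"


def always_routine(prompt: str) -> str:
    return "routine"


CASES = [
    ("Patient reports chest pain", "urgent"),
    ("Request for address change", "routine"),
    ("Severe bleeding after surgery", "urgent"),
]

if __name__ == "__main__":
    for label, fn in (("keyword", keyword_agent), ("baseline", always_routine)):
        print(evaluate(label, fn, CASES, cost_per_call=0.01))
```

Running at least two candidates through the same cases each month also gives you the evidence the default-bias rule asks for before a first-party agent becomes the standard.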

The bottom line

App stores won the last decade because they controlled the shelf. Databricks and Microsoft are now building the shelves for enterprise agents. One sits next to your governed data. The other sits next to your work. Together they change not only where agents run, but how they are bought, audited, and scaled. If you are planning for 2026, treat these platforms as the distribution layer for agents by default, then make explicit exceptions when a use case demands something else. The store is the story, and the shelf you choose will determine which agents customers actually use and which ones stay in the demo deck.