Ray-Ban Display and Neural Band: A Real UI for AI Agents

Meta’s Ray-Ban Display smart glasses and Neural Band promise a practical UI for AI agents. With a tiny lens display and subtle wrist gestures, navigation, captions, and on-the-spot guidance shift from chat to action.

Meta finally shows a wearable UI for agents

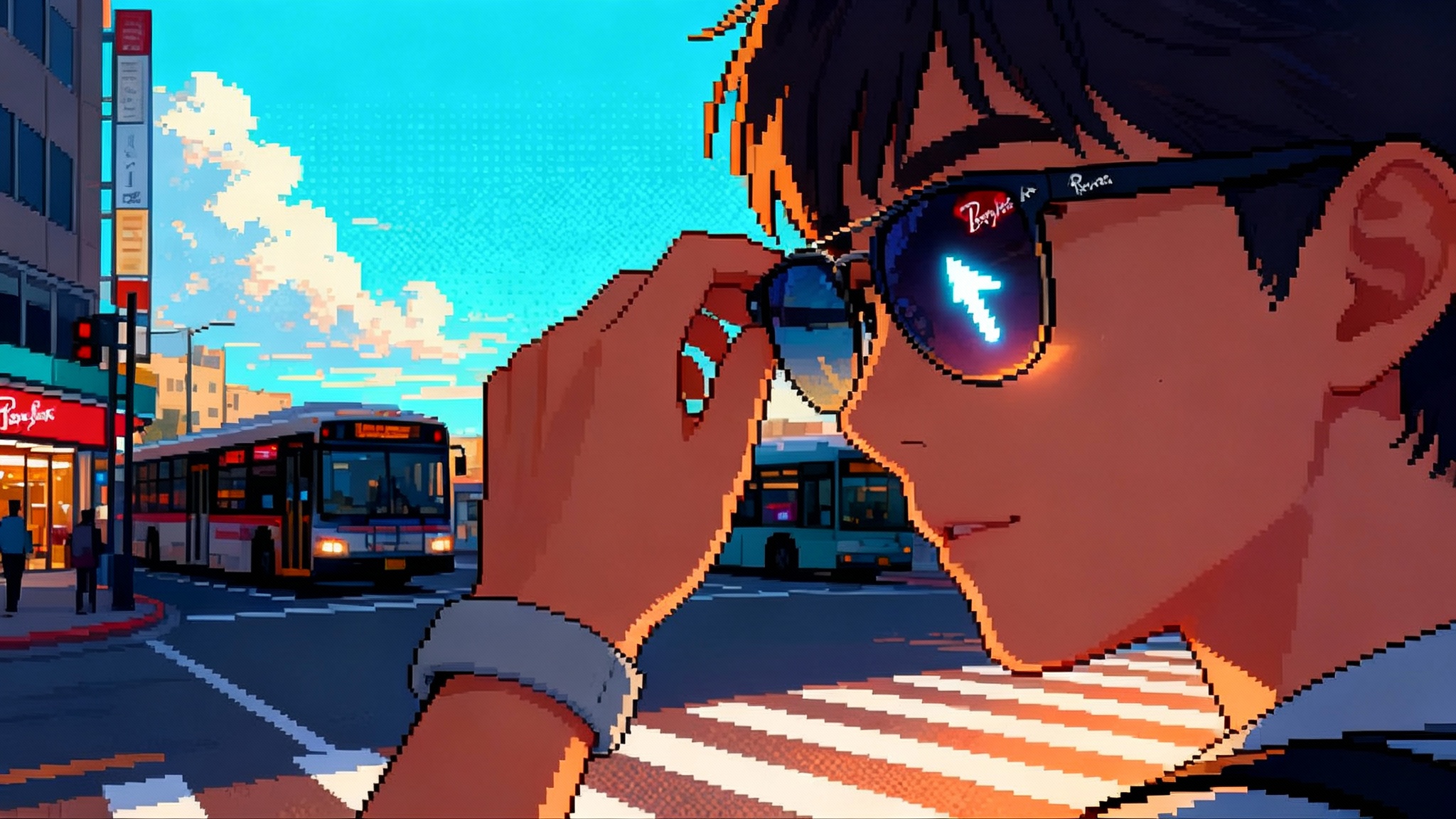

After years of camera-first glasses and voice assistants that mostly talk back from a pocket, Meta has put a real screen on your face and a quiet controller on your wrist. At Meta Connect 2025, the company unveiled Ray-Ban Display smart glasses and an accompanying Neural Band, a package designed to let an AI agent see what you see, whisper what you need, and accept subtle commands without pulling out a phone. The launch was framed as a step toward everyday, credible augmented computing rather than a lab demo or a VR detour, with a US price of $799 and sales starting September 30, 2025. Meta made that shift explicit with a built-in display for heads-up info and a wristband for microgestures that keep your hands free while you move through the world, as detailed in an AP report on the launch.

If the last two years were about seeing whether people would wear AI camera glasses at all, this year is about whether a lightweight, glanceable UI can drive useful actions. With Ray-Ban Display, that UI finally exists in a familiar frame that does not scream prototype. The Neural Band adds the missing control surface, so your agent is not limited to wake words and broad commands. Together they form a proper I/O loop: vision in, context in, brief text or icons out, tiny gestures for confirmation or correction, and the agent takes it from there.

The unlock: eyes up, hands free, just in time

The appeal is not that a notification floats in your periphery. The appeal is the combination of hands-free capture, glanceable output, and low-friction input that shrinks task overhead. That trifecta switches the agent from reactive to proactive.

- Navigation becomes glance-guided rather than phone-bound. The glasses can prompt a micro-turn at the right corner, show your platform number as you enter a station, and confirm you are boarding the correct bus. You never break stride to check your phone.

- Live captions keep you on pace in noisy places. The agent can highlight names and actions, and with a nudge from your wrist it can save a snippet or set a reminder tied to the moment.

- Just-in-time guidance reduces friction. Think of a two-step safety checklist that appears when you enter a workshop, or quick cues when you are cooking and both hands are messy. You gesture to advance, not to type.

- On-the-go translation gets practical because you can see and hear it without juggling a device. You glance to confirm a phrase or a menu item, and you wrist-tap to save new vocabulary to your personal deck.

These are small wins, but they stack into a daily habit loop. The agent does not need 3D holograms or floating dragons. It needs to quietly shorten real tasks by seconds, many times a day.

What the Neural Band really adds

The Neural Band is not magic mind reading. It is a surface electromyography (EMG) wristband that picks up the electrical activity of the muscles at your wrist as motor signals arrive from your brain, which lets the system detect microgestures with less motion and more privacy than waving at your face. Pair that with a tiny heads-up display and you get confirmation clicks, scrub-throughs, and lightweight text input when voice is awkward. It also solves the social friction of voice-only assistants in public spaces, because a quick finger squeeze or thumb press can steer the agent without performing for bystanders. Meta is positioning the glasses and band as a set, with the wristband serving as the primary way to navigate apps, open notifications, and confirm actions on the right-lens display, according to Reuters coverage of the control pairing.

Text entry will still be the hardest problem. Dictation works until it does not, and midair writing is slow. The practical play is templated responses, short confirms, and intent-first commands that let the agent fill in details. Expect a grammar of gestures to emerge, like a one-second press to confirm, a pinch-and-hold to cancel, and a swipe to cycle options.
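To make the idea of a gesture grammar concrete, here is a minimal sketch of how raw wristband events might map to agent intents. Everything in it is an assumption for illustration: the gesture labels, the hold thresholds, and the confidence cutoff are hypothetical and do not describe Meta's actual API or tuning.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Intent(Enum):
    CONFIRM = auto()
    CANCEL = auto()
    NEXT_OPTION = auto()
    IGNORE = auto()


@dataclass(frozen=True)
class GestureEvent:
    kind: str          # hypothetical labels: "press", "pinch", "swipe"
    duration_s: float  # how long the gesture was held, in seconds
    confidence: float  # classifier confidence in [0, 1]


# Assumed thresholds for illustration, not measured values.
MIN_CONFIDENCE = 0.8
PRESS_HOLD_S = 1.0
PINCH_HOLD_S = 0.5


def interpret(event: GestureEvent) -> Intent:
    """Map a raw gesture event to an intent, dropping weak detections."""
    if event.confidence < MIN_CONFIDENCE:
        return Intent.IGNORE
    if event.kind == "press" and event.duration_s >= PRESS_HOLD_S:
        return Intent.CONFIRM
    if event.kind == "pinch" and event.duration_s >= PINCH_HOLD_S:
        return Intent.CANCEL
    if event.kind == "swipe":
        return Intent.NEXT_OPTION
    return Intent.IGNORE
```

Dropping low-confidence events trades a few missed gestures for fewer accidental confirms, which is usually the safer default when a confirm can trigger a real action.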

UX gains vs the physics of battery and latency

Glanceable UIs, ambient capture, and vision models all consume power. Radios and displays do too. Battery will cap sessions. Think in hours per session with top-ups, not dawn-to-dusk endurance. That pushes designers toward microinteractions that respect energy budgets.

- Bias to read over render. A short caption or arrow is cheaper than a complex overlay. Visuals should be sparse and high contrast so the brain does most of the work.

- Cache the next step. If the agent expects you to take a left turn in 20 meters, it should preload that cue locally so it can survive a dead zone or a brief radio hiccup.

- Defer heavy tasks. If you ask for a full trip plan with three stops and two bookings, the glasses should hand off the heavy lift to your phone or the cloud, then deliver a single-screen summary with confirm buttons.

- Offer a low-power mode. Captions at a lower refresh rate, audio-only guidance while the display naps, and opportunistic syncing on Wi-Fi all stretch run time.
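Two of these ideas, caching the next step and falling back to a low-power mode, can be sketched in a few lines. This is an illustrative sketch only: `Cue`, `CueCache`, `output_channel`, and the 20 percent battery threshold are hypothetical names and values, not part of any real SDK.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Cue:
    text: str                  # short, high-contrast text such as "Left in 20 m"
    trigger_distance_m: float  # show the cue once the wearer is this close


class CueCache:
    """Holds the upcoming cue locally so it survives a dropped connection."""

    def __init__(self) -> None:
        self._next: Optional[Cue] = None

    def preload(self, cue: Cue) -> None:
        self._next = cue

    def cue_for(self, distance_to_turn_m: float) -> Optional[Cue]:
        """Return the cached cue once the wearer is within its trigger range."""
        if self._next is not None and distance_to_turn_m <= self._next.trigger_distance_m:
            return self._next
        return None


def output_channel(battery_pct: float, low_power_below: float = 20.0) -> str:
    """Use audio-only guidance when the battery is low, the display otherwise."""
    return "audio" if battery_pct < low_power_below else "display"
```

Because the cue is already on the device, showing it needs no network call at the moment it matters.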

Latency is the other hard limit. Real-time captions and turn-by-turn cues need sub-200 ms end to end to feel natural. That points to a hybrid stack where on-device models do first-pass perception and alignment, and the cloud handles high-entropy reasoning. If the round trip to the cloud exceeds a threshold, the agent should degrade gracefully with shorter answers, fewer tokens, and a bias for deterministic heuristics when confidence is adequate. For how enterprises are assembling this stack, see how NIM blueprints make agents real.
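The graceful-degradation rule can be expressed as a small planning function. The sketch below assumes a 200 ms budget from the text; the on-device cost, the confidence cutoff, and the token caps are made-up numbers chosen only to show the shape of the decision.

```python
from dataclasses import dataclass

LATENCY_BUDGET_MS = 200.0  # from the text: sub-200 ms end to end
ON_DEVICE_COST_MS = 40.0   # assumed cost of first-pass perception on the glasses
MIN_LOCAL_CONFIDENCE = 0.7 # assumed cutoff for trusting a local heuristic


@dataclass(frozen=True)
class ResponsePlan:
    use_cloud: bool
    max_tokens: int  # 0 means no generated text, only a deterministic local cue


def plan_response(cloud_rtt_ms: float, local_confidence: float) -> ResponsePlan:
    """Pick a response strategy that tries to fit inside the latency budget."""
    remaining_ms = LATENCY_BUDGET_MS - ON_DEVICE_COST_MS
    if cloud_rtt_ms <= remaining_ms:
        return ResponsePlan(use_cloud=True, max_tokens=64)
    if local_confidence >= MIN_LOCAL_CONFIDENCE:
        # Cloud is too slow, but the local heuristic is trustworthy enough.
        return ResponsePlan(use_cloud=False, max_tokens=0)
    # Cloud is slow and local confidence is low: still ask, but keep it short.
    return ResponsePlan(use_cloud=True, max_tokens=16)
```

In practice `cloud_rtt_ms` would come from a rolling measurement of recent requests rather than a single sample.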

On-device versus cloud: the new division of labor

A practical split looks like this:

- On the glasses: sensor fusion, wake word detection, simple slot filling for commands, short sequence models for captions and hotword actions, local retrieval for recent context, and safety filters for capture. These must run predictably on a tight power budget.

- On the phone: heavier models that still need low latency, like translation packs, summary pipelines for short videos, and more robust wake word fallback. The phone also helps with BLE and Wi-Fi handoffs and acts as a caching layer for the last few hours of context.

- In the cloud: large models, media understanding, long-horizon planning, web search, and secure payments. This is where the agent composes and schedules complex actions, then sends back minimal UI states rather than long text.

The glasses should choose the smallest model that solves the request well enough, then escalate if the user asks for more. This is not just about cost. It is how you keep the device responsive. A two-word “what next” in a workshop should never wait for a server round trip when a local state machine can show the next checklist item immediately. The broader shift toward cloud-scale agents is already reshaping software, as argued in our analysis of the cloud-scale agent shift.
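The "smallest tier first, escalate on request" rule can be sketched as a lookup plus one step up. The task labels and their tier assignments below are hypothetical, loosely following the split described above; a real router would also weigh battery, connectivity, and model confidence.

```python
from enum import IntEnum


class Tier(IntEnum):
    GLASSES = 0
    PHONE = 1
    CLOUD = 2


# Hypothetical task labels mapped to the smallest tier assumed able to handle them.
MIN_TIER = {
    "next_checklist_step": Tier.GLASSES,
    "caption": Tier.GLASSES,
    "translate": Tier.PHONE,
    "summarize_clip": Tier.PHONE,
    "trip_plan": Tier.CLOUD,
    "payment": Tier.CLOUD,
}


def route(task: str, escalate: bool = False) -> Tier:
    """Return the smallest capable tier, stepping up one level on request."""
    tier = MIN_TIER.get(task, Tier.CLOUD)  # unknown tasks default to the cloud
    if escalate and tier < Tier.CLOUD:
        tier = Tier(tier + 1)
    return tier
```

For example, `route("next_checklist_step")` stays on the glasses, while `route("translate", escalate=True)` moves from the phone to the cloud when the wearer asks for more detail.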

Privacy optics in public spaces

Smart glasses still carry baggage. Cameras make people nervous, and microphones even more so. Getting this right will be as much about social design as technical safeguards.

- Clear recording signals are essential. Visible indicators and a tactile or audible cue when capture starts prevent accidental or covert recording. The agent should also make that event easy to review and delete within seconds.

- Context-aware limits help. In sensitive places like restrooms, classrooms, or theaters, a default that disables capture and shows a visible disabled state removes ambiguity. For enterprise settings, admins should be able to set zones and rules.

- Privacy by default matters. Ephemeral processing for captions and translation, on-device transcription that needs an explicit save gesture, and tight redaction when content leaves the device all reduce risk.

- Consent as a feature. If the agent wants to identify people or read name badges at a conference, it should require explicit opt-in for both wearer and subject, and it should respect badges or stickers that signal do-not-capture.
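Two of these defaults, zone-based capture limits and captions that persist only after an explicit save gesture, are simple to sketch. The zone labels and class names here are hypothetical; how a device would actually detect a zone is outside the scope of this example.

```python
# Hypothetical zone labels; a real system might get these from admins or venue signals.
NO_CAPTURE_ZONES = {"restroom", "classroom", "theater"}


def capture_allowed(zone: str) -> bool:
    """Capture is disabled by default in sensitive zones."""
    return zone not in NO_CAPTURE_ZONES


class CaptionBuffer:
    """Keeps captions in memory only; nothing persists without a save gesture."""

    def __init__(self) -> None:
        self._pending: list[str] = []
        self.saved: list[str] = []

    def add(self, line: str) -> None:
        self._pending.append(line)

    def on_save_gesture(self) -> None:
        """Persist what is currently pending, then clear the buffer."""
        self.saved.extend(self._pending)
        self._pending.clear()

    def on_session_end(self) -> None:
        """Discard any captions the wearer did not explicitly save."""
        self._pending.clear()
```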

Culturally, the Neural Band may help by shifting controls to the wrist. If people see fewer face or head gestures, the device will feel less performative. Over time, social norms will firm up, as they did with AirPods and smartwatch glances.

A platform bet: from chats to actions

Most AI business models today monetize tokens. That makes sense for text apps, but it underprices real-world outcomes. Glasses flip the incentive. When your agent can see the shelf you are staring at and can tap a payment method you actually use, success is an action, not a paragraph.

Here is what a glasses-first agent economy could look like:

- Per-action fees. A small platform cut for a booked ride, a paid transit fare, a coffee ordered for pickup, or a tool rented from a maker space. The UI may be a two-second confirm on the lens, but the value is the completed transaction.

- Local commerce rails. Inventory from nearby stores becomes a first-class source. The agent can see the part you need, check local stock, and suggest a pickup path that fits your route home.

- Contextual affiliate. Instead of generic links, attribution flows from sensor-confirmed actions. If the agent uses your instructions to select a product in a physical aisle and you confirm, the referral can be fairly credited.

- Service bundles. Fitness coaches, language tutors, and travel concierges can build micro-flows that run continuously on the glasses. You pay a monthly fee for a real-time layer that nudges you at the right moments.

For developers, this means building for intent and completion rather than conversation length. The best glasses apps will feel like tiny assistants that close loops, not chatbots that explain things. Standards will help these loops interoperate, which is why the USB-C standard for agents matters.

What builders should ship first

- Navigation-plus. Not just arrows, but transit awareness, curb details, and last-50-feet cues, all with offline fallbacks and short confirms.

- Live captions with memory. Captions that can tag names, action items, and follow-ups, all saved only when you gesture to keep them.

- Micro-coaching. Cooking, lifting, photography, and instrument practice are perfect for a glance-and-gesture loop. Start with three-step routines and build from there.

- Visual answer keys. Point at a machine, get the next step. Point at a plant, get the likely disease and the first fix. Keep it tight and defer the deep dive to the phone if requested.

- Safety helpers. PPE checks at job sites, ladder angles, and hazard reminders. These are high value because seconds matter and hands are busy.

Each of these should respect the battery and latency budget. That means short bursts of display time, audio that fills gaps when the display sleeps, and local state machines that keep things moving when the network dips.
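A local state machine for a micro-coaching or safety routine can be very small. The sketch below is one assumed shape for it: the `Checklist` class and its method names are illustrative, and the confirm gesture is represented simply as a call to `advance()`.

```python
class Checklist:
    """A local, network-free state machine for step-by-step guidance."""

    def __init__(self, steps: list[str]) -> None:
        if not steps:
            raise ValueError("a checklist needs at least one step")
        self._steps = list(steps)
        self._index = 0

    @property
    def current(self) -> str:
        return self._steps[self._index]

    @property
    def on_last_step(self) -> bool:
        return self._index == len(self._steps) - 1

    def advance(self) -> str:
        """Move to the next step on a confirm gesture; stay put at the end."""
        if not self.on_last_step:
            self._index += 1
        return self.current
```

Usage might look like `Checklist(["Goggles on", "Clamp the piece", "Start the saw"])`, with each confirm gesture calling `advance()` and the lens showing `current`. No step depends on the network, so a dead zone never stalls the routine.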

Friction and failure modes to watch

- Heat and comfort. Packing a display, radios, and cameras into a thin frame is a thermal puzzle. If the glasses get warm on your nose bridge, usage drops fast.

- Reliability of microgestures. False positives are annoying; false negatives kill trust. The model must calibrate quickly and adapt to different skin tones, sweat, and motion.

- Software seams. Handoffs between glasses, phone, and cloud must be invisible. If the agent gets stuck waiting for a permission dialog on your phone, the magic evaporates.

- App ecosystems. For the agent to complete actions, it needs deep hooks into messages, payments, calendars, and maps. Platform lock-ins will slow that down. Developers should aim for robust fallbacks and graceful degradation when APIs are off limits.

- Social pushback. Some venues will ban cameras, and some people will object to captions in live conversations. The defaults will matter. Clear signals and opt-ins reduce friction.

How success will be measured this year

Hardware sales are a vanity metric. The leading indicators to watch are:

- Daily sessions per wearer and median session length

- P50 and P95 time to confirm an action after an agent suggestion

- Completion rate of navigation and pickup flows without a phone unlock

- Fraction of tasks handled fully on-device

- Battery drain per hour in mixed use and number of case top-ups per day

- Developer attach rate for Neural Band gestures in third-party apps

If these numbers move the right way, a new pattern of personal computing will be forming in plain sight.
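Two of these indicators are straightforward to compute from logged events. The sketch below uses Python's standard `statistics.quantiles`; the function names and the assumption that confirm times are logged in seconds are illustrative.

```python
from statistics import quantiles


def confirm_latency_percentiles(samples_s: list[float]) -> tuple[float, float]:
    """Return (P50, P95) of time-to-confirm samples, in seconds."""
    if len(samples_s) < 2:
        raise ValueError("need at least two samples")
    # n=100 yields 99 cut points; index 49 is P50 and index 94 is P95.
    cuts = quantiles(samples_s, n=100, method="inclusive")
    return cuts[49], cuts[94]


def on_device_fraction(handled_on_device: int, total_tasks: int) -> float:
    """Fraction of tasks completed without leaving the glasses."""
    if total_tasks == 0:
        return 0.0
    return handled_on_device / total_tasks
```

Tracking P95 alongside P50 matters here because the slow tail, the confirm that takes five seconds instead of one, is what wearers remember.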

The bigger picture

We are not leaping to fully spatial, full-color AR that replaces your phone tomorrow. We are getting a humble, well-scoped UI for agents that you can actually wear. A tiny display that can show a direction, a line of conversation, or a confirm button is enough to make AI useful when you are moving through the world. A wristband that lets you steer the agent without speaking is enough to make it polite in public. The rest is iteration.

Give this combo a year of developer attention and social acclimation and the shift from chats to actions will become obvious. When you can glance and confirm your next step in under two seconds, and when those confirms turn into real outcomes, the agent stops being a novelty. It becomes the operating system for the parts of life that never fit on a phone.

And that is the real news from Connect. The UI exists now. The question is no longer if people will wear AI. It is whether the industry can make those glances count.