AWS’s Quiet Play to Own the Enterprise Agent Runtime

Amazon is quietly assembling an enterprise agent platform under Bedrock that spans runtime, memory, identity, tools, observability, and a new agentic IDE. Here is why the competitive front is shifting from model choice to runtime control, and how to evaluate AWS against Azure and Google over the next year.

The platform play behind July’s reveal

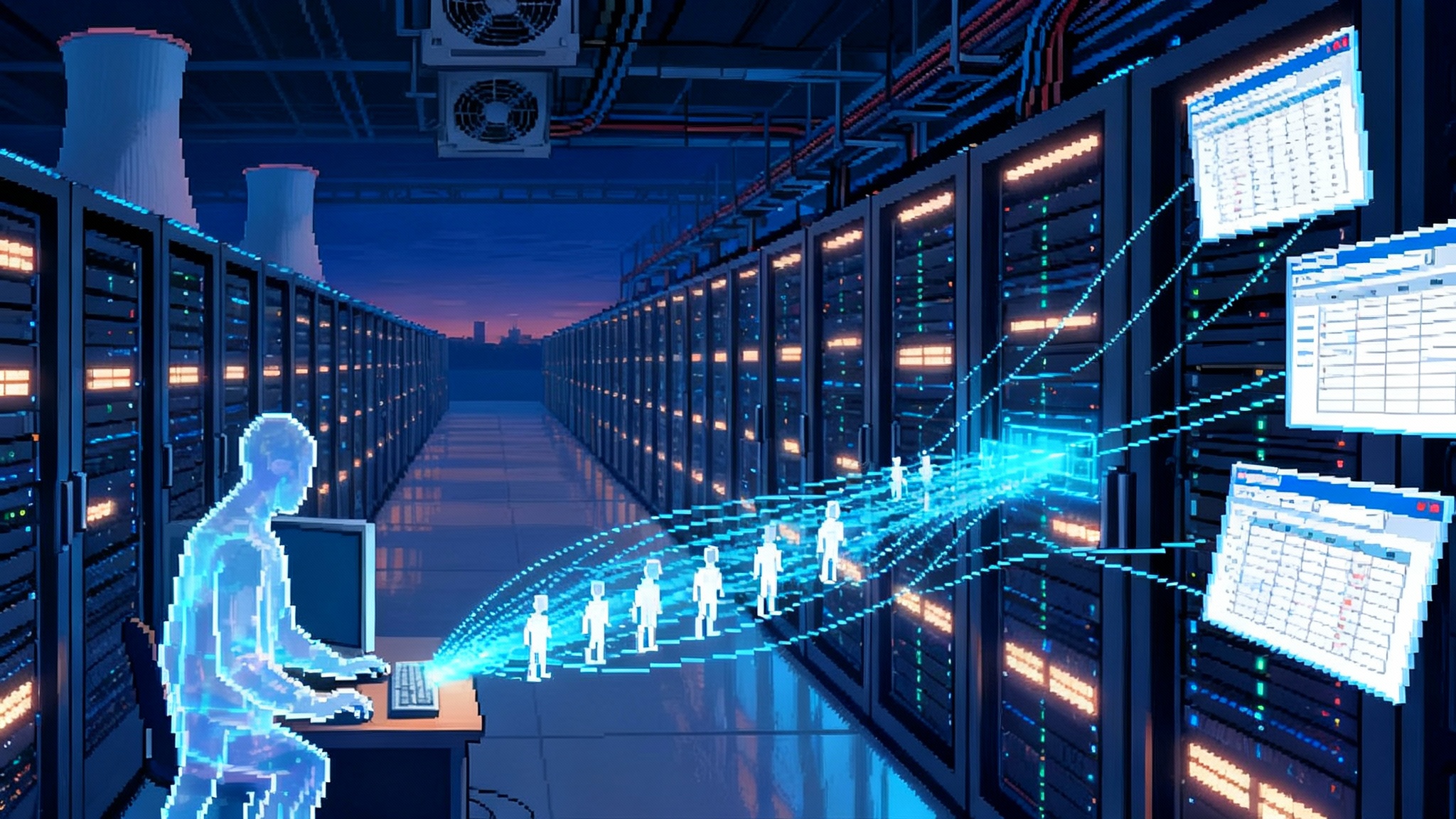

On July 16, 2025, Amazon unveiled Amazon Bedrock AgentCore in public preview, a set of composable services meant to carry AI agents from prototype to production at enterprise scale. The messaging was pragmatic. Instead of another demo agent, AWS shipped the rails: secure runtime, long‑running sessions, first‑class memory, tool gateways, a browser and code interpreter, identity integration, and deep observability. The promise was model agnosticism and production hygiene out of the box, and it landed with customers stuck in pilot purgatory. See the AgentCore preview announcement.

In the weeks that followed, Amazon rounded out the story with developer tooling on the edge where agents meet code. Strands, an open SDK AWS teams have used internally, gives a clean agent runtime abstraction with support for MCP and agent‑to‑agent patterns. Kiro, a new agentic IDE, gives developers spec‑driven workflows that feel like disciplined software engineering rather than vibe coding. Mid‑September leadership moves around AgentCore and Kiro underscored that this is now a top‑line AWS bet, not a side project. For context on open protocols, see how MCP crosses the chasm standard.

The thread through all of it is simple. AWS is building a cloud‑native agent platform layer. If that layer becomes the default way enterprises deploy and govern agents, the center of gravity shifts from which model you pick to who runs your agent runtime.

What AgentCore actually is

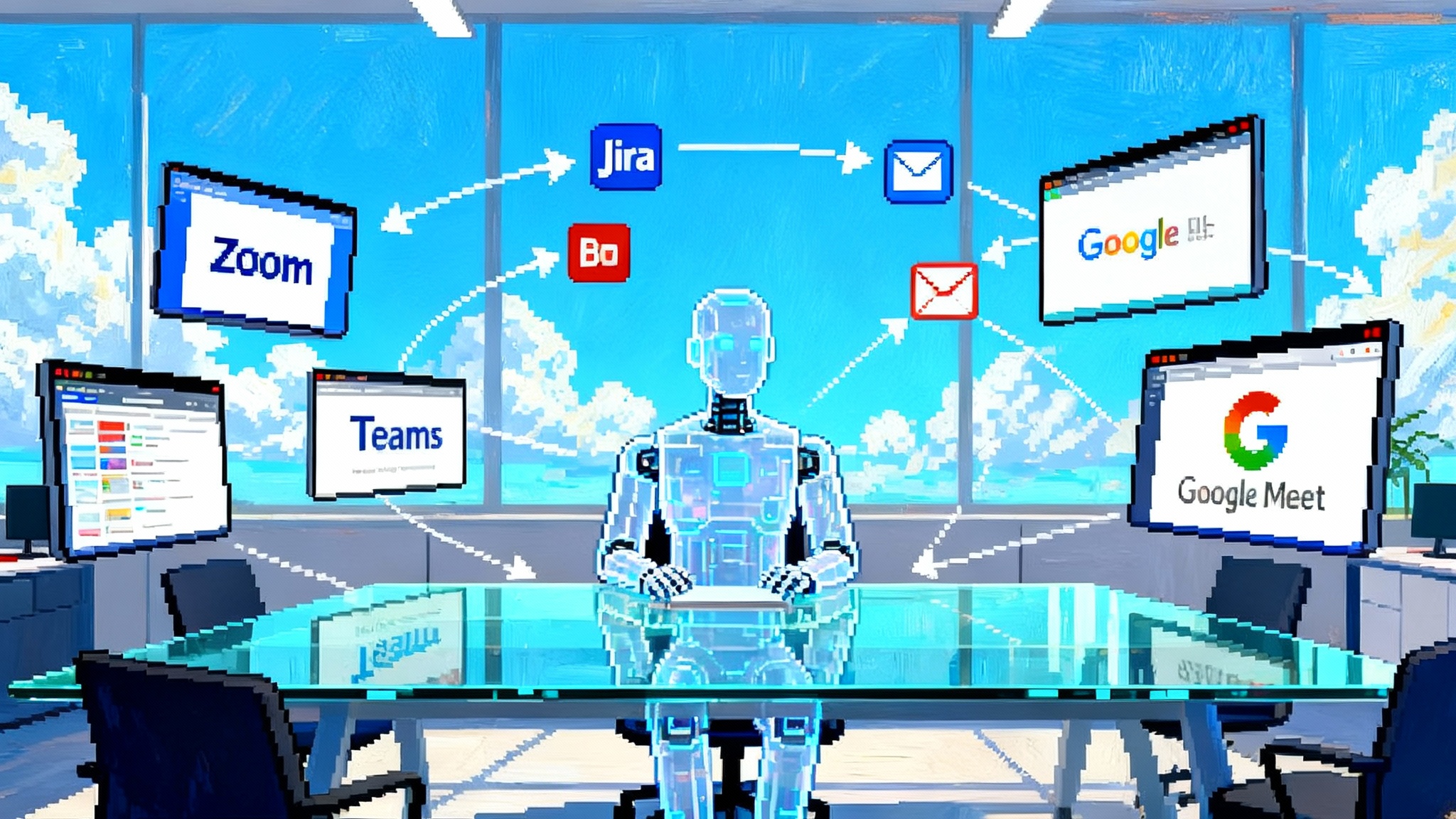

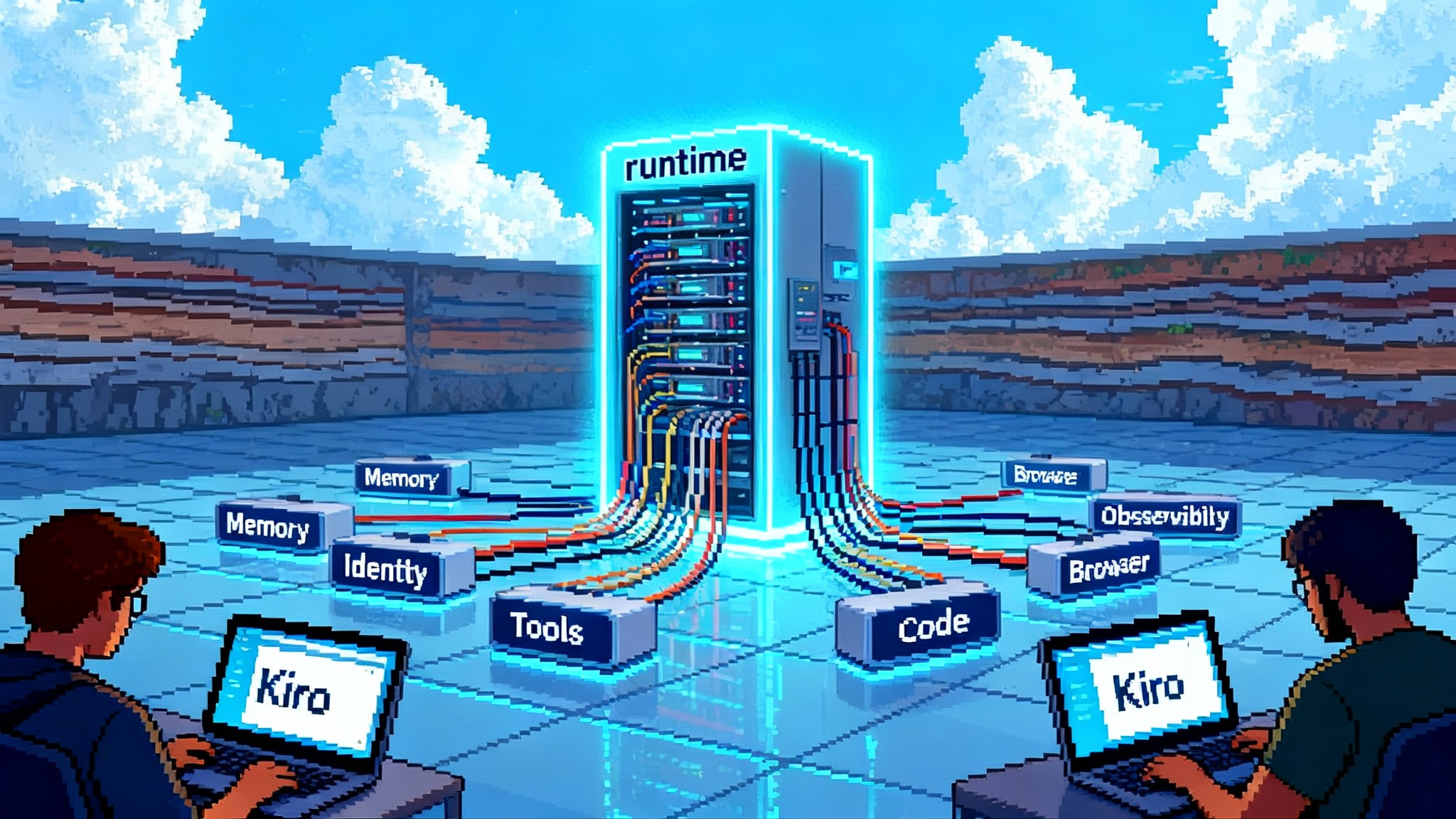

AgentCore is not a single service. It is a clustered set of primitives that combine into a managed agent runtime:

- Runtime. A managed execution environment for interactive and asynchronous agent workflows with session isolation and long‑running jobs. Think of it as an application server tuned for agents.

- Memory. Short‑ and long‑term memory services that persist context across sessions without standing up vector databases and state machines by hand.

- Identity. Native tie‑ins to existing identity providers so agents can act on behalf of users and services with the right permissions. That matters when an agent needs to fetch records from a CRM or kick off a pipeline.

- Tooling surface. A Gateway that turns APIs, Lambdas, and services into agent‑compatible tools, plus a secure Browser runtime and a sandboxed Code Interpreter for agentic tool use.

- Observability. Built‑in traces, metrics, and dashboards so teams can debug tool calls, cost spikes, and safety events. Agents will not reach production without this.

Each piece is modular. You can take just the browser or the code sandbox. You can bring your own model, inside or outside Bedrock. The aim is to eliminate the hidden scaffolding that has kept agent projects sitting in notebooks and sandboxes.

Strands and Kiro bring the developer edge

Enter Strands and Kiro.

- Strands Agents SDK. A model‑first, open approach that makes MCP and agent‑to‑agent patterns feel routine rather than bespoke. The design lets you swap Anthropic, Meta, or open models under the hood while keeping the higher‑level agent loop intact. Strands can run locally for quick iteration, then deploy to AgentCore, Lambda, Fargate, or EC2 for scale. That gives teams a path from a developer laptop to a governed runtime without a rewrite.

- Kiro. It looks like an editor, but its worldview is spec‑driven development for AI‑assisted software. You draft requirements, Kiro turns those into design artifacts and ordered tasks, and the agent helps implement code while tying changes back to the spec. Hooks trigger actions like running tests or generating docs. Under the hood, Kiro leans on agentic workflows and the same tool protocols that Strands and AgentCore speak. The result is less prompt soup and more traceable engineering.

Together, Strands and Kiro form the edge where developers live, while AgentCore is the substrate where teams operate and govern. That is the stitching Amazon is doing. For how runtime choices are consolidating elsewhere, see Chrome makes Gemini the default runtime and how ChatGPT’s agent goes cloud scale.

Why runtime control beats model choice

For the last two years the conversation has been dominated by models: which one is smartest, fastest, cheapest. Enterprises are discovering that the bottleneck is rarely the model. It is everything around it.

- Security and identity. Least‑privilege access is not a prompt pattern. It is a runtime feature tied to your IdP, secrets, and policy.

- Durable execution. Long jobs, retries, backoff, idempotency, and side effects require an orchestrator with state and isolation guarantees.

- Tool governance. Tool discovery, permissioning, and auditing need a managed layer, not a library.

- Observability and cost. You cannot control blast radius without tracing tool calls, model invocations, and data movements with consistent telemetry.

When these are platform features, the model becomes a pluggable component rather than the surface you program directly. That unlocks multi‑model strategies without multiplying operational risk. It also moves the switching cost from models to runtimes, which is exactly where cloud providers have an advantage.
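To make the durable execution point concrete, here is a minimal sketch of what a runtime has to do around every side-effecting step: retry transient failures with exponential backoff, and refuse to repeat work it has already completed. Everything here is hypothetical (`IdempotentExecutor`, `TransientError`, the in-memory result store); it is not the AgentCore or Strands API, and a real runtime would persist results durably rather than in a dict.

```python
import time
from typing import Callable, Dict


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, throttling)."""


class IdempotentExecutor:
    """Runs a side-effecting step at most once per idempotency key."""

    def __init__(self, max_attempts: int = 4, base_delay: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        if max_attempts < 1:
            raise ValueError("max_attempts must be at least 1")
        self.max_attempts = max_attempts
        self.base_delay = base_delay
        self.sleep = sleep  # injectable so tests do not actually wait
        self._results: Dict[str, object] = {}  # stand-in for a durable store

    def run(self, key: str, step: Callable[[], object]) -> object:
        # A repeated key returns the recorded result instead of redoing the side effect.
        if key in self._results:
            return self._results[key]
        for attempt in range(self.max_attempts):
            try:
                result = step()
            except TransientError:
                if attempt == self.max_attempts - 1:
                    raise  # out of attempts: surface the failure to the caller
                # Exponential backoff: base, 2x base, 4x base, ...
                self.sleep(self.base_delay * (2 ** attempt))
            else:
                self._results[key] = result
                return result
        raise RuntimeError("retry loop exited without a result")
```

The point of the sketch is the division of labor: the agent code supplies `step`, while retry policy and deduplication live in the layer underneath it. That is the kind of behavior you want a managed runtime to own rather than each team reimplementing it.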

How the pieces fit together

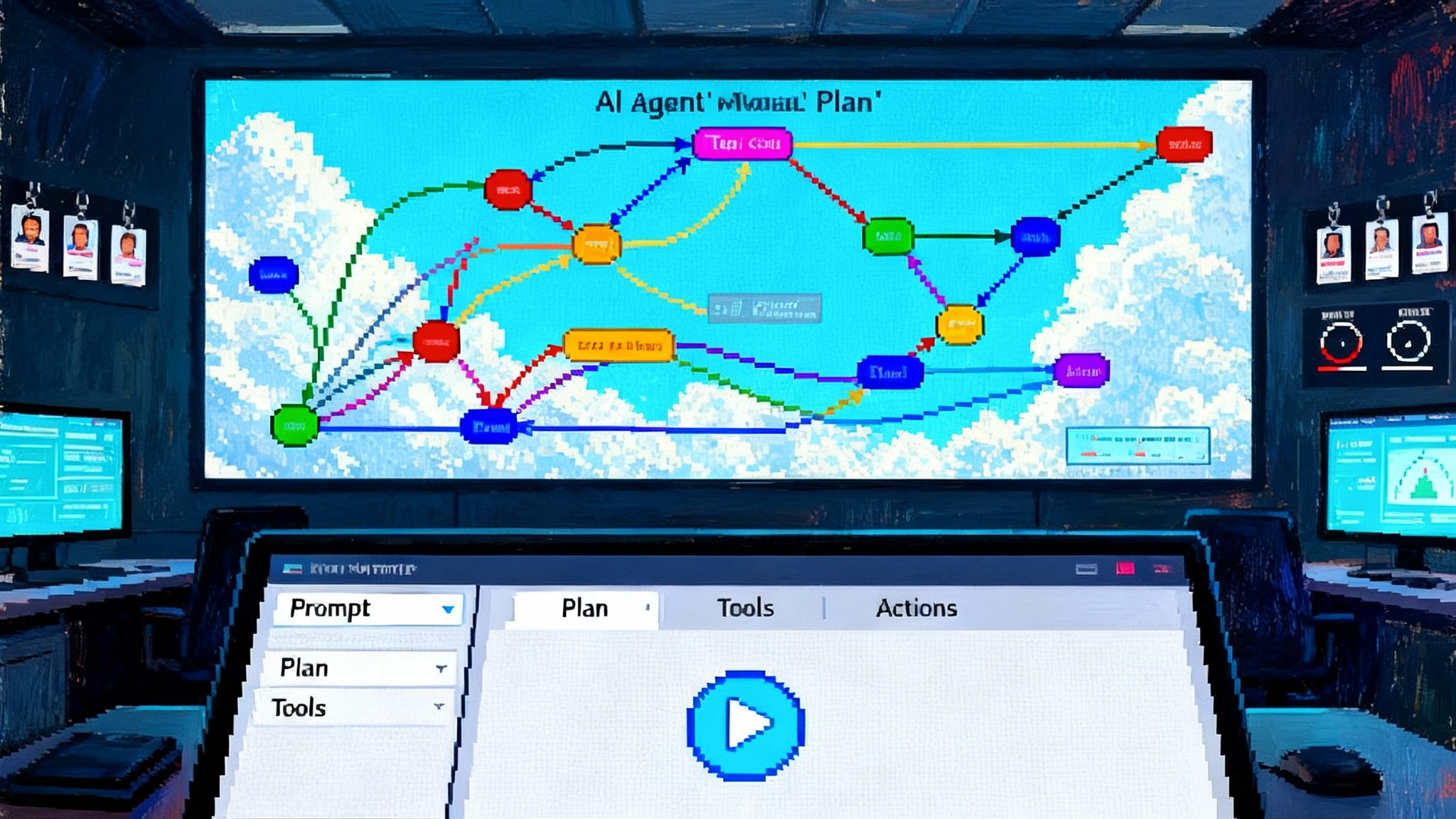

Look at the workflow end to end and the platform shape is clear:

- A developer drafts a spec in Kiro, guided by an agent that already knows how to turn requirements into design and tasks.

- The agent uses Strands under the hood to plan and decide when to call tools via MCP or native integrations.

- Those tools run through AgentCore Gateway, Browser, or Code Interpreter inside a governed runtime with session isolation.

- Identity flows from the user or service account via AgentCore Identity so every call is authorized and auditable.

- Memory stores context and artifacts so the agent does not forget and you do not have to bolt on a vector store.

- Observability pipes traces and metrics into dashboards where SREs and app owners can debug, optimize, and enforce policy.

That is a platform layer. It looks more like Kubernetes for agents than a single managed model endpoint.
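The identity and tool steps in that workflow can be sketched as a small gateway that checks the caller's scopes before dispatching a tool and records every attempt. This is an illustration under stated assumptions, not how AgentCore Gateway or AgentCore Identity are implemented: `Identity`, `Tool`, `ToolGateway`, and the scope strings are all hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, FrozenSet, List


@dataclass(frozen=True)
class Identity:
    principal: str            # user or service account the agent acts for
    scopes: FrozenSet[str]    # permissions granted to that principal


@dataclass
class Tool:
    name: str
    required_scope: str
    handler: Callable[..., object]


@dataclass
class ToolGateway:
    tools: Dict[str, Tool] = field(default_factory=dict)
    audit_log: List[dict] = field(default_factory=list)

    def register(self, tool: Tool) -> None:
        self.tools[tool.name] = tool

    def call(self, identity: Identity, tool_name: str, **kwargs) -> object:
        tool = self.tools.get(tool_name)
        allowed = tool is not None and tool.required_scope in identity.scopes
        # Record the attempt before acting, so denied calls are auditable too.
        self.audit_log.append(
            {"principal": identity.principal, "tool": tool_name, "allowed": allowed}
        )
        if tool is None:
            raise KeyError(f"unknown tool: {tool_name}")
        if not allowed:
            raise PermissionError(
                f"{identity.principal} lacks scope {tool.required_scope}"
            )
        return tool.handler(**kwargs)
```

Because authorization and auditing sit in the gateway rather than in each tool handler, the same policy applies whether the tool wraps an API, a function, or another agent.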

Multi‑model, Bedrock and beyond

AgentCore’s pitch is model agnostic. That matters in a world where buyers want to mix closed and open models for cost, capability, and data residency. With Strands, teams can point the same agent logic at Anthropic in Bedrock, a local Llama, or an external API. With MCP, the tools side is standardized so agents can reach across SaaS, clouds, and internal services without custom glue for each integration.

Two outcomes follow if this approach sticks:

- Standardized deployment. The runtime becomes a uniform target for dev, test, and prod, regardless of the underlying model, which means teams finally get CI and rollback for agent systems.

- Standardized governance. Policies for identity, data access, tool use, and safety can be defined once and enforced everywhere the agent runs.

If you are a platform leader, that is the difference between a pilot and a portfolio.
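One way to picture "the same agent logic, different models" is a narrow interface that the agent depends on, with the concrete model chosen per environment. The sketch below uses stub classes in place of real providers; `Model`, `StubLocalModel`, `StubHostedModel`, `MODELS_BY_ENV`, and `triage` are hypothetical names, and the Strands SDK's actual interfaces may look different.

```python
from typing import Dict, Protocol


class Model(Protocol):
    """The only surface the agent logic is allowed to depend on."""

    def complete(self, prompt: str) -> str: ...


class StubLocalModel:
    """Stand-in for a locally hosted open model."""

    def complete(self, prompt: str) -> str:
        return f"[local] {prompt}"


class StubHostedModel:
    """Stand-in for a hosted model behind a cloud API."""

    def complete(self, prompt: str) -> str:
        return f"[hosted] {prompt}"


# Model choice becomes deployment configuration, not application code.
MODELS_BY_ENV: Dict[str, Model] = {
    "dev": StubLocalModel(),
    "prod": StubHostedModel(),
}


def triage(model: Model, ticket: str) -> str:
    """Agent logic: identical regardless of which model sits underneath."""
    prompt = f"Classify this support ticket: {ticket}"
    return model.complete(prompt)
```

Calling `triage(MODELS_BY_ENV["dev"], ...)` and `triage(MODELS_BY_ENV["prod"], ...)` sends the same prompt through different backends, which is what lets a team compare runtimes or models without touching the agent loop.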

How it compares with Microsoft and Google

Microsoft is racing in the same direction, and in some places is already ahead in production ergonomics for Microsoft‑centric shops. The Azure AI Foundry Agent Service is GA with its own fully managed runtime, an observability plane, A2A and MCP support, and identity through Entra Agent ID. Its browser automation, deep research tooling, and BYO storage are maturing quickly, and the VS Code extension makes the dev experience familiar. See Microsoft’s summary of what is new in Agent Service.

Google’s Vertex AI Agent Builder and Agent Engine emphasize fast turn‑up of grounded agents, tight integration with Google data services, and an opinionated developer toolchain. Google is leaning into open protocols for agent‑to‑agent and model choice inside Vertex. If you are already invested in Google’s data stack, that coherence is a draw.

Where AWS differentiates is the depth of the agent runtime primitives and the breadth of tool surfaces. It is aiming to be the reliable, compliant home for agents that need to act in the real world, not just chat. Identity, browser, and code execution are first class and wired to CloudWatch‑level observability. Strands and Kiro close the loop for developers who want structure, not just prompts.

What this means for buyers in the next 6 to 12 months

If you buy platforms, treat agents like a new application tier and evaluate the runtime first.

Questions to take into pilots and RFPs:

- Runtime guarantees. What is the maximum job duration, what isolation is provided, and how are retries, timeouts, and idempotency handled?

- Identity and policy. Can agents assume user or service identities cleanly? How do you scope permissions and rotate secrets? Can you audit each tool call and data access?

- Tool surface. How easy is it to publish and discover tools? Is MCP supported? Can you convert existing APIs and Lambdas without heavy wrappers? Can agents call other agents safely?

- Memory. What are the options for short‑term and long‑term memory? Can you bring your own storage or vectors? How are data retention, residency, and encryption handled?

- Observability. Are traces, metrics, and safety events unified? Can you pipe telemetry into your existing SIEM and SRE stacks? Are there built‑in evaluations for quality, cost, and safety?

- Developer workflow. How do specs, tests, and code changes tie back to agent actions? Can you run locally and deploy to the same runtime without rewrites? How opinionated is the IDE?

- Multi‑model. Can you swap models per environment or per task? Are quotas, costs, and safety settings enforceable at the runtime layer, not just in app code?

- Portability. If you build with this SDK and runtime, what parts are portable to other clouds? Are protocols and interfaces open, or are you coding to a proprietary surface?

- Cost and capacity planning. Does the vendor provide unit‑level cost controls by tool, by model, by agent? How do you cap spend without breaking SLAs?
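The cost question is easier to ask vendors if you know what a hard cap looks like in practice. As a rough sketch, assuming per-agent budgets in dollars and a hypothetical `SpendGuard` class (no vendor's real billing API is shown here), a runtime-level control rejects a charge before it happens rather than reporting an overrun afterward:

```python
from collections import defaultdict
from typing import Dict


class BudgetExceeded(Exception):
    """Raised when a charge would push an agent past its cap."""


class SpendGuard:
    """Tracks spend per agent and blocks charges that would exceed the cap."""

    def __init__(self, caps_usd: Dict[str, float]) -> None:
        self.caps_usd = dict(caps_usd)
        self.spent_usd: Dict[str, float] = defaultdict(float)

    def charge(self, agent: str, amount_usd: float) -> float:
        """Record a charge and return the remaining budget for that agent."""
        if amount_usd < 0:
            raise ValueError("amount_usd must be non-negative")
        cap = self.caps_usd.get(agent, 0.0)  # agents without a cap get no budget
        projected = self.spent_usd[agent] + amount_usd
        if projected > cap:
            # Reject before spending; recorded spend is left unchanged.
            raise BudgetExceeded(f"{agent}: {projected:.2f} exceeds cap {cap:.2f}")
        self.spent_usd[agent] = projected
        return cap - projected
```

The same shape extends to caps by tool or by model by changing what the key represents. What matters in an RFP is whether the vendor enforces something like this in the runtime or leaves it to your application code.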

Pilot design suggestions:

- Start with one high‑leverage workflow that crosses systems, for example an agent that triages support tickets, files issues, and pushes fixes. You want tool use, identity, and long‑running work.

- Run the same blueprint on two clouds. Use MCP for tools, keep the agent loop identical, and evaluate runtime behavior, not model quality.

- Wire up traces and cost dashboards on day one. You are testing operability as much as capability.

- Gate promotion to production on non‑functional outcomes. Time to trace, mean time to recovery on agent errors, cost predictability per unit of work, and policy compliance should decide the winner.
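The promotion gate in the last suggestion can be written down as an explicit check so the decision is not made by feel. The following is a sketch only: the field names, the thresholds, and the choice of three checks are assumptions for illustration, and your pilot would substitute its own measurements.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class PilotRun:
    cost_usd: float                # total spend during the pilot window
    units_of_work: int             # e.g. tickets triaged
    recovery_minutes: List[float]  # time to recover from each agent error
    policy_violations: int


@dataclass
class Thresholds:
    max_cost_per_unit: float
    max_mttr_minutes: float
    max_policy_violations: int = 0


def evaluate(run: PilotRun, limits: Thresholds) -> dict:
    """Compute non-functional metrics and decide whether to promote."""
    if run.units_of_work <= 0:
        raise ValueError("pilot must complete at least one unit of work")
    cost_per_unit = run.cost_usd / run.units_of_work
    # Mean time to recovery; zero if the pilot saw no errors.
    mttr = mean(run.recovery_minutes) if run.recovery_minutes else 0.0
    checks = {
        "cost": cost_per_unit <= limits.max_cost_per_unit,
        "mttr": mttr <= limits.max_mttr_minutes,
        "policy": run.policy_violations <= limits.max_policy_violations,
    }
    return {
        "cost_per_unit": cost_per_unit,
        "mttr_minutes": mttr,
        "checks": checks,
        "promote": all(checks.values()),
    }
```

Running the same `evaluate` against each cloud's pilot data gives a like-for-like comparison of runtime behavior, independent of which model produced the answers.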

Procurement signals to watch:

- AWS. How quickly AgentCore regions expand, and how tightly Kiro and Strands integrate with Identity, Observability, and Gateway by default. Closer coupling means less glue for customers.

- Microsoft. How far Entra Agent ID and Purview‑level governance can automate agent sprawl controls across Foundry and Copilot Studio, and whether its browser automation and deep research tools become robust enough for regulated use.

- Google. Whether Agent Engine becomes the default managed runtime for Vertex agents and how cleanly it interoperates with open protocols and non‑Google tools.

Risks and uncertainties

The agent stack is moving fast. Two risks stand out for buyers:

- Pricing volatility. Early agent services are experimenting with pricing units. Nail down how your vendor measures interactions, tool calls, and long jobs, and insist on hard spend controls.

- Protocol churn. MCP and agent‑to‑agent are converging toward de facto standards, but details will shift. Insulate yourself by keeping tool definitions and agent loops as portable as possible.
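One practical way to keep tool definitions portable is to hold them as plain, serializable data rather than as code bound to one SDK. The sketch below is loosely shaped after the fields MCP uses when listing tools (`name`, `description`, `inputSchema`), but treat the exact shape as an assumption and check it against the current specification. The `create_issue` tool and the `validate_args` helper are hypothetical, and the validator covers only required fields and a few primitive types, not full JSON Schema.

```python
import json
from typing import Any, Dict, List

# A tool described as plain data: easy to store, diff, and load on any runtime.
CREATE_ISSUE_TOOL: Dict[str, Any] = {
    "name": "create_issue",
    "description": "File an issue in the tracker.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "title": {"type": "string"},
            "priority": {"type": "integer"},
        },
        "required": ["title"],
    },
}

_PY_TYPES = {"string": str, "integer": int, "number": (int, float), "boolean": bool}


def validate_args(tool: Dict[str, Any], args: Dict[str, Any]) -> List[str]:
    """Return a list of problems with args; an empty list means they pass."""
    schema = tool["inputSchema"]
    errors: List[str] = []
    for name in schema.get("required", []):
        if name not in args:
            errors.append(f"missing required argument: {name}")
    for name, value in args.items():
        spec = schema.get("properties", {}).get(name)
        if spec is None:
            errors.append(f"unknown argument: {name}")
            continue
        expected = spec["type"]
        # bool is a subclass of int in Python, so reject it for non-boolean fields.
        wrong_bool = isinstance(value, bool) and expected != "boolean"
        if wrong_bool or not isinstance(value, _PY_TYPES[expected]):
            errors.append(f"{name}: expected {expected}")
    return errors


def export_tool(tool: Dict[str, Any]) -> str:
    """Serialize the definition so it can live in version control."""
    return json.dumps(tool, indent=2, sort_keys=True)
```

If the protocol details shift, a definition kept this way needs a field mapping rather than a rewrite, which is the insulation the bullet above is asking for.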

The bottom line

AWS is not trying to win because it has the smartest model. It is trying to win because it can operate the most trustworthy agent runtime at cloud scale, and because it can make building agents feel like software engineering rather than prompt alchemy. AgentCore is the substrate, Strands is the open development layer, and Kiro is the human interface that turns specs into code with agents in the loop.

If Amazon succeeds, the enterprise conversation will stop being which model is best and start being whose runtime you trust to run your agents. Over the next 6 to 12 months, evaluate accordingly. Treat agents as a new tier in your stack, pilot runtimes head to head, and buy the platform that makes your agents both capable and governable at the same time.