Citi’s agentic AI pilot goes live in finance at scale

On September 22, 2025, Citi activated agentic capabilities inside Stylus Workspaces for 5,000 employees. Here is what that means for regulated AI, how the stack is evolving, and a 12‑month roadmap leaders can execute with confidence.

The day agentic AI goes live in finance

On September 22, 2025, Citigroup moved agentic AI from demo to daily work, turning on agentic capabilities inside Citi Stylus Workspaces for 5,000 employees. Early reporting describes single-prompt experiences that trigger multistep workflows over internal and external data, supported by strong controls and monitoring. It is a watershed moment because it shows that a global bank can put agents in front of real users while satisfying the governance, cost, and change-management requirements that would normally stall these launches. See the initial scope in CIO Dive's detailed report.

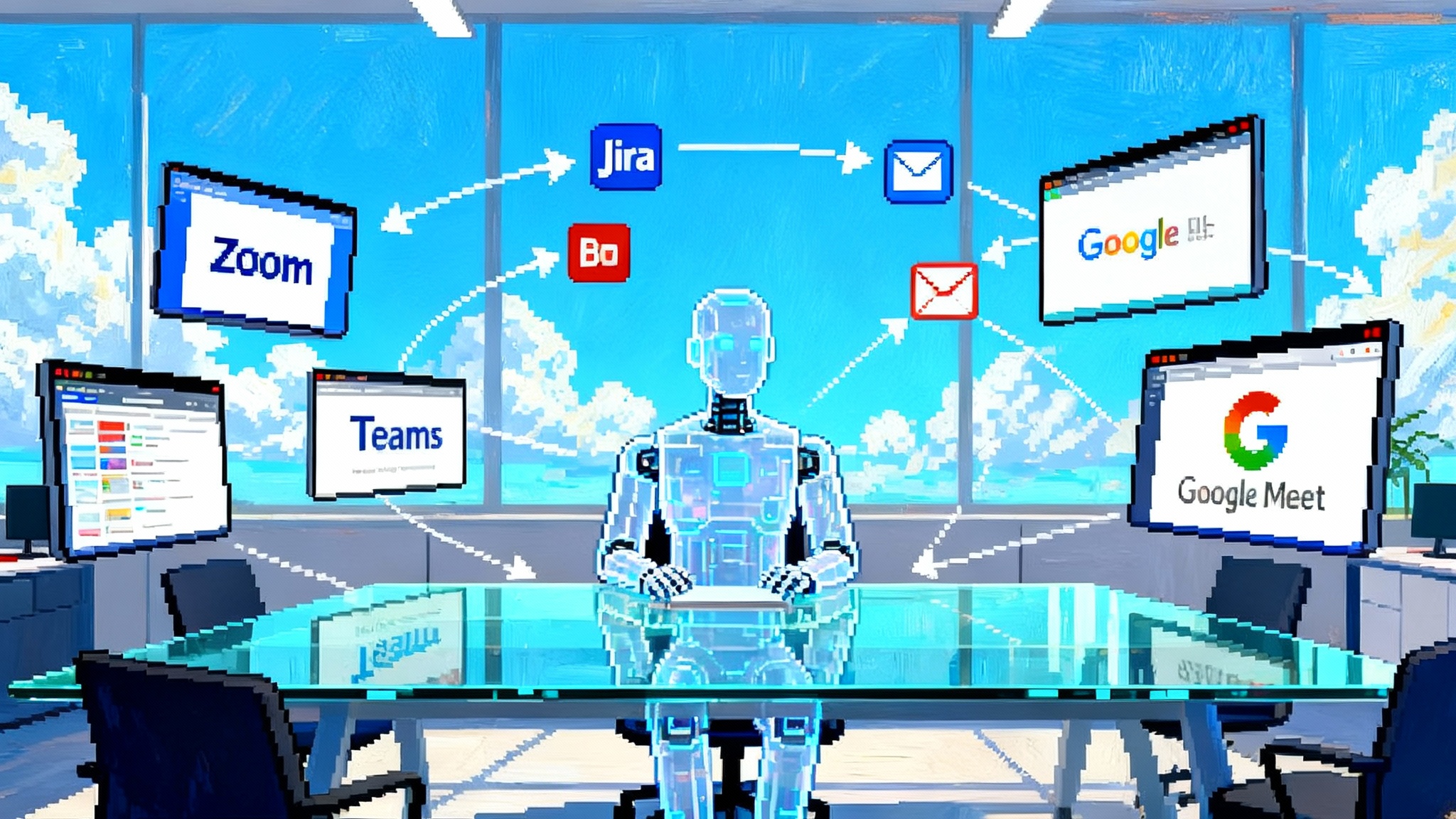

Agentic systems combine reasoning models, tool use, and retrieval across enterprise systems to complete tasks rather than only answer questions. Instead of asking for a paragraph about a client, a banker issues a single prompt like “Prepare a client briefing for Thursday” and the agent searches entitlement-compliant sources, drafts slides, checks approvals, and packages the output for review. The promise is cycle-time compression with full traceability.

From demos to dependable workflows

For the last two years, many teams showcased agents that looked magical in controlled demos. Production reality in a regulated bank is different. You need identity and access checks, human-in-the-loop review, audit trails, model risk assessments, cost controls, and a predictable user experience. Citi’s rollout signals that the maturity curve has shifted. The ingredients exist to make agents dependable enough for frontline roles without sacrificing oversight.

What changes when agents move into production inside a bank

- The unit of work becomes a task, not a message. The experience must accept a goal and decompose it into steps, tools, and approvals.

- Guardrails are explicit and layered. The agent must provably avoid acting outside the user’s entitlements, and it must capture a full log of the steps it took.

- Latency, cost, and accuracy must be measured per step, not just end to end. That enables tuning of the plan and model choices.

- Outcomes must be reproducible. You need determinism controls, prompts under version control, and frozen tool contracts.

What Citi appears to be rolling out

While details will evolve as the pilot expands, the shape is visible. Inside Stylus Workspaces, users can initiate agentic workflows from a familiar UI. The agent orchestrates retrieval across entitlement-compliant repositories, invokes bank-approved tools, and presents outputs for review and submission. Think client briefings, RFP responses, portfolio summaries, policy comparisons, and risk checklist preparation. Tasks that previously required a dozen clicks across multiple systems are condensed into a single prompt with step-by-step transparency.

The bank’s approach reflects a pragmatic pattern many Fortune 500 companies now adopt: combine frontier models with an orchestration layer that prefers deterministic tools when available, lean on retrieval for institutional memory, and route to the most cost-efficient model that can hit the task’s quality bar. Human review remains central whenever the agent proposes external communications, risk-relevant judgments, or system updates.

The stack that makes agentic work possible

Below is a vendor-agnostic breakdown of the stack behind an enterprise agentic rollout. Specific technologies will vary by company, but the architecture is converging on these layers. Many teams combine models such as Gemini or Claude for reasoning with internal connectors and bank-approved tools. The point is not a single model, but reliable orchestration. For deeper design patterns, see our guide to enterprise agentic architecture.

1) Experience layer

- Chat plus forms. Users start with natural language, then the agent surfaces structured fields to reduce ambiguity. This helps with approvals, handoffs, and downstream automation.

- Task gallery. Prebuilt workflows for common jobs reduce prompt crafting and standardize outputs. Each template has visible governance and owners.

- Draft, review, submit. Every agent run ends with a review stage where users can edit or reject before anything is published or sent.

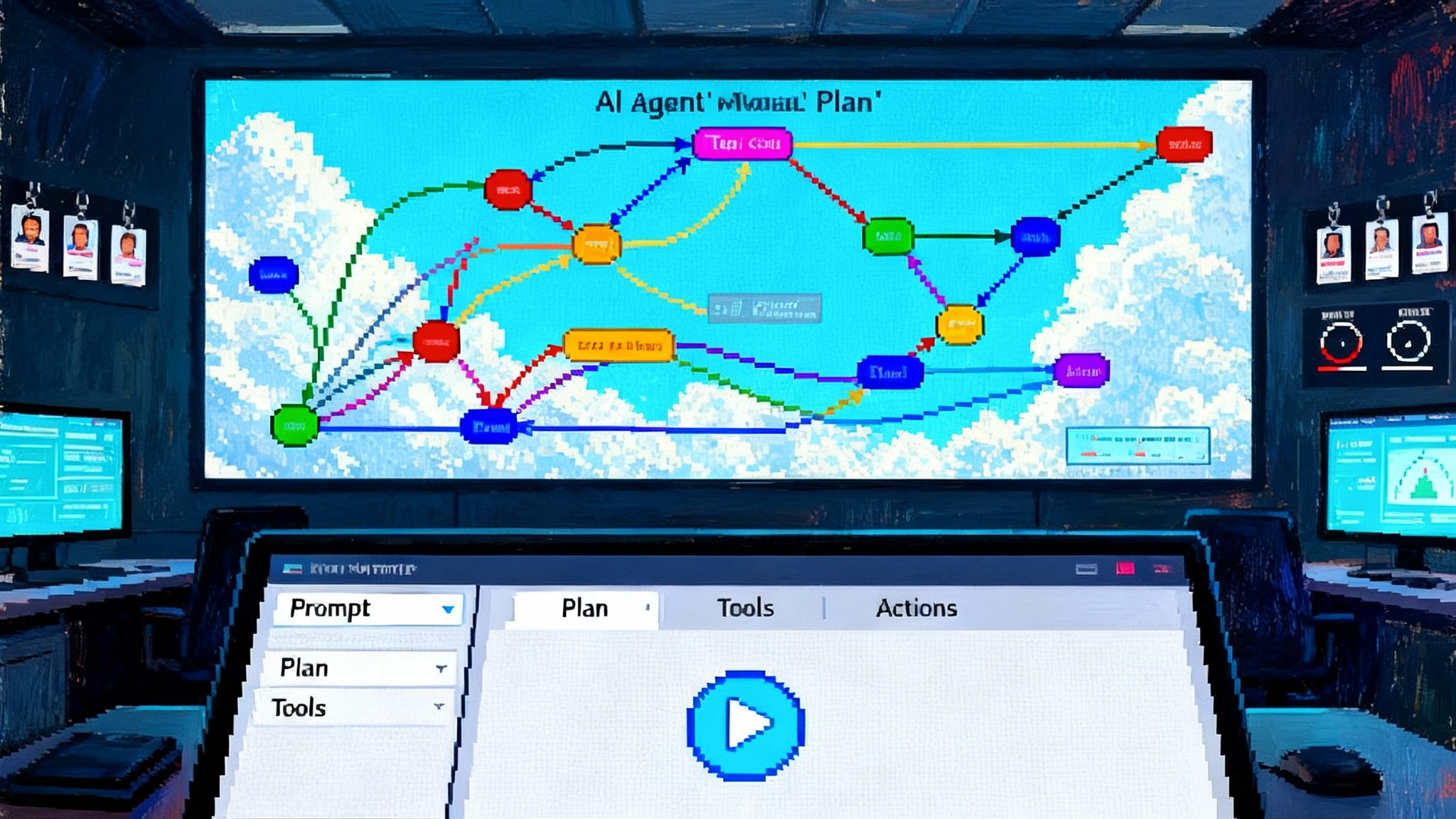

2) Orchestration and planning

- Planner. Breaks the goal into steps, selects tools, and sequences calls. Plans are versioned and testable.

- Tool calling. Function contracts keep inputs and outputs typed, logged, and validated. Agents do not get raw database access; they call curated tools.

- Memory. Short term run memory lives with the plan. Long term memory is explicit through retrieval. Implicit long term memory is minimized to keep behavior predictable.

- Determinism controls. Temperature, top p, and tool fallback rules are set per step. For critical steps, the agent uses deterministic tools or cached snippets instead of free form generation.
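The tool calling bullet above can be sketched in a few lines. This is a minimal illustration, not a description of Citi's implementation: `ToolContract`, `ToolRegistry`, and `lookup_policy` are hypothetical names, and type checking is reduced to `isinstance` on top-level arguments.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class ToolContract:
    """Hypothetical contract describing one curated tool."""
    name: str
    input_types: dict[str, type]
    output_type: type
    handler: Callable[..., Any]


class ToolRegistry:
    """Validates and logs every call; tools outside the catalog are rejected."""

    def __init__(self) -> None:
        self._tools: dict[str, ToolContract] = {}
        self.call_log: list[dict[str, Any]] = []

    def register(self, contract: ToolContract) -> None:
        self._tools[contract.name] = contract

    def call(self, name: str, **kwargs: Any) -> Any:
        if name not in self._tools:
            raise KeyError(f"tool not in catalog: {name}")
        contract = self._tools[name]
        # Inputs must match the contract exactly: same names, expected types.
        if set(kwargs) != set(contract.input_types):
            raise TypeError(f"{name}: expected arguments {sorted(contract.input_types)}")
        for key, expected in contract.input_types.items():
            if not isinstance(kwargs[key], expected):
                raise TypeError(f"{name}: {key} must be {expected.__name__}")
        result = contract.handler(**kwargs)
        if not isinstance(result, contract.output_type):
            raise TypeError(f"{name}: output must be {contract.output_type.__name__}")
        # Only validated calls reach the log, which feeds the audit trail.
        self.call_log.append({"tool": name, "inputs": kwargs, "output": result})
        return result


def lookup_policy(policy_id: str) -> str:
    # Stand-in for a deterministic, bank-approved lookup (assumed for illustration).
    return f"Policy {policy_id}: retention 7 years"


registry = ToolRegistry()
registry.register(ToolContract("lookup_policy", {"policy_id": str}, str, lookup_policy))
```

Calling `registry.call("lookup_policy", policy_id="P-1")` returns the tool's string and appends one log entry, while a call to an unregistered tool or with a wrongly typed argument raises before anything executes.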

3) Model layer

- Multimodel router. Selects a model per step based on cost, latency, and required reasoning depth. Lighter models handle extraction and classification. Heavier models handle synthesis and planning.

- Frontier models. Teams often pair models like Gemini or Claude for strong reasoning and tool use, while maintaining portability to alternative providers.

- Small models for safety. Lightweight models perform PII detection, redaction, and policy screens before outputs leave the workspace.
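One way to picture the multimodel router is as a filter followed by a cost minimization. The sketch below assumes a hypothetical catalog: the model names, prices, latencies, and the three-level `reasoning_tier` scale are invented for illustration and do not describe any real provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    cost_per_1k_tokens: float   # illustrative figures only
    p95_latency_ms: int
    reasoning_tier: int         # 1 = extraction, 2 = drafting, 3 = planning


# Hypothetical catalog; a real router would load this from configuration.
CATALOG = [
    ModelProfile("small-extractor", 0.10, 300, 1),
    ModelProfile("mid-generalist", 0.80, 900, 2),
    ModelProfile("frontier-reasoner", 4.00, 2500, 3),
]


def route(required_tier: int, latency_budget_ms: int, catalog=CATALOG) -> ModelProfile:
    """Return the cheapest model that meets the step's depth and latency needs."""
    candidates = [
        m for m in catalog
        if m.reasoning_tier >= required_tier and m.p95_latency_ms <= latency_budget_ms
    ]
    if not candidates:
        raise LookupError("no model satisfies this step's constraints")
    return min(candidates, key=lambda m: m.cost_per_1k_tokens)
```

With this catalog, an extraction step with a one-second budget goes to `small-extractor`, while a planning step needs a budget large enough to admit `frontier-reasoner` or the router raises, which is a cue to relax the plan rather than silently degrade quality.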

4) Data and retrieval

- Connectors to internal content systems, email archives, CRM, policy libraries, product docs, and market data. Each connector enforces the user’s entitlements.

- Retrieval augmented generation. Indexes are built with clear retention and refresh rules. Queries are constrained to approved sources. Attribution is mandatory in outputs.

- Data minimization. Only the minimum required snippets are passed to the model. Sensitive fields are masked when possible.
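The three retrieval rules above (entitlement enforcement, constrained sources, minimal masked snippets) compose naturally. The following is a toy sketch under stated assumptions: keyword overlap stands in for a real index, a single regular expression stands in for a proper sensitive-data detector, and `Snippet`, `mask_sensitive`, and `retrieve` are hypothetical names.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Snippet:
    source_id: str
    required_entitlement: str
    text: str


# Assumption: account numbers are 8 to 12 digit runs. Real detectors are broader.
ACCOUNT_PATTERN = re.compile(r"\b\d{8,12}\b")


def mask_sensitive(text: str) -> str:
    return ACCOUNT_PATTERN.sub("[REDACTED]", text)


def retrieve(query, corpus, user_entitlements, max_snippets=2):
    """Filter by entitlement first, then rank, cap, and mask what is returned."""
    terms = set(query.lower().split())
    allowed = [s for s in corpus if s.required_entitlement in user_entitlements]
    scored = [(len(terms & set(s.text.lower().split())), s) for s in allowed]
    ranked = sorted((pair for pair in scored if pair[0] > 0),
                    key=lambda pair: pair[0], reverse=True)
    # source_id is preserved so every snippet can be attributed in the output.
    return [
        Snippet(s.source_id, s.required_entitlement, mask_sensitive(s.text))
        for _, s in ranked[:max_snippets]
    ]
```

The ordering matters: documents the user cannot see are dropped before scoring, so they can never influence ranking or leak into the model's context.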

5) Tooling and execution

- Bank approved tools for search, pricing, risk rules, and document generation. Tools run in a sandboxed environment with strict outbound rules.

- Code execution for light data wrangling, with resource limits, deny lists, and static analysis.

- Office and slide generation plugins that preserve branding and accessibility standards.

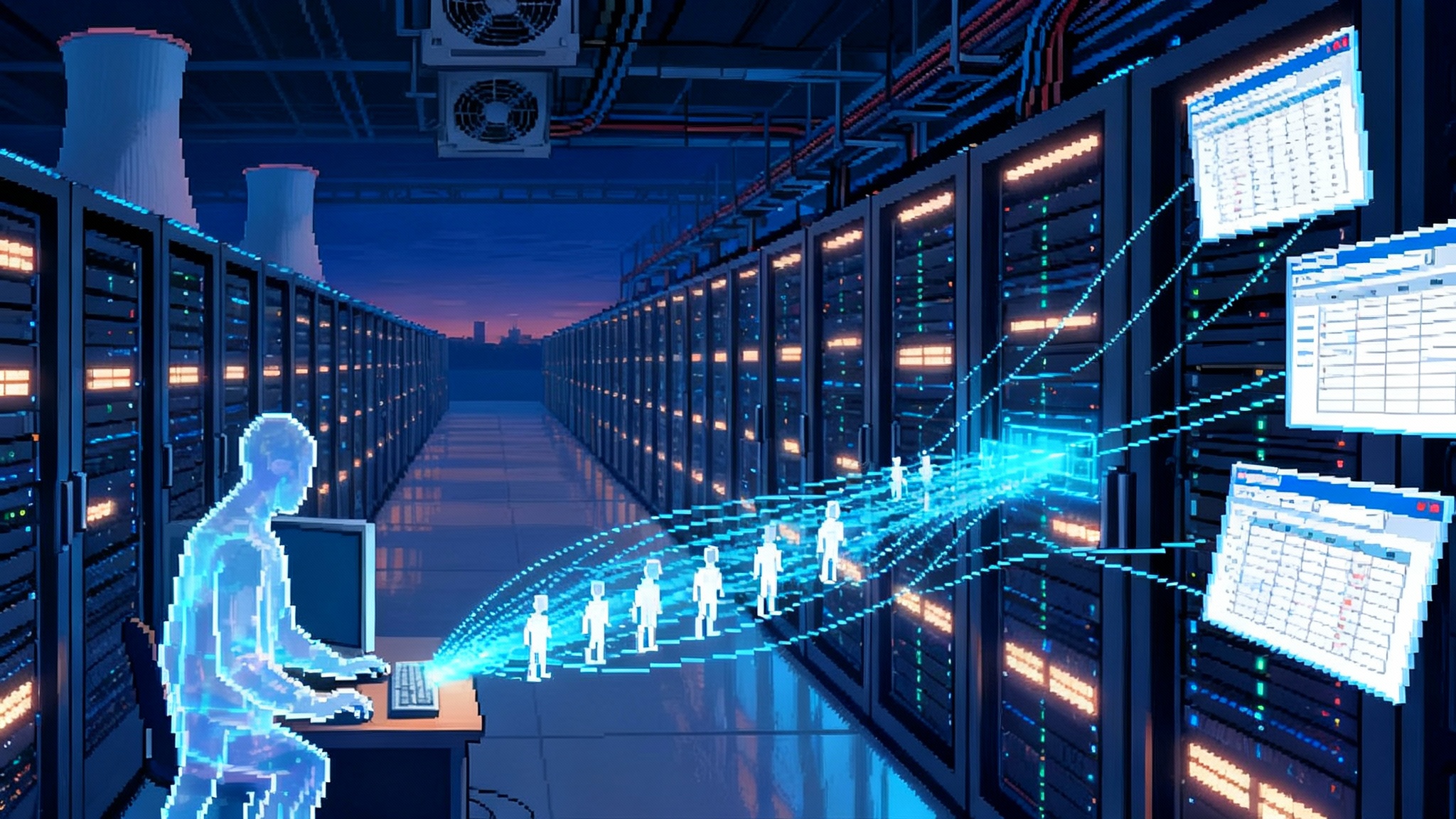

6) Observability and evaluation

- Step level logs with structured metadata for prompts, model calls, tool calls, and results. This forms the audit trail.

- Evaluation harnesses with golden sets for the top workflows. Quality is measured on content correctness, source attribution, policy compliance, latency, and cost.

- Drift detection. When data, tools, or prompts change, the system alerts owners and automatically reruns evals.
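As a rough sketch of the first two items, step-level records can be serialized as one JSON line each and aggregated per step, and a golden set can be scored with a simple pass rate. The field names and the citation-only pass criterion are assumptions made for this example; a real harness would also score correctness and policy compliance.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class StepLog:
    run_id: str
    step: str
    kind: str          # "model_call" or "tool_call"
    latency_ms: int
    cost_usd: float


def to_audit_line(entry: StepLog) -> str:
    """Serialize one step as a stable JSON line for the audit trail."""
    return json.dumps(asdict(entry), sort_keys=True)


def summarize(entries):
    """Aggregate calls, latency, and cost per step rather than end to end."""
    totals = {}
    for e in entries:
        agg = totals.setdefault(e.step, {"calls": 0, "latency_ms": 0, "cost_usd": 0.0})
        agg["calls"] += 1
        agg["latency_ms"] += e.latency_ms
        agg["cost_usd"] += e.cost_usd
    return totals


def golden_pass_rate(workflow, golden_set):
    """Share of golden cases whose output cites every required source."""
    passed = 0
    for case in golden_set:
        output = workflow(case["input"])
        if all(source in output for source in case["must_cite"]):
            passed += 1
    return passed / len(golden_set)
```

A release gate could then compare `golden_pass_rate` against a threshold each time a prompt, tool, or index changes.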

Governance that satisfies regulators

Putting agents in front of bankers requires evidence, not assurances. The controls below map cleanly to expectations in the NIST AI Risk Management Framework. For a concise reference, read the NIST AI RMF core guidance. For practical checklists, see our regulated AI governance checklist.

- Role based access. Agents inherit user entitlements. If a user cannot see a repository or tool, neither can the agent.

- Action allowlists. Agents can only perform actions from a preapproved catalog. Anything outside that set forces a human review.

- Two person checks for critical steps. When an agent proposes sending external communications or initiating system updates, it requires a second human approver.

- Data lineage and attribution. Every output references its sources. Users can click back to the origin content with timestamps and access context.

- Model risk documentation. Prompts, tools, and models are versioned. Changes trigger validation and regression tests.

- Safety screens. PII detection, policy filters, and content classifiers run before outputs leave the workspace.

- Audit readiness. Structured logs tie each run to the user, inputs, plan, tools, and outputs.
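The action allowlist and two-person check can be expressed as a small decision function. This is a sketch under assumptions: the action names and the three outcome strings are hypothetical, and the requesting user is treated as the first person, so a second approver must be someone else.

```python
from dataclasses import dataclass, field

# Hypothetical preapproved catalog and the subset treated as critical.
ALLOWED_ACTIONS = {"draft_document", "internal_submit", "send_external_email"}
CRITICAL_ACTIONS = {"send_external_email"}


@dataclass
class ProposedAction:
    action: str
    requested_by: str
    approvers: set[str] = field(default_factory=set)


def decide(proposal: ProposedAction) -> str:
    """Return 'allowed', 'needs_second_approver', or 'needs_human_review'."""
    if proposal.action not in ALLOWED_ACTIONS:
        # Anything outside the catalog is never executed automatically.
        return "needs_human_review"
    if proposal.action in CRITICAL_ACTIONS:
        # Self-approval does not count toward the two-person check.
        independent = proposal.approvers - {proposal.requested_by}
        if not independent:
            return "needs_second_approver"
    return "allowed"
```

Keeping the decision in one pure function makes it easy to unit test and to show to auditors alongside the logs it produces.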

Cost controls that make scale possible

Agentic systems are sensitive to cost because a single task fans out into many steps. Without discipline, unit economics can spiral. The following controls are what mature pilots, including Citi’s, tend to test early. For hands on guidance, review our playbook on AI FinOps cost controls.

- Tiered model policy. Route extract and classify steps to lightweight models. Reserve large models for planning and high judgment synthesis.

- Prompt budgets. Each plan step has a context limit and token target. The planner can prune context or switch models to meet budget.

- Caching and reuse. Retrieve once, reuse across steps and users when content and entitlements match. Cache tool results with short TTLs for popular queries.

- Batch where possible. Group similar calls, such as classification across a set of documents, to reduce per call overheads.

- Incremental retrieval. Start with a tight window of sources. Expand only if quality checks fail.

- Cost observability. Every run includes a cost breakdown by model and tool. Teams track trend lines and set alerts on anomalies.

- Guarded creativity. Noncritical steps allow mild creativity. Critical steps pin deterministic settings.

- Test on synthetic data. Use generated but realistic corpora to evaluate prompt and plan changes before exposing them to production data.
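Two of these controls, prompt budgets and entitlement-aware caching, are simple enough to sketch. The example assumes whitespace word counts as a stand-in for a real tokenizer and an in-memory dictionary as the cache; `prune_to_budget` and `EntitlementCache` are hypothetical names.

```python
import time


def prune_to_budget(snippets, token_budget, count_tokens=lambda s: len(s.split())):
    """Keep snippets in relevance order until the step's token budget is spent."""
    kept, used = [], 0
    for snippet in snippets:
        cost = count_tokens(snippet)
        if used + cost > token_budget:
            break
        kept.append(snippet)
        used += cost
    return kept


class EntitlementCache:
    """Short-TTL cache keyed by query AND entitlement set, so results are
    only reused when content and entitlements match."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._store = {}

    @staticmethod
    def _key(query, entitlements):
        return (query, frozenset(entitlements))

    def put(self, query, entitlements, value):
        self._store[self._key(query, entitlements)] = (self._clock(), value)

    def get(self, query, entitlements):
        key = self._key(query, entitlements)
        hit = self._store.get(key)
        if hit is None:
            return None
        stored_at, value = hit
        if self._clock() - stored_at > self._ttl:
            del self._store[key]   # expired: force a fresh retrieval
            return None
        return value
```

Including the entitlement set in the cache key is the important design choice: a user with broader or narrower access gets a cache miss rather than someone else's result.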

Early ROI signals to watch

Leaders should look for leading indicators that precede hard dollar benefits. The first six to eight weeks of a pilot should produce signal on:

- Cycle time reduction. Time from prompt to reviewed draft for a standard workflow. Target a 40 to 60 percent reduction before full automation.

- Touchpoints removed. Count of systems a user must open for the task. A drop from six to two is material for adoption.

- Adoption health. Daily active users over eligible users, median tasks per user, and the percentage of repeat users.

- First pass quality. Share of drafts accepted with light edits. You want a rising trend as prompts and templates stabilize.

- Compliance exception rate. Frequency of policy flags per 100 tasks. This should trend down as safety screens and templates improve.

- Cost per task. Measured including model calls and tool execution. Sustainable gains show as quality rises while cost holds flat or drops.

- Rework avoided. Minutes saved in reformatting and sourcing citations due to standardized outputs and attribution.
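Several of these indicators reduce to simple arithmetic over a task log. The sketch below assumes a hypothetical record shape (`user`, `minutes`, `accepted`, `policy_flags`, `cost_usd`) and simplifies adoption to active users over eligible users for the whole window rather than per day.

```python
from statistics import median


def pilot_metrics(tasks, eligible_users, baseline_minutes):
    """Compute leading indicators from a list of task records (assumed schema)."""
    total = len(tasks)
    active_users = {t["user"] for t in tasks}
    median_minutes = median([t["minutes"] for t in tasks])
    return {
        # Active users over eligible users for the window being measured.
        "adoption_rate": len(active_users) / eligible_users,
        # Fractional reduction against the pre-pilot baseline for the same workflow.
        "cycle_time_reduction": 1 - median_minutes / baseline_minutes,
        "first_pass_quality": sum(1 for t in tasks if t["accepted"]) / total,
        "exceptions_per_100_tasks": 100 * sum(t["policy_flags"] for t in tasks) / total,
        "cost_per_task_usd": sum(t["cost_usd"] for t in tasks) / total,
    }
```

Tracking these weekly per template, rather than in aggregate, makes it easier to see which workflows are stabilizing and which need rework.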

Hard benefits follow once teams codify stable workflows into templates and production SLAs. Expect the first dollar savings to show up in reduced contractor hours for document preparation, faster turnaround on client deliverables, and fewer compliance reviews per artifact because every output is prestructured and cited.

What could go wrong, and how to prevent it

Agentic rollouts fail in predictable ways. Anticipate these pitfalls:

- Tool hallucinations. The agent invents a tool or misreads a contract. Prevent with strict tool catalogs and schema validation.

- Stale sources. Retrieval indexes lag reality. Prevent with freshness policies, timestamp checks, and content quality monitors.

- Automation surprise. Users assume the agent sent a message or booked a trade. Prevent with clear UI separation of draft, review, and submit, plus two person checks.

- Access escalation. The agent attempts to combine entitlements across users. Prevent with user bound contexts and signed tool calls that include user identity claims.

- Policy drift. Prompt tweaks change behavior over time. Prevent with prompt versioning and automated regression evals before release.

- Hidden unit costs. Complex plans fan out to dozens of calls. Prevent with plan level budgets and per step caps, plus caching.

- Support burden. Agents create new failure modes. Prevent with L1 runbooks, L2 prompt and tool debugging procedures, and L3 engineering on call for orchestration.
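The access escalation mitigation, signed tool calls that carry user identity claims, can be illustrated with an HMAC over the call payload. This is a minimal sketch using Python's standard `hmac` module; the envelope layout and function names are assumptions, and a production system would more likely use short-lived tokens issued by its identity provider.

```python
import hashlib
import hmac
import json


def sign_tool_call(secret: bytes, user_id: str, tool: str, args: dict) -> dict:
    """Bind the user identity to the tool call so neither can be swapped later."""
    payload = json.dumps({"user": user_id, "tool": tool, "args": args}, sort_keys=True)
    signature = hmac.new(secret, payload.encode(), hashlib.sha256).hexdigest()
    return {"payload": payload, "signature": signature}


def verify_tool_call(secret: bytes, envelope: dict, session_user: str) -> bool:
    """Accept only untampered calls whose identity claim matches the session."""
    expected = hmac.new(secret, envelope["payload"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, envelope["signature"]):
        return False
    return json.loads(envelope["payload"])["user"] == session_user
```

The tool side verifies before executing, so a call signed for one user cannot be replayed inside another user's session, and any edit to the arguments invalidates the signature.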

A 12 month roadmap any Fortune 500 can execute

Every company’s context is different, but the sequence below is repeatable. It assumes you start in Q4 of 2025 and want production agents in a year.

Quarter 1: Prove the stack on three workflows

- Select three high-frequency workflows that are document heavy, low risk, and have clear owners. Examples include client briefing prep, policy comparison summaries, and internal Q&A with citations.

- Stand up the orchestration layer with a planner, tool catalog, retrieval, and logging. Choose two frontier models for routing and one lightweight model for safety screens.

- Build connectors to the few sources these workflows need. Enforce entitlements at the connector, not in prompts.

- Define your evaluation harness with golden sets for each workflow. Lock in quality, latency, and cost SLOs.

- Establish a change advisory process for prompts, tools, and indexes. Document everything from day one.

Quarter 2: Pilot with 300 users in two business units

- Launch a limited pilot with power users and their managers. Use a chat plus forms UI with standardized templates.

- Keep human in the loop for all external facing outputs. Require a second approver for anything that leaves the bank.

- Instrument adoption, quality, exception rate, and cost per task. Review weekly. Kill or fix failing templates fast.

- Begin a red team program for prompt injection, data exfiltration, and policy evasion. Log every finding and mitigation.

- Stand up FinOps for AI. Track spend by model, use model routing policies, and set run time budgets.

Quarter 3: Scale to 2,000 users and expand tools

- Promote the best performing templates to a task gallery. Onboard two more workflows that touch structured systems.

- Introduce automation tiers. Tier 0 is draft only. Tier 1 allows internal submissions after one review. Tier 2 allows external sends with two approvers.

- Train SMEs as template owners and reviewers. Publish SLAs for assistance and fixes.

- Harden production. Add disaster recovery for the orchestration layer, tool health checks, and index freshness alerts.
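The automation tiers described above lend themselves to a small policy table. The sketch assumes that higher tiers include the permissions of lower ones and that each review counts as one approval; `Tier`, `RELEASE_RULES`, and `can_release` are hypothetical names.

```python
from enum import IntEnum


class Tier(IntEnum):
    DRAFT_ONLY = 0        # Tier 0: nothing leaves the workspace
    INTERNAL_SUBMIT = 1   # Tier 1: internal submissions after one review
    EXTERNAL_SEND = 2     # Tier 2: external sends with two approvers


# destination -> (minimum template tier, approvals required)
RELEASE_RULES = {
    "internal": (Tier.INTERNAL_SUBMIT, 1),
    "external": (Tier.EXTERNAL_SEND, 2),
}


def can_release(template_tier: Tier, destination: str, approvals: int) -> bool:
    """Check whether a template at this tier may release to the destination."""
    rule = RELEASE_RULES.get(destination)
    if rule is None:
        return False   # unknown destinations are denied by default
    min_tier, needed = rule
    return template_tier >= min_tier and approvals >= needed
```

A Tier 0 template can never release anything regardless of approvals, and a Tier 2 template still needs both approvers before an external send.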

Quarter 4: Enterprise rollout with governance by design

- Move from a project to a platform. Offer a catalog of approved tools, connectors, and templates that product teams can reuse.

- Institutionalize the AI change advisory board, red team, and model risk review. Tie releases to eval thresholds.

- Contract with at least two model providers to avoid lock in. Document your router policies and failure fallbacks.

- Commission an independent validation. Invite audit to review logs, lineage, and approvals on a random sample of tasks.

- Codify your playbook for new workflows, from intake to template to production, so business teams can self serve.

Why this moment matters

Citi’s activation of agentic capabilities for 5,000 employees is not just a big bank milestone. It is a proof point that a regulated enterprise can ship agents that are useful, auditable, and economically viable. The breakthrough is not a single model. It is the system design that blends planning, retrieval, tools, human review, and governance into a repeatable factory for task automation.

If you are a CIO or COO in any regulated industry, the path is now clearer. Start with a few high impact workflows. Pair frontier models with tight tools and retrieval. Measure everything. Hold the line on governance and cost. Teach the organization to write, own, and improve templates. In twelve months, you can be where Citi is today, with agents doing real work and your teams asking for more.