How Citi Is Moving From Copilots To True AI Agents

An inside look at how Citi is moving beyond copilots to production AI agents in banking. We unpack the stack, identity and data guardrails, and the ROI math so CIOs can scale safely in high‑compliance environments.

Why agents, and why now

For years, large banks experimented with copilots that suggest, summarize, and draft. Those tools helped, but they required constant human steering and never touched the middle of work where decisions, handoffs, and multi‑system actions live. Citi’s emerging approach signals a new phase: agents that can plan, act across internal systems, and ask for help only when it matters.

In a recent leadership note, Citi publicly affirmed that its exploration of agentic AI inside the firm is well underway, with an emphasis on practical impact across service, fraud, markets operations, and more. That declaration matters because it sets expectations for autonomy, auditability, and scale inside one of the world’s most complex banks. See the leadership statement, Citi perspective on AI innovation.

This article reverse‑engineers what it takes for a tier‑1 bank to make that jump. We cover the agent stack, identity and data governance foundations, guardrails that pass regulatory muster, the ROI math that actually closes, and a pragmatic rollout path for CIOs. For background on the definitions, see the difference between agents and copilots.

From copilots to agents: what changed

Copilots help individuals. Agents help systems. The shift is less about models and more about architecture.

- Copilots are request in, response out. A human remains the orchestrator.

- Agents are goal in, plan out, actions executed, with the agent calling tools and services. Humans become supervisors or approvers.

Inside a bank, that difference unlocks use cases that copilots could not finish end to end:

- KYC case triage where the agent gathers evidence, proposes a risk score, and routes exceptions for adjudication.

- Controls attestations where the agent drafts evidence, checks control mappings, and creates tickets for missing artifacts.

- Developer productivity where the agent opens pull requests, runs test suites, and comments on failing cases before asking for a review.

Citi has signaled this progression in software development by complementing coding assistants with agentic tools that can execute tasks. The firm’s technology leaders have discussed moving beyond suggestions to agents that take actions for developers. See American Banker’s reporting on Citi’s agentic AI rollout.

The agent stack: the boring parts that make autonomy safe

Agent capability lives at the top of a stack. Reliability and trust live underneath. In a high‑compliance bank you will need each layer to be explicit.

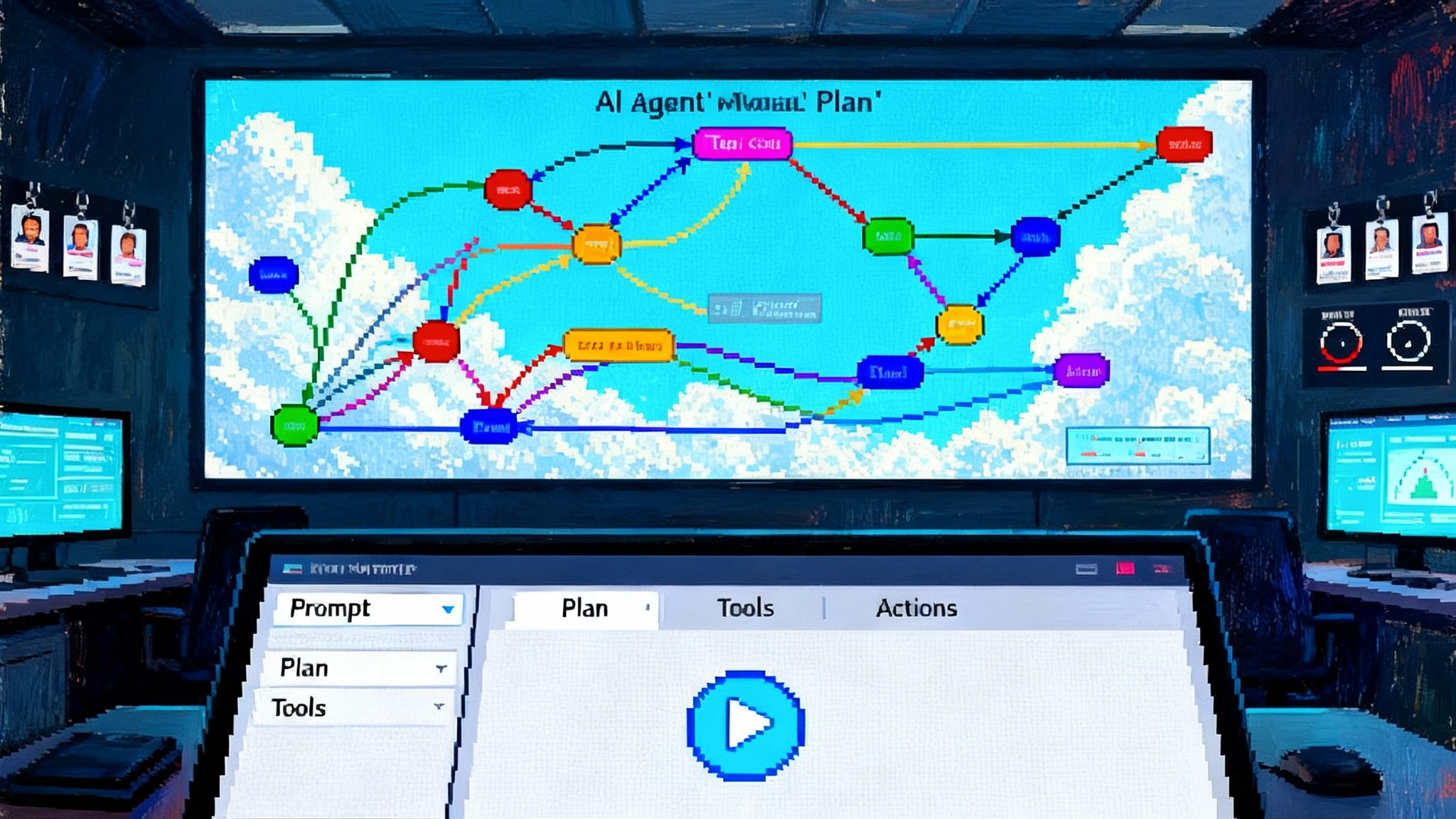

1) Orchestration and planning

- Planner and executor. Agents need a planning loop that decomposes goals into steps, chooses tools, and tracks progress. In production, the plan and each action must be recorded in a structured execution graph for audit and replay.

- Tooling surface. Define a narrow, typed interface for what the agent may do: read from data services, write to ticketing systems, post to chat with scoped channels, submit forms, query search indices. Tools should return structured outputs with error codes and redaction metadata.

- Memory policy. Distinguish between short‑term scratchpads per run, medium‑term task memory per ticket, and long‑term knowledge that requires approval to persist. Never let an agent write back to a knowledge base without provenance and review.

- Deterministic wrappers. Where possible, wrap fragile agent steps with deterministic services. For example, PDF table extraction should be a tested deterministic function rather than a prompt pattern.
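The tooling surface and execution graph described above can be sketched as follows. This is a minimal illustration under stated assumptions, not Citi's implementation or any framework's API: `ToolSurface`, `ExecutionGraph`, `ErrorCode`, and the `lookup_customer` tool are hypothetical names. Every call, including a rejected one, becomes a node linked to its parent step, so the run can be audited and replayed.

```python
from __future__ import annotations

import time
import uuid
from dataclasses import dataclass, field
from enum import Enum
from typing import Any, Callable, Optional


class ErrorCode(Enum):
    OK = "ok"
    NOT_ALLOWED = "not_allowed"   # tool is not on this agent's allow-list
    TOOL_ERROR = "tool_error"     # tool raised; recorded instead of crashing the run


@dataclass(frozen=True)
class ToolResult:
    """Structured tool output: payload, error code, and which fields were redacted."""
    code: ErrorCode
    data: dict[str, Any]
    redacted_fields: tuple[str, ...] = ()


@dataclass(frozen=True)
class Step:
    step_id: str
    parent_id: Optional[str]      # links each action to the step that motivated it
    tool: str
    args: dict[str, Any]
    result: ToolResult
    at: float


@dataclass
class ExecutionGraph:
    """Append-only record of every tool call in a run, for audit and replay."""
    run_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    steps: list[Step] = field(default_factory=list)

    def record(self, parent_id: Optional[str], tool: str,
               args: dict[str, Any], result: ToolResult) -> str:
        step_id = f"{self.run_id}:{len(self.steps)}"
        self.steps.append(Step(step_id, parent_id, tool, args, result, time.time()))
        return step_id


class ToolSurface:
    """A narrow allow-list of typed tools; every call, allowed or not, is recorded."""

    def __init__(self, tools: dict[str, Callable[..., ToolResult]], graph: ExecutionGraph):
        self._tools = tools
        self._graph = graph

    def call(self, tool: str, parent_id: Optional[str] = None,
             **args: Any) -> tuple[str, ToolResult]:
        fn = self._tools.get(tool)
        if fn is None:
            result = ToolResult(ErrorCode.NOT_ALLOWED, {"tool": tool})
        else:
            try:
                result = fn(**args)
            except Exception as exc:
                result = ToolResult(ErrorCode.TOOL_ERROR, {"error": type(exc).__name__})
        return self._graph.record(parent_id, tool, args, result), result


# Usage with a hypothetical read-only tool.
def lookup_customer(customer_id: str) -> ToolResult:
    return ToolResult(ErrorCode.OK, {"id": customer_id, "segment": "retail"}, ("tax_id",))


graph = ExecutionGraph()
tools = ToolSurface({"lookup_customer": lookup_customer}, graph)
first_id, found = tools.call("lookup_customer", customer_id="C-1")
_, denied = tools.call("wire_transfer", parent_id=first_id, amount=1_000_000)
```

The attempted `wire_transfer` is not executed, but it still lands in the graph with `NOT_ALLOWED`, which is exactly the kind of event a reviewer wants to see.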

2) Identity and entitlements

In a bank, an agent is a first‑class identity.

- Service principals for agents. Issue each agent its own identity rather than reusing user tokens. Tie that identity to a catalog entry that describes its purpose, owners, and allowed systems.

- Just‑enough privileges. Map agent roles to least privilege groups. Use just‑in‑time elevation with explicit reason codes for any temporary access.

- Session boundaries. Separate interactive user sessions from agent sessions. Log token issuance, scope, and expiry. Rotate keys often.

- Delegation clarity. When an agent acts on behalf of a user, record the user, the delegation reason, and the consent scope. Make that trace visible in the audit log.
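A small sketch of these identity rules, assuming scopes are plain strings: the agent has its own principal with least‑privilege base scopes, just‑in‑time elevations carry a reason code and a hard expiry, and delegated actions cannot exceed the user's consent. `AgentPrincipal`, `authorize`, the scope names, and the reason code are hypothetical; a real bank would back this with its identity provider rather than an in‑memory list.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional, Sequence


@dataclass(frozen=True)
class AgentPrincipal:
    """The agent's own identity, mirroring its catalog entry."""
    agent_id: str
    purpose: str
    owners: tuple[str, ...]
    base_scopes: frozenset[str]   # least-privilege grants


@dataclass(frozen=True)
class Elevation:
    """Just-in-time access: one scope, an explicit reason code, a hard expiry."""
    scope: str
    reason_code: str
    expires_at: datetime


@dataclass(frozen=True)
class Delegation:
    """Who the agent acts for, why, and within what consent scope."""
    user_id: str
    reason: str
    consent_scopes: frozenset[str]


AUDIT_LOG: list[dict] = []


def authorize(principal: AgentPrincipal, scope: str, now: datetime,
              elevations: Sequence[Elevation] = (),
              delegation: Optional[Delegation] = None) -> bool:
    active = [e for e in elevations if e.scope == scope and e.expires_at > now]
    granted = scope in principal.base_scopes or bool(active)
    # Acting on a user's behalf never exceeds what that user consented to.
    if delegation is not None and scope not in delegation.consent_scopes:
        granted = False
    AUDIT_LOG.append({
        "agent": principal.agent_id,
        "scope": scope,
        "granted": granted,
        "elevation_reasons": [e.reason_code for e in active],
        "on_behalf_of": delegation.user_id if delegation else None,
        "delegation_reason": delegation.reason if delegation else None,
        "at": now.isoformat(),
    })
    return granted


# Usage with hypothetical scope names and reason codes.
now = datetime(2024, 1, 1, tzinfo=timezone.utc)
kyc_agent = AgentPrincipal("agent-kyc-triage", "KYC alert triage",
                           ("kyc-platform-team",), frozenset({"cases:read", "tickets:write"}))
jit = Elevation("sanctions:read", "RC-ESCALATED-ALERT", now + timedelta(minutes=15))
on_behalf = Delegation("u-123", "analyst requested case summary", frozenset({"cases:read"}))
```

Every decision, granted or denied, is appended to the audit log with the delegating user and any elevation reason attached.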

3) Data governance and retrieval

Agents amplify whatever data they touch. You need strong rails.

- Data zones. Place data into zones by sensitivity: public, internal, confidential, restricted. Agents are bound to zones. Crossing zones triggers reviews or policy checks.

- Catalog and lineage. Every dataset the agent can reach must exist in a catalog with owners, SLAs, and lineage. Agent prompts should include dataset quality flags so the agent can prefer higher‑quality sources.

- Retrieval that respects policy. Use retrieval to limit tokens and scope the world, but only from approved indices. Enforce attribute‑based access control at retrieval time, not just at index build time. For patterns that scale, see enterprise retrieval best practices.

- Redaction at the edges. Apply consistent PII redaction and masking on both ingestion and egress. Mark redacted spans so humans know what was hidden.
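The rails above can be illustrated with a retrieval‑time filter and an edge redactor. This is a simplified sketch under stated assumptions: policy is checked on every search hit (dataset approval, zone ceiling, and a basic attribute‑based rule where the agent must hold every attribute a document requires), and redaction uses same‑length masking so the returned spans line up with the masked text. `Doc`, `retrieve`, `redact`, and the account‑number regex are hypothetical; real PII detection needs far more than one pattern.

```python
from __future__ import annotations

import re
from dataclasses import dataclass
from enum import IntEnum
from typing import Iterable


class Zone(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


@dataclass(frozen=True)
class Doc:
    doc_id: str
    zone: Zone
    dataset: str                  # catalog entry, so evidence carries lineage
    attributes: frozenset[str]    # e.g. {"region:us"}; required of any reader
    text: str


def retrieve(hits: Iterable[Doc], max_zone: Zone, agent_attrs: frozenset[str],
             approved_datasets: set[str]) -> list[Doc]:
    """Apply policy to search hits at query time, not only at index build time."""
    allowed = []
    for doc in hits:
        if doc.dataset not in approved_datasets:
            continue  # unapproved source never reaches the prompt
        if doc.zone > max_zone:
            continue  # zone crossing goes through a separate review path
        if not doc.attributes <= agent_attrs:
            continue  # simplified ABAC: agent must hold every attribute the doc requires
        allowed.append(doc)
    return allowed


ACCOUNT_NUMBER = re.compile(r"\b\d{8,12}\b")  # hypothetical PII pattern


def redact(text: str) -> tuple[str, list[tuple[int, int]]]:
    """Mask matches with same-length filler and return the masked spans."""
    spans = [m.span() for m in ACCOUNT_NUMBER.finditer(text)]
    masked = ACCOUNT_NUMBER.sub(lambda m: "*" * len(m.group()), text)
    return masked, spans


docs = [
    Doc("d1", Zone.INTERNAL, "kyc_cases", frozenset({"region:us"}), "case notes"),
    Doc("d2", Zone.RESTRICTED, "kyc_cases", frozenset(), "restricted draft"),
    Doc("d3", Zone.INTERNAL, "web_scrape", frozenset(), "forum post"),
    Doc("d4", Zone.INTERNAL, "kyc_cases", frozenset({"region:eu"}), "eu case"),
]
visible = retrieve(docs, Zone.CONFIDENTIAL, frozenset({"region:us"}), {"kyc_cases"})
masked, spans = redact("Account 123456789 flagged")
```

Keeping the spans alongside the masked text is what lets a reviewer see that something was hidden, and where.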

4) Runtime controls and observability

- Per‑run budgets. Every agent run enforces time and token caps. If the cap is hit, the agent must produce a partial result and a help request.

- Kill switches and canaries. Support instant disabling by owner, tool, model, or dataset. Route a fixed percentage of runs through a shadow or canary environment.

- Structured telemetry. Capture prompts, tool calls, model responses, costs, durations, and final outcomes as a graph. Redact sensitive payloads but keep structure. You cannot govern what you cannot see.
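Per‑run budgets and kill switches reduce to a few checks in the execution loop. The sketch below assumes hard caps only (no soft time threshold) and a plan given as a list of steps with token estimates; `RunBudget`, `KillSwitches`, and `run_agent` are hypothetical names. When a cap or switch trips, the loop stops and returns the completed work plus a help request instead of failing silently. The 140K token cap in the usage mirrors the 120K input plus 20K output budget from the ROI section.

```python
from __future__ import annotations

import time
from dataclasses import dataclass, field


class BudgetExceeded(Exception):
    """Raised when a per-run cap is hit."""


@dataclass
class RunBudget:
    max_seconds: float
    max_tokens: int
    max_tool_calls: int
    started: float = field(default_factory=time.monotonic)
    tokens: int = 0
    tool_calls: int = 0

    def charge(self, tokens: int = 0, tool_calls: int = 0) -> None:
        self.tokens += tokens
        self.tool_calls += tool_calls
        if self.tokens > self.max_tokens:
            raise BudgetExceeded("token cap reached")
        if self.tool_calls > self.max_tool_calls:
            raise BudgetExceeded("tool-call cap reached")
        if time.monotonic() - self.started > self.max_seconds:
            raise BudgetExceeded("time cap reached")


@dataclass
class KillSwitches:
    """Switched-off (kind, name) pairs, e.g. ("tool", "open_ticket")."""
    off: set[tuple[str, str]] = field(default_factory=set)

    def disable(self, kind: str, name: str) -> None:
        self.off.add((kind, name))

    def allows(self, **targets: str) -> bool:
        return all((kind, name) not in self.off for kind, name in targets.items())


def run_agent(steps: list[dict], budget: RunBudget, switches: KillSwitches) -> dict:
    """Execute planned steps; on a cap or kill switch, return a partial result and a help request."""
    done: list[str] = []
    for step in steps:
        if not switches.allows(tool=step["tool"], model=step["model"]):
            return {"status": "halted", "done": done,
                    "help": f"kill switch blocks {step['tool']} / {step['model']}"}
        try:
            budget.charge(tokens=step["tokens"], tool_calls=1)
        except BudgetExceeded as exc:
            return {"status": "partial", "done": done,
                    "help": f"{exc}; {len(steps) - len(done)} steps remain"}
        done.append(step["tool"])  # a real runtime would execute the tool here
    return {"status": "complete", "done": done, "help": None}


plan = [
    {"tool": "search_cases", "model": "model-a", "tokens": 50_000},
    {"tool": "summarize", "model": "model-a", "tokens": 60_000},
    {"tool": "open_ticket", "model": "model-a", "tokens": 30_000},
]
full = run_agent(plan, RunBudget(240, 140_000, 12), KillSwitches())
tight = run_agent(plan, RunBudget(240, 100_000, 12), KillSwitches())
switches = KillSwitches()
switches.disable("tool", "summarize")
halted = run_agent(plan, RunBudget(240, 140_000, 12), switches)
```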

Guardrails that satisfy regulators without killing autonomy

Regulated workflows need predictable control points. The goal is not to remove humans. The goal is to place them where judgment and accountability matter most.

Human‑in‑the‑loop patterns

- Breakpoints. Insert breakpoints where a human must approve, decline, or edit. Examples: final submission to a regulatory system, a policy exception, a customer communication.

- Dynamic risk scoring. Use a risk model to decide if the workflow needs a breakpoint. Factors include customer segment, transaction size, data quality, and novelty of the agent’s plan.

- Dual controls. For material actions, require a second human approver if the agent’s confidence is low or the monetary exposure is high.

- Counterfactual review. For selected runs, automatically generate a simple alternative plan and flag any decision points for reviewer attention.
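Dynamic risk scoring and dual controls can share one routing function. The weights, thresholds, and segment names below are purely hypothetical placeholders; in practice they would come from a calibrated, validated risk model. The sketch returns how many human approvers an action needs: zero (straight through), one (breakpoint), or two (dual control).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Proposal:
    segment: str          # customer segment
    amount_usd: float     # monetary exposure of the action
    data_quality: float   # 0.0 poor to 1.0 clean, e.g. from catalog quality flags
    plan_novelty: float   # 0.0 familiar plan shape to 1.0 never seen before
    confidence: float     # agent confidence, 0.0 to 1.0


# Hypothetical weights and thresholds; real values would be calibrated and validated under MRM.
SEGMENT_RISK = {"retail": 0.1, "private_bank": 0.3, "correspondent": 0.5}
BREAKPOINT_AT = 0.4
DUAL_CONTROL_AMOUNT = 1_000_000
LOW_CONFIDENCE = 0.6


def risk_score(p: Proposal) -> float:
    size = min(p.amount_usd / DUAL_CONTROL_AMOUNT, 1.0)
    return (0.3 * SEGMENT_RISK.get(p.segment, 0.5)   # unknown segments score as risky
            + 0.3 * size
            + 0.2 * (1.0 - p.data_quality)
            + 0.2 * p.plan_novelty)


def required_approvers(p: Proposal) -> int:
    """0 = straight through, 1 = breakpoint, 2 = dual control."""
    if p.amount_usd >= DUAL_CONTROL_AMOUNT:
        return 2  # high exposure always needs two humans
    if risk_score(p) >= BREAKPOINT_AT:
        return 2 if p.confidence < LOW_CONFIDENCE else 1
    return 1 if p.confidence < LOW_CONFIDENCE else 0
```

Routine, familiar, low‑value cases pass straight through, while novel plans on risky segments get a breakpoint, and a second approver when confidence is also low.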

Policy and compliance integration

- MRM alignment. Treat each agent as a model under your model risk management framework. Register the agent, its purpose, its toolset, its model versions, and its performance metrics. Maintain a list of material changes and attach validation results. For a deeper dive, read model risk management for LLMs.

- Explainability by construction. Store the plan, evidence snippets, and tool outputs that led to the result. Humans should not need to reverse engineer a transcript to reconstruct a decision.

- Content safety that fits the domain. Enforce bank‑specific red lines: no speculation about customers, no free‑form product promises, no unapproved disclosures. Filters tuned to these domain rules tend to catch violations that generic safety filters miss.

- Immutable logs. Write signed, tamper‑evident logs for critical steps, including approvals and overrides. If an examiner asks how a decision happened, you produce the execution graph and the log in minutes.
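One common way to make a log tamper‑evident is a hash chain, where each entry's MAC covers both its event and the previous entry's MAC. This sketch uses HMAC‑SHA256 from the standard library as a stand‑in for digital signatures; a production system might use asymmetric signing keys held in an HSM and write‑once storage. `append_entry`, `verify`, and the key are hypothetical. Editing, reordering, or removing an interior entry breaks verification; detecting truncation of the newest entries additionally requires anchoring the latest MAC somewhere outside the log.

```python
import hashlib
import hmac
import json

SIGNING_KEY = b"demo-key"  # hypothetical; real keys live in an HSM, never in code


def _mac(prev_mac: str, event: dict) -> str:
    body = json.dumps({"prev": prev_mac, "event": event}, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, body, hashlib.sha256).hexdigest()


def append_entry(log: list, event: dict) -> dict:
    """Append an event whose MAC covers the event and the previous entry's MAC."""
    prev_mac = log[-1]["mac"] if log else "genesis"
    entry = {"prev": prev_mac, "event": event, "mac": _mac(prev_mac, event)}
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Recompute every MAC in order; any edited, reordered, or missing interior entry fails."""
    prev_mac = "genesis"
    for entry in log:
        if entry["prev"] != prev_mac:
            return False
        if not hmac.compare_digest(_mac(prev_mac, entry["event"]), entry["mac"]):
            return False
        prev_mac = entry["mac"]
    return True


log: list = []
append_entry(log, {"type": "approval", "run": "r-1", "approver": "u-42"})
append_entry(log, {"type": "override", "run": "r-1", "approver": "u-77"})
append_entry(log, {"type": "submission", "run": "r-1", "target": "case-system"})
```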

Sandboxes and staged rollout

- Agent simulators. Build a simulator with mocked tools and synthetic data to test plans, timeouts, and error recovery without touching production.

- Shadow mode. Let the agent run in parallel with humans and compare outcomes. Measure acceptance rates, edit distance, and exception patterns.

- Gradual write access. Start with read‑only. Then allow the agent to propose actions. Then allow limited writes behind approvals. Finally allow direct writes with dynamic risk checks.
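The four rollout stages map naturally onto an ordered autonomy level that the runtime consults before any write. A minimal sketch, assuming every action is classified as either a read or a write and that approval and risk checks are resolved elsewhere; `Autonomy` and `decide` are hypothetical names.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    READ_ONLY = 0
    PROPOSE = 1
    WRITE_WITH_APPROVAL = 2
    DIRECT_WRITE = 3


def decide(level: Autonomy, action: str, approved: bool, risk_ok: bool) -> str:
    """Return what the runtime does with an action at a given autonomy level."""
    if action == "read":
        return "execute"
    # Every non-read action below this point is treated as a write.
    if level == Autonomy.READ_ONLY:
        return "block"
    if level == Autonomy.PROPOSE:
        return "propose"  # recorded as a suggestion; a human performs the write
    if level == Autonomy.WRITE_WITH_APPROVAL:
        return "execute" if approved else "await_approval"
    # DIRECT_WRITE still passes through the dynamic risk check before acting.
    return "execute" if risk_ok else "await_approval"
```

Because the levels are ordered integers, promoting an agent is a one‑step change that is easy to log and easy to reverse.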

The ROI math that actually closes

CIOs do not need a glossy ROI. They need a budget they can enforce and a scoreboard that improves every month. Anchor everything to three numbers: cost per successful task, time to completion, and error rate.

Step 1: Establish baselines

Pick a well‑bounded workflow. Example: KYC alert triage.

- Human baseline: 25 minutes average per alert. 8 percent rework due to missing evidence.

- Monthly volume: 30,000 alerts. Loaded cost per hour for a senior analyst: 110 dollars.

Baseline monthly cost: 30,000 alerts × 25 minutes ÷ 60 × 110 dollars ≈ 1,375,000 dollars.

Step 2: Define budgets and caps

Create budgets that the runtime can enforce:

- Token budget per run: 120K input, 20K output across all steps, hard cap. Cached retrieval reduces input tokens by 40 percent for repeats.

- Time budget per run: 180 seconds soft, 240 seconds hard. Past that, the agent must escalate with a structured help request.

- Tool budget: maximum 12 tool calls, no more than 2 writes.

- Cost cap: 0.18 dollars per run for model usage plus 0.03 dollars per run for vector queries and function calls. If breached, the run ends with a partial result.

Step 3: Model the new unit economics

Assume after two months of tuning:

- 68 percent of alerts are fully handled by the agent with single‑click approval.

- 24 percent require edits, adding 6 minutes of human time.

- 8 percent are escalated to full human handling.

Average human time per alert becomes 25 minutes × 0.08 + 6 minutes × 0.24 = 3.44 minutes, treating single‑click approvals as negligible. Round that up to 3.5 minutes for conservatism.

Monthly human time: 30,000 × 3.5 ÷ 60 ≈ 1,750 hours. Human cost: ≈ 192,500 dollars.

Agent runtime cost: 30,000 × 0.21 dollars ≈ 6,300 dollars.

Total monthly cost: ≈ 198,800 dollars, down from 1,375,000 dollars, a gross saving of about 1,176,200 dollars. Even if you haircut those gains by 50 percent for conservatism and add 150,000 dollars per month for platform and validation overhead, you still bank roughly 438,000 dollars in monthly savings (1,176,200 × 0.5 − 150,000 ≈ 438,100).
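Steps 1 to 3 are simple enough to keep as a small, reviewable model rather than a spreadsheet. The sketch below reuses the worked example's inputs and rounds human minutes per alert up to the nearest half minute, as the example does; `Workflow`, `monthly_costs`, and `conservative_savings` are illustrative names, and the 50 percent haircut and 150,000 dollar overhead are the same assumptions stated above.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Workflow:
    monthly_volume: int = 30_000
    baseline_minutes: float = 25.0
    loaded_rate_per_hour: float = 110.0
    share_edited: float = 0.24
    edit_minutes: float = 6.0
    share_escalated: float = 0.08
    agent_cost_per_run: float = 0.18 + 0.03   # model usage + vector queries and function calls


def monthly_costs(w: Workflow) -> dict[str, float]:
    baseline = w.monthly_volume * w.baseline_minutes / 60 * w.loaded_rate_per_hour
    # Single-click approvals are treated as zero human time.
    minutes = w.baseline_minutes * w.share_escalated + w.edit_minutes * w.share_edited
    minutes = math.ceil(minutes * 2) / 2  # round up to the nearest half minute
    human = w.monthly_volume * minutes / 60 * w.loaded_rate_per_hour
    agent = w.monthly_volume * w.agent_cost_per_run
    return {"baseline": baseline, "minutes_per_alert": minutes,
            "human": human, "agent": agent, "total": human + agent}


def conservative_savings(costs: dict[str, float], haircut: float = 0.5,
                         platform_overhead: float = 150_000.0) -> float:
    gross = costs["baseline"] - costs["total"]
    return gross * (1 - haircut) - platform_overhead


costs = monthly_costs(Workflow())
print({k: round(v, 2) for k, v in costs.items()})
print(round(conservative_savings(costs)))
```

Changing one input, such as the escalation share, and rerunning is a quick way to see which assumption the business case is most sensitive to.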

Step 4: Track outcome quality

- Acceptance rate. Aim for 80 percent acceptance within six months.

- Edit distance. Measure how much humans change agent output. Use this to guide prompt and tool improvements.

- False positive and false negative rates. Tie these to risk appetite and set gates that must be met before each expansion.
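These quality metrics can be computed from reviewed runs. The sketch below assumes each review records the agent's draft, the final human version, whether it was accepted unedited, the agent's flag, and the adjudicated outcome. Edit distance here is word‑level Levenshtein normalized by the longer text, one reasonable choice among several. `Review`, `quality_report`, and the 2 percent false‑negative gate are hypothetical; the 80 percent acceptance target comes from the text.

```python
from __future__ import annotations

from dataclasses import dataclass


def edit_distance(a: list[str], b: list[str]) -> int:
    """Word-level Levenshtein distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, wa in enumerate(a, start=1):
        cur = [i]
        for j, wb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                 # delete wa
                           cur[j - 1] + 1,              # insert wb
                           prev[j - 1] + (wa != wb)))   # substitute if different
        prev = cur
    return prev[-1]


@dataclass(frozen=True)
class Review:
    agent_text: str
    final_text: str
    accepted: bool          # approved without edits
    agent_flagged: bool     # agent marked the alert as suspicious
    truly_suspicious: bool  # adjudicated outcome


def quality_report(reviews: list[Review]) -> dict[str, float]:
    n = len(reviews)
    edits = []
    for r in reviews:
        a, b = r.agent_text.split(), r.final_text.split()
        edits.append(edit_distance(a, b) / max(len(a), len(b), 1))
    negatives = [r for r in reviews if not r.truly_suspicious]
    positives = [r for r in reviews if r.truly_suspicious]
    return {
        "acceptance_rate": sum(r.accepted for r in reviews) / n,
        "mean_normalized_edit": sum(edits) / n,
        "false_positive_rate": sum(r.agent_flagged for r in negatives) / max(len(negatives), 1),
        "false_negative_rate": sum(not r.agent_flagged for r in positives) / max(len(positives), 1),
    }


def passes_gates(report: dict[str, float], min_acceptance: float = 0.8,
                 max_fnr: float = 0.02) -> bool:
    return report["acceptance_rate"] >= min_acceptance and report["false_negative_rate"] <= max_fnr


reviews = [
    Review("close alert no match", "close alert no match", True, False, False),
    Review("escalate possible match", "escalate confirmed match", False, True, True),
    Review("close alert", "escalate alert", False, False, True),
]
report = quality_report(reviews)
```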

Step 5: Tie budgets to business SLOs

- SLO 1: 95 percent of runs complete under 180 seconds.

- SLO 2: 99 percent of runs stay under 0.25 dollars.

- SLO 3: 98 percent of actions have traceable evidence links in the execution graph.

When SLOs slip, the platform throttles or falls back to safer modes. Autonomy is earned, not assumed.
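The three SLOs can be evaluated over a window of recent runs and mapped to an operating mode. The thresholds below are the ones listed above; the mapping from a missed SLO to "throttled" or "approval required" is a hypothetical policy, as are the `RunRecord`, `Mode`, `slo_status`, and `choose_mode` names. Missing evidence links is treated as the most serious miss because it undermines auditability.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


@dataclass(frozen=True)
class RunRecord:
    seconds: float
    cost_usd: float
    actions: int
    actions_with_evidence: int


class Mode(Enum):
    NORMAL = "normal"
    THROTTLED = "throttled"            # fewer concurrent runs
    SAFE = "approval_required"         # every write goes back behind human approval


def slo_status(runs: list[RunRecord]) -> dict[str, bool]:
    n = len(runs)
    total_actions = sum(r.actions for r in runs)
    traced = sum(r.actions_with_evidence for r in runs)
    return {
        "latency": sum(r.seconds < 180 for r in runs) / n >= 0.95,
        "cost": sum(r.cost_usd < 0.25 for r in runs) / n >= 0.99,
        "traceability": (traced / total_actions if total_actions else 1.0) >= 0.98,
    }


def choose_mode(status: dict[str, bool]) -> Mode:
    if not status["traceability"]:
        return Mode.SAFE  # audit gap: fall back to the safest mode
    if not (status["latency"] and status["cost"]):
        return Mode.THROTTLED
    return Mode.NORMAL


healthy = [RunRecord(100, 0.20, 2, 2)] * 100
slow = [RunRecord(200, 0.20, 2, 2)] * 10 + [RunRecord(100, 0.20, 2, 2)] * 90
untraced = [RunRecord(100, 0.20, 2, 1)] * 100
```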

Operating model: who owns what

Autonomous agents are products. Treat them that way.

- Product owner. Owns the problem statement, adoption targets, and user experience. Decides where breakpoints live.

- Engineering. Owns the agent runtime, tools, and reliability. Maintains the deterministic wrappers and simulators.

- Data governance. Owns data zones, catalogs, retention, and redaction policy. Signs off on new retrieval sources.

- Security. Owns identity, secrets, and entitlement reviews. Runs chaos days where tokens are rotated and access is intentionally broken.

- Model risk and validation. Owns registration, validation, challenger models, and change controls. Performs periodic backtesting on sampled runs.

- Compliance and legal. Owns product rules. Reviews outbound communication templates and disclosure language.

Establish a request‑for‑autonomy process. Teams can ask to increase the agent’s write scope after meeting performance gates for four consecutive weeks. The process is public inside the firm to promote consistency.
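The four‑consecutive‑weeks rule is easy to encode so that every team is judged the same way. A minimal sketch, assuming weekly scores are stored oldest first and that the gates are acceptance, false‑negative rate, and SLO attainment; the 2 percent false‑negative limit and all names here are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class WeeklyScore:
    week: str
    acceptance_rate: float
    false_negative_rate: float
    slos_met: bool


def met_gates(s: WeeklyScore, min_acceptance: float = 0.8, max_fnr: float = 0.02) -> bool:
    return s.acceptance_rate >= min_acceptance and s.false_negative_rate <= max_fnr and s.slos_met


def eligible_for_more_autonomy(history: List[WeeklyScore], weeks_required: int = 4) -> bool:
    """True only if the most recent `weeks_required` weeks (oldest first in history) all met the gates."""
    recent = history[-weeks_required:]
    return len(recent) == weeks_required and all(met_gates(s) for s in recent)


ok = WeeklyScore("ok", 0.85, 0.01, True)
missed = WeeklyScore("missed", 0.70, 0.01, True)
```

A single missed week resets the clock, which keeps promotions conservative and easy to explain.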

A reference blueprint for CIOs

Use this blueprint to structure your first three quarters of production agents.

Quarter 1: Foundation and a single bounded pilot

- Pick one workflow with measurable baselines and a clear owner. Avoid customer‑facing first.

- Build the agent with a minimal toolset, strong observability, and read‑only access.

- Stand up the simulator and shadow mode. Prove acceptance above 60 percent in shadow.

- Implement per‑run budgets and global kill switches.

- Register the agent in your model inventory. Write the one‑page risk card that explains purpose, tools, data, and human controls.

Quarter 2: Human‑in‑the‑loop and limited writes

- Introduce breakpoints and dual controls on high‑risk actions.

- Allow the agent to write into non‑critical systems behind approvals, for example, creating tickets or drafting case summaries.

- Run red‑team scenarios such as prompt injection, tool confusion, and stale‑index attacks. Validate that the agent fails safe and that the logs tell a coherent story.

- Start cost and time SLO enforcement. Publish weekly scoreboards to stakeholders.

Quarter 3: Scale to a second domain and increase autonomy

- Clone the platform to a second workflow that stresses different tools, such as controls attestations.

- Introduce dynamic risk scoring to reduce unnecessary approvals.

- Allow direct writes in narrow scopes with real‑time monitoring and automatic rollbacks.

- Begin A/B testing of model choices and retrieval strategies. Use challenger models to detect drift.

Lessons emerging from Citi’s approach

While every bank has unique constraints, several lessons translate well.

- Autonomy is a permission. Start with agents that propose, then allow narrow writes, and level up only after sustained performance. This keeps audit conversations simple.

- Identity first, not last. Create service principals for agents and bake least privilege into tool wrappers. Retro‑fitting identity later is slow and risky.

- Tools beat prompts. When a step is critical, make it a deterministic function with defined error codes. Use LLMs to orchestrate, not to improvise high‑impact writes.

- Budgets are your governor. Enforce time, token, and tool caps per run. When the agent hits a cap, it should pause and ask for help with a structured diff of what remains.

- Observability is your safety blanket. Store execution graphs with redacted payloads. You need them for debugging, model risk validation, and regulatory reviews.

- Retrieval is policy. Build indices only from approved sources. Enforce access at retrieval time. Tag evidence with dataset lineage so reviewers can trust the trail.

- Shadow first, then small writes. Measure acceptance and edit distance in shadow. Only then let the agent write to production with approvals. Expand incrementally.

- Train the humans. Supervisors need dashboards that show plan, confidence, evidence, and cost so they can approve quickly. Without that, agents become inbox noise.

- Keep the vendor mix simple. Standardize on one orchestration runtime per line of business, one retrieval service, and a small set of models. Complexity torpedoes validation.

- Reinvest gains. Use early savings to fund data cleanup, deterministic tool building, and better simulators. These investments compound.

Common pitfalls to avoid

- Letting a copilot masquerade as an agent. If it cannot act without constant human prompting, it will not move the cost needle.

- Unlimited context windows. Big prompts feel powerful but raise costs and risk. Retrieval with tight scopes works better.

- Silent failures. If the agent cannot reach a system, it must fail loud with a clear next step. Silent retries burn time and tokens.

- PII leaks through logs. Redact at the edges and carry redaction marks through the entire pipeline so reviewers know what was hidden.

- Over‑engineered approvals. If every step needs sign‑off, you built a slower copilot. Use dynamic risk to place humans only where they matter.
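The "fail loud" rule from the silent‑failures pitfall can be enforced with a small wrapper: retry a bounded number of times on transient errors, then raise a structured exception that names the tool, the last error, and a concrete next step. `ToolUnavailable`, `call_with_bounded_retry`, and the default next step are hypothetical; which exceptions count as transient is an assumption you would tune per system.

```python
import time


class ToolUnavailable(Exception):
    """A loud, structured failure that a supervisor dashboard can display."""

    def __init__(self, tool: str, attempts: int, last_error: str, next_step: str):
        super().__init__(f"{tool} failed after {attempts} attempts ({last_error}); "
                         f"next step: {next_step}")
        self.tool = tool
        self.attempts = attempts
        self.last_error = last_error
        self.next_step = next_step


def call_with_bounded_retry(fn, tool: str, max_attempts: int = 2, backoff_s: float = 0.5,
                            next_step: str = "route case to human queue"):
    """Retry transient errors a bounded number of times, then fail loudly."""
    last_error = ""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except (ConnectionError, TimeoutError) as exc:
            last_error = f"{type(exc).__name__}: {exc}"
            if attempt < max_attempts:
                time.sleep(backoff_s * attempt)  # short linear backoff between tries
    raise ToolUnavailable(tool, max_attempts, last_error, next_step)


attempts = []


def unreachable_system():
    attempts.append(1)
    raise ConnectionError("case system unreachable")


try:
    call_with_bounded_retry(unreachable_system, "case_system", backoff_s=0.0)
except ToolUnavailable as err:
    failure = err
```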

The road ahead

Agentic AI in banks will not be a big bang. It will be a series of expansions in scope, each justified by tight ROI math and protected by identity, data governance, and auditable guardrails. Citi’s direction shows that the shift is not theoretical. It is a practical change in how complex work gets done when autonomy is paired with controls that regulators can understand.

For CIOs, the mandate is clear. Pick one workflow with high volume and clear rules. Build the runtime once with budgets, identity, and observability baked in. Prove real savings with human‑in‑the‑loop safety. Then scale with discipline. The result is not a bot that chats. It is a colleague that plans, acts, and asks for help when it should. That is how copilots turn into agents inside a bank that cannot afford mistakes, yet cannot afford to wait.