How OpenAI–Nvidia’s 10GW bet unlocks true AI agents

A staged buildout of 10 gigawatts of Nvidia-powered capacity, backed by up to 100 billion dollars, could push agentic AI from pilot to production. See how the compute surge intersects with GPT-5 routing, unit economics, energy supply, real product patterns, and vendor risk so you can plan your next quarter with confidence.

The 100 billion dollar, 10 GW catalyst for agentic AI

OpenAI and Nvidia set a new bar for AI infrastructure with a letter of intent to deploy at least 10 gigawatts of Nvidia systems for OpenAI’s next-generation stack, backed by up to 100 billion dollars in staged investment as each gigawatt comes online. The first 1 GW tranche is targeted for the second half of 2026 on Vera Rubin systems. According to the Nvidia partnership announcement, these are AI factories sized like utility-scale power projects, built to train and run the models that drive autonomous agents at enterprise scale.

This move follows a broader shift from single-cloud dependence toward a network of compute partners. The goal is clear: secure predictable capacity and co-optimize hardware, systems software, and training schedules for the next wave of intelligent agents. See our background on agent orchestration patterns for how this capacity gets translated into dependable workflows.

Why 10 GW matters specifically for agents

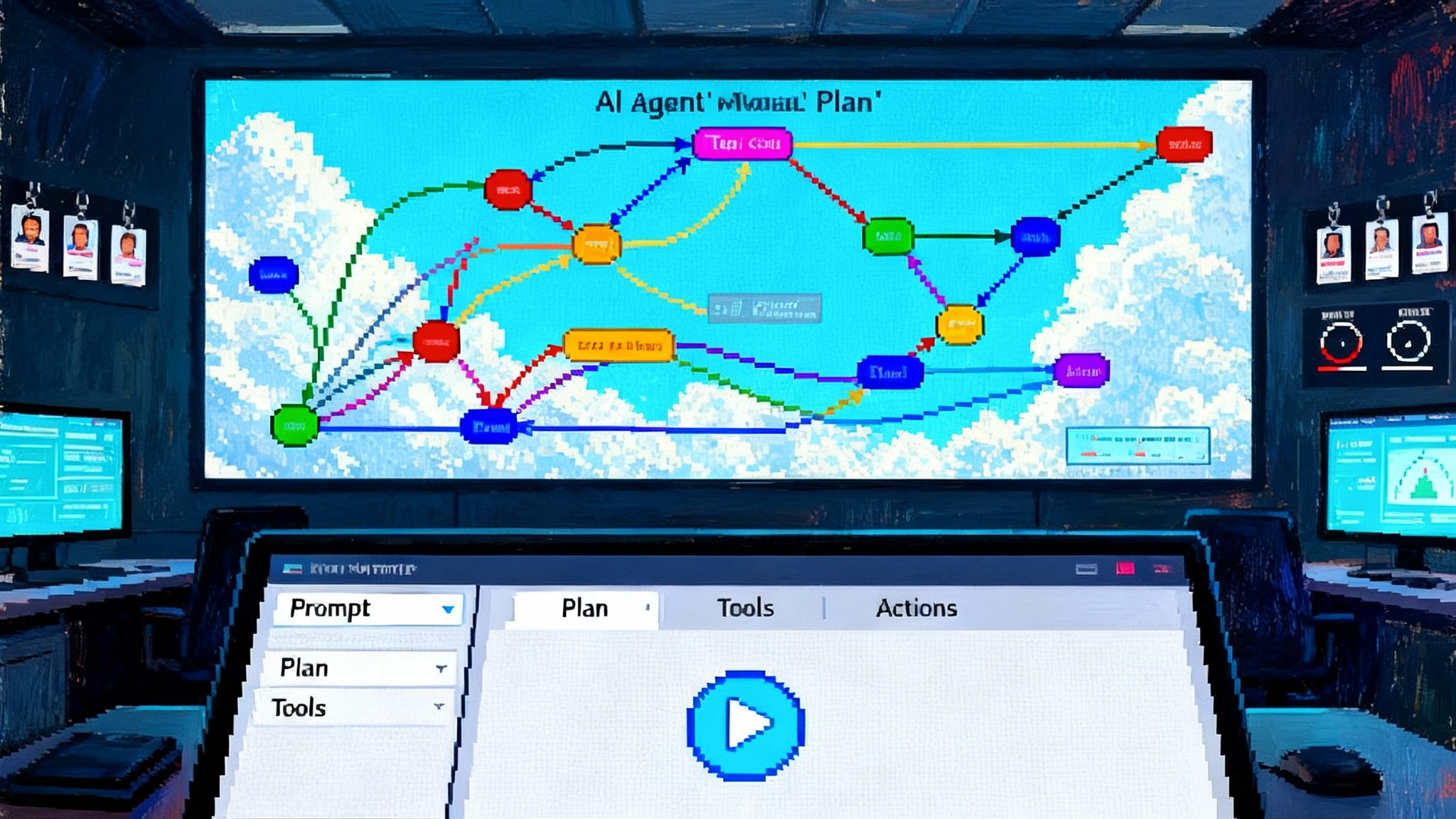

Bigger clusters do not just train bigger models. They unlock behaviors and service levels agentic workflows require: long-horizon reasoning, reliable memory, and multi-application action pipelines that stay predictable under heavy load. GPT-5 introduces a unified router that chooses between a fast path and a deeper thinking mode, applying expensive reasoning only when the task demands it. The combination of routing and abundant headroom is what changes the economics.

1) Long-horizon reasoning becomes a standard setting

Enterprise tasks are projects, not prompts. GPT-5’s router keeps most work on the fast path and escalates only the hard subproblems. That only works at scale if bursts of deep reasoning do not spike latency for other users. The 10 GW plan is essentially headroom insurance that makes long-horizon reasoning viable for thousands of concurrent agent runs, not just demos.
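To make the fast-path and escalation idea concrete, here is a minimal sketch of threshold-based routing. It is not GPT-5's actual router, whose internals are not described here. The `Step` type, the `difficulty` score, and the `0.7` threshold are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Step:
    description: str
    difficulty: float  # hypothetical score in [0, 1]; not a real GPT-5 signal


def route(step: Step, threshold: float = 0.7) -> str:
    """Send a step to the deep path only when its score crosses the threshold."""
    return "deep" if step.difficulty >= threshold else "fast"


def plan_routes(steps: List[Step], threshold: float = 0.7) -> List[Tuple[str, str]]:
    """Pair each step description with the path it would take."""
    return [(s.description, route(s, threshold)) for s in steps]


def escalation_rate(steps: List[Step], threshold: float = 0.7) -> float:
    """Fraction of steps that escalate to the deep path."""
    if not steps:
        return 0.0
    deep = sum(1 for s in steps if route(s, threshold) == "deep")
    return deep / len(steps)
```

Lowering the threshold raises the escalation rate, which is the dial that extra headroom makes less risky to turn.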

2) Persistent memory crosses from toy to tool

Agents must remember goals, preferences, guardrails, and evolving project context across days or weeks. That requires large, low-latency vector indexes, knowledge graphs, and safe-write stores adjacent to inference. Extra compute supports background distillation and continuous evaluation so memory becomes a living system that learns from outcomes. For design guidance, review LLM memory architectures.
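As a rough illustration of a safe-write store next to a vector index, the sketch below keeps memory items in a plain list and ranks them by cosine similarity. The `ALLOWED_KINDS` policy, the `MemoryItem` fields, and the tiny hand-written vectors are assumptions. A production system would use a real embedding model and a dedicated index.

```python
import math
from dataclasses import dataclass
from typing import List

# Hypothetical write policy: only these categories may become long-term memory.
ALLOWED_KINDS = {"goal", "preference", "guardrail", "context"}


@dataclass
class MemoryItem:
    kind: str
    text: str
    vector: List[float]  # stand-in for an embedding from any provider


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class MemoryStore:
    def __init__(self) -> None:
        self.items: List[MemoryItem] = []

    def write(self, item: MemoryItem) -> bool:
        """Reject writes whose kind is not on the allow-list."""
        if item.kind not in ALLOWED_KINDS:
            return False
        self.items.append(item)
        return True

    def search(self, query_vector: List[float], k: int = 3) -> List[MemoryItem]:
        """Return the k stored items most similar to the query vector."""
        ranked = sorted(
            self.items,
            key=lambda it: cosine(query_vector, it.vector),
            reverse=True,
        )
        return ranked[:k]
```

Because items are plain text plus vectors, the store can be exported and re-indexed elsewhere, which keeps memory portable.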

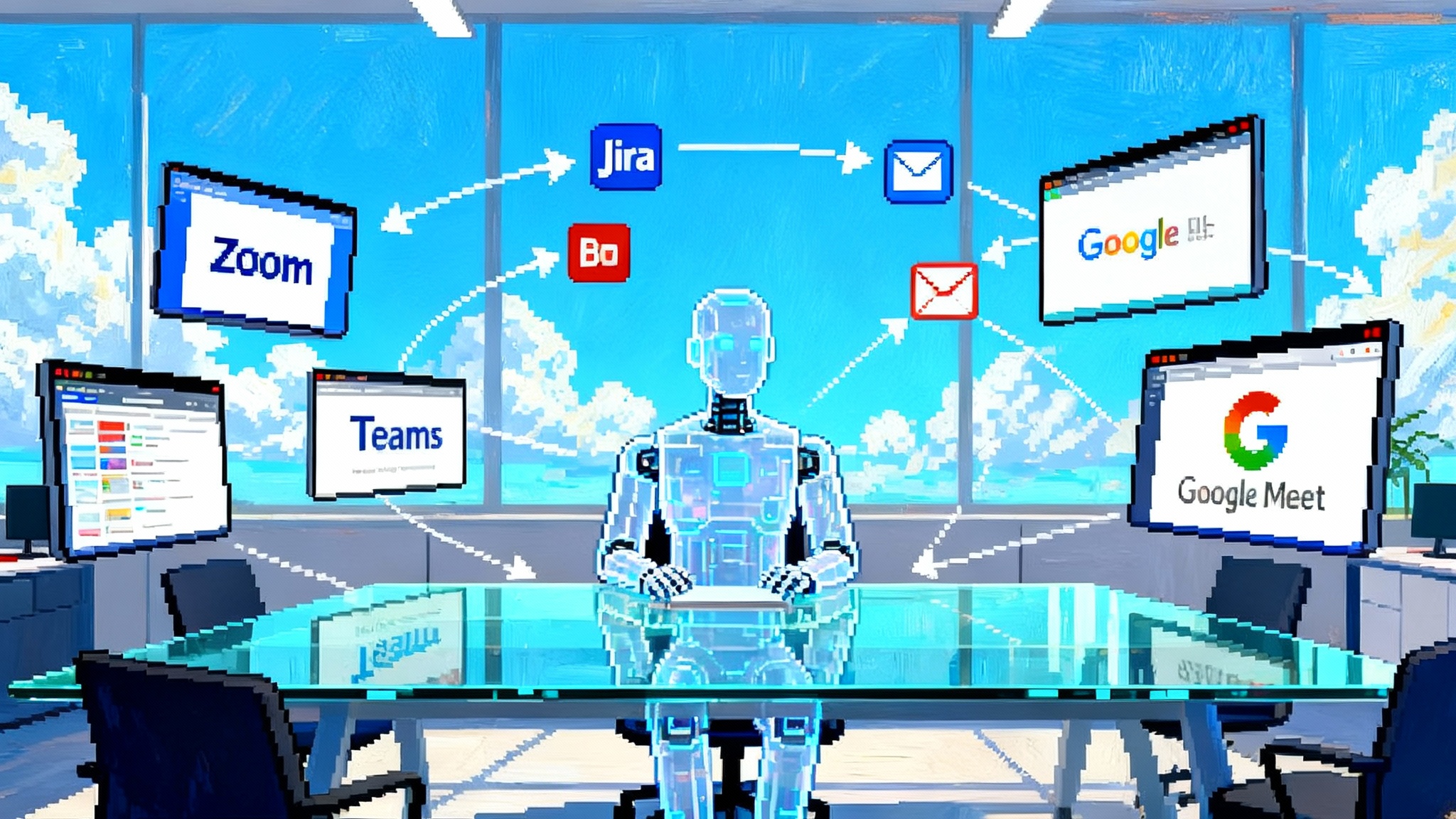

3) Multi-app action pipelines get dependable

Real agents hop across CRM, ERP, email, code repos, risk engines, and payment rails. Reliability collapses when one slow tool stalls the chain. Bigger, tightly integrated clusters let schedulers reserve resources for tool-heavy phases, maintain parallel subplans, and prefetch context, which trims tail latency and raises predictability.
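One common way to stop a slow tool from stalling the chain is to run tool calls concurrently and give each its own timeout. The sketch below does this with `asyncio`. The tool names and delays are hypothetical stand-ins for real connectors, and `call_tool` only simulates latency.

```python
import asyncio
from typing import Dict, List


async def call_tool(name: str, delay: float) -> str:
    """Simulated tool call; a real connector would do network I/O here."""
    await asyncio.sleep(delay)
    return f"{name}: ok"


async def call_with_timeout(name: str, delay: float, timeout: float) -> str:
    """Bound each call so one slow tool cannot hold up the whole step."""
    try:
        return await asyncio.wait_for(call_tool(name, delay), timeout)
    except asyncio.TimeoutError:
        return f"{name}: timed out"


async def run_step(tools: Dict[str, float], timeout: float = 0.05) -> List[str]:
    """Run all tool calls concurrently; results keep the input order."""
    tasks = [call_with_timeout(n, d, timeout) for n, d in tools.items()]
    return await asyncio.gather(*tasks)


def run_step_sync(tools: Dict[str, float], timeout: float = 0.05) -> List[str]:
    """Convenience wrapper for callers that are not already async."""
    return asyncio.run(run_step(tools, timeout))
```

With this shape, the wall time of a step is bounded by the timeout rather than by the slowest tool, and the planner can decide whether to retry or proceed without the missing result.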

Proof points and early enterprise agents

Financial institutions and insurers are moving first, extending copilots into more autonomous patterns such as software maintenance and multi-step research workflows. The winning pattern is consistent: start with narrow, measurable workflows that can run for minutes or hours, touch multiple systems through approved connectors, and produce auditable outputs. New capacity reduces cost spikes on long runs and shortens queues at peak hours, which makes service-level guarantees believable.

Cost curves: from tokens to gigawatts

Per-run agent costs hinge on three dials that this deal helps stabilize:

- Reasoning escalation frequency: how often the router invokes deeper thinking. With abundant compute, thresholds can be less conservative without starving other workloads.

- Tool call parallelism: more concurrent API calls and searches per step cut wall time. This is a scheduling and bandwidth problem as much as a model problem.

- Memory refresh and indexing: continuous re-ranking and vector maintenance improve retrieval quality without adding latency to live runs.

Expect early dividends to appear as reliability and lower tail latency rather than immediate list price cuts. As Vera Rubin-class systems land and software stacks mature, unit cost per complex workflow should trend down because more of each run remains on the fast path and orchestration improves caching, preplanning, and reuse.
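The first two dials can be put into a back-of-the-envelope model. The sketch below is a simplification with assumed inputs: the per-step prices, step counts, and call latencies are hypothetical and not drawn from any published price list.

```python
import math


def expected_run_cost(
    steps: int, escalation_rate: float, fast_cost: float, deep_cost: float
) -> float:
    """Expected model cost of one run, blending fast-path and deep-path steps."""
    per_step = (1 - escalation_rate) * fast_cost + escalation_rate * deep_cost
    return steps * per_step


def tool_wall_time(tool_calls: int, parallelism: int, seconds_per_call: float) -> float:
    """Wall time if calls run in equal-latency batches of size `parallelism`."""
    batches = math.ceil(tool_calls / parallelism)
    return batches * seconds_per_call
```

For example, a 40-step run with a 25 percent escalation rate, a fast-path step at 0.01 and a deep-path step at 0.10 (hypothetical currency units), comes to about 1.30 per run. Twelve tool calls at 2 seconds each take 6 seconds at a parallelism of 4, versus 24 seconds run serially.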

Energy and grid reality: can 10 GW be green and available?

Ten gigawatts of peak data center capacity is also a power-sector story. The International Energy Agency projects a steep rise in data center electricity use, with AI-accelerated servers driving a large share of the increase, per the IEA energy demand analysis. The implication for enterprises is twofold:

- Location will matter: AI factories will cluster where power is abundant, affordable, and cleaner.

- Sustainability disclosures will get specific: expect questions about when and where agent workloads run, how power is sourced, and whether schedules align with grid conditions.
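To give a sense of scale, and of what aligning schedules with grid conditions could look like, here is a small sketch. The utilization figure and the hourly carbon-intensity numbers are hypothetical. The 10 GW figure describes capacity, not average draw, so annual energy depends heavily on the utilization you assume.

```python
from typing import Dict, List

HOURS_PER_YEAR = 8760  # 365 days x 24 hours


def annual_energy_twh(capacity_gw: float, utilization: float) -> float:
    """Annual energy in TWh for a given capacity and average utilization."""
    return capacity_gw * HOURS_PER_YEAR * utilization / 1000


def pick_greenest_hours(intensity_by_hour: Dict[int, float], k: int) -> List[int]:
    """Pick the k hours with the lowest carbon intensity for deferrable work."""
    lowest = sorted(intensity_by_hour, key=intensity_by_hour.get)[:k]
    return sorted(lowest)
```

At full utilization, 10 GW works out to 87.6 TWh per year. At an assumed 60 percent it is about 52.6 TWh. Deferrable jobs such as index rebuilds or batch evaluations are the natural candidates for the greenest hours.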

What products get unlocked next

The compute surge and GPT-5 routing make several agent products practical at scale:

- Financial operations agents: close and reconciliation flows that pull from ledgers and ERPs, propose balanced entries, and open tickets for exceptions.

- Customer operations swarms: a coordinator routes to retrieval, translation, and policy agents, then assembles a final action without blowing SLAs.

- Engineering reliability agents: CI agents that reproduce flaky tests, bisect commits, and open merge requests with diffs and rollback plans.

- Risk and compliance autopilots: continuous monitors that assemble justifications and escalate only when thresholds are crossed.

- Revenue ops planners: multi-horizon forecasting that turns CRM signals and spend data into structured action plans.

- Knowledge stewards: meeting summaries, document tagging, and taxonomy updates tuned to your domain.

Vendor lock-in: real risks and practical hedges

A preferred-supplier path concentrates both benefits and risks. Benefits include tighter hardware-software co-design and better performance per watt. Risks include hardware lock-in, supply chain exposure, and future pricing leverage. Practical hedges you can implement now:

- Dual-run patterns: design orchestration to swap between at least two model providers for non-critical paths, and run weekly parity tests.

- Retrieval-layer independence: keep embeddings, indexes, and graphs portable. Avoid provider-specific black boxes for core memory.

- Tooling abstraction: wrap external systems behind stable internal adapters so you can trial an alternative runtime without touching every integration.

- Cost and quality telemetry: log reasoning depth, tool calls, latency distribution, and success metrics per run.

- Exit ramps in contracts: caps, outage credits, model lifecycle clarity, and change notifications for routing defaults that affect cost.
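The dual-run and abstraction hedges above can be sketched with a thin provider interface. In this sketch a provider is just a callable from prompt to text. The exact-match comparison after normalization is a deliberately crude parity check, and all names are hypothetical. Real parity tests would likely use task-specific scoring.

```python
from typing import Callable, Iterable

# Hypothetical interface: any model provider is wrapped as prompt -> text.
Provider = Callable[[str], str]


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace before comparing outputs."""
    return " ".join(text.lower().split())


def parity_rate(primary: Provider, secondary: Provider, prompts: Iterable[str]) -> float:
    """Share of prompts on which both providers give the same normalized answer."""
    prompts = list(prompts)
    if not prompts:
        return 1.0
    matches = sum(normalize(primary(p)) == normalize(secondary(p)) for p in prompts)
    return matches / len(prompts)


def with_fallback(primary: Provider, secondary: Provider) -> Provider:
    """Return a provider that falls back to the secondary if the primary raises."""
    def run(prompt: str) -> str:
        try:
            return primary(prompt)
        except Exception:
            return secondary(prompt)
    return run
```

Keeping every integration behind the same `Provider` shape is what makes a weekly parity run cheap and makes a switch possible without touching each caller.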

For governance checklists, see our guide to enterprise AI governance.

Adoption playbook for Q4 2025

- Inventory candidate workflows: favor clear definitions of done, rich tool access, and measurable outcomes. Time-box runs to hours.

- Define agent roles: split planners, doers, and verifiers into separate agents. Keep a human gate for external actions at the start.

- Bake in governance: add structured logging, reason traces, tool provenance, and policy checks. Decide what becomes long-term memory versus transient context.

- Establish SLOs: track p50 and p95 latency, success rates, exception categories, and unit cost per completed workflow. Tie budgets to these metrics.

- Red-team before scale: attack tool connectors, prompt injection, and data exfiltration paths. Practice kill switches.

- Scale by cohort: roll out to one business unit at a time, publish the metrics, then widen access.

Use early quarters to tune routing thresholds and memory compaction. As capacity grows through 2026, increase parallelism and shift more subflows to autonomous execution.
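The SLO metrics in the playbook can be computed from per-run logs with a few lines. This sketch assumes each run is logged as a dict with hypothetical keys `latency_s`, `succeeded`, and `cost_usd`, and uses the nearest-rank method for percentiles.

```python
import math
from typing import Dict, List


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(runs: List[Dict]) -> Dict[str, float]:
    """Compute p50/p95 latency, success rate, and cost per completed workflow."""
    latencies = [r["latency_s"] for r in runs]
    successes = sum(1 for r in runs if r["succeeded"])
    total_cost = sum(r["cost_usd"] for r in runs)
    return {
        "p50_latency_s": percentile(latencies, 50),
        "p95_latency_s": percentile(latencies, 95),
        "success_rate": successes / len(runs),
        # Failed runs still cost money, so divide total spend by successes only.
        "cost_per_completed": total_cost / successes if successes else float("inf"),
    }
```

Dividing total spend by completed workflows, rather than by all runs, keeps the cost of failures visible in the unit economics.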

What to watch through 2026

- Delivery milestones: the first 1 GW tranche is targeted for the second half of 2026 on Vera Rubin systems. Watch for on-time delivery and early performance data, measured against the targets in the Nvidia partnership announcement.

- Pricing signals: quotas, latency, and reliability tend to improve before posted prices move.

- Memory primitives: first-party tools that make long-term memory safer and more portable, including governance-friendly APIs.

- Enterprise case studies: banks, insurers, and manufacturers will publish benchmarks and incident reports that separate hype from value.

- Energy contracting: expect long-duration PPAs, behind-the-meter projects, and power-aware scheduling features to show up in AI platform roadmaps, consistent with the IEA energy demand analysis.

The bottom line

The OpenAI and Nvidia partnership is not just bigger chips for bigger models. It is the infrastructure that helps autonomous agents behave like dependable software. GPT-5 routing applies compute where it matters most, and 10 GW of planned capacity makes it practical to do so at scale with predictable latency and cost. Build for portability and governance now, watch reliability and concurrency first, and let falling variance pave the way for better unit economics.