Rollup Fees Mispriced: Cheap DoS, Prover-Killers, Delays

Fresh peer-reviewed research argues that today’s Ethereum rollup fees underprice the real bottlenecks. Attackers can cheaply flood data availability or craft prover-killer transactions that stall finality. This review breaks down what fails, what it costs, and practical fixes teams can ship now.

The wake-up call

If you felt Ethereum L2s were past their fee-market growing pains after Dencun, a new warning says otherwise. A recent peer-reviewed preprint presents evidence that today’s rollup fee mechanisms systematically misprice three core resources, enabling two practical exploits: saturating L1 data availability to throttle throughput, and crafting transactions that crush provers and delay finality. The authors lay out adversarial strategies, quantify costs, and test exposure across leading optimistic and ZK stacks in production. The paper documents the full methodology; what follows is the essence, plus what builders and advanced users should do now.

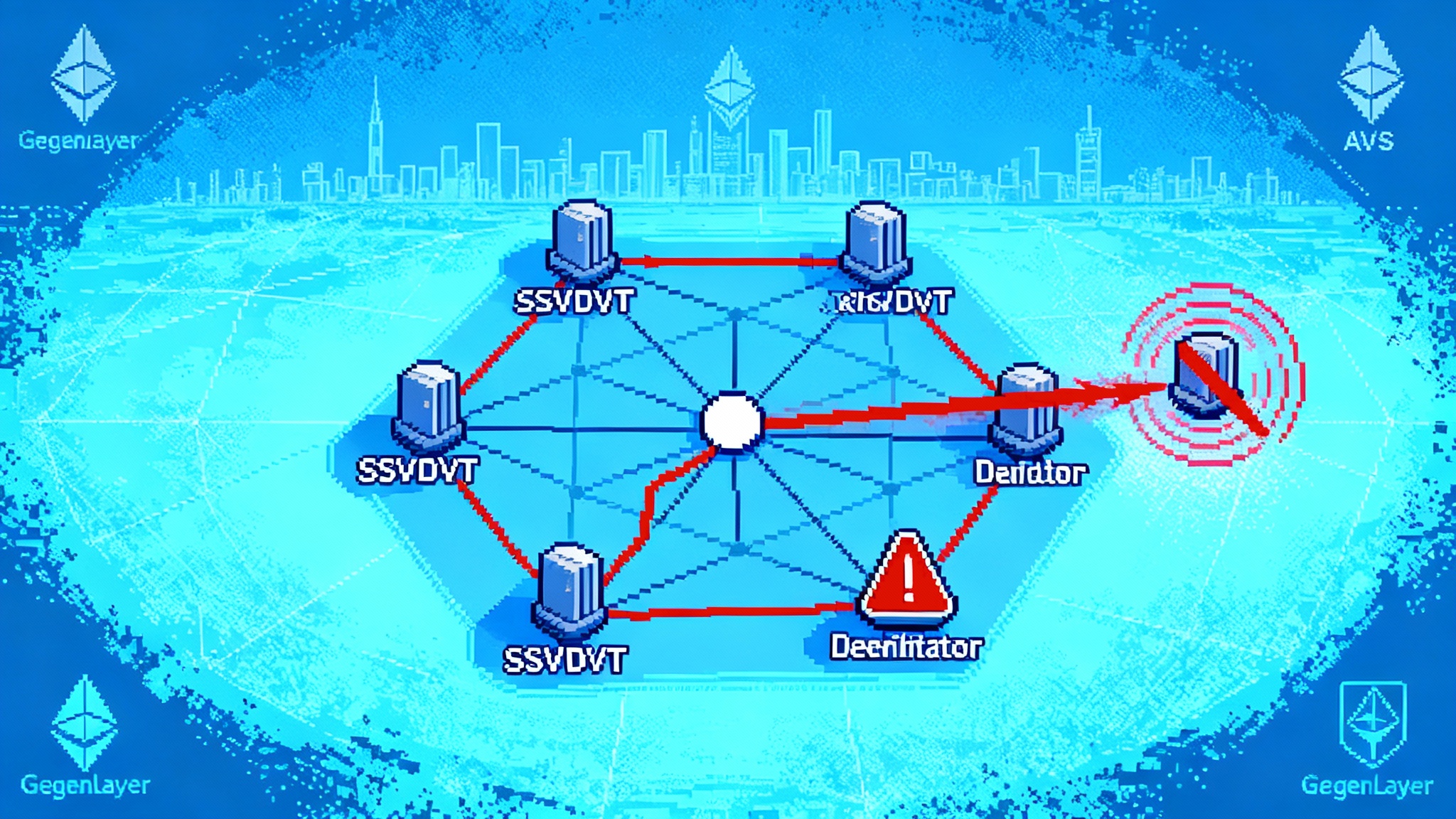

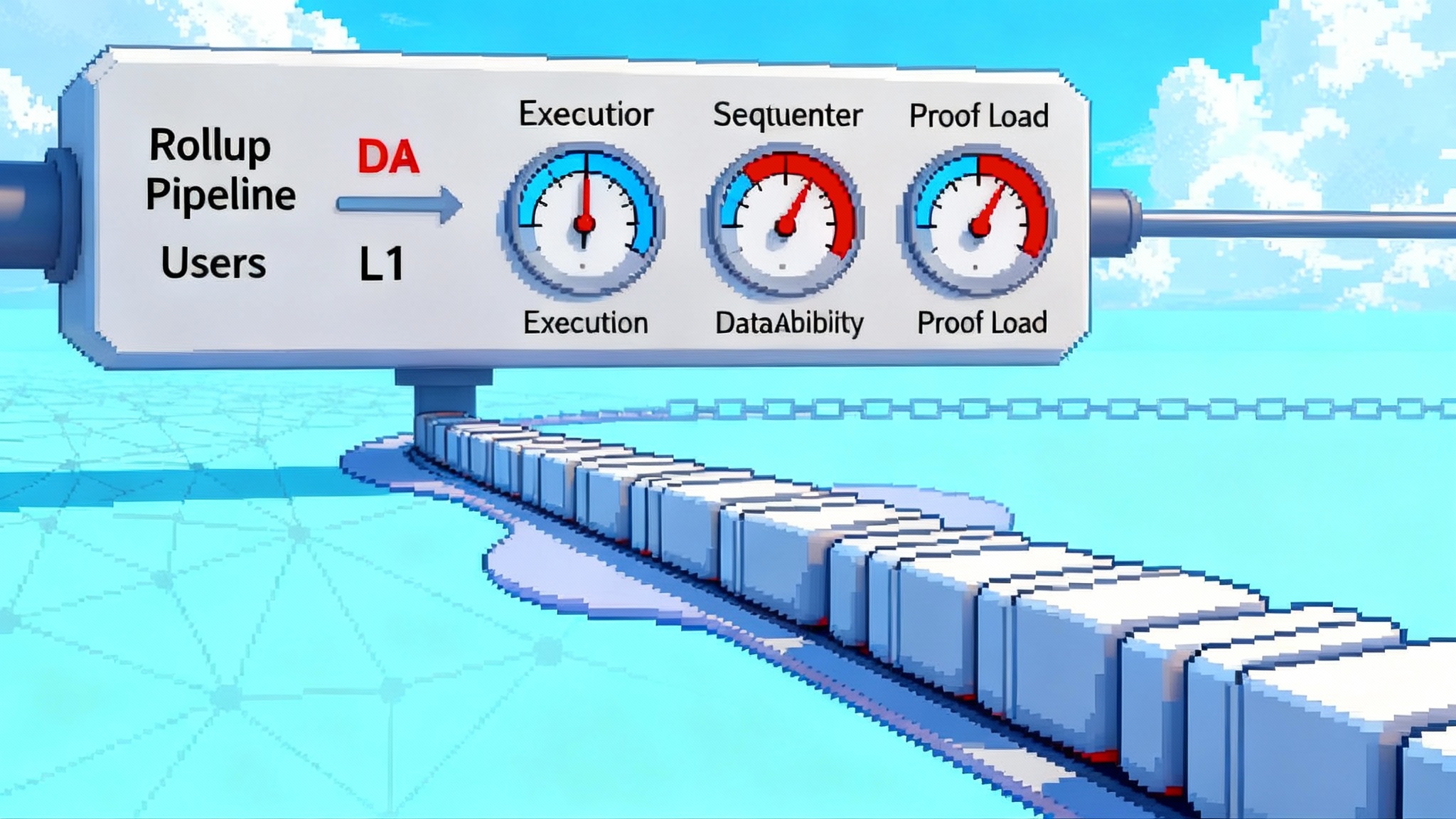

The three resources fee models must price

A rollup’s transaction fee mechanism has to charge for costs across three planes that do not move in lockstep:

- L2 execution work. The gas you burn to run EVM opcodes, storage writes, and state transitions.

- L1 data availability. The bytes you write to Ethereum via blobs or calldata. Blob space is priced by its own EIP-1559-style market, with a separate base fee and its own volatility; calldata is paid for as ordinary L1 gas.

- Proof settlement. For optimistic stacks that means fraud or fault proof costs during challenges. For ZK stacks it means proving time, memory bandwidth, and verification overhead.

When any one of these is priced out of proportion to its scarcity, a rational attacker can spend in the cheap dimension to stress the expensive one.

Attack 1: Data availability saturation throttles throughput and finality

The first exploit is conceptually simple. The attacker submits many compute-light but data-heavy transactions to consume a rollup’s blob budget per batch. Sequencers fill batches, but the L1 data lane is the bottleneck, not L2 execution. As the blob market tightens, honest traffic is displaced or delayed. The result is a throughput drop and slower settlement, and inducing that delay through the rollup can be cheaper for the attacker than trying to jam L1 directly.
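A toy queue model makes the displacement concrete. It assumes a fixed per-batch byte capacity, steady honest demand, and an attacker whose bytes are always packed first (for example because they arrive first or pay a higher priority fee). The function name and all numbers are hypothetical and not taken from the paper.

```python
def honest_backlog(batches: int, capacity: int, honest_per_batch: int,
                   attack_per_batch: int) -> list[int]:
    """Honest bytes still waiting after each batch.

    Toy model: every batch holds `capacity` bytes, attacker bytes are packed
    first, and honest demand arrives at a steady `honest_per_batch` rate.
    """
    backlog = 0
    history = []
    for _ in range(batches):
        backlog += honest_per_batch
        room_for_honest = max(0, capacity - attack_per_batch)
        backlog -= min(backlog, room_for_honest)
        history.append(backlog)
    return history


print(honest_backlog(5, capacity=1_000, honest_per_batch=600, attack_per_batch=0))
# [0, 0, 0, 0, 0]
print(honest_backlog(5, capacity=1_000, honest_per_batch=600, attack_per_batch=600))
# [200, 400, 600, 800, 1000]
```

Once attacker bytes push the room left for honest traffic below honest demand, the backlog grows every batch, even though L2 execution capacity is untouched.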

What the researchers found:

- Short, periodic DoS on multiple rollups for under 2 ETH, with congestion windows around the 30-minute mark.

- Three major rollups showed exposure to sustained DoS where an attacker could keep the system throttled for roughly 0.8 to 2.7 ETH per hour.

- The same tactic, tuned for finality drag rather than outright DoS, produces roughly 1.45x to 2.73x more settlement delay than spending the same amount buying blobs directly on L1.

Why it works:

- Single-dimensional fees. If a rollup flattens L2 gas and L1 DA into a single price without a responsive DA component, users can send high-byte transactions that crowd out honest flow while paying little at the margin.

- Batch pricing and fixed parts. Batches often blend a fixed base with an averaged per-byte cost. That hides the DA externality inside the batch and blunts fee feedback when DA becomes scarce.

- Refund and rebate policies. If unused execution gas is refunded or if the rollup credits certain opcodes, attackers can compose transactions that maximize bytes while avoiding much of the execution bill.
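To make the missing feedback concrete, the sketch below prices one compute-light, data-heavy transaction two ways: a blended fee that converts DA bytes into L2 gas with a static multiplier, and a split fee that charges bytes at a live DA price. The function names, prices, and the `BYTE_GAS_MULTIPLIER` value are hypothetical illustration numbers, not parameters of any production rollup.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    exec_gas: int  # L2 execution gas the transaction burns
    da_bytes: int  # bytes the rollup must post to L1 for it


def blended_fee(tx: Tx, l2_gas_price: int, byte_gas_multiplier: int) -> int:
    """Single-dimensional fee: DA bytes are converted to L2 gas with a static
    multiplier, so the price ignores the live blob market."""
    return (tx.exec_gas + byte_gas_multiplier * tx.da_bytes) * l2_gas_price


def split_fee(tx: Tx, l2_gas_price: int, da_price_per_byte: int) -> int:
    """Two meters: execution and DA are priced separately, and the DA price
    is meant to follow the current blob base fee."""
    return tx.exec_gas * l2_gas_price + tx.da_bytes * da_price_per_byte


# Hypothetical prices in wei, for illustration only.
L2_GAS_PRICE = 10_000_000
BYTE_GAS_MULTIPLIER = 16
CALM_DA_PRICE = BYTE_GAS_MULTIPLIER * L2_GAS_PRICE  # both models agree when DA is calm
SCARCE_DA_PRICE = 10 * CALM_DA_PRICE                # blob market has tightened 10x

flood_tx = Tx(exec_gas=21_000, da_bytes=100_000)  # compute-light, data-heavy

for label, da_price in [("calm", CALM_DA_PRICE), ("scarce", SCARCE_DA_PRICE)]:
    b = blended_fee(flood_tx, L2_GAS_PRICE, BYTE_GAS_MULTIPLIER)
    s = split_fee(flood_tx, L2_GAS_PRICE, da_price)
    print(f"{label:>6}: blended={b} wei, split={s} wei")
```

When blobs are calm the two models charge the same; when the blob market tightens tenfold, only the split fee passes that scarcity through to the flooder.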

Attack 2: Prover-killer transactions stall finality

The second exploit targets proof systems. For ZK rollups, the attacker crafts transactions that are cheap in L2 gas terms but expensive in constraint count, memory, or hashing inside the proving circuit. The sequencer accepts them at the posted fee, but the proving pipeline bogs down. For optimistic rollups with fault proofs, adversarial sequences can force expensive single-step proofs or pathological worst-case witnesses. Either way, finality stretches while the proving queue grows.

Key observations from the study:

- Prover-killer batches increased finalization latency by roughly 94x in the test configurations.

- The attack is cheap because proof difficulty is a mispriced externality. Users pay little for the proving work their transactions create, so a small spend can cause large delays at the rollup level.

This is not hypothetical. The gap between L2 gas accounting and proving cost is structural. Many fee schedules ignore proving hotspots such as repeated hashing patterns, large touch sets, and worst-case storage access sequences that blow up circuit complexity.
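The wedge can be illustrated by metering the same opcode trace twice, once with L2 gas and once with a proving-weight table. Both tables are assumptions: the gas column uses simplified static EVM base costs (ignoring per-word, memory-expansion, and cold-access charges), and the proving weights are invented placeholders for a circuit where hashing and storage reads are expensive. Real weights would have to come from profiling a specific prover; `meter` and `weight_per_gas` are hypothetical helpers.

```python
from collections import Counter

# Simplified static EVM base costs (dynamic components ignored).
L2_GAS = {"ADD": 3, "MSTORE": 3, "KECCAK256": 30, "SLOAD": 100, "MCOPY": 3}
# Hypothetical proving weights, invented for illustration only.
PROVING_WEIGHT = {"ADD": 1, "MSTORE": 4, "KECCAK256": 5_000, "SLOAD": 800, "MCOPY": 60}


def meter(trace: list[str]) -> tuple[int, int]:
    """Return (l2_gas, proving_weight) for an opcode trace."""
    counts = Counter(trace)
    gas = sum(L2_GAS[op] * n for op, n in counts.items())
    weight = sum(PROVING_WEIGHT[op] * n for op, n in counts.items())
    return gas, weight


def weight_per_gas(trace: list[str]) -> float:
    """Proving work the sender buys per unit of L2 gas paid."""
    gas, weight = meter(trace)
    return weight / gas


honest = ["ADD"] * 50 + ["SLOAD"] * 2 + ["MSTORE"] * 10
adversarial = ["KECCAK256"] * 40 + ["ADD"] * 10  # hash-heavy, gas-cheap

print(f"honest:      {weight_per_gas(honest):.1f} proving weight per gas")
print(f"adversarial: {weight_per_gas(adversarial):.1f} proving weight per gas")
```

In this toy model the hash-heavy trace buys over 30 times more proving work per unit of gas than the ordinary one, which is the headroom a prover-killer exploits.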

Why design choices matter: who is more exposed

Not all rollups are equally vulnerable. The paper’s taxonomy maps cleanly to real design decisions found across optimistic and ZK stacks.

- Refund policies. Generous gas refunds or credits amplify both attacks. In optimistic stacks, refunds incentivize bloated but computation-light payloads that still consume DA. In ZK stacks, they allow adversaries to fit more prover-unfriendly operations per fee unit.

- Batch pricing vs per-transaction pricing. Batching hides cost signals. If a rollup charges a single blended fee for a batch, a crafty mix of high-byte, low-compute transactions can ride along on the coattails of honest flow. Per-transaction, per-byte accounting, with dynamic components, raises attacker marginal cost.

- Fixed vs dynamic components. Static or slowly moving L1 data price multipliers cannot react to blob scarcity. DA risk rises when a rollup uses a long half-life smoother for its L1 cost estimator. Conversely, a dynamic DA component that tracks blob base fee with a shorter memory makes saturation more expensive.

- Proof cost unawareness. Any ZK rollup that does not include a proof-aware surcharge is exposed to prover-killer payloads. On the optimistic side, if the challenge game or single-step proof is allowed to become pathologically expensive relative to inclusion fees, latency can stretch.

- Opcode weighting and storage pricing. Underweighting hashing, big memory moves, and worst-case storage patterns in L2 gas accounting leaves a wedge between what a user pays and what the prover must do.

- DA lane choice. Rollups that rely primarily on blobs, with blob base fees near the floor, are cheaper to flood than those that still lean on calldata. Blobs are cheaper by design and have their own independent fee market under EIP-4844, which improves UX in normal times but lowers the bar for saturation unless the rollup passes the blob price through to users correctly.
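The effect of the smoother's memory is easy to see with an exponential moving average parameterized by half-life. This sketch assumes the rollup's L1 DA price estimate is an EMA of the blob base fee sampled once per L1 block; the helper names, half-lives, and the 50x step are hypothetical.

```python
def ema_alpha(half_life_blocks: float) -> float:
    """Smoothing factor for which an old sample's weight halves every
    `half_life_blocks` updates."""
    return 1.0 - 0.5 ** (1.0 / half_life_blocks)


def smooth(prices: list[float], half_life_blocks: float) -> list[float]:
    """Exponential moving average of a blob base fee series."""
    alpha = ema_alpha(half_life_blocks)
    estimate = prices[0]
    out = []
    for p in prices:
        estimate += alpha * (p - estimate)
        out.append(estimate)
    return out


# Blob base fee (arbitrary units) sits at the floor, then jumps 50x
# at block 10 while an attacker floods blobs.
blob_fee = [1.0] * 10 + [50.0] * 20

fast = smooth(blob_fee, half_life_blocks=2)
slow = smooth(blob_fee, half_life_blocks=50)
print(f"after 10 spiked blocks: short half-life={fast[19]:.2f}, long half-life={slow[19]:.2f}")
```

After ten spiked blocks the short half-life estimate has covered about 97% of the jump, while the long half-life estimate has covered only about 13%, so for that whole window data-heavy transactions are charged close to floor prices.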

Quantifying attacker costs and impact

The study’s headline ranges are useful for intuition and planning roadmaps:

- Periodic DoS: under 2 ETH to force a 20- to 30-minute throughput drop on several rollups.

- Sustained DoS: approximately 0.8 to 2.7 ETH per hour on the most exposed systems.

- Finality stretch via DA: roughly 1.45x to 2.73x longer settlement timelines compared to direct L1 blob-stuffing for the same spend.

- Prover-killer latency: on the order of 94x increase in finalization latency under tailored adversarial batches.

These are not worst-case theoretical ceilings. They come from empirically evaluated configurations that resemble production settings, which makes them relevant for current upgrade cycles.

Mitigations teams are evaluating

The good news is that most fixes slot into existing upgrade plans for optimistic and ZK stacks.

- Multidimensional fee mechanisms. Price execution, DA bytes, and proving difficulty separately. This can be a vector price with three meters and three base fees. The sequencer sets the marginal rate for each meter per block. Users pay the dot product.

- Proof-aware surcharges. For ZK rollups, include a proving term in the fee that estimates constraint or cycle weight based on opcode mix, call graph features, and storage access patterns. Start coarse with buckets that track known hotspots. For optimistic rollups, surcharge transactions that would trigger high-cost single-step proofs in the event of a dispute.

- Admission control. Rate limit high-byte transactions per address and per block. Use moving windows keyed to blob base fee and current batch utilization. During stress, gate low-value high-byte traffic in favor of critical system transactions and market makers that keep spreads tight.

- Batch size caps and shape control. Cap per-batch blob bytes, and constrain the ratio of bytes to compute, so that one dimension cannot dominate. Consider multiple parallel batch lanes with independent queues for high-byte and compute flow, each with its own base fee.

- Dynamic blob pricing passthrough. Track the blob base fee with a short half-life so price signals reach users quickly. Tie per-transaction DA charges to the most recent blob base fee and observed inclusion delay in the rollup’s own pipeline.

- Shared-sequencer safeguards. If you use or plan to use a shared sequencer, isolate per-rollup rate limits and introduce per-domain fairness so an attacker cannot starve other chains via a cheap flood on one. Add pre-admission scoring for high-byte traffic from new or untrusted sources.

- Prover pipeline hardening. Pre-screen batches for prover-killer patterns and split them across provers. Add queue-depth-based fee multipliers so users feel the real cost of slowed proving. Keep a reserve proving pool for emergency throughput when latency spikes.

- Fault proof cost caps. For optimistic stacks, ensure worst-case single-step proofs and on-chain dispute costs have bounded complexity. Deny sequences that would trigger pathological witnesses unless they pay a commensurate fee.
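A minimal sketch of the three-meter idea, assuming each meter runs its own EIP-1559-style controller with a per-block target and a maximum base fee change of 1/8 per block. Meter names, targets, starting base fees, and the class names are hypothetical; priority fees are left out, and the update rule is simplified (for example, it omits EIP-1559's minimum increment of 1).

```python
from dataclasses import dataclass

METERS = ("exec_gas", "da_bytes", "proving_weight")


@dataclass
class MeterMarket:
    """EIP-1559-style controller for one resource."""
    base_fee: int               # wei per unit of this resource
    target_per_block: int       # usage the controller steers toward
    max_change_denom: int = 8   # at most 1/8 change per block, as in EIP-1559

    def update(self, used: int) -> None:
        # Cap usage at 2x target so one block moves the fee by at most 1/8.
        used = min(used, 2 * self.target_per_block)
        delta = (self.base_fee * (used - self.target_per_block)
                 // (self.target_per_block * self.max_change_denom))
        self.base_fee = max(1, self.base_fee + delta)


@dataclass
class ThreeMeterTFM:
    markets: dict[str, MeterMarket]

    def fee(self, usage: dict[str, int]) -> int:
        """All-in fee: dot product of per-meter usage and base fees."""
        return sum(usage[m] * self.markets[m].base_fee for m in METERS)

    def end_block(self, block_usage: dict[str, int]) -> None:
        """Each meter's base fee reacts only to its own utilization."""
        for m in METERS:
            self.markets[m].update(block_usage[m])


tfm = ThreeMeterTFM({
    "exec_gas": MeterMarket(base_fee=10, target_per_block=15_000_000),
    "da_bytes": MeterMarket(base_fee=100, target_per_block=400_000),
    "proving_weight": MeterMarket(base_fee=1, target_per_block=50_000_000),
})
tx = {"exec_gas": 21_000, "da_bytes": 10_000, "proving_weight": 5_000}

before = tfm.fee(tx)
# A DA flood block: bytes at 2x target, execution and proving exactly at target.
tfm.end_block({"exec_gas": 15_000_000, "da_bytes": 800_000, "proving_weight": 50_000_000})
after = tfm.fee(tx)
print(before, after, tfm.markets["da_bytes"].base_fee)
```

Only the DA base fee moves after the flood block, so the next data-heavy transaction pays more while the execution and proving prices stay where they were.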

Connecting the dots to current upgrade cycles

OP Stack chains in the Superchain, Arbitrum Orbit deployments, and ZK ecosystems all have active roadmaps that touch fee policy, fault proofs, and proving pipelines. Since March 2024, the rush to take advantage of low blob fees has driven record L2 usage and cheaper UX, but it has also made DA the lever attackers pull first. Market structure changes, like the onshore crypto perps market shift, have pulled more latency-sensitive flow into L2s, while ecosystem plays such as Polygon's AggLayer increase cross-domain liquidity and routing pressure. At the protocol layer, heightened Ethereum slashing and restaking risk keeps reliability in focus. Pricing the three resources accurately is not a nice-to-have; it is a prerequisite for healthy finality during bursts of flow and during market stress.

Actionable checklist for builders

Prioritize what you can instrument and patch within a quarter.

Instrumentation and observability:

- Add per-transaction meters for execution gas, DA bytes, and an estimated proving weight. Persist these as indexed fields in your analytics DB.

- Track blob base fee, blob inclusion delay in your batches, and a histogram of bytes per transaction by type.

- Monitor proving pipeline metrics: queue depth, time-to-first proof, average proving cycles per batch, and memory pressure. Alert on multi-sigma deviations.

- Expose a live finality SLA: the L1 block height at which your rollup was last finalized, time since the last proof was posted, and batch backlog.

- Build detectors for DA floods: rising share of high-byte low-compute transactions from new addresses, per-minute blob consumption, and blockspace displacement of system transactions.
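The flood detector and multi-sigma alerting described above can be prototyped in a few lines. This sketch assumes per-minute samples of the share of data-heavy, compute-light transactions, with each transaction reduced to an `(exec_gas, da_bytes)` pair. The helper names, byte and gas-per-byte thresholds, window length, and sigma multiplier are hypothetical starting points to tune against your own traffic.

```python
from collections import deque
from statistics import mean, pstdev


def flood_share(txs: list[tuple[int, int]], min_bytes: int = 2_000,
                max_gas_per_byte: float = 4.0) -> float:
    """Fraction of (exec_gas, da_bytes) transactions that are data-heavy and
    compute-light. Both thresholds are hypothetical."""
    if not txs:
        return 0.0
    heavy = sum(1 for gas, nbytes in txs
                if nbytes >= min_bytes and gas / nbytes <= max_gas_per_byte)
    return heavy / len(txs)


class SigmaAlert:
    """Flags a sample more than `k` standard deviations above the rolling
    mean of the previous `window` samples."""

    def __init__(self, window: int = 60, k: float = 4.0, min_samples: int = 10):
        self.history = deque(maxlen=window)
        self.k = k
        self.min_samples = min_samples

    def observe(self, value: float) -> bool:
        alert = False
        if len(self.history) >= self.min_samples:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            alert = value > mu + self.k * max(sigma, 1e-9)
        self.history.append(value)
        return alert


# Per-minute flood shares: quiet baseline, then a sudden flood.
baseline = [0.02, 0.03, 0.025, 0.04, 0.03, 0.02, 0.035, 0.03, 0.025, 0.03, 0.04, 0.03]
detector = SigmaAlert(window=60, k=4.0, min_samples=10)
alerts = [detector.observe(x) for x in baseline + [0.6]]
print(alerts)
```

The same `SigmaAlert` can wrap other series from the list above, such as per-minute blob consumption or proving queue depth.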

Policy and engineering changes:

- Ship a simple three-meter transaction fee mechanism (TFM) covering execution gas, DA bytes, and proving weight. Use a base fee and priority fee per meter. Keep the UI simple by quoting an all-in price, but publish the breakdown in receipts.

- Introduce proof-aware surcharges. Start with opcode buckets and storage access heuristics that map to known proving hotspots. Iterate toward circuit-aware pricing as you refine your profiler.

- Tighten smoothing. Reduce half-life on L1 cost estimators so your DA price tracks blob base fee faster. Apply a surge multiplier when your own batch queue grows beyond a threshold.

- Cap per-batch bytes and enforce byte-to-compute ratios. Allow a small reserve for system transactions and market maker flow during stress.

- Add admission control in the sequencer. Rate limit high-byte senders and prioritize whitelisted infra like oracles and bridges when DA is tight.

- For optimistic stacks, bound worst-case fault proof costs and refuse sequences that would exceed a configurable proof budget unless they pay a premium.

- Harden your prover fleet. Add overflow capacity in another region. Split adversarial shapes across provers. Consider a community proving pool as a surge valve.
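A sketch of sequencer-side admission control that combines three of the items above: per-sender byte budgets (token buckets), a per-batch byte cap with headroom reserved for system transactions, and a batch-level limit on bytes per unit of execution gas. The class names, parameters, and the `is_system` flag are hypothetical; a real sequencer would also need persistence, allowlist management, and fee-based prioritization.

```python
class TokenBucket:
    """Per-sender byte budget that refills at a constant rate."""

    def __init__(self, capacity: float, refill_per_sec: float, now: float):
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.tokens = capacity
        self.last = now

    def take(self, amount: float, now: float) -> bool:
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last = now
        if amount > self.tokens:
            return False
        self.tokens -= amount
        return True


class BatchAdmission:
    """Hypothetical admission policy for one batch at a time."""

    def __init__(self, byte_cap: int, system_reserve: int, max_bytes_per_gas: float,
                 bucket_capacity: float, bucket_refill: float):
        self.byte_cap = byte_cap
        self.system_reserve = system_reserve
        self.max_bytes_per_gas = max_bytes_per_gas
        self.bucket_capacity = bucket_capacity
        self.bucket_refill = bucket_refill
        self.buckets = {}
        self.new_batch()

    def new_batch(self) -> None:
        self.used_bytes = 0
        self.used_gas = 0

    def admit(self, sender: str, exec_gas: int, da_bytes: int, now: float,
              is_system: bool = False) -> bool:
        new_bytes = self.used_bytes + da_bytes
        new_gas = self.used_gas + exec_gas
        if is_system:
            # System transactions (oracles, bridges) may use the reserved headroom.
            ok = new_bytes <= self.byte_cap
        else:
            bucket = self.buckets.setdefault(
                sender, TokenBucket(self.bucket_capacity, self.bucket_refill, now))
            # Checks short-circuit, so rejected txs do not drain the sender's bucket.
            ok = (new_bytes <= self.byte_cap - self.system_reserve
                  and new_bytes <= self.max_bytes_per_gas * new_gas
                  and bucket.take(da_bytes, now))
        if ok:
            self.used_bytes, self.used_gas = new_bytes, new_gas
        return ok


adm = BatchAdmission(byte_cap=120_000, system_reserve=20_000, max_bytes_per_gas=0.1,
                     bucket_capacity=50_000, bucket_refill=1_000)
results = [
    adm.admit("honest", exec_gas=200_000, da_bytes=2_000, now=0.0),
    adm.admit("flooder", exec_gas=21_000, da_bytes=40_000, now=0.0),  # breaks byte/gas ratio
    adm.admit("oracle", exec_gas=50_000, da_bytes=15_000, now=0.0, is_system=True),
]
print(results)
```

The batch-level ratio lets occasional data-heavy transactions ride along with compute-heavy flow, but stops bytes from dominating a batch outright.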

Operational drills:

- Run a red-team exercise that replays the study’s adversarial mixes against staging. Measure total ETH needed to degrade your finality and set alert thresholds accordingly.

- Publish transparent status pages that show users finality health, blob utilization, and proving backlog in real time.

Actionable checklist for users and market makers

Power users, HFT shops, and market makers can reduce risk and help stabilize markets during stress.

- Monitor finality risk. Track your rollup’s last proven batch height, time since proof, and blob base fee. If finality lag grows, widen quotes and reduce leverage.

- Watch DA congestion. Rising blob base fees, growing batch size, and increasing bytes per transaction are early signals. Adjust routing before spreads blow out.

- Reroute flow. Maintain integrations with at least two L2s and one L1 path. If one rollup’s finality SLA degrades, migrate sensitive flow until metrics normalize.

- Use bridge safety rails. Prefer bridges that expose proof status and finality estimates. Avoid long-tail assets while DA fees are elevated.

- Automate alerts. Set thresholds for time since last proof, sequencer backlog, and blob base fee percentiles. Trigger circuit breakers in your trading stack.
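A desk-side circuit breaker along these lines could sit in front of a quoting engine. The sketch assumes the three inputs already arrive from your own monitoring; the class and field names, thresholds, and the rule that two simultaneous warnings trigger rerouting are hypothetical choices, not recommendations from the study.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    NORMAL = "normal"
    WIDEN = "widen quotes, cut leverage"
    REROUTE = "move sensitive flow to another venue"


@dataclass(frozen=True)
class FinalitySnapshot:
    secs_since_last_proof: float
    sequencer_backlog: int       # batches waiting to be posted or proven
    blob_fee_percentile: float   # current blob base fee vs. trailing window, 0-100


@dataclass(frozen=True)
class Thresholds:
    warn_proof_age: float = 1_800   # hypothetical: 30 minutes
    halt_proof_age: float = 7_200   # hypothetical: 2 hours
    warn_backlog: int = 20
    warn_blob_pct: float = 95.0


def breaker(s: FinalitySnapshot, t: Thresholds = Thresholds()) -> Mode:
    """Map live finality signals to a trading mode."""
    if s.secs_since_last_proof >= t.halt_proof_age:
        return Mode.REROUTE
    warnings = [
        s.secs_since_last_proof >= t.warn_proof_age,
        s.sequencer_backlog >= t.warn_backlog,
        s.blob_fee_percentile >= t.warn_blob_pct,
    ]
    if sum(warnings) >= 2:
        return Mode.REROUTE
    if any(warnings):
        return Mode.WIDEN
    return Mode.NORMAL


print(breaker(FinalitySnapshot(300, 2, 40.0)))     # Mode.NORMAL
print(breaker(FinalitySnapshot(2_400, 3, 50.0)))   # Mode.WIDEN
print(breaker(FinalitySnapshot(2_400, 25, 97.0)))  # Mode.REROUTE
```

Keeping the thresholds in a separate frozen dataclass makes it easy to run a tighter profile per rollup or per asset.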

The bigger picture

Ethereum’s scaling roadmap moved contention out of L1 execution and into L1 data availability and proving. That is the point of rollups. It also means that the most effective attacks will spend on the cheapest vector that forces the most expensive bottleneck. The latest research shows that today’s fee designs do not fully internalize those externalities. The fix is precise pricing, better feedback loops, and safety rails in sequencers and provers. If teams move quickly, the same upgrade cycle that brings shared sequencing and new proofs can also close the pricing gaps that make cheap DoS and finality drag profitable.

The tradeoff that made L2s attractive in 2024 and 2025 remains intact. Users get lower fees and faster UX. Builders absorb the complexity of multidimensional markets and proof pipelines. Price the right things, measure the right signals, and rollups can keep their promise without giving attackers a bargain path to disruption.