2025’s enterprise agent stack is here: architecture and rollout

Microsoft, NVIDIA, and OpenAI turned AI agents from demos into deployable systems in 2025. Learn why they are production-ready, then get a secure reference architecture, real cost and latency tradeoffs, and a pragmatic 30-60-90 day rollout plan.

The year agents grew up

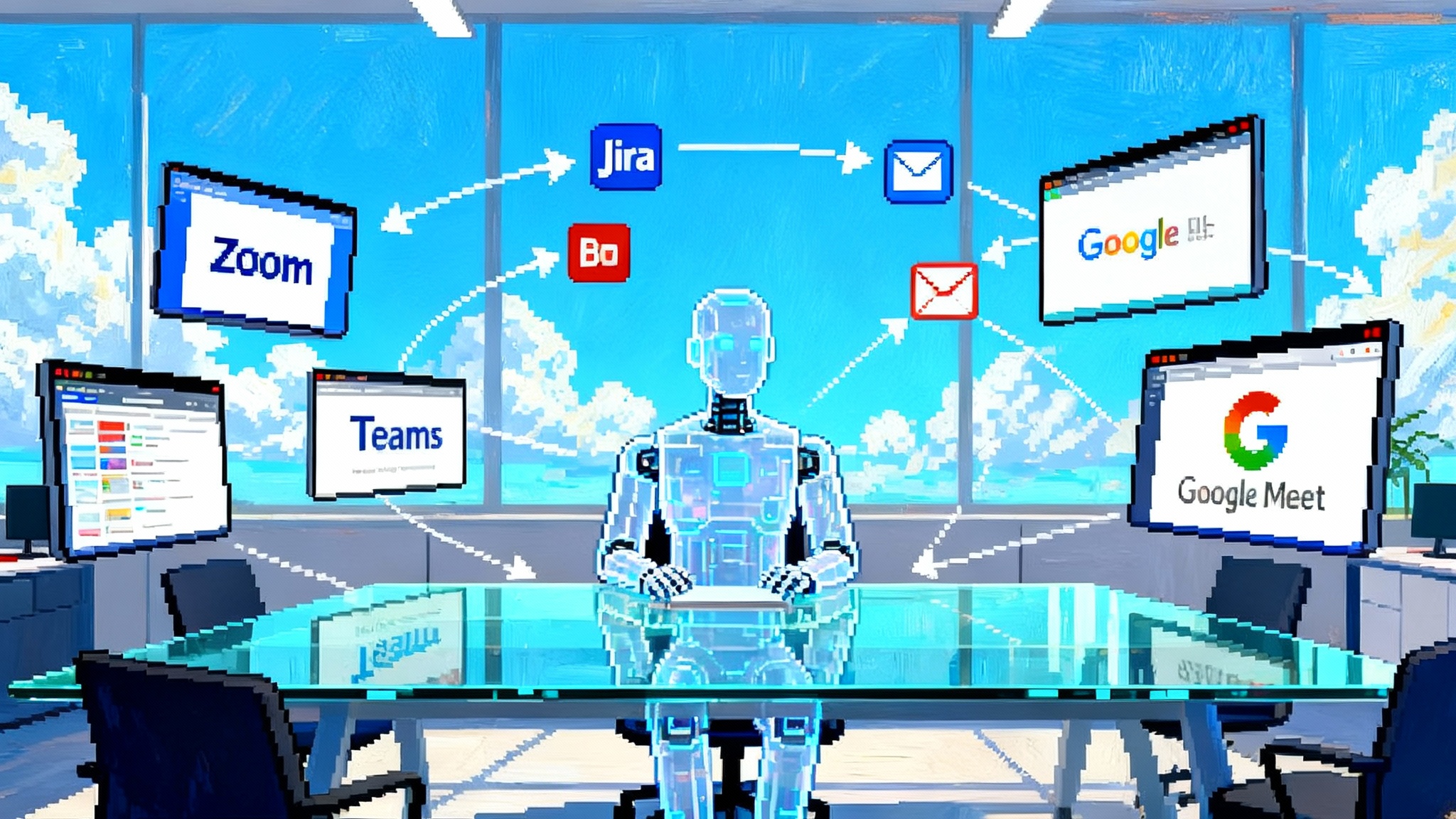

Three 2025 announcements flipped enterprise AI agents from interesting to inevitable. On May 19 at Build, Microsoft introduced multi-agent orchestration in Copilot Studio, an Agent Store inside Microsoft 365, and Entra Agent ID, which makes agents first-class identities subject to policy and audit. Those are the controls and distribution channels enterprises were waiting for, arriving in one platform. See Microsoft’s post on multi-agent orchestration for details.

In January, NVIDIA added missing safety and reliability pieces for autonomous workflows. New NeMo Guardrails NIM microservices provide content safety, topic control, and jailbreak detection, and ship alongside agent blueprints so teams can start from a tested workflow rather than a blank page. NVIDIA describes these additions in its announcement of the NeMo Guardrails NIM microservices.

Also in January, OpenAI released Operator, a browser automation agent that clicks, types, scrolls, and hands control back to the user for sensitive steps. Operator showed that agents can use the same interfaces humans do, so they can be useful before every vendor exposes a complete API. Put together, agents now have identity, governance, distribution, guardrails, and practical tool use. That is the difference between a demo and a deployment.

Why this wave matters

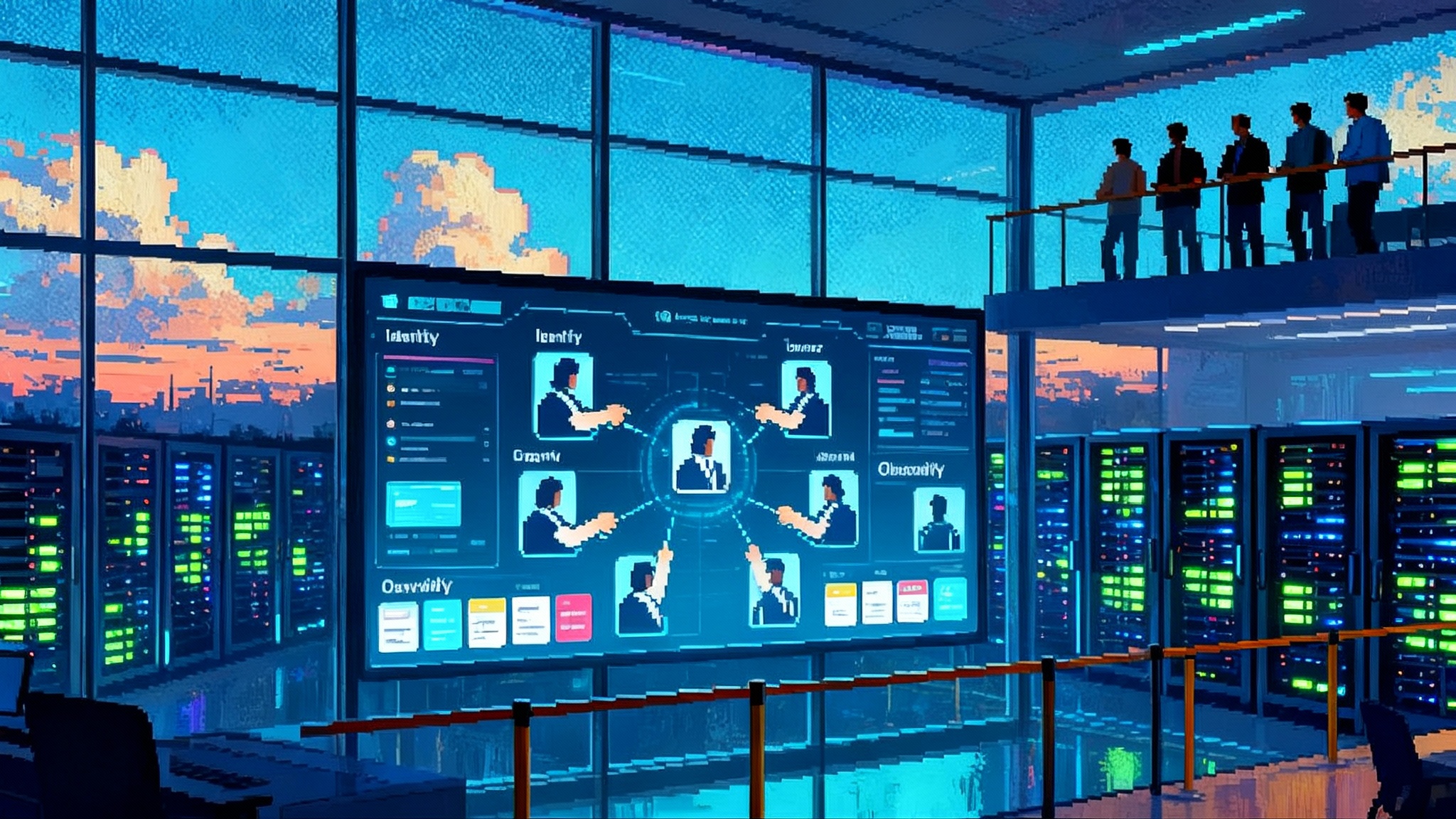

- Identity becomes subject to policy. Entra Agent ID gives each agent an organizational identity by default, enabling lifecycle management, conditional access, sign-in logs, and the same RBAC you use for humans.

- Orchestration is built in. Multi-agent tasks can be modeled so planners, solvers, and reviewers coordinate without brittle custom code. Agent Store simplifies discovery and routing so employees can find vetted agents in one place.

- Guardrails are layered. Instead of relying only on model alignment, you can compose small, purpose-built rails that moderate content, keep interactions on topic, and intercept jailbreak attempts.

- Real-world tools are reachable. Browser control raises the ceiling on what agents can do in messy enterprise environments where APIs are partial or delayed.

The outcome is not just better assistants. It is autonomous workflows that can comply with policy, pass audits, and meet SLOs.

A reference architecture for secure multi-agent workflows

Use this blueprint as a starting point. Adjust vendor choices to your stack, but keep the separation of concerns and control points. For deeper background on security patterns, see our primer on agent security architecture.

1) Identity, access, and secrets

- Principals: Issue a distinct service principal for every agent and every high-risk tool. If you use Microsoft, Entra Agent ID assigns identities as agents are created. Map each principal to an owner team and an application steward.

- Authentication: For server-to-server calls, prefer the OAuth 2.0 client credentials grant with short-lived tokens and automatic rotation. For cross-product access, use OIDC with audience-scoped tokens. Disallow long-lived static API keys outside the vault.

- Authorization: Enforce least privilege with RBAC at the resource level. When feasible, layer ABAC on top for data sensitivity and environment. Keep permissions in a policy-as-code repository and deploy them through change control.

- Secret management: Centralize credentials in a vault. Agents fetch secrets at run time using audience-scoped tokens. Use output filters to stop agents from writing secrets to logs.
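
As a concrete illustration of short-lived credentials, here is a minimal Python sketch of an OAuth 2.0 client credentials token cache that refreshes each token shortly before it expires. It assumes the identity provider accepts the client secret in the request body (RFC 6749, section 4.4, sent as `client_secret_post`) and injects the actual HTTP call as a plain `fetch` function, so no particular SDK is implied; `TokenCache`, the 60-second refresh skew, and the `api://erp/.default` scope are hypothetical.

```python
import time
import urllib.parse
from dataclasses import dataclass
from typing import Callable, Dict, Optional


def client_credentials_body(client_id: str, client_secret: str, scope: str) -> str:
    """Form-encoded body for an OAuth 2.0 client credentials request (RFC 6749, section 4.4).

    Assumes the identity provider accepts credentials in the body (client_secret_post);
    many also accept HTTP Basic authentication instead.
    """
    return urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,  # fetched from the vault at run time, never hard-coded
        "scope": scope,
    })


@dataclass
class Token:
    access_token: str
    expires_at: float  # absolute time in seconds, from the same clock the cache uses


class TokenCache:
    """Keeps one short-lived token per scope and refreshes it shortly before it expires."""

    def __init__(self, fetch: Callable[[str], dict], skew_s: float = 60.0,
                 clock: Callable[[], float] = time.time):
        self._fetch = fetch    # hypothetical: POSTs the body above and returns the parsed JSON
        self._skew = skew_s    # refresh this many seconds before actual expiry
        self._clock = clock
        self._tokens: Dict[str, Token] = {}

    def get(self, scope: str) -> str:
        now = self._clock()
        cached: Optional[Token] = self._tokens.get(scope)
        if cached is None or now >= cached.expires_at - self._skew:
            resp = self._fetch(scope)  # standard response fields: access_token, expires_in
            cached = Token(resp["access_token"], now + float(resp["expires_in"]))
            self._tokens[scope] = cached
        return cached.access_token


# Usage with a fake token endpoint and a controllable clock.
issued = []

def fake_fetch(scope: str) -> dict:
    issued.append(scope)
    return {"access_token": f"tok-{len(issued)}", "expires_in": 300}

fake_now = [1000.0]
cache = TokenCache(fake_fetch, clock=lambda: fake_now[0])
first = cache.get("api://erp/.default")    # fetches tok-1, valid until t=1300
fake_now[0] = 1200.0
second = cache.get("api://erp/.default")   # reuses tok-1 (refresh window starts at t=1240)
fake_now[0] = 1250.0
third = cache.get("api://erp/.default")    # inside the refresh window, so fetches tok-2
```

Refreshing before expiry, rather than waiting for the first rejected call, keeps tokens short-lived without adding a failed request to every rotation.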

2) Guardrails and policy

- Input rails: PII scrubbing, prompt linting for insecure tool use, topic control to keep conversations on approved domains.

- Output rails: Content safety checks, data loss prevention for regulated fields, and redaction of sensitive attributes before persistence or display.

- Tool rails: Allowlist tool functions per agent. Block dangerous operations unless a human approval step is satisfied. Require structured function calls with schemas that declare side effects.

- Continuous evaluation: Attach evals for jailbreak susceptibility, hallucination on proprietary entities, and policy violations. Use canary prompts as part of deployment gates.
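
The tool rails can be sketched as a per-agent allowlist plus a declared side-effect level on each tool schema. The registry, agent names, and `SideEffect` levels below are hypothetical and are not the API of NeMo Guardrails, Copilot Studio, or any other product; argument checks are reduced to required fields and Python types rather than full JSON Schema validation.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Optional, Set


class SideEffect(Enum):
    NONE = "none"          # read-only
    INTERNAL = "internal"  # changes state inside the agent platform only
    EXTERNAL = "external"  # touches systems of record, money, or people: needs human approval


@dataclass
class ToolSpec:
    name: str
    params: Dict[str, type]  # parameter name -> expected Python type
    side_effect: SideEffect


# Hypothetical tool registry and per-agent allowlists.
TOOLS = {
    "lookup_ticket": ToolSpec("lookup_ticket", {"ticket_id": str}, SideEffect.NONE),
    "grant_role": ToolSpec("grant_role", {"user": str, "role": str}, SideEffect.EXTERNAL),
}
ALLOWLIST: Dict[str, Set[str]] = {
    "helpdesk-specialist": {"lookup_ticket"},
    "access-executor": {"lookup_ticket", "grant_role"},
}


def check_tool_call(agent: str, tool: str, args: dict, approved: bool = False) -> Optional[str]:
    """Return None if the call may proceed, otherwise the reason it was blocked."""
    if tool not in ALLOWLIST.get(agent, set()):
        return f"{agent} is not allowed to call {tool}"
    spec = TOOLS[tool]
    missing = set(spec.params) - set(args)
    extra = set(args) - set(spec.params)
    if missing or extra:
        return f"bad arguments for {tool}: missing={sorted(missing)} extra={sorted(extra)}"
    for name, expected in spec.params.items():
        if not isinstance(args[name], expected):
            return f"{tool}.{name} must be {expected.__name__}"
    if spec.side_effect is SideEffect.EXTERNAL and not approved:
        return f"{tool} has external side effects and needs human approval"
    return None
```

Returning a reason instead of raising makes it easy to record every rail decision in the trace for that step.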

3) Orchestration and planning

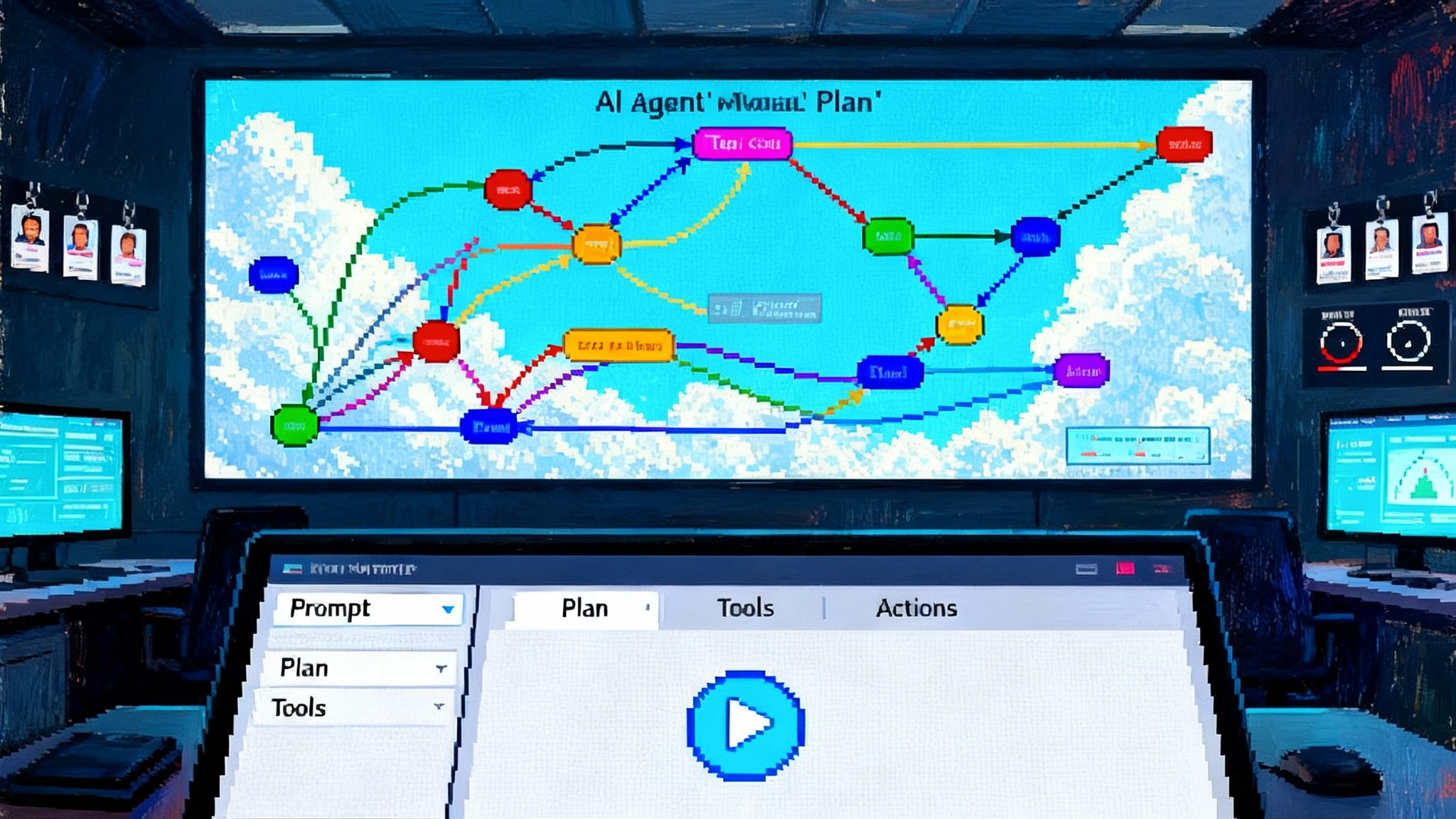

- Roles: Use a simple pattern of Router → Planner → Specialist(s) → Reviewer → Executor. For design tradeoffs, review our guide to multi-agent design patterns.
  - Router: A small, fast model routes tasks to the correct agent team or hands off to a human.
  - Planner: Builds a plan with explicit steps, required tools, and success checks.
  - Specialists: Domain agents with narrow scopes, tuned prompts, and explicit tool permissions.
  - Reviewer: Audits high-risk steps and verifies acceptance criteria.
  - Executor: Applies actions with idempotence, retries, and rollback logic.

- Workflows: Represent plans as declarative graphs with timeouts, compensating actions, and circuit breakers. Keep long-running steps idempotent.

- State: Maintain world state in a durable store with versioned artifacts. Cache context snippets and tool outputs to avoid recomputation.
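
A minimal sketch of how an Executor might run a plan with retries, idempotency keys, and compensating actions (the saga pattern). The in-memory `done_ids` set stands in for the durable state store, and the step names and retry counts are hypothetical; timeouts, backoff between retries, and circuit breakers are left out to keep it short.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Set


@dataclass
class Step:
    step_id: str                                  # stable ID, doubles as the idempotency key
    action: Callable[[], None]
    compensate: Optional[Callable[[], None]] = None
    max_attempts: int = 3


@dataclass
class RunResult:
    ok: bool = True
    completed: List[str] = field(default_factory=list)
    compensated: List[str] = field(default_factory=list)
    failed_step: Optional[str] = None


def run_plan(steps: List[Step], done_ids: Set[str]) -> RunResult:
    """Run steps in order with retries; on a permanent failure, compensate finished steps in reverse.

    `done_ids` stands in for the durable state store: steps already recorded there are
    skipped, so re-running a plan after a crash does not repeat side effects.
    """
    result = RunResult()
    finished: List[Step] = []
    for step in steps:
        if step.step_id in done_ids:
            finished.append(step)          # applied by an earlier run
            continue
        for attempt in range(1, step.max_attempts + 1):
            try:
                step.action()
                break                      # success, stop retrying
            except Exception:
                if attempt == step.max_attempts:
                    result.ok = False
                    result.failed_step = step.step_id
        if not result.ok:
            break
        done_ids.add(step.step_id)
        finished.append(step)
        result.completed.append(step.step_id)
    if not result.ok:
        for step in reversed(finished):    # undo in reverse order
            if step.compensate is not None:
                step.compensate()
                done_ids.discard(step.step_id)
                result.compensated.append(step.step_id)
    return result


# Usage: an onboarding plan whose last step keeps failing.
log: List[str] = []

def flaky_assign_device() -> None:
    log.append("assign_device attempt")
    if log.count("assign_device attempt") == 1:
        raise RuntimeError("transient error")   # first attempt fails, the retry succeeds

def grant_vpn() -> None:
    raise RuntimeError("VPN API unavailable")

plan = [
    Step("create_account", lambda: log.append("create_account"), lambda: log.append("delete_account")),
    Step("assign_device", flaky_assign_device, lambda: log.append("unassign_device")),
    Step("grant_vpn", grant_vpn, max_attempts=2),
]
result = run_plan(plan, done_ids=set())
```

Here the transient device error is absorbed by a retry, while the persistent VPN failure triggers compensation of both earlier steps in reverse order.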

4) Data access and retrieval

- Retrieval: Use a retriever that tags documents with sensitivity labels. The retriever enforces access decisions before any document's content enters the model context or response assembly.

- Context assembly: Bound input context by role and task. Inject only the minimum fields required and label every field with its source and handling rule.

- Write paths: Any write to a system of record must carry the agent principal, the plan step ID, and the approval artifact ID if applicable.
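
A sketch of the retrieval and context rules: documents carry a sensitivity label, the retriever drops anything above the caller's clearance before ranking, and each injected snippet keeps its source and handling rule. The label ladder, `Doc` fields, and character budget are assumptions, and keyword overlap stands in for a real vector search.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical sensitivity ladder, lowest to highest.
LEVELS = {"public": 0, "internal": 1, "confidential": 2, "restricted": 3}


@dataclass(frozen=True)
class Doc:
    doc_id: str
    text: str
    label: str      # sensitivity label, one of LEVELS
    source: str     # system of origin
    handling: str   # handling rule that travels with the text


def retrieve(query: str, docs: List[Doc], clearance: str, k: int = 3) -> List[Doc]:
    """Filter by clearance first, then rank, so over-clearance text never reaches the context."""
    allowed = [d for d in docs if LEVELS[d.label] <= LEVELS[clearance]]
    terms = set(query.lower().split())

    def overlap(d: Doc) -> int:
        # Keyword overlap stands in for vector similarity in this sketch.
        return len(terms & set(d.text.lower().split()))

    ranked = sorted(allowed, key=overlap, reverse=True)
    return [d for d in ranked[:k] if overlap(d) > 0]


def assemble_context(docs: List[Doc], max_chars: int = 2000) -> str:
    """Bound the context and label every snippet with its source and handling rule."""
    parts: List[str] = []
    used = 0
    for d in docs:
        snippet = f"[source={d.source} label={d.label} handling={d.handling}] {d.text}"
        if used + len(snippet) > max_chars:
            break
        parts.append(snippet)
        used += len(snippet)
    return "\n".join(parts)


docs = [
    Doc("kb-1", "reset vpn password steps", "internal", "kb", "internal-only"),
    Doc("hr-7", "salary bands for vpn team", "restricted", "hr", "no-external-share"),
    Doc("kb-2", "printer setup guide", "public", "kb", "none"),
]
hits = retrieve("vpn password", docs, clearance="internal")
context = assemble_context(hits)
```

Because the clearance filter runs before ranking, the restricted HR document can never be selected for an internal-clearance caller, no matter how well it matches the query.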

5) Observability and audit

- Tracing: Emit spans for each plan step, tool call, and guardrail decision with correlation IDs.

- Prompt versioning: Record prompt templates, model versions, and parameter settings. Tie these to deployments for rollbacks.

- Safety telemetry: Log every rail decision and the text that triggered it, redacted as required.

- Cost telemetry: Capture tokens in, tokens out, tool runtime, and GPU minutes. Attribute spend by agent and business unit. See our walkthrough on AI governance and SLO metrics.

- Dashboards: Track time to first token (TTFT), P50 and P95 latency, success rate, and safety block rate. Set SLOs per use case.
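
A sketch of how per-request records might roll up into these dashboard metrics and be checked against SLOs. The record fields and thresholds are hypothetical examples; percentiles use the nearest-rank method, one of several common definitions, so values can differ slightly from what an observability backend reports.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RequestRecord:
    ttft_ms: float      # time to first token
    latency_ms: float   # end-to-end latency
    success: bool       # met the task's acceptance check
    blocked: bool       # a guardrail blocked input or output


def percentile(values: List[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p percent of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(records: List[RequestRecord]) -> Dict[str, float]:
    latencies = [r.latency_ms for r in records]
    n = len(records)
    return {
        "ttft_p95_ms": percentile([r.ttft_ms for r in records], 95),
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "success_rate": sum(r.success for r in records) / n,
        "block_rate": sum(r.blocked for r in records) / n,
    }


def slo_breaches(summary: Dict[str, float], ceilings: Dict[str, float],
                 floors: Dict[str, float]) -> List[str]:
    """Names of metrics above their ceiling (latency) or below their floor (success rate)."""
    over = [name for name, limit in ceilings.items() if summary[name] > limit]
    under = [name for name, limit in floors.items() if summary[name] < limit]
    return over + under


# Usage: 20 requests with latencies 100 ms ... 2000 ms, one failure, one guardrail block.
records = [RequestRecord(ttft_ms=300, latency_ms=100 * i, success=(i != 20), blocked=(i == 7))
           for i in range(1, 21)]
summary = summarize(records)
breaches = slo_breaches(summary, ceilings={"ttft_p95_ms": 400, "p95_ms": 2000},
                        floors={"success_rate": 0.98})
```

In this example latency is within budget, but the 95 percent success rate misses a hypothetical 98 percent floor, so only `success_rate` is reported.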

6) Human in the loop

- Approvals: Gate steps with external side effects. Require reviewers for financial transactions, role changes, and data exports.

- Suggestions before actions: For customer responses and code changes, produce drafts first. Promote to auto-execute only after meeting accuracy thresholds.

- Escalation: Define timeouts and escalation paths. The Planner must include fallbacks for when a reviewer is unavailable.
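
A sketch of the escalation rule: a gated step walks an ordered chain of reviewers, waits up to each reviewer's timeout, and falls back to not executing if nobody answers. The reviewer names, timeouts, and the `ask` hook are hypothetical; a real system would wait asynchronously on a ticket or chat approval rather than on a blocking call.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, List, Optional, Tuple


class Decision(Enum):
    APPROVED = "approved"
    REJECTED = "rejected"
    NO_RESPONSE = "no_response"   # reviewer did not answer before the timeout


@dataclass
class ApprovalOutcome:
    execute: bool
    decided_by: Optional[str]
    trail: List[Tuple[str, str]] = field(default_factory=list)  # (reviewer, decision) for the audit log


# ask(reviewer, step, timeout_s) is a hypothetical hook that waits up to timeout_s for an answer.
AskFn = Callable[[str, str, float], Decision]


def request_approval(step: str, chain: List[Tuple[str, float]], ask: AskFn) -> ApprovalOutcome:
    """Ask reviewers in order; escalate on timeout; stop at the first explicit decision."""
    trail: List[Tuple[str, str]] = []
    for reviewer, timeout_s in chain:
        decision = ask(reviewer, step, timeout_s)
        trail.append((reviewer, decision.value))
        if decision is Decision.APPROVED:
            return ApprovalOutcome(True, reviewer, trail)
        if decision is Decision.REJECTED:
            return ApprovalOutcome(False, reviewer, trail)
    # Nobody answered: fall back to the safe default and skip the side effect.
    return ApprovalOutcome(False, None, trail)


# Usage: the team lead is unavailable, so the request escalates to the IT manager.
answers = {"team-lead": Decision.NO_RESPONSE, "it-manager": Decision.APPROVED}
outcome = request_approval(
    "grant admin role to jdoe",
    chain=[("team-lead", 900.0), ("it-manager", 1800.0), ("security-oncall", 3600.0)],
    ask=lambda reviewer, step, timeout_s: answers.get(reviewer, Decision.NO_RESPONSE),
)
unanswered = request_approval("export customer table", chain=[("data-owner", 600.0)],
                              ask=lambda reviewer, step, timeout_s: Decision.NO_RESPONSE)
```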

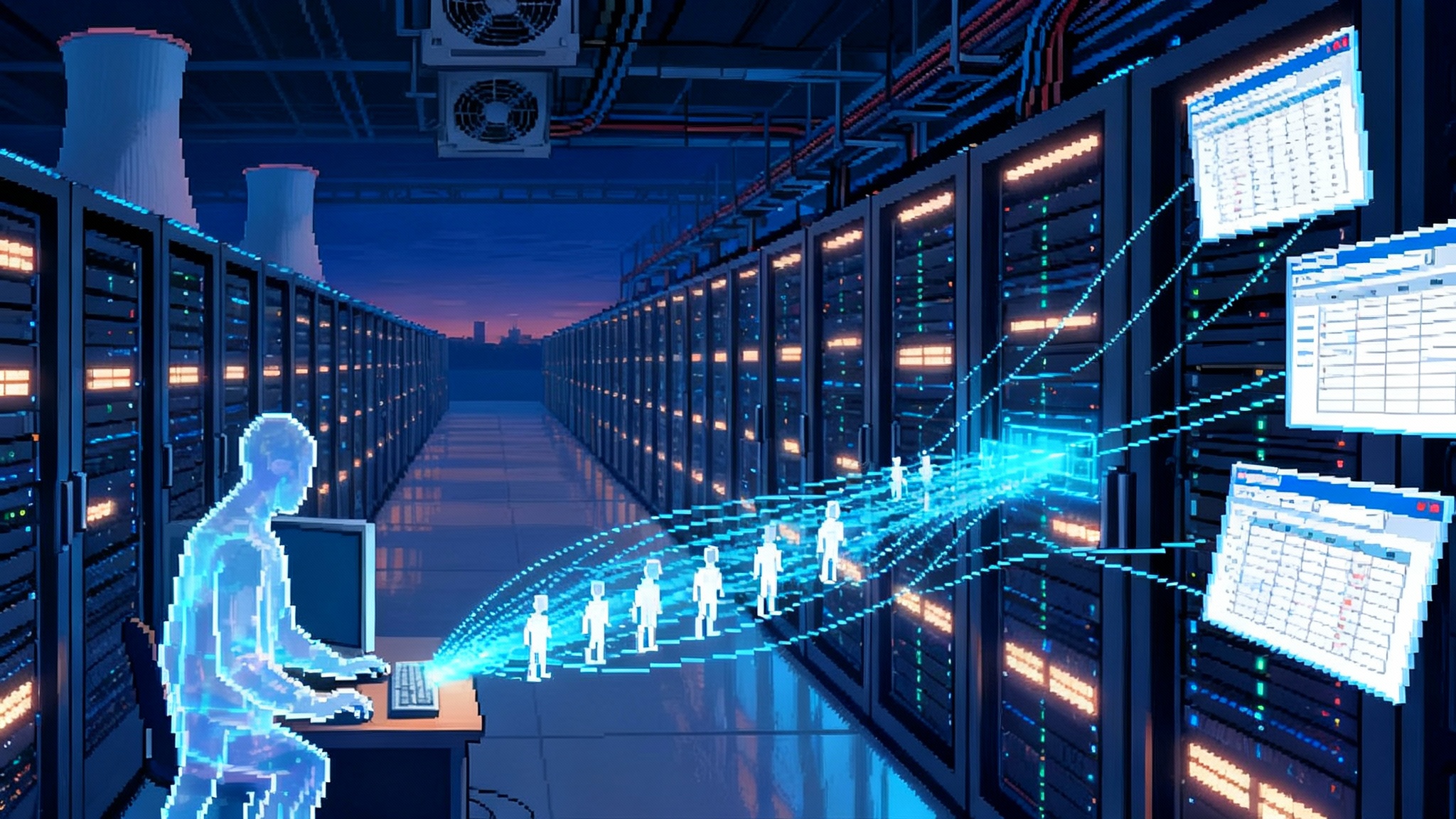

Cost and latency on Blackwell-class hardware

Blackwell GPUs raise the performance ceiling for agent systems, especially when you combine four techniques: batching, speculative decoding, quantization, and right sizing models per role.

- Batching: Agent workloads are bursty. Use dynamic batching so small requests share a forward pass. Tune batch size to keep P95 latency under your SLO. For interactive steps, prioritize short queue times over absolute throughput.

- Speculative decoding: Let a small draft model propose tokens that the larger model verifies. Pair it with early exit if confidence thresholds are met.

- Quantization: FP8 or FP4 on Blackwell can save memory and cost for inference with minimal quality loss when calibrated on your domain. Always run A/B evals that include safety tests.

- Right-sized roles: Do not run every step on your largest model. Use a small model for the Router and routine retrieval, a mid-size model for Specialists, and reserve your largest model for the Planner or complex synthesis.
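
To show the draft-and-verify idea, here is a toy greedy speculative decoding loop in Python. The two "models" are plain functions that return the next token for a prefix. Real implementations score all drafted positions in one batched forward pass, use a probabilistic acceptance rule when sampling, and gain one extra token when every draft is accepted; this sketch simplifies all three away.

```python
from typing import Callable, List, Tuple

# A "model" here is any function that returns the greedy next token for a prefix.
NextToken = Callable[[List[str]], str]


def speculative_decode(target: NextToken, draft: NextToken, prompt: List[str],
                       max_new: int, k: int = 4) -> Tuple[List[str], int]:
    """Greedy speculative decoding. Returns (new tokens, number of target verification rounds)."""
    out = list(prompt)
    rounds = 0
    while len(out) - len(prompt) < max_new:
        # 1) The small draft model proposes k tokens autoregressively (cheap).
        proposal: List[str] = []
        for _ in range(k):
            proposal.append(draft(out + proposal))
        # 2) The large model checks each drafted position. A real server scores all k
        #    positions in one batched forward pass; this loop only mimics the result.
        rounds += 1
        for tok in proposal:
            expected = target(out)
            out.append(expected)     # always keep the large model's token
            if expected != tok or len(out) - len(prompt) >= max_new:
                break                # first mismatch: drop the rest of the draft
    return out[len(prompt):], rounds


# Toy models: the target "knows" a sentence; the draft gets one position wrong.
SENTENCE = "the quick brown fox jumps over the lazy dog".split()

def target_model(prefix: List[str]) -> str:
    return SENTENCE[len(prefix)] if len(prefix) < len(SENTENCE) else "<eos>"

def draft_model(prefix: List[str]) -> str:
    return "cat" if len(prefix) == 3 else target_model(prefix)

tokens, rounds = speculative_decode(target_model, draft_model, ["the"], max_new=8)
```

Because every kept token is the large model's own greedy choice, the output is identical to plain greedy decoding with the large model. In this toy run the 8 new tokens need 3 verification rounds instead of 8 sequential large-model steps; the real speedup depends on how often the draft model agrees with the target.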

A practical budgeting approach

- Start with user-visible targets: TTFT under 400 ms for interactive chat, P95 under 2 seconds for responses without tool calls, and under 8 seconds for responses with one tool call.

- Translate targets into concurrency and throughput. For example, an IT helpdesk with 1,000 agents and 20 percent concurrent usage might target 200 active sessions with bursts to 400.

- Choose a serving layout. For the Planner and Reviewer, dedicate a small Blackwell pool for consistent latency. For Specialists and the Router, consider a mixed pool that can burst to cloud or use H200-class capacity when Blackwell is saturated.

- Shard or cache. If a Specialist uses a large context window or long tool results, consider KV cache reuse to keep latency predictable during multi-turn tasks.
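
The concurrency example can be turned into a rough replica count with Little's law (L = λW: requests in flight equal the arrival rate times the time each request spends in the system). The request rate per session, mean latency, slots per replica, and 70 percent headroom are placeholder assumptions to replace with your own load-test numbers, not Blackwell or H200 benchmarks.

```python
import math


def active_sessions(users: int, concurrency: float) -> int:
    """Concurrent sessions at a given concurrency ratio (e.g. 1,000 users at 20 percent)."""
    return round(users * concurrency)


def in_flight_requests(sessions: int, req_per_session_per_min: float, mean_latency_s: float) -> float:
    """Little's law, L = lambda * W: arrival rate (req/s) times mean time in the system (s)."""
    return sessions * req_per_session_per_min * mean_latency_s / 60.0


def replicas_needed(in_flight: float, slots_per_replica: int, headroom: float = 0.7) -> int:
    """Replicas so each runs at or below `headroom` of its concurrent-request slots."""
    return math.ceil(in_flight / (slots_per_replica * headroom))


# Placeholder inputs: 2 requests per session per minute, 3 s mean latency,
# 16 concurrent requests per replica. Replace with load-test results.
steady = active_sessions(1000, 0.20)                              # 200 sessions
burst = 2 * steady                                                # 400 sessions
steady_replicas = replicas_needed(in_flight_requests(steady, 2, 3.0), 16)
burst_replicas = replicas_needed(in_flight_requests(burst, 2, 3.0), 16)
```

With these inputs, 200 sessions keep about 20 requests in flight (2 replicas) and a burst to 400 sessions keeps about 40 in flight (4 replicas).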

Cost knobs to watch

- Tokens per completion. Treat prompt bloat as a budget item. Track growth weekly and prune long-lived context.

- Tool call time. Many agent costs are in tools, not tokens. Optimize API pagination, pull fewer fields, and cache authority lookups.

- GPU minutes. Run nightly capacity tests to keep batch size and tensor-parallel settings aligned with traffic shape. Autoscale with guardrails to avoid cold starts during business hours.
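
A sketch that splits one task's cost across the three knobs and flags week-over-week prompt growth. All unit prices and the 10 percent growth threshold are hypothetical inputs, not published rates; substitute your own contract prices and GPU costs, and set any component that does not apply (for example GPU minutes on a hosted API) to zero.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Prices:
    # Hypothetical unit prices; set any component that does not apply to zero.
    per_1k_input_tokens: float
    per_1k_output_tokens: float
    per_tool_second: float    # amortized cost of tool and API runtime
    per_gpu_minute: float     # for self-hosted model serving


def task_cost(tokens_in: int, tokens_out: int, tool_seconds: float,
              gpu_minutes: float, p: Prices) -> Dict[str, float]:
    """Break one task's cost into the three knobs so each can be attributed and tracked."""
    return {
        "tokens": tokens_in / 1000 * p.per_1k_input_tokens + tokens_out / 1000 * p.per_1k_output_tokens,
        "tools": tool_seconds * p.per_tool_second,
        "gpu": gpu_minutes * p.per_gpu_minute,
    }


def prompt_growth_alert(last_week_avg_tokens: float, this_week_avg_tokens: float,
                        max_growth: float = 0.10) -> bool:
    """True when average prompt size grew more than max_growth week over week."""
    return this_week_avg_tokens > last_week_avg_tokens * (1 + max_growth)


prices = Prices(per_1k_input_tokens=0.002, per_1k_output_tokens=0.008,
                per_tool_second=0.001, per_gpu_minute=0.0)
cost = task_cost(tokens_in=6000, tokens_out=500, tool_seconds=4.0, gpu_minutes=0.0, p=prices)
total = sum(cost.values())
```

Keeping the components separate, rather than a single total, is what lets you attribute spend by agent and business unit and see when tool time rather than tokens is driving cost.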

Concrete enterprise use cases that benefit now

IT automation

- New-hire onboarding: A Planner coordinates a credentials Specialist, a device Specialist, and a compliance Reviewer. The Executor files tickets in ITSM, provisions accounts via SCIM, and schedules orientation. Approvals gate admin role grants and VPN access.

- Patch and remediation: A vulnerability Specialist cross-references advisories with your asset inventory. The Reviewer checks impact windows. The Executor rolls out patches in waves with rollback. Guardrails block actions outside maintenance windows.

- Access requests: A Router triages requests, the Specialist verifies training status and manager approval, and the Executor applies the change through IAM APIs with just-in-time entitlements.
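
The rule that guardrails block patch actions outside maintenance windows can be expressed as a small time check. The `Window` format (a weekday plus UTC start and end times) is a hypothetical convention; a window that crosses midnight is checked as a late part on its start day and an early part on the following day.

```python
from dataclasses import dataclass
from datetime import datetime, time, timezone
from typing import List


@dataclass(frozen=True)
class Window:
    weekday: int   # Monday = 0 ... Sunday = 6, matching datetime.weekday()
    start: time    # UTC
    end: time      # UTC; earlier than start means the window crosses midnight


def in_window(ts: datetime, windows: List[Window]) -> bool:
    """True if the timezone-aware timestamp falls inside any maintenance window."""
    ts = ts.astimezone(timezone.utc)
    day, t = ts.weekday(), ts.time()
    for w in windows:
        if w.start <= w.end:
            if day == w.weekday and w.start <= t < w.end:
                return True
        else:
            # Crosses midnight: the late part is on w.weekday, the early part on the next day.
            if day == w.weekday and t >= w.start:
                return True
            if day == (w.weekday + 1) % 7 and t < w.end:
                return True
    return False


# Usage: one window from Saturday 22:00 to Sunday 04:00 UTC.
windows = [Window(weekday=5, start=time(22, 0), end=time(4, 0))]
saturday_night = in_window(datetime(2025, 6, 7, 23, 30, tzinfo=timezone.utc), windows)
sunday_early = in_window(datetime(2025, 6, 8, 3, 0, tzinfo=timezone.utc), windows)
monday_morning = in_window(datetime(2025, 6, 9, 10, 0, tzinfo=timezone.utc), windows)
```

The Executor would call a check like this immediately before each patch wave and refuse to proceed when it returns `False`.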

Customer operations

- Case triage and response: A Router classifies inbound messages, a retrieval Specialist assembles facts, and a response Specialist drafts replies. A Reviewer approves high-risk categories like legal or credit. The Executor updates CRM, schedules follow-ups, and posts public replies.

- Returns and RMAs: A Planner maps policy to steps, a fraud Specialist checks signals, and an operations Specialist books carrier pickups. Output rails ensure no private data leaks in customer emails.

- Knowledge refresh: A crawler Specialist proposes article updates; a Reviewer checks tone and accuracy. Guardrails enforce topic control to prevent out-of-policy advice.
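
A minimal output rail for customer emails: scrub text that looks like private data before the message is sent. The regular expressions are deliberately simple illustrations (email addresses, 13 to 16 digit card-like numbers, US-style phone numbers) and will miss many real PII formats, so treat this as a sketch of where the rail sits rather than a complete detector.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; they miss many real-world PII formats.
PATTERNS = [
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),           # 13 to 16 digits
    ("PHONE", re.compile(r"\b\d{3}[-. ]\d{3}[-. ]\d{4}\b")),      # US-style numbers
]


def redact(text: str) -> Tuple[str, List[str]]:
    """Replace matches with [LABEL]; return the clean text and which rules fired, for the safety log."""
    fired: List[str] = []
    for label, pattern in PATTERNS:
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            fired.append(label)
    return text, fired


email_draft = ("Hi Ana, we refunded card 4111 1111 1111 1111. "
               "Questions? Call 555-123-4567 or email ana.lopez@example.com.")
clean, fired = redact(email_draft)
```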

Finance back office

- Invoice processing: A document Specialist extracts fields, a reconciliation Specialist matches POs, and a Reviewer verifies exceptions over a threshold. The Executor posts entries and tags them for audit.

- Spend analysis: A retrieval Specialist aggregates transactions, a planning Specialist proposes savings opportunities, and a Reviewer checks category definitions. Output rails remove PII from dashboards.

- Close automation: A Planner sequences close tasks. Specialists prepare reconciliations and schedules. The Executor opens and closes periods and posts journal entries with human approvals on material adjustments.
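
For invoice processing, a sketch of how the reconciliation Specialist might route each invoice. The 2 percent price tolerance, the 1,000 review threshold, and the "exception" queue for small variances are hypothetical policy values, and this is a simplified two-way match (invoice against PO) rather than a three-way match that also checks goods receipts.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Dict


@dataclass(frozen=True)
class PurchaseOrder:
    po_number: str
    vendor: str
    amount: Decimal


@dataclass(frozen=True)
class Invoice:
    invoice_id: str
    po_number: str
    vendor: str
    amount: Decimal


def route_invoice(inv: Invoice, pos: Dict[str, PurchaseOrder],
                  tolerance: Decimal = Decimal("0.02"),
                  review_threshold: Decimal = Decimal("1000")) -> str:
    """Return 'post', 'exception', 'review', or 'reject' for one invoice (hypothetical policy)."""
    po = pos.get(inv.po_number)
    if po is None or po.vendor != inv.vendor:
        return "reject"                    # no matching PO for this vendor
    variance = abs(inv.amount - po.amount)
    if variance <= po.amount * tolerance:
        return "post"                      # within tolerance: the Executor posts and tags it
    if variance > review_threshold:
        return "review"                    # exception over the threshold: Reviewer must verify
    return "exception"                     # small exception: standard exceptions queue


pos = {
    "PO-1": PurchaseOrder("PO-1", "Acme", Decimal("10000.00")),
    "PO-2": PurchaseOrder("PO-2", "Globex", Decimal("5000.00")),
}
decisions = {
    inv.invoice_id: route_invoice(inv, pos)
    for inv in [
        Invoice("INV-A", "PO-1", "Acme", Decimal("10150.00")),   # 1.5% over PO
        Invoice("INV-B", "PO-1", "Acme", Decimal("12000.00")),   # 2,000 over PO
        Invoice("INV-C", "PO-2", "Globex", Decimal("5300.00")),  # 300 over PO
        Invoice("INV-D", "PO-9", "Acme", Decimal("800.00")),     # unknown PO
    ]
}
```

`Decimal` is used instead of `float` so that tolerance comparisons on currency amounts are exact.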

A 30-60-90 day rollout plan

Days 0-30: Prove value and lock basics

- Pick two pilots where the data is accessible and the savings are clear. Suggested pairs: IT onboarding plus case triage, or invoice processing plus knowledge refresh.

- Identity and access: Enable Agent IDs or equivalent service principals, define RBAC, and put secrets in a vault. Require short-lived tokens and conditional access.

- Guardrails: Wire input and output rails for PII redaction, topic control, and content safety. Set approval rules for external side effects.

- Observability: Stand up tracing, prompt versioning, and dashboards. Define SLOs for latency and success rates per use case.

- Success criteria: Set KPIs, for example a 30 percent handle time reduction or a 25 percent accuracy lift on invoice matching.

Days 31-60: Go multi-agent and integrate

- Expand to multi-step processes with Planner and Reviewer patterns. Capture plans as graphs with retries and compensating actions.

- Tooling integration: Connect ITSM, CRM, ERP, and your data warehouse. For each tool, define a schema, side effects, and an allowlist.

- Safety and change control: Add canary evals per rail. Introduce approval workflows for prompt changes and model version upgrades.

- Cost control: Instrument token usage, GPU minutes, and tool time. Add budgets with alerting. Tune batch sizes and speculative decoding (draft-and-verify) settings to hit P95 targets.

- Early distribution: Publish vetted agents to your internal directory or Agent Store with clear descriptions, permissions, and owner contacts.

Days 61-90: Harden for production and scale

- Reliability: Set error budgets. Add circuit breakers and bulkheads so one failing tool does not cascade across agents. Run chaos drills for tool outages.

- Compliance: Map controls to SOC 2, ISO 27001, or your regulatory framework. Ensure change logs, approvals, and audit artifacts are complete.

- Security posture: Pen test the agent surface. Attempt jailbreaks and prompt injection from untrusted data sources. Monitor rail hit rates over time.

- DR and capacity: Define RTO and RPO. Build hot standby capacity for the Planner pool. Pre-warm autoscaling to absorb known traffic spikes.

- Scale out use cases: Graduate the two pilots to GA. Start two more pilots in new domains. Establish an Agent Review Board to oversee new submissions to your store.
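
The circuit breakers mentioned under Reliability can be sketched in a few lines: after a run of consecutive failures the breaker opens and fails fast, then lets trial calls through after a cool-down. The thresholds are hypothetical and this version is not thread-safe; the idea is to wrap one breaker around each tool client so a failing tool does not tie up every agent that depends on it.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised instead of calling the tool while the breaker is open."""


class CircuitBreaker:
    """Open after N consecutive failures; allow trial calls after a cool-down (not thread-safe)."""

    def __init__(self, failure_threshold: int = 3, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None   # None means closed

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_after_s:
            return "half_open"                    # cool-down over: let a trial call through
        return "open"

    def call(self, fn: Callable[[], T]) -> T:
        if self.state == "open":
            raise CircuitOpenError("tool circuit open; failing fast")
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.state == "half_open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()     # (re)open and restart the cool-down
            raise
        self.failures = 0
        self.opened_at = None                     # any success closes the breaker
        return result


# Usage with a fake clock and a tool that times out.
now = [0.0]
breaker = CircuitBreaker(failure_threshold=2, reset_after_s=10.0, clock=lambda: now[0])

def erp_lookup() -> str:
    raise TimeoutError("ERP API timeout")

def attempt(fn: Callable[[], str]) -> str:
    try:
        return breaker.call(fn)
    except CircuitOpenError:
        return "fast-fail"
    except TimeoutError:
        return "timeout"

outcomes = [attempt(erp_lookup) for _ in range(3)]
now[0] = 10.0                                     # cool-down has elapsed
recovered = attempt(lambda: "ok")
```

After two timeouts the third call fails immediately without touching the ERP API; once the cool-down passes, a successful trial call closes the breaker again.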

Common pitfalls and how to avoid them

- One giant agent: Monolithic prompts get slow, expensive, and hard to maintain. Split by roles and use small models where possible.

- No human gates: The first time an agent changes a role or issues a refund without approval will be memorable. Gate external side effects.

- Prompt drift: Without versioning and approvals, well-meaning edits degrade quality. Treat prompts like code and use pull requests.

- Unbounded context: More context is not always more accuracy. Bound and label inputs. Cache reusable snippets.

- Invisible spend: Tool calls and silent retries will eat your budget. Instrument everything and put alerts on token and tool usage.

The bottom line

2025 delivered the missing pieces for enterprise agents. Microsoft brought identity, policy, orchestration, and distribution together. NVIDIA made safety and control modular and testable. OpenAI showed that agents can work with the tools you already have, even when APIs are imperfect. With a secure reference architecture, clear SLOs, and a 30-60-90 plan, you can move from demo to dependable outcomes. The organizations that operationalize agents now will set the standard for how work gets done next.