Citi’s September Pilot Marks the Agentic Enterprise Shift

Citi’s September pilot of autonomous agents inside Stylus Workspaces marks a real move from demos to production. See how browser agents and modern orchestration reshape enterprise rollouts, why the agent cost curve matters, and a concrete blueprint with KPIs to ship in Q4 2025.

The week the agents got real

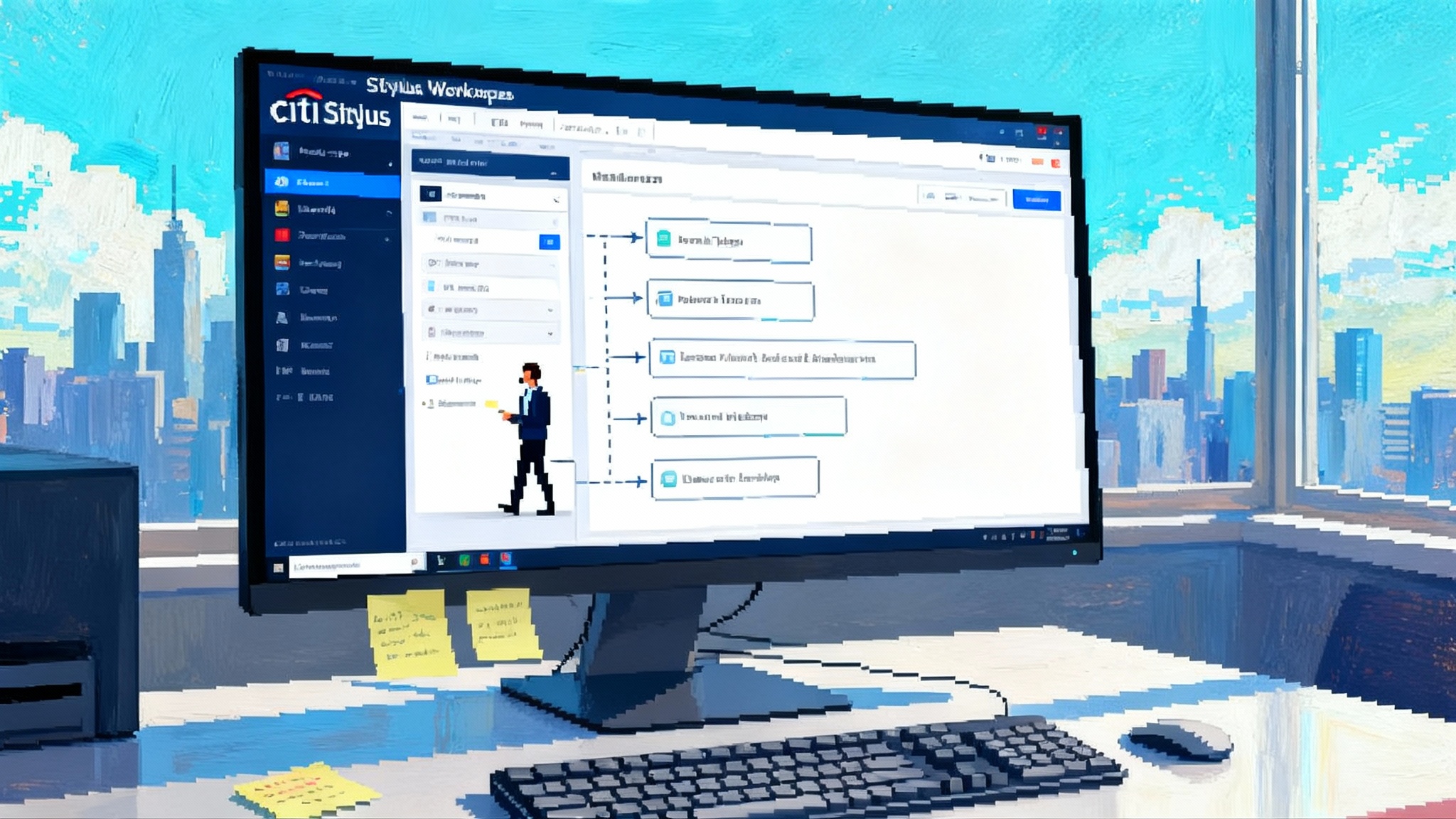

In September, Citi began piloting autonomous, multi-step AI agents inside Stylus Workspaces. The agents chain together research, writing, translation, and workflow updates across internal systems, then ask for confirmation before anything irreversible happens. Citi is not alone in experimenting with agents, yet its move is a useful inflection point because it combines three signals at once: agents are moving beyond demos into production environments, the browser is becoming a first-class execution surface, and the economics of longer, more capable agents are now front and center. Reporting on the pilot has already described its careful cost controls and its confirmations for sensitive actions.

Across financial services and other regulated industries, agent pilots look similar. A narrow set of workflows is selected. The agent is permissioned to act inside a defined sandbox. Risky actions require user confirmation. Every step is logged and attributable. And teams watch the runtime and token meter closely.

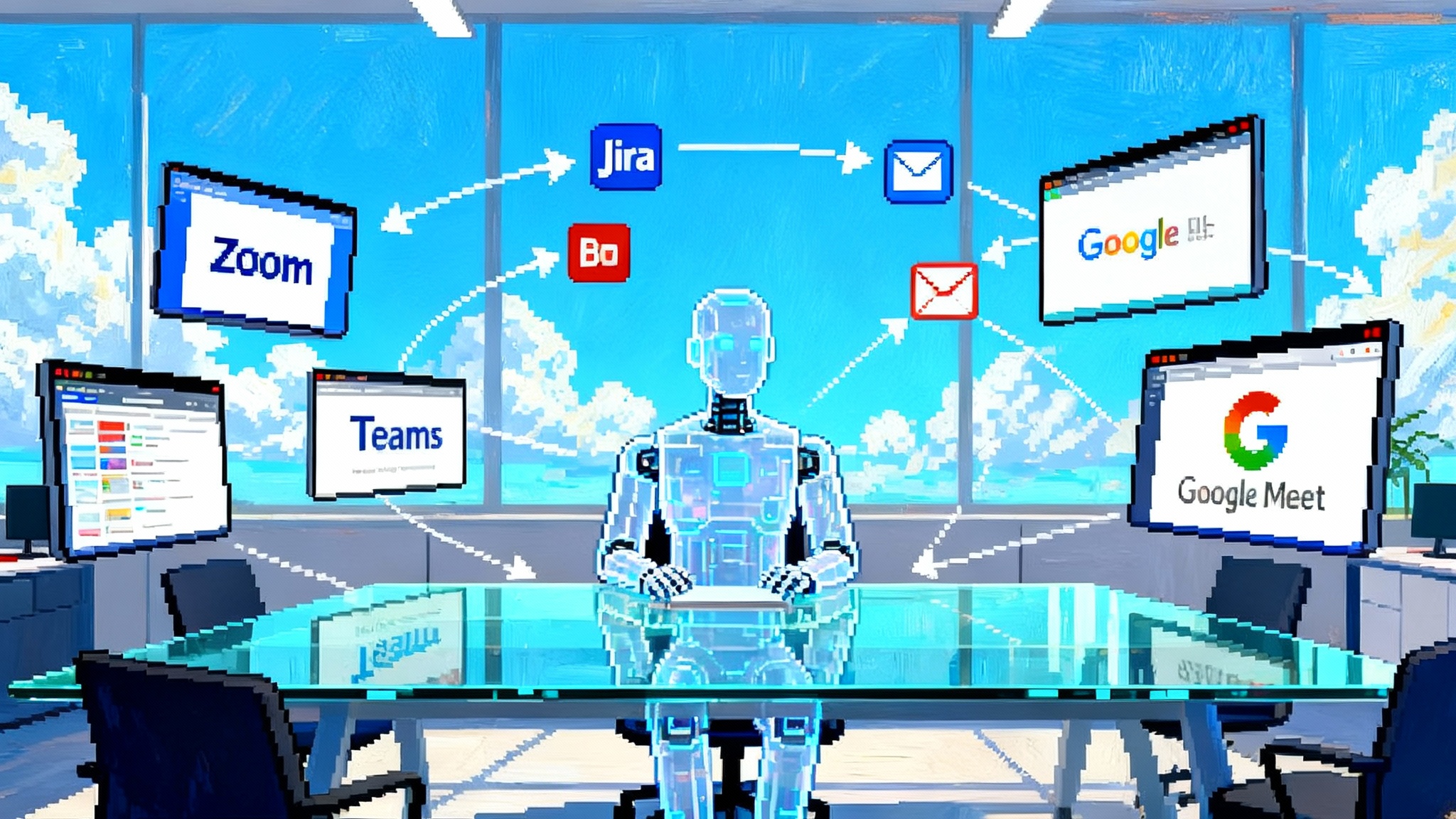

The emerging pattern: where agents live and how they are orchestrated

Two deployment patterns are converging.

-

Browser-level agents. The browser is becoming the universal runtime. Teams have watched previews of assistants that live in the sidebar, with the ability to read pages, click buttons, fill forms, and ask for confirmation on high-risk actions. Browser agents are powerful because they work where employees already live, across SaaS tools, intranets, and vendor portals. This expands what an agent can do without waiting on every vendor to publish an API. It also introduces new safety concerns, like prompt injection from web pages and the need for strong site-level permissions. For design guidance, see our take on enterprise browser agent design.

-

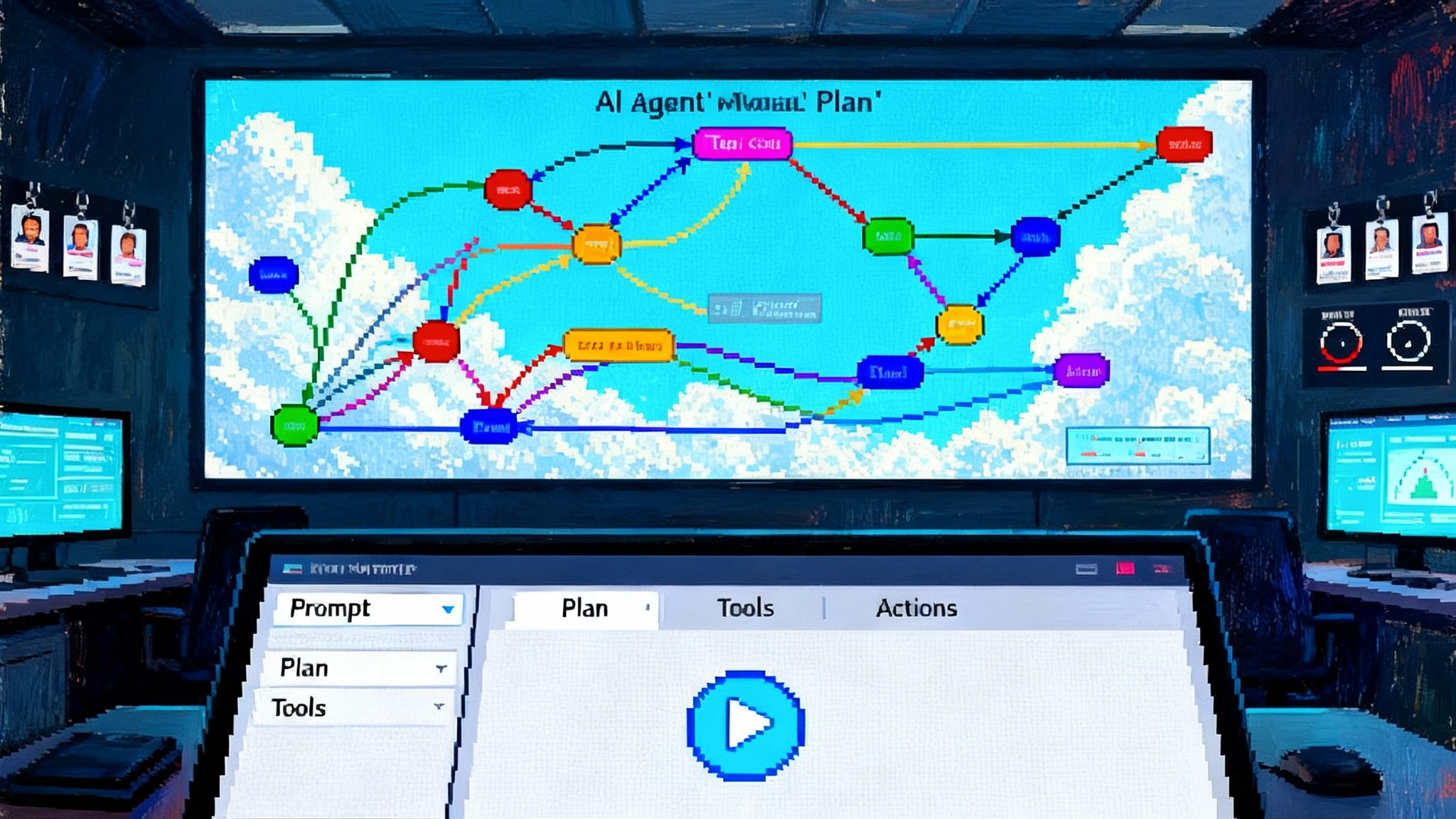

Vendor toolchains for orchestration. Orchestration structures tasks into steps, calls tools, handles retries, and logs everything. OpenAI’s Responses API is a good example of where orchestration is heading, with built-in tools, background execution for long jobs, and support for model context protocols that plug agents into enterprise systems without messy glue code, as outlined in the OpenAI Responses API update. For deeper patterns, review orchestration patterns for AI agents.

Put those together and you get what Citi and others are now piloting: agents that can operate at the browser layer when needed, but are orchestrated and governed by enterprise-grade pipelines that enforce controls and provide a complete audit trail.

Why the agent cost curve bites

If your proof of concept felt cheap, your production pilot may not. Here is why agent runs get expensive fast:

- Steps multiply context. Every additional step adds prompts, tool responses, retrieved documents, and screenshots. The agent carries part of that state forward, which increases tokens and time.

- Tools are not free. Each web browse, file search, retrieval call, or code-execution step is a separate compute event, often one that fans out into several calls, with its own cost and latency profile.

- Memory expands. To remain coherent, longer chains preserve reasoning tokens across requests. This is essential for quality but it has real cost.

- Replanning and retries. Good agents do not fail silently. They replan, try alternatives, and backtrack. That boosts success rates but adds more steps and tokens.

A useful mental model: cost per successful task = steps × average context per step × tool intensity. If any of those three grows faster than linearly, costs spike. In practice, spikes come from two places: web or file retrieval that drags large documents into context, and long-running chains that keep the reasoning window warm across dozens of actions. For mitigation tactics, use our agent cost control playbook.
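The mental model above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a pricing tool: the `RunProfile` fields, the blended `price_per_1k_tokens`, and the division by `success_rate` (so that failed runs are charged to the successful ones) are all assumptions added here, and every number is made up.

```python
from dataclasses import dataclass


@dataclass
class RunProfile:
    """Hypothetical inputs to the mental model; all values are illustrative."""
    steps: int                   # actions per run
    avg_context_tokens: float    # average tokens carried into each step
    tool_intensity: float        # multiplier >= 1.0 for tool fan-out per step
    price_per_1k_tokens: float   # assumed blended price, not a real rate card
    success_rate: float          # fraction of runs meeting acceptance criteria


def cost_per_run(p: RunProfile) -> float:
    """steps x average context per step x tool intensity, priced per 1k tokens."""
    tokens = p.steps * p.avg_context_tokens * p.tool_intensity
    return tokens / 1000 * p.price_per_1k_tokens


def cost_per_successful_task(p: RunProfile) -> float:
    """Failed runs still cost money, so spread their cost over the successes."""
    if not 0 < p.success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_run(p) / p.success_rate


# A short demo-style run versus a longer production-style chain.
demo = RunProfile(steps=5, avg_context_tokens=2_000, tool_intensity=1.0,
                  price_per_1k_tokens=0.01, success_rate=1.0)
pilot = RunProfile(steps=40, avg_context_tokens=6_000, tool_intensity=1.5,
                   price_per_1k_tokens=0.01, success_rate=0.8)

print(f"demo:  {cost_per_successful_task(demo):.2f} per successful task")
print(f"pilot: {cost_per_successful_task(pilot):.2f} per successful task")
```

With these made-up inputs the demo comes to about 0.10 per successful task and the pilot to about 4.50. The gap comes from all three factors growing at once, plus the cost of failed runs, not from any single one of them.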

This is why the most disciplined pilots bake in guardrails from day one. Citi’s pilot emphasizes cost controls and confirmations before sensitive actions, a pattern we expect to see everywhere that agents touch regulated workflows. The way to make that concrete is a set of budgets, gates, and circuit breakers that keep experiments from turning into surprise invoices.

Guardrails that make agents enterprise ready

Leading adopters are converging on a similar control plane. If you are moving from demo to production, plan on at least the following:

- Step budgets. Hard caps on total steps per run, per user per day, and per workflow. If a chain needs to go longer, the agent requests explicit approval with a short summary of why.

- Permissioned high-risk actions. Agents require human confirmation before any action that changes state outside the agent’s sandbox, for example sending email, posting to a CRM, placing an order, or scheduling meetings.

- Tiered risk policy. Define action classes: low risk, such as navigation and reading; medium risk, such as workspace edits; and high risk, such as purchases, emails, and data sharing. Tie each class to required safeguards and logging.

- Complete audit trails. Persist prompts, tool calls, retrieved artifacts, screenshots, confirmations, and diffs of any changed object. Make it searchable and tamper evident.

- Provenance and data boundaries. Tag every artifact with source, time, and policy context. Keep customer and regulated data in the right region and store. Apply automatic redaction and PII guardrails for logs and evals.

- Sandboxes, not superpowers. Let agents operate in constrained browser profiles or virtualized desktops with pre-approved credentials, stored secrets, and least-privilege permissions.

- Deterministic fallbacks. For brittle steps, prefer deterministic utilities such as template mappers, regex extractors, or form-filling micro-services when predictability is more valuable than creativity.

- Human in the loop by default. For early production, require confirmation at a set interval of steps, and for every action that has external impact.

- Red teaming and prompt-injection tests. Treat browser agents like you would treat an email gateway. Test them against injection, clickjacking, malicious forms, and dark patterns.
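As a rough illustration of how the tiered risk policy and the confirmation requirement from the list above might fit together, here is a minimal Python sketch. The action names, the `ACTION_RISK` table, and the `gate` function are hypothetical, and the choice to treat unknown actions as high risk is an assumption of this sketch, not something the pilots described here are known to do.

```python
from enum import Enum
from typing import Callable


class Risk(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical action names mapped to the three tiers.
ACTION_RISK = {
    "navigate": Risk.LOW,
    "read_page": Risk.LOW,
    "edit_workspace_doc": Risk.MEDIUM,
    "send_email": Risk.HIGH,
    "place_order": Risk.HIGH,
    "share_data": Risk.HIGH,
}


def classify(action: str) -> Risk:
    """Unknown actions default to HIGH so that new tools fail closed."""
    return ACTION_RISK.get(action, Risk.HIGH)


def gate(action: str, confirm: Callable[[str], bool], audit_log: list) -> bool:
    """Return True if the action may proceed, and record every decision."""
    risk = classify(action)
    approved = True
    if risk is Risk.HIGH:
        # Only high-risk actions interrupt the user in this sketch.
        approved = confirm(f"Agent wants to run '{action}'. Allow?")
    audit_log.append({"action": action, "risk": risk.name, "approved": approved})
    return approved


if __name__ == "__main__":
    log: list = []
    deny_all = lambda message: False  # stand-in for a real confirmation UI
    print(gate("read_page", deny_all, log))   # low risk, no confirmation needed
    print(gate("send_email", deny_all, log))  # high risk, blocked by the user
    print(log)
```

In a real deployment the `confirm` callback would be the user-facing prompt, and medium-risk actions would likely carry their own safeguards rather than passing through with only a log entry.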

A pragmatic blueprint for Q4 2025

Here is a template you can lift, adapt, and ship before year end.

- Pick two workflows that already burn hours. Aim for narrow, frequent, unglamorous work with clear baselines. Examples: compliance policy lookups and templated customer emails, or weekly competitor research briefs and CRM updates.

- Draw the map. For each workflow, document the target chain: inputs, tools, systems, high-risk actions, and expected outputs. Mark which steps are brittle and which are deterministic.

- Set budgets and gates. Before you write code, decide:

  - Max steps per run, soft and hard limit

  - Max tokens per step

  - Max total tool calls per run, especially browse and retrieval

  - Timebox for each run, for example three minutes of wall-clock time

  - Which actions require confirmation, and the exact confirmation text

- Instrument first, then scale. Build logging, traces, screenshots, and searchable metadata into the very first version. You will need this for debugging, compliance, and cost reports.

- Start in a sandboxed browser. Give the agent a dedicated profile with only the permissions and cookies it needs. Pre-approve domains for browsing. Keep secrets in a managed vault.

- Wire in orchestration. Use an orchestration layer that supports background jobs for long tasks, tool calling with state, and resumable runs. The point is not just getting an answer. The point is being able to explain how the answer happened.

- Establish a human confirmation cadence. Require explicit yes or no for any step that changes systems outside the sandbox. Keep the confirmation prompts short and high signal.

- Run daily evals. Create synthetic tasks that mirror production. Mix in failure modes like slow pages, login prompts, or partial data. Track pass rates and token usage per step.

- Put someone on agent SRE. Treat the agent like a service with SLOs. Triage failures, investigate spikes in cost, and tune steps, tools, or prompts daily.

- Publish a simple scorecard weekly. Show leadership and users three things: productivity delta versus baseline, cost per successful task, and safety posture. Keep it consistent and transparent.
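The budgets-and-gates step in the blueprint above could be enforced with a small circuit breaker like the following Python sketch. The `RunBudget` class, its field names, and every default limit are hypothetical placeholders rather than recommended values. The only number taken from the text is the three-minute timebox.

```python
import time
from dataclasses import dataclass, field


class BudgetExceeded(Exception):
    """Raised when a hard limit is hit and the run must stop."""


@dataclass
class RunBudget:
    # All limits are illustrative placeholders, not recommendations.
    soft_max_steps: int = 20
    hard_max_steps: int = 30
    max_tokens_per_step: int = 8_000
    max_tool_calls: int = 15
    timebox_seconds: float = 180.0  # the three-minute example from the text
    steps: int = 0
    tool_calls: int = 0
    started_at: float = field(default_factory=time.monotonic)

    def charge_step(self, tokens: int, tool_calls: int = 0) -> bool:
        """Record one step.

        Returns True once the soft step limit has been passed, which is the
        caller's cue to ask for approval. Raises BudgetExceeded on hard limits.
        """
        if tokens > self.max_tokens_per_step:
            raise BudgetExceeded(f"step used {tokens} tokens")
        self.steps += 1
        self.tool_calls += tool_calls
        if self.steps > self.hard_max_steps:
            raise BudgetExceeded("hard step limit reached")
        if self.tool_calls > self.max_tool_calls:
            raise BudgetExceeded("tool call limit reached")
        if time.monotonic() - self.started_at > self.timebox_seconds:
            raise BudgetExceeded("timebox exceeded")
        return self.steps > self.soft_max_steps


if __name__ == "__main__":
    budget = RunBudget(soft_max_steps=2, hard_max_steps=3)
    for step in range(1, 5):
        try:
            needs_approval = budget.charge_step(tokens=1_000, tool_calls=1)
            print(f"step {step}: ok, needs approval = {needs_approval}")
        except BudgetExceeded as exc:
            print(f"step {step}: stopped ({exc})")
            break
```

Separating the soft limit (ask for approval) from the hard limits (stop the run) keeps a long but legitimate chain from being killed silently, while still bounding worst-case spend.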

KPIs that actually predict success

Do not track only vanity metrics like total queries. Use measures that tie to quality, cost, and safety.

Quality and reliability

- Task success rate by workflow, counted only when outputs meet acceptance criteria

- Average steps per successful task, and the distribution tails

- Tool call error rate, for example browse failures, auth prompts, timeouts

- Replan frequency, how often the agent deviates from its initial plan

- Mean time to resolution for successful tasks, measured in wall-clock time

Cost and efficiency

- Tokens per step by tool type, and tokens per successful task

- Compute cost per successful task, bucketed by workflow

- Confirmation density, confirmations per 100 steps, a proxy for risk mix

- Cost of failed runs as a percent of total agent spend
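Several of the quality and cost measures above fall out of simple per-run records. The sketch below assumes a hypothetical `Run` record with four fields. It also assumes that "cost per successful task" means total spend, including failed runs, divided by the number of successes, which is one reasonable reading rather than a fixed definition.

```python
from dataclasses import dataclass


@dataclass
class Run:
    """Hypothetical per-run record, as might be pulled from agent traces."""
    succeeded: bool      # output met the workflow's acceptance criteria
    steps: int
    confirmations: int
    cost_usd: float


def scorecard(runs: list[Run]) -> dict[str, float]:
    """Compute a handful of the KPIs listed above for one workflow."""
    if not runs:
        raise ValueError("no runs to score")
    wins = [r for r in runs if r.succeeded]
    total_cost = sum(r.cost_usd for r in runs)
    total_steps = sum(r.steps for r in runs)
    failed_cost = sum(r.cost_usd for r in runs if not r.succeeded)
    return {
        "task_success_rate": len(wins) / len(runs),
        "avg_steps_per_successful_task":
            sum(r.steps for r in wins) / len(wins) if wins else float("nan"),
        # Assumption: failed runs are charged to the successful ones.
        "cost_per_successful_task":
            total_cost / len(wins) if wins else float("inf"),
        "confirmations_per_100_steps":
            100 * sum(r.confirmations for r in runs) / total_steps
            if total_steps else 0.0,
        "failed_run_cost_share":
            failed_cost / total_cost if total_cost else 0.0,
    }


if __name__ == "__main__":
    sample = [
        Run(succeeded=True, steps=10, confirmations=1, cost_usd=1.0),
        Run(succeeded=True, steps=20, confirmations=3, cost_usd=2.0),
        Run(succeeded=False, steps=20, confirmations=0, cost_usd=1.0),
    ]
    for name, value in scorecard(sample).items():
        print(f"{name}: {value:.2f}")
```

Computing these per workflow, rather than across the whole program, keeps one expensive or unreliable chain from hiding behind the averages of the others.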

Safety and compliance

- High-risk action approval rate and rejection rate

- Unintended side-effect rate, measured via diffs and user reports

- Prompt-injection detection rate in weekly eval suites

- Audit log completeness, percent of runs with all artifacts present

- Mean time to safe stop when a policy is triggered

Adoption and satisfaction

- Weekly active users in targeted groups, with cohort stickiness

- Assisted minutes saved per user, time recovered from baselines

- Net satisfaction, short pulse responses on usefulness and trust

When you publish the KPI deck, pair numbers with two qualitative sections: a one-page incident log that shows what went wrong and what you fixed, and three short win stories that capture value beyond the spreadsheet. This mix keeps the program grounded and defensible.

What to expect when you scale in 2026

If your Q4 pilots look like Citi’s, you will exit the year with hard data on cost, safety, and value. The next phase will expand breadth, automate confirmations for low-risk actions, and push more workflows from assistive to autonomous. You will also begin to separate the easy wins from the stubborn cases. Browser-level agents will keep spreading, particularly where vendor portals and legacy workflows resist API automation. Orchestration layers will get better at long-running jobs and resumable chains. Procurement will start asking for agent readiness in vendor contracts.

Most importantly, the agent cost curve will stop being a surprise. You will allocate budgets by workflow, not by team, and you will design chains that pay for themselves within a quarter. The pilots this fall are about proving that the combination of browser-level agency, disciplined orchestration, and hard cost guardrails can deliver real, durable value inside the constraints that matter. Citi’s pilot is the clearest sign yet that this formula works.