Firedancer Hits Mainnet: Solana’s New MEV and Throughput Math

Pieces of Firedancer are now live on Solana mainnet as top validators begin migrating. Here is how a true multi‑client network will change block production, fee capture, and reliability, plus what builders and operators should do next.

The news: Firedancer is no longer a thought experiment

On November 11, 2025, Figment, one of the largest institutional validators, said it had migrated its flagship Solana validator to Firedancer’s hybrid mainnet configuration, known as Frankendancer, and published early results showing higher rewards from better block packing and fee capture. Figment’s post also details scheduler modes and networking choices that matter for operators deciding how to switch. That is the signal many teams were waiting for: real operators are moving, not just testing. Figment’s migration write-up offers concrete numbers and a recipe for others to follow.

This caps a quiet but steady rollout that began at Breakpoint 2024 when Frankendancer was demonstrated live on mainnet. Since then, components of the new client have made their way into production clusters, with a growing set of validators running Frankendancer in leader rotation while the fully independent Firedancer client continues to mature.

Why client diversity matters right now

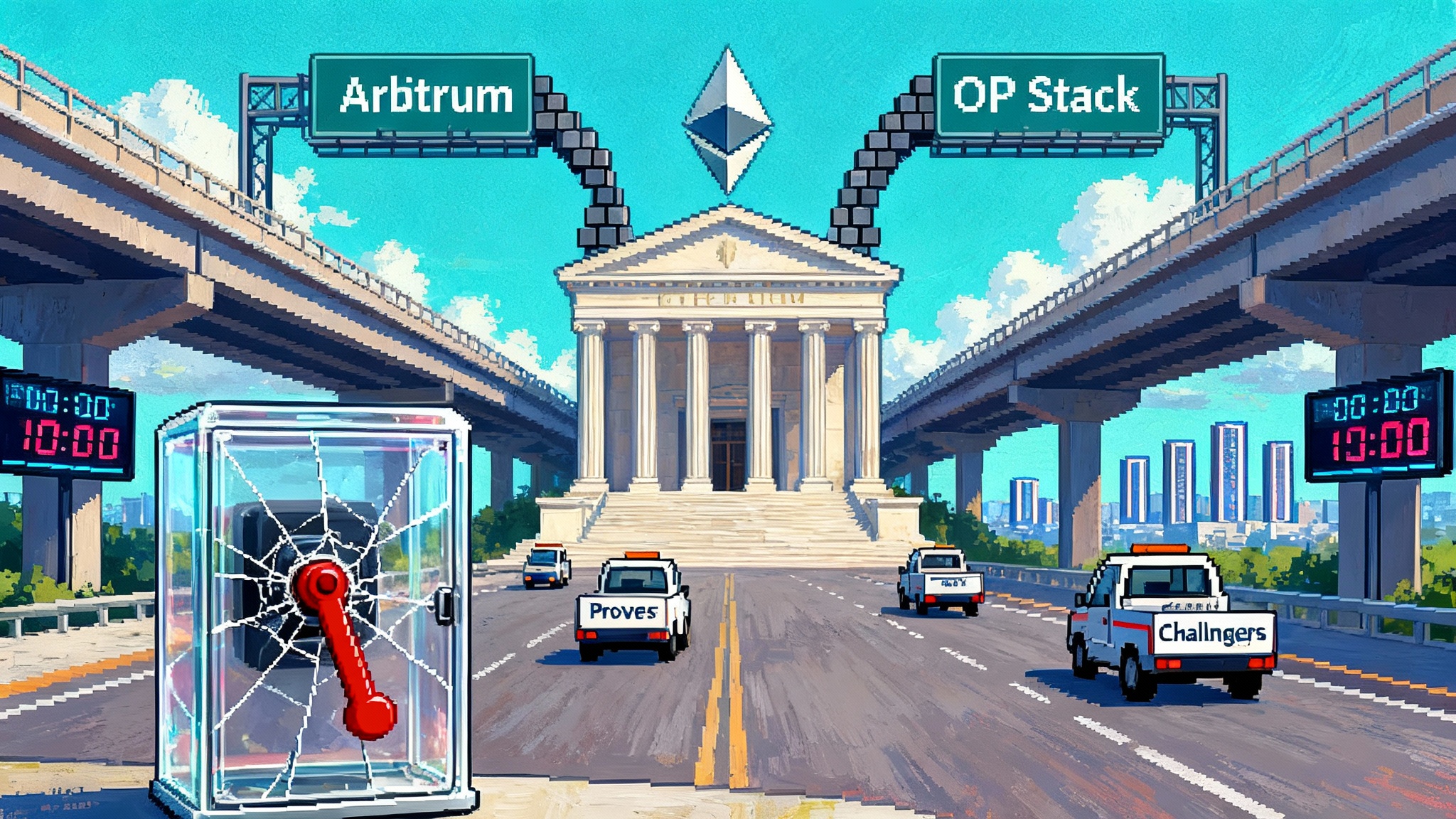

Solana did not lack speed. It lacked redundancy. Until recently, the overwhelming majority of stake ran Agave, the successor to the original Solana Labs client, or a fork of it, with many operators choosing Jito’s fork to capture maximal extractable value. That gave the ecosystem performance, but it also concentrated risk in a single family of code. If one logic bug, consensus oddity, or performance regression hits Agave and its forks in the same way, the chain inherits correlated instability.

A second client changes that equation. With independent code paths for networking, transaction verification, block scheduling, and pack logic, a bug in one client is less likely to be a bug in the other. Diversity in implementation is like railroads using different signal systems. Trains can still run if one system glitches. For a network that aims to be the transactional backbone for high frequency markets and consumer apps, reducing correlated failure is as valuable as raw throughput. As capital and users expand after developments like the Solana ETF with staking, the cost of correlated failures only rises.

There is another reason diversity matters now: incentives. When only one client dominates, competition tends to be over tweaks and flags. With two serious clients in the leader schedule, operators can choose architectures with different strengths. That competition pressures both sides to ship better scheduling, better filtering, and better block assembly. The result is a faster‑moving frontier for fees and reliability.

What a second client actually changes in block production

Think about block production as loading cargo onto a train that leaves every 400 milliseconds. The leader has a fraction of a second to choose what to pack and in which order. Packed well, the train carries valuable goods that pay high freight rates. Packed poorly, space is wasted, and profit is lost.

Frankendancer’s pack pipeline differs from Agave’s in a few practical ways:

- Networking and ingestion. Firedancer’s packet I/O and signature checks are engineered for low jitter and high parallelism. That can reduce the time between a transaction arriving at the leader and being eligible for packing.

- Scheduling. Operators can favor different scheduler modes that balance standard transactions with bundles and fee‑rich flows. Figment reported higher tips and priority fees after switching, which is consistent with a scheduler that makes better pack choices under pressure.

- Block density. By shaving microseconds in critical paths, leaders can fit more non‑vote transactions within the slot without blowing through timing thresholds, which translates into more total compute consumed and more total fee revenue.

One nuance: denser blocks are not free. Figment observed a modest increase in block duration and a rise in failed transactions at very high load, which is expected when leaders push closer to the slot’s practical limits. Operators must tune for cluster health, not just individual revenue. That is the new craft of block production in a multi‑client world.
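
To make the packing trade-off concrete, here is a minimal sketch of a greedy packer that ranks pending transactions by fee per compute unit and respects both a block ceiling and a per-account write ceiling. It is an illustration only, not Firedancer’s or Agave’s actual scheduler: the `Tx` type, the `pack_block` function, and the limits used in the example are all hypothetical, and real packers also handle bundles, read locks, and slot timing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    sig: str              # hypothetical transaction id
    fee_lamports: int     # priority fee plus tip offered
    compute_units: int    # compute units requested (assumed > 0)
    writes: frozenset     # accounts the transaction write-locks


def pack_block(pending, block_cu_limit, account_cu_limit):
    """Greedy pack: highest fee per compute unit first, within both limits."""
    used_total = 0
    used_per_account = {}
    packed = []
    by_density = sorted(
        pending, key=lambda t: t.fee_lamports / t.compute_units, reverse=True
    )
    for tx in by_density:
        # Skip anything that would overflow the block-wide ceiling.
        if used_total + tx.compute_units > block_cu_limit:
            continue
        # Skip anything that would overflow a write-locked account's ceiling.
        if any(
            used_per_account.get(a, 0) + tx.compute_units > account_cu_limit
            for a in tx.writes
        ):
            continue
        packed.append(tx)
        used_total += tx.compute_units
        for a in tx.writes:
            used_per_account[a] = used_per_account.get(a, 0) + tx.compute_units
    return packed, used_total


pending = [
    Tx("a", 5000, 100, frozenset({"amm"})),
    Tx("b", 3000, 100, frozenset({"amm"})),   # contends with "a" on the same account
    Tx("c", 2000, 100, frozenset({"book"})),
    Tx("d", 1000, 200, frozenset({"nft"})),   # too large for the remaining space
]
packed, used = pack_block(pending, block_cu_limit=300, account_cu_limit=100)
print([t.sig for t in packed], used)
```

In this toy run the second‑richest transaction is skipped because it contends for the same account as the richest one, which is the kind of choice a leader makes under pressure.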

The new economics of MEV on Solana with Firedancer in the mix

Maximal Extractable Value, or MEV, is the extra value a block producer can capture by ordering, including, or excluding transactions in a certain way. On Solana today, most MEV is coordinated through Jito’s block engine, which accepts bundles and pays tips. The early rap on Frankendancer was that it trailed Jito‑Solana in MEV capture. That is changing.

- Firedancer integrates with the Jito block engine. Operators can enable bundle ingestion and tip payment in their Firedancer configuration, aligning Frankendancer with the same MEV order flow that powers Jito. Jito’s own documentation includes a Firedancer setup guide with the key parameters for bundle support, clarifying that Firedancer and Jito are not mutually exclusive. See the Jito guide for Firedancer setup.

- Scheduler modes matter. Firedancer exposes modes that tilt toward fee maximization, toward general performance, or a balance. As more stake migrates, we should expect a race to the most profitable stable configuration, not simply the most aggressive one.

- Fee markets are getting richer. The combination of priority fees, tips, and better pack logic means that the marginal value of a microsecond inside the slot is rising. That creates a new calculus for hardware, networking routes, and even private relay networks that promise lower latency between block engine and leaders.

The takeaway is not that MEV gets bigger in absolute terms. It is that the distribution of fee and tip revenue across validators can shift as Frankendancer earns a seat in leader schedules and as its integration with MEV order flow tightens. Operators that once avoided Frankendancer for fear of missing MEV are beginning to reconsider.

From Frankendancer to full Firedancer: what is live and what is next

It helps to separate the two names:

- Frankendancer is a hybrid that swaps in Firedancer’s fast networking and block builder while leaving runtime and consensus to Agave. It is designed for production. That is what many validators are running today on mainnet.

- Firedancer, the full client, is a clean room implementation in C. It has been running on testnet and on mainnet in non‑voting configurations while the team drives conformance and safety work. Full voting participation follows once the client clears audit, interoperability, and performance gates at cluster scale.

On the protocol side, Solana’s compute budget is also evolving. In July 2025 the network raised the per‑block compute ceiling to relieve congestion under peak load, and there is an open path to lift it further and even make it dynamic after the next consensus upgrade. The point is not to chase headline throughput. It is to let well provisioned leaders fill slots more efficiently while the network retains guardrails that keep slower validators in the loop.

What this unlocks for 2026

High frequency DeFi

- Faster, denser blocks reduce slippage for order book markets and improve fill quality under stress. When the cluster consistently fits more compute per slot and leaders pack intelligently, price discovery looks more like an exchange and less like a congested queue. For context on competitive dynamics, see the coverage of Solana‑speed EVM competition.

- Auctions and batch systems can run more often with tighter windows. That benefits liquidations, rebalance events, and time‑sliced market makers.

Games

- Real‑time mechanics, such as on‑chain state updates tied to frame ticks or turns that settle in sub‑second windows, become more practical when the tail of block times shortens and failed transaction rates drop. That does not require a million transactions per second; it requires reliability within tens of milliseconds in the parts of the slot that game logic depends on.

Consumer apps

- Payment streams and loyalty updates benefit from consistent inclusion times and predictable fees. Denser blocks with better scheduling should reduce the frequency of the budget‑exceeded errors that plague crowded moments, and those errors are what users remember. Growth from recent USDC checkout integrations should compound when inclusion becomes more predictable.

The common thread is not only raw speed. It is predictability. A second client plus better scheduling equals more consistent inclusion for the same unit of fee, which is what builders can design around.

A short playbook for validators

- Run Frankendancer on at least one production validator. Shadow it behind your main node if needed. Measure slot wins, missed credits, block duration, and reward deltas across epochs. If you operate a large stake pool, start with a minority node to avoid correlated risk during tuning.

- Enable Jito bundle support in Firedancer and test scheduler modes. Measure changes in tips, priority fee capture, and non‑vote transactions per block. Focus on revenue per compute unit, not only headline reward rate. It is a better indicator of pack quality.

- Revisit networking. Latency variance kills pack performance. Whether you use public routes, direct peering, or specialized backbones, instrument your path between block engine, leader, and peers. Use consistent telemetry to decide if a dedicated route improves vote latency and reduces missed credits without creating a single point of failure.

- Hardware discipline over headline specs. Modern CPUs with strong memory bandwidth and Non‑Uniform Memory Access awareness, fast NVMe storage for ledger writes, and tuned kernel networking typically beat raw core counts in this workload. Test with realistic ledger replay and pack profiles, not just synthetic benchmarks.

- Keep an Agave or Jito fallback warm. Multi‑client does not mean single‑client heroics. You want rapid rollbacks when cluster conditions change.
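
The revenue‑per‑compute‑unit metric from the playbook can be sketched in a few lines. This is a minimal example under stated assumptions: the record fields (`compute_units`, `priority_fees`, `tips`) and the sample figures are hypothetical, and in practice the inputs would come from your own block and reward telemetry.

```python
def revenue_per_cu(blocks):
    """Lamports of priority-fee and tip revenue per compute unit consumed."""
    total_cu = sum(b["compute_units"] for b in blocks)
    if total_cu == 0:
        return 0.0
    total_revenue = sum(b["priority_fees"] + b["tips"] for b in blocks)
    return total_revenue / total_cu


# Hypothetical leader blocks before and after a scheduler change.
before = [
    {"compute_units": 40_000_000, "priority_fees": 20_000_000, "tips": 8_000_000},
    {"compute_units": 30_000_000, "priority_fees": 5_000_000, "tips": 2_000_000},
]
after = [
    {"compute_units": 45_000_000, "priority_fees": 25_000_000, "tips": 11_000_000},
    {"compute_units": 35_000_000, "priority_fees": 9_000_000, "tips": 3_000_000},
]

delta = revenue_per_cu(after) - revenue_per_cu(before)
print(f"before={revenue_per_cu(before):.3f} after={revenue_per_cu(after):.3f} delta={delta:+.3f}")
```

Normalizing by compute units separates "the market was busier" from "the packer chose better", which a headline reward rate cannot do on its own.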

A short playbook for builders

- Budget compute with the new ceilings in mind, but keep write locks minimal. Most real gains come from raising the amount of parallelizable work per block. Avoid account hot spots, break large writes into independent pieces, and use program‑derived addresses to spread contention.

- Make fees adaptive. Use fee strategies that react to slot conditions instead of static priority bumps. The packers are getting smarter; your transactions should too.

- Design for bursty inclusion. Even with better scheduling, inclusion will cluster around busy leaders. Engineer retries, preflights, and user interfaces that treat a failed attempt as a transient, not a fatal error.

- Embrace data locality. Wherever possible, co‑locate read heavy state and aim for fewer cross program invocations per user action. It reduces your exposure to compute spikes.
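
As a rough sketch of the adaptive fee and retry advice above, the helpers below choose a priority fee from a percentile of recently observed fees and build an escalating retry schedule. The function names, the 75th‑percentile default, the growth factor, and the delays are all illustrative assumptions. The fee samples could come from the Solana JSON‑RPC method `getRecentPrioritizationFees`, but here they are hard‑coded so the example stays self‑contained.

```python
def nearest_rank(values, pct):
    """Nearest-rank percentile of a non-empty list; pct is an integer in 1..100."""
    ordered = sorted(values)
    rank = max(1, (pct * len(ordered) + 99) // 100)  # integer ceil(pct * n / 100)
    return ordered[rank - 1]


def adaptive_priority_fee(recent_fees, pct=75, floor=1, cap=1_000_000):
    """Pick a fee (micro-lamports per compute unit) from recent observations."""
    if not recent_fees:
        return floor
    return min(cap, max(floor, nearest_rank(recent_fees, pct)))


def retry_schedule(base_fee, attempts=4, fee_growth=1.5, base_delay_s=0.4, cap=1_000_000):
    """Return (delay_seconds, fee) pairs: wait longer and bid more on each retry."""
    schedule = []
    for i in range(attempts):
        delay = 0.0 if i == 0 else base_delay_s * 2 ** (i - 1)
        fee = min(cap, int(base_fee * fee_growth ** i))
        schedule.append((delay, fee))
    return schedule


# Hypothetical recent fee samples, including one outlier from a crowded slot.
recent = [0, 100, 200, 5000, 300, 400, 250, 150]
fee = adaptive_priority_fee(recent)
for delay, bid in retry_schedule(fee):
    print(f"wait {delay:.1f}s then send with priority fee {bid}")
```

Using a percentile rather than the maximum keeps a single outlier slot from dragging every bid upward, while the cap bounds what a retry loop can spend.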

The remaining bottlenecks that could cap gains

- Compute unit policy. Raising block ceilings helps, but it is not a magic wand. Max Writable Account Units, which caps the compute that can write to a single account within one block, and other per‑block or per‑transaction limits still gate parallelism. If these remain tight while Max Block Units rise, some of the capacity increase will be stranded.

- Networking fairness. As more operators optimize their routes, the network must balance low latency with open access. If specialized backbones or private relays become de facto requirements for good performance, the ecosystem will need clear norms to prevent a two‑tier validator set.

- Execution hot spots. Popular programs that lock the same state can throttle packers even with higher compute ceilings. Program developers should continue to refactor around narrower writes and transactional sharding patterns.

- Leader variability. The slowest leaders still set the tempo for user experience outliers. Multi‑client competition should reduce the tail, but operators that cannot meet the cluster’s median timing will cause occasional hiccups. Monitoring and reputation systems that encourage upgrades without pushing smaller validators out will matter.
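
The "stranded capacity" point can be shown with a little arithmetic. The sketch below is a simplified model, not protocol code: `usable_compute` is a hypothetical helper, the 48M and 60M block ceilings and the 12M per‑account ceiling are illustrative values that should be checked against current cluster parameters, and it assumes demand for one hot piece of state splits evenly across its shards.

```python
def usable_compute(block_limit, per_account_limit, hot_demand, other_demand, shards=1):
    """Compute units a block can actually use when one piece of state is hot.

    hot_demand:   CU of transactions that write the hot state, assumed to be
                  split evenly across `shards` separate accounts.
    other_demand: CU of transactions on uncontended accounts, assumed never
                  to hit the per-account ceiling.
    """
    per_shard = hot_demand / shards
    hot_used = shards * min(per_shard, per_account_limit)
    return min(block_limit, hot_used + other_demand)


PER_ACCOUNT = 12_000_000   # illustrative per-account write ceiling
HOT, OTHER = 40_000_000, 20_000_000

# One hot account: raising the block ceiling changes nothing.
old = usable_compute(48_000_000, PER_ACCOUNT, HOT, OTHER)
new = usable_compute(60_000_000, PER_ACCOUNT, HOT, OTHER)

# Same demand with the hot state split across four accounts.
sharded = usable_compute(60_000_000, PER_ACCOUNT, HOT, OTHER, shards=4)

print(old, new, sharded)
```

In this model the higher block ceiling only pays off once the hot state is sharded, which is why refactoring around narrower writes matters as much as the ceiling itself.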

How to measure whether this is working

- Stake share on Frankendancer. Watch the percentage of stake and number of distinct operators running Frankendancer. Diversity by operator and geography is the real hedge.

- Leader schedule coverage. More Frankendancer leaders across epochs should correlate with lower failed transaction rates during peak windows if scheduling is the reason users see improvements.

- Revenue composition. If Firedancer’s pack logic is working as designed, validators should see a higher share of tips and priority fees relative to inflation rewards, even if headline reward rates move with market activity.

- Cluster latency distribution. The median slot time matters less than the 95th percentile under load. That tail is where user trust is won or lost.
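
Two of the metrics above, tail slot time and revenue composition, are easy to compute once the raw numbers are collected. The following is a minimal sketch with hypothetical helper names and made‑up sample data; it uses a nearest‑rank percentile, which is one of several reasonable definitions.

```python
def nearest_rank(values, pct):
    """Nearest-rank percentile of a non-empty list; pct is an integer in 1..100."""
    ordered = sorted(values)
    rank = max(1, (pct * len(ordered) + 99) // 100)  # integer ceil(pct * n / 100)
    return ordered[rank - 1]


def slot_time_summary(slot_ms):
    """Median and 95th-percentile slot times in milliseconds."""
    return {"p50": nearest_rank(slot_ms, 50), "p95": nearest_rank(slot_ms, 95)}


def fee_share(priority_fees, tips, inflation_rewards):
    """Fraction of total rewards that came from priority fees and tips."""
    total = priority_fees + tips + inflation_rewards
    return (priority_fees + tips) / total if total else 0.0


# Hypothetical sample: most slots land on time, two run long under load.
slot_ms = [400] * 18 + [650, 900]
summary = slot_time_summary(slot_ms)
share = fee_share(priority_fees=30, tips=20, inflation_rewards=50)
print(summary, share)
```

Here the median looks healthy while the 95th percentile exposes the slow slots, which is the gap the article argues users actually feel.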

The bottom line

Solana is entering its multi‑client era. That is not a slogan. It is a different operating model for the chain. With Frankendancer in production and full Firedancer on deck, block production is becoming a competitive craft rather than a single‑tool trade. The early data from major operators says the switch can pay for itself in both reliability and revenue if you do the engineering work. The next twelve months will be about turning that promise into durable practice: lifting ceilings without breaking fairness, capturing fees without sacrificing liveness, and building apps that feel instant because the network is predictably fast, not just occasionally fast. For builders planning the next wave of Solana‑native experiences, see how capital formation intersects with validator incentives in our report on the Solana ETF with staking, then pressure‑test your user flows against the inclusion patterns you expect in a two‑client network.