Inside Citi’s 5,000‑User AI Agent Pilot and the Enterprise Playbook

Citi just pushed autonomous AI agents from demo to production with a 5,000-user pilot. See how the stack, controls, and unit economics work in practice and what it signals for Fortune 500 rollouts.

The moment autonomous agents met a Tier‑1 bank

Today’s most important AI news may not be a flashy model release. It is a controlled field test inside a highly regulated institution. Citigroup has begun a 5,000‑user pilot of agentic workflows on its internal platform, giving staff single‑prompt access to multi‑step tasks like research packs, profile compilation, and translation across internal and external sources. The bank is running the pilot for several weeks and closely tracking performance, cost, and operational risk. The capabilities are powered by models such as Google’s Gemini and Anthropic’s Claude and orchestrated through the firm’s own workspace layer. That is not a hackathon. It is production. See the WSJ on Citi's 5,000‑user pilot.

This move is a signal that autonomous agents are crossing from consumer novelty to enterprise workflow. It also provides an early blueprint for how large firms will actually deploy agents at scale. Below, we break down the stack, the control plane, and the economics, then map Citi’s approach to recent product moves from OpenAI and Anthropic. We close with a 90‑day implementation checklist and a look at the compliance and procurement patterns that will shape adoption across the Fortune 500.

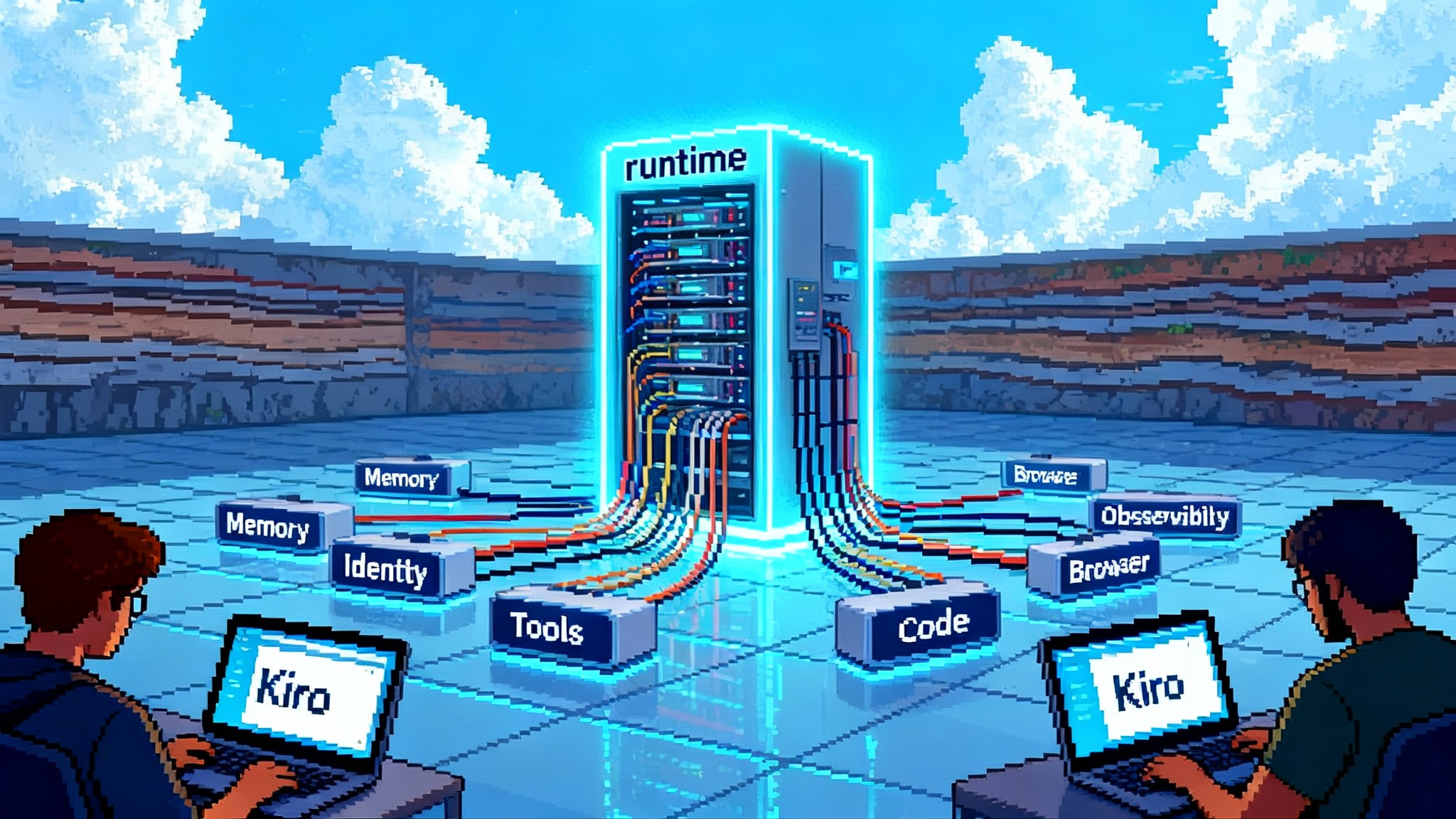

Architecture: how an enterprise agent actually runs

Think of Citi’s pilot as three layers that sit inside a zero‑trust perimeter and talk only through controlled interfaces.

1) The reasoning and planning layer

- Base models: At least two frontier models are in play for planning and tool use. Using multiple models is not only about quality. It gives the bank hedging power on availability, pricing, and vendor risk. It also lets teams choose the right model for the job. Short reasoning tasks may run on a faster, lower‑cost model. Long‑horizon tasks that need multi‑step planning can route to a higher‑accuracy model.

- Orchestration: The bank’s workspace coordinates tool calls, memory, and stepwise plans. In practice, that means an agent can draft a plan, call a retrieval tool, summarize, then call a translation tool, and finally prepare an output formatted for a specific internal template. The orchestration engine enforces timeouts, retries, and backoff to prevent runaway loops.
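
The retry and backoff behavior described above can be sketched in a few lines. This is a minimal illustration, not Citi's implementation: `run_with_retries`, `StepFailed`, and the default limits are hypothetical names and values, and each step is assumed to enforce its own timeout and raise when it expires.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class StepFailed(Exception):
    """Raised when a step exhausts its retry budget (hypothetical name)."""


def run_with_retries(
    step: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run one agent step with a hard attempt cap and capped exponential backoff."""
    last_error: Optional[Exception] = None
    for attempt in range(max_attempts):
        try:
            return step()
        except Exception as exc:  # a real engine would catch narrower error types
            last_error = exc
            if attempt < max_attempts - 1:
                delay = min(max_delay, base_delay * 2 ** attempt)
                # Jitter spreads retries out so parallel runs do not retry in lockstep.
                sleep(delay * random.uniform(0.5, 1.0))
    # The attempt cap is what prevents a runaway loop.
    raise StepFailed(f"gave up after {max_attempts} attempts") from last_error
```

The `sleep` parameter is injectable so the policy can be tested without real waiting.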

2) The tool and data layer

- Connectors: Agents do not have blanket access. They call specific tools via scoped connectors: internal search, policy libraries, document stores, ticketing, or knowledge bases. External access is typically brokered through secure egress with URL allowlists and proxy inspection.

- Memory and scratchpads: Stateless prompts are unreliable for long tasks. The pilot likely uses short‑term scratchpads for subplans and a compact task memory persisted in the orchestration layer. Anything durable flows into governed stores where retention and legal hold policies apply.

- Output validators: Deterministic checks validate formats and required fields. For example, a research pack must include source lists, date stamps, and a confidence note. Validators sit between the agent and the user so that malformed outputs are caught without human review time.
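
A deterministic validator for the research pack example above might look like the following sketch. The `ResearchPack` fields and the error strings are assumptions made for illustration; the source only says that a pack must carry source lists, date stamps, and a confidence note.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class ResearchPack:
    """Hypothetical output shape for a research pack."""
    title: str
    sources: list[str] = field(default_factory=list)
    as_of: str = ""            # ISO date stamp such as "2025-01-31"
    confidence_note: str = ""


def validate_research_pack(pack: ResearchPack) -> list[str]:
    """Return a list of problems; an empty list means the pack passes."""
    errors = []
    if not pack.sources:
        errors.append("missing source list")
    try:
        date.fromisoformat(pack.as_of)
    except ValueError:
        errors.append("missing or malformed date stamp")
    if not pack.confidence_note.strip():
        errors.append("missing confidence note")
    return errors
```

Because the checks are plain code rather than a model call, they cost nothing in tokens and give the same verdict on every run.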

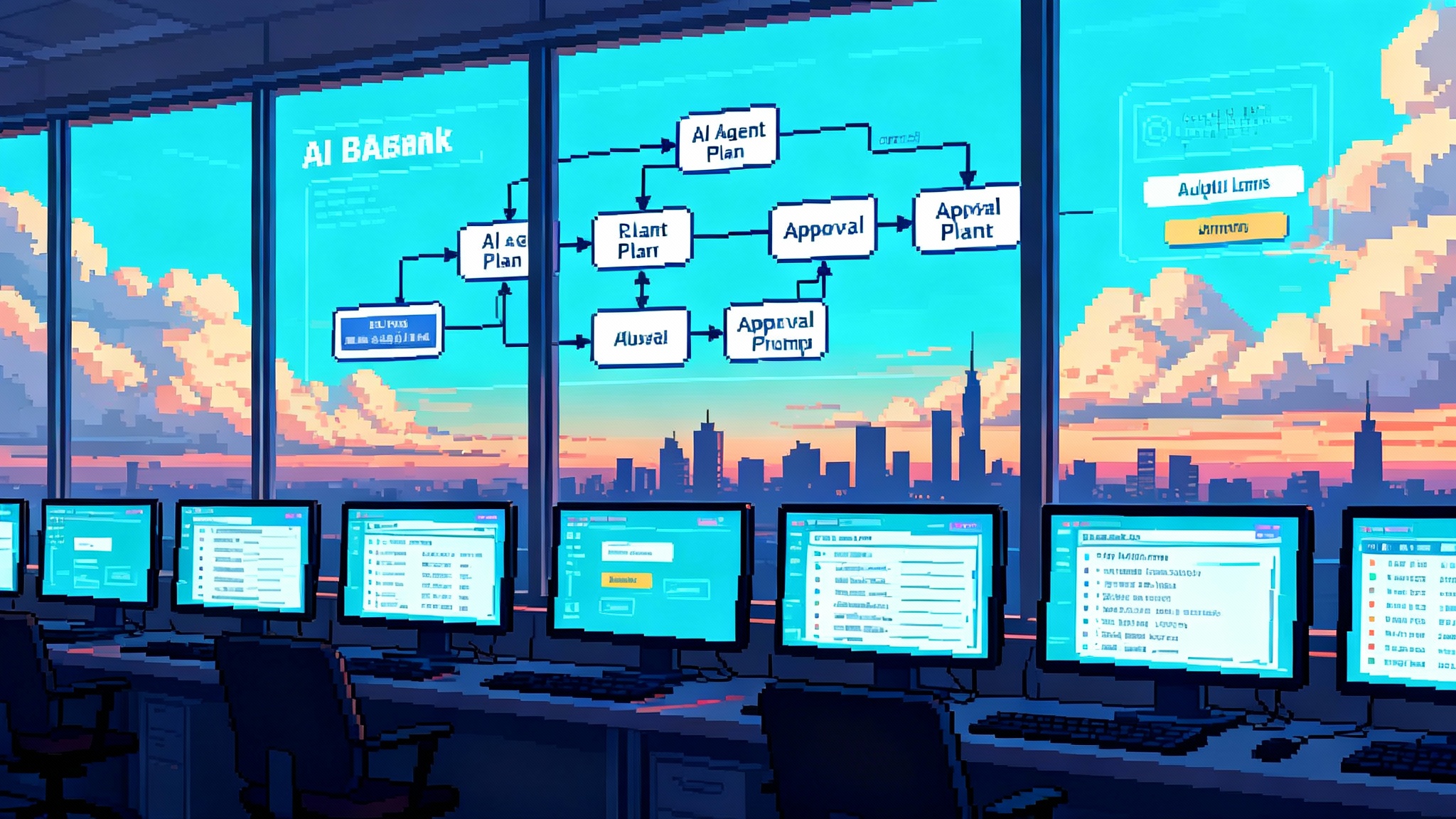

3) The control plane

- Identity and permissions: Every agent run executes as a service principal tied to a human user. Effective permissions are the intersection of user entitlements and the agent’s own role. That prevents elevated access through delegation.

- Human in the loop: Any step with external or irreversible side effects requires confirmation. Think emails to clients, postings to external sites, policy changes, or expense approvals. The agent marks a pending action and waits for sign‑off.

- Guardrails and content filters: Prompt and response filters screen for secrets, PII, and policy violations. High‑risk prompts are blocked or remediated. Tool call results can be masked if they would otherwise expose sensitive terms to the model context.

- Observability: Every state transition is logged with trace IDs. You can reconstruct what the agent saw, which tools it called, which model version responded, and who confirmed the action. That yields auditable trails for internal audit and regulators.
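
Two of the controls above, intersected permissions and confirmation on side effects, reduce to a small policy check. The sketch below is illustrative only: the action names, `SIDE_EFFECT_ACTIONS`, and the `Decision` type are hypothetical, and a production policy engine would load them from configuration.

```python
from dataclasses import dataclass

# Hypothetical actions with external or irreversible side effects.
SIDE_EFFECT_ACTIONS = {
    "send_external_email",
    "post_external",
    "change_policy",
    "approve_expense",
}


def effective_permissions(user_entitlements: set[str], agent_role: set[str]) -> set[str]:
    """The agent may only do what BOTH the user and the agent role allow."""
    return user_entitlements & agent_role


@dataclass(frozen=True)
class Decision:
    allowed: bool
    needs_confirmation: bool
    reason: str


def authorize(action: str, user_entitlements: set[str], agent_role: set[str]) -> Decision:
    if action not in effective_permissions(user_entitlements, agent_role):
        return Decision(False, False, "outside effective permissions")
    if action in SIDE_EFFECT_ACTIONS:
        # Mark the action as pending and wait for human sign-off.
        return Decision(True, True, "side effect: waiting for human sign-off")
    return Decision(True, False, "read-only or internal action")
```

Taking the intersection means delegation can never widen access: an agent role that allows translation does not help a user who lacks that entitlement, and the reverse also holds.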

Governance: what makes this bank‑grade

Auditability and explainability

- Replayability: The orchestration layer stores plans, intermediate reasoning summaries, and tool responses so an auditor can replay a run. Storing full tokens verbatim is often limited for privacy, so teams persist structured summaries and hashes, plus full logs in a secure vault when required by policy.

- Attribution: Every action is attributable to a human requester, not only to the agent. That is essential for accountability in regulated environments. For a deeper dive on policy design, see our enterprise AI governance framework.
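
One way to persist structured summaries and hashes without storing raw content in the main log is sketched below. The field names and the `record_event` and `matches_vaulted_copy` helpers are assumptions for illustration, not a description of the bank's schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


def content_hash(payload: str) -> str:
    """SHA-256 digest of the raw content, hex encoded."""
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class TraceEvent:
    trace_id: str
    step: int
    requester: str        # the human the run is attributed to
    tool: str
    model_version: str
    summary: str          # structured summary, not verbatim tokens
    response_sha256: str  # fingerprint of the raw tool or model response


def record_event(trace_id: str, step: int, requester: str, tool: str,
                 model_version: str, summary: str, raw_response: str) -> str:
    """Build one log line; the raw response itself is not written to the log."""
    event = TraceEvent(trace_id, step, requester, tool, model_version,
                       summary, content_hash(raw_response))
    return json.dumps(asdict(event), sort_keys=True)


def matches_vaulted_copy(event_json: str, vaulted_response: str) -> bool:
    """During replay, confirm the vaulted raw response is the one that was logged."""
    return json.loads(event_json)["response_sha256"] == content_hash(vaulted_response)
```

An auditor can replay the run from the summaries, then pull the full response from the vault only when policy requires it and verify that it has not changed.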

Data access controls

- Scope by default: Agents run with the narrower of two scopes, the human user's entitlements or the agent role, whichever is more restrictive. Read access to sensitive datasets remains fetch‑only through prebuilt queries that redact fields by policy. Write access is gated through service endpoints with policy checks.

- External data hygiene: External pages and files are fetched through safe renderers that strip scripts, iframes, and trackers before content enters a prompt. For private vendor sites, legal review establishes what the site's terms permit, including whether page snapshots may be stored.
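
A safe renderer can be as simple as a text extractor that drops everything inside script and iframe elements. The sketch below uses only Python's standard `html.parser`; `SafeTextExtractor`, `render_safe`, and the blocked tag set are hypothetical names, and a production renderer would also handle documents, redirects, and size limits.

```python
from html.parser import HTMLParser

# Elements whose content must never reach a prompt (assumed list).
BLOCKED_TAGS = {"script", "iframe", "style", "noscript"}


class SafeTextExtractor(HTMLParser):
    """Collects visible text and skips anything nested in a blocked element."""

    def __init__(self) -> None:
        super().__init__(convert_charrefs=True)
        self._blocked_depth = 0
        self._chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in BLOCKED_TAGS:
            self._blocked_depth += 1

    def handle_endtag(self, tag):
        if tag in BLOCKED_TAGS and self._blocked_depth > 0:
            self._blocked_depth -= 1

    def handle_data(self, data):
        if self._blocked_depth == 0 and data.strip():
            self._chunks.append(data.strip())

    def text(self) -> str:
        return "\n".join(self._chunks)


def render_safe(html: str) -> str:
    parser = SafeTextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()
```

Because only text nodes are kept, tag attributes never reach the model, which also removes tracking pixels and embedded URLs. An unclosed blocked element suppresses the rest of the page, so the renderer fails closed.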

Vendor risk and concentration

- Dual‑vendor model strategy: Running Gemini and Claude side by side creates choice on cost and fallback on incidents. Vendor profiles include breach history, model update cadence, and commitments around privacy and training data use. Procurement negotiates SLAs that cover latency, uptime, deprecations, and advance notice for model versioning.

- Model updates: Because model behavior can drift, releases are treated like code changes. Canary traffic and behavioral tests catch regressions before full rollout.

Policy alignment

- Existing frameworks first: SOX controls, records retention, and existing data classification schemes extend into agent runs. New policies cover long‑running tasks, side‑effect actions, and third‑party website interactions.

- Reg change readiness: EU DORA, U.S. model risk management, and bank secrecy obligations already demand traceability and operational resilience. Agent deployments map to those by treating the agent platform as critical infrastructure and documenting business continuity and disaster recovery. For context on banking controls, read our guide to bank model risk management.

Unit economics: the part that makes or breaks scale

Token spend is only the first line in the budget. The real cost drivers are long‑horizon tasks, retries, and human confirmation loops.

A practical cost model

- Direct model cost: Price per million input and output tokens by model tier. Long tasks with planning plus tool use can easily cross multiple context windows. Teams should track cost per completed task, not per prompt.

- Tool and infra cost: Retrieval queries, document conversions, and sandboxed browsing have their own metered costs. Include those in unit cost or you will understate by 20 to 40 percent on complex workflows.

- Human loop tax: Every confirmation injects latency and labor. If an average task requires two confirmations at one minute each, that is two minutes of staff time per task, a hidden labor cost that can rival model fees.

- Failure and retry overhead: Agents sometimes get stuck. Timeouts, backoffs, and guardrails reduce but do not eliminate retries. Budget an error factor, then drive it down with better validators and smaller subplans.
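
The four cost drivers above combine into a single cost per completed task. The sketch below is a worked example under stated assumptions: every price, token count, labor rate, and retry rate is an illustrative placeholder, not a vendor price or a Citi figure.

```python
from dataclasses import dataclass


@dataclass
class TaskCostInputs:
    input_tokens: int
    output_tokens: int
    usd_per_m_input: float            # price per million input tokens
    usd_per_m_output: float           # price per million output tokens
    tool_cost_usd: float              # retrieval, conversion, sandboxed browsing
    confirmations: int
    minutes_per_confirmation: float
    loaded_labor_usd_per_hour: float
    retry_rate: float                 # expected extra attempts per completed task


def cost_per_completed_task(c: TaskCostInputs) -> dict[str, float]:
    model = (c.input_tokens * c.usd_per_m_input
             + c.output_tokens * c.usd_per_m_output) / 1_000_000
    # Retries repeat both the model spend and the tool spend.
    machine = (model + c.tool_cost_usd) * (1 + c.retry_rate)
    human = (c.confirmations * c.minutes_per_confirmation / 60
             * c.loaded_labor_usd_per_hour)
    return {
        "model": model,
        "machine_with_retries": machine,
        "human_loop": human,
        "total": machine + human,
    }
```

With 200,000 input tokens at $3 per million, 20,000 output tokens at $15 per million, $0.25 of tool cost, a 20 percent retry rate, and two one‑minute confirmations at $90 per hour, the model cost is $0.90, the machine cost with retries is $1.38, the human loop is $3.00, and the total is $4.38. In this made‑up case the confirmations cost more than three times the model fees, which is why the human loop belongs in the unit cost.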

Measuring ROI

- Baselines: Start with human baselines for each task. Measure time to complete, rework rate, and escalation rate. Define success as the improvement over those baselines, not absolute throughput.

- Task portfolio view: Some workflows will show outsized gains, others will not. Sort by value and routinize the winners. Kill or re‑scope the laggards.

- Quality and risk: A 40 percent time saving is meaningless if error rates rise. Track factual accuracy, policy violations caught by guardrails, and complaint rates.

- Marginal value of autonomy: Compare assistive mode vs autonomous mode. You may find that hybrid mode with a single confirmation yields most of the savings at a fraction of the risk.

Budget levers

- Model routing: Use cheaper models for retrieval and formatting, reserve top models for planning. Implement automatic downgrade paths when tasks are simple.

- Plan compression: Encourage agents to draft and agree on a plan in fewer tokens. Structured plans beat verbose chain‑of‑thought for cost and reproducibility.

- Output templates: Tight templates cut token bloat and improve validator pass rates.
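
The routing lever can be expressed as a small rule table. This is a sketch under assumptions: the tier names, the task kinds, and the three‑step threshold for a "simple" task are hypothetical and would be tuned against real task data.

```python
FAST_TIER = "fast-tier"   # hypothetical cheaper model
TOP_TIER = "top-tier"     # hypothetical higher-accuracy model

# Task kinds the text suggests sending to cheaper models.
CHEAP_TASK_KINDS = {"retrieval", "formatting"}


def route(task_kind: str, planned_steps: int, simple_step_limit: int = 3) -> str:
    """Pick a model tier for one unit of work."""
    if task_kind in CHEAP_TASK_KINDS:
        return FAST_TIER
    if planned_steps <= simple_step_limit:
        # Automatic downgrade path: short plans do not need the top model.
        return FAST_TIER
    return TOP_TIER
```

Keeping the rule deterministic makes routing decisions easy to log and to replay during a cost review.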

How Citi’s move maps to the emerging agent stack

The industry is converging on a pattern: a computer‑using agent that can browse, fill forms, and operate software in a sandbox; a planner with tool use; and a control plane that binds actions to a user and asks for confirmation on risky steps.

OpenAI’s Operator embodied this approach with a computer‑using model, a virtual machine, and explicit user confirmations before side effects. OpenAI has since folded Operator’s capabilities into a broader agent experience, but the core design signals where enterprise products are headed. See the OpenAI Operator announcement.

Anthropic’s Chrome‑based agent preview underscores the browser as a universal runtime. A browser agent can read and act on almost any web app without per‑vendor integrations, which is powerful but increases the need for scoped permissions, site blocklists, and human sign‑offs on purchases, posts, and transactions. Expect enterprises to pair a browser agent with strict allowlists, containerized sessions, device verification, and Just‑In‑Time credentials. For implementation patterns, explore our browser agent infrastructure guide.

In that context, Citi’s pilot looks like the enterprise version of the same pattern. The difference is the rigor: RBAC through the firm’s identity provider, a policy engine that guards side effects, centralized logging, and a procurement‑backed dual‑vendor model plan.

What this means for adoption curves

- From copilots to co‑workers: Assistive chat is becoming a task owner. Large firms will start by letting agents own low‑risk internal workflows. External or client‑visible actions will remain gated.

- Verticalization through templates: The fastest wins will come from templated packs. Think research digests, KYC file prep, market summaries, and policy Q&A. These are format heavy and easy to validate.

- Central platforms, federated ownership: Expect a central agent platform owned by the CTO or COO, with federated business owners defining task templates and acceptance criteria. Platform and policy stay centralized. Task logic is modular and business‑owned.

A 90‑day implementation checklist

Weeks 0 to 2: scope, risk, and design

- Pick three workflows with measurable baselines and limited side effects. Example: internal research packs, policy lookups, translation to client‑safe templates.

- Define acceptance criteria: time saved, accuracy thresholds, and guardrail coverage. Document what good looks like.

- Stand up the control plane: service principals, role definitions, audit logging, confirmation UI, and redaction filters. Decide what to log and store for replay.

Weeks 2 to 4: vendor and environment

- Lock model access and egress controls. Set per‑team budgets, quotas, and alerts.

- Choose two model vendors to mitigate concentration risk. Define routing rules.

- Build safe renderers for external web content. Configure site allowlists and blocklists.
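
A site allowlist and blocklist check might look like the sketch below, which uses `urllib.parse` from the standard library. The HTTPS‑only rule and the choice to let the blocklist win over the allowlist are assumptions made for this example; `egress_allowed` and `host_matches` are hypothetical names.

```python
from urllib.parse import urlsplit


def host_matches(host: str, domain: str) -> bool:
    """True for the domain itself or any of its subdomains."""
    return host == domain or host.endswith("." + domain)


def egress_allowed(url: str, allowlist: set[str], blocklist: set[str]) -> bool:
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if parts.scheme != "https" or not host:
        return False  # assumed policy: HTTPS only
    if any(host_matches(host, d) for d in blocklist):
        return False  # blocklist takes precedence over the allowlist
    return any(host_matches(host, d) for d in allowlist)
```

Matching on a leading dot avoids the classic mistake of allowing `notexample.org` because it ends with `example.org`.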

Weeks 4 to 6: orchestration and validators

- Implement task plans as structured graphs. Add deterministic validators for each output type.

- Create output templates for speed and consistency.

- Instrument traces end to end. Include cost, latency, failure codes, and confirmation timestamps.
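
Expressing a task plan as a structured graph can be done with Python's standard `graphlib` module. The step names below are hypothetical and mirror the retrieve, summarize, translate, and format flow described earlier in the article.

```python
from graphlib import CycleError, TopologicalSorter

# Each key is a step; its value is the set of steps that must finish first.
RESEARCH_PACK_PLAN = {
    "retrieve": set(),
    "summarize": {"retrieve"},
    "translate": {"summarize"},
    "format": {"summarize", "translate"},
    "validate": {"format"},
}


def execution_order(plan: dict[str, set[str]]) -> list[str]:
    """Return steps in an order that respects every dependency."""
    return list(TopologicalSorter(plan).static_order())


def is_valid_plan(plan: dict[str, set[str]]) -> bool:
    """Reject plans with circular dependencies before any step runs."""
    try:
        execution_order(plan)
    except CycleError:
        return False
    return True
```

A graph that is checked before execution gives the orchestrator a fixed step list to trace, which is harder to achieve with a free‑form plan written in prose.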

Weeks 6 to 8: pilot hardening

- Run shadow mode alongside humans. Compare outputs. Tune prompts, validators, and routing.

- Set confirmation policies by risk. Require sign‑off on any external email, file upload, transaction, or publication.

- Write runbooks for failure modes: timeouts, loops, and vendor outages. Include automatic downgrades and kill switches.

Weeks 8 to 10: go live with 1,000 to 5,000 users

- Train pilot users. Provide clear instructions on confirmations and when to take over.

- Roll out task‑specific dashboards that show savings, accuracy, and cost per task.

- Establish a triage queue for misfires and user feedback.

Weeks 10 to 12: evaluation and scale

- Measure against baselines. Keep what clears thresholds. Re‑scope what does not.

- Tighten budgets by task. Move long‑running tasks to batch windows if possible.

- Prepare a formal model risk and audit package with logs, tests, and change management.

Forward look: compliance and procurement patterns to expect

Compliance

- Confirmation by default: Regulators will expect proof that a human approved external actions. Screenshots or structured logs of the confirmation step will become standard evidence.

- Replay and retention: Banks will store agent plans and tool interactions long enough to support audits and disputes. That demands a retention schedule and a safe way to store prompt context without over‑collecting sensitive data.

- Segregation of duties: Agents will not bypass segregation of duties. Payment initiations and access changes will always require dual controls.

Procurement

- Multi‑model contracts: Expect master service agreements that cover multiple model families, with price protections, predictable deprecation policies, and testing sandboxes.

- Browser agent carve‑outs: Contracts for computer‑use or browser agents will include strict rules around scraping, storage, and honoring robots.txt directives, plus vendor attestations for site‑specific integrations.

- Evaluations and SLAs: RFPs will ask for stability under model updates, incident response timelines, and detailed usage telemetry. Benchmarks matter less than real task success under customer workloads.

Security and resilience

- Per‑task egress policies: Network teams will ship task‑scoped egress rules rather than broad internet access for agents. Browser sessions will run in isolated containers with device verification.

- Fallbacks by design: If Vendor A fails, an orchestrator can route to Vendor B with adjusted prompts and validators. If the browser sandbox fails, task plans degrade to retrieval and drafting modes.
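
Vendor fallback can be sketched as an ordered list of callables behind one validator. Everything here is hypothetical: `call_with_fallback`, `AllVendorsFailed`, and the vendor callables stand in for real client code, and the sketch reuses one prompt and one validator where a real orchestrator would adjust both per vendor.

```python
from typing import Callable, Sequence


class AllVendorsFailed(Exception):
    """Raised when no vendor returns a valid output (hypothetical name)."""


def call_with_fallback(
    prompt: str,
    vendors: Sequence[tuple[str, Callable[[str], str]]],
    validate: Callable[[str], bool],
) -> tuple[str, str]:
    """Try vendors in order; return (vendor_name, output) for the first valid result."""
    errors = []
    for name, call in vendors:
        try:
            output = call(prompt)
        except Exception as exc:  # outage, timeout, quota error, and so on
            errors.append(f"{name}: {exc}")
            continue
        if validate(output):
            return name, output
        errors.append(f"{name}: output failed validation")
    raise AllVendorsFailed("; ".join(errors))
```

Returning the vendor name with the output keeps the audit trail accurate about which model actually produced the result.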

Bottom line

Citi’s pilot is a telling milestone. A large, regulated bank is putting autonomous agents into real employee workflows with clear guardrails, enterprise‑grade orchestration, and a plan to measure unit economics. The pattern is becoming clear. The winning enterprise agent stack pairs a computer‑using runtime with a planner, then wraps it in identity, policy, and audit. Costs are managed through model routing, tight templates, and a confirmation UX that catches side effects without drowning users in approvals.

The next phase will not be about a single magical model. It will be about disciplined engineering, risk controls, and procurement muscle. Firms that treat agents like any other critical system, with change management, SLAs, and replayable logs, will scale. Those that do not will stall in pilot purgatory.

Either way, the signal is clear: agents are moving from talk to work. And in places like Citi, that work is starting to look a lot like production.