Solana’s Next Gear: Firedancer’s Plan to Uncap Blocks

Solana is preparing to trade fixed block limits for dynamic capacity. Firedancer’s SIMD-0370, planned for the post‑Alpenglow era, could let blocks grow when demand spikes, cut failed trades, and enable new real-time apps. Here is how it works, what the risks are, and what builders should do now.

Solana’s fixed block cap is on the chopping block

Solana’s next leap is not a faster clock. It is removing the speed limit altogether. Jump Crypto’s Firedancer team has submitted SIMD‑0370, a proposal to eliminate Solana’s hard per‑block compute ceiling once the Alpenglow consensus upgrade lands. The change would let block producers include as much work as their hardware and the network can handle, rather than stopping at a fixed number.

Today, every Solana block has an upper bound measured in compute units. A compute unit is Solana’s yardstick for the computational work a transaction performs, similar in spirit to Ethereum’s gas but tuned for Solana’s parallel runtime. The current cap is 60 million compute units per block, and developers have separately proposed lifting it to 100 million. That ceiling protects slower validators from oversized blocks, but it also holds back high‑performance validators that could handle more work.
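To make the cap concrete, here is a minimal Python sketch of a leader greedily packing transactions by priority fee per compute unit under a compute budget. The `Tx` type, the `pack_block` function, and all numbers are hypothetical; real Solana schedulers also weigh account write locks, per-account limits, and execution time. Under today's rules the budget is the shared 60M CU cap; under SIMD‑0370 it would instead come from what the leader's own hardware can execute in time.

```python
from dataclasses import dataclass
from typing import List, Tuple

BLOCK_CU_CAP = 60_000_000  # today's fixed per-block cap, as stated in the text


@dataclass(frozen=True)
class Tx:
    """Hypothetical pending transaction: compute cost plus the fee it offers."""
    name: str
    compute_units: int
    priority_fee_lamports: int


def pack_block(mempool: List[Tx], cu_budget: int) -> Tuple[List[Tx], int]:
    """Greedily fill a block with the best-paying transactions per CU.

    cu_budget is the fixed protocol cap today; under SIMD-0370 it would be
    whatever the leader expects it (and enough voters) can execute in time.
    """
    ordered = sorted(
        mempool,
        key=lambda tx: tx.priority_fee_lamports / tx.compute_units,
        reverse=True,
    )
    block: List[Tx] = []
    used = 0
    for tx in ordered:
        if used + tx.compute_units > cu_budget:
            continue  # does not fit; smaller transactions further down might
        block.append(tx)
        used += tx.compute_units
    return block, used


# 400 transactions x 200k CU = 80M CU of demand during a burst.
mempool = [Tx(f"tx{i}", 200_000, 1_000 + i) for i in range(400)]

capped, capped_cu = pack_block(mempool, BLOCK_CU_CAP)
elastic, elastic_cu = pack_block(mempool, 100_000_000)  # hypothetical fast leader
print(len(capped), capped_cu)    # 300 60000000
print(len(elastic), elastic_cu)  # 400 80000000
```

With the fixed cap, the 100 lowest-paying transactions wait for a later slot even though the hypothetical leader could have executed them.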

For broader market context on why higher throughput matters, see our take on Solana ETF approval and liquidity.

How Alpenglow makes this possible

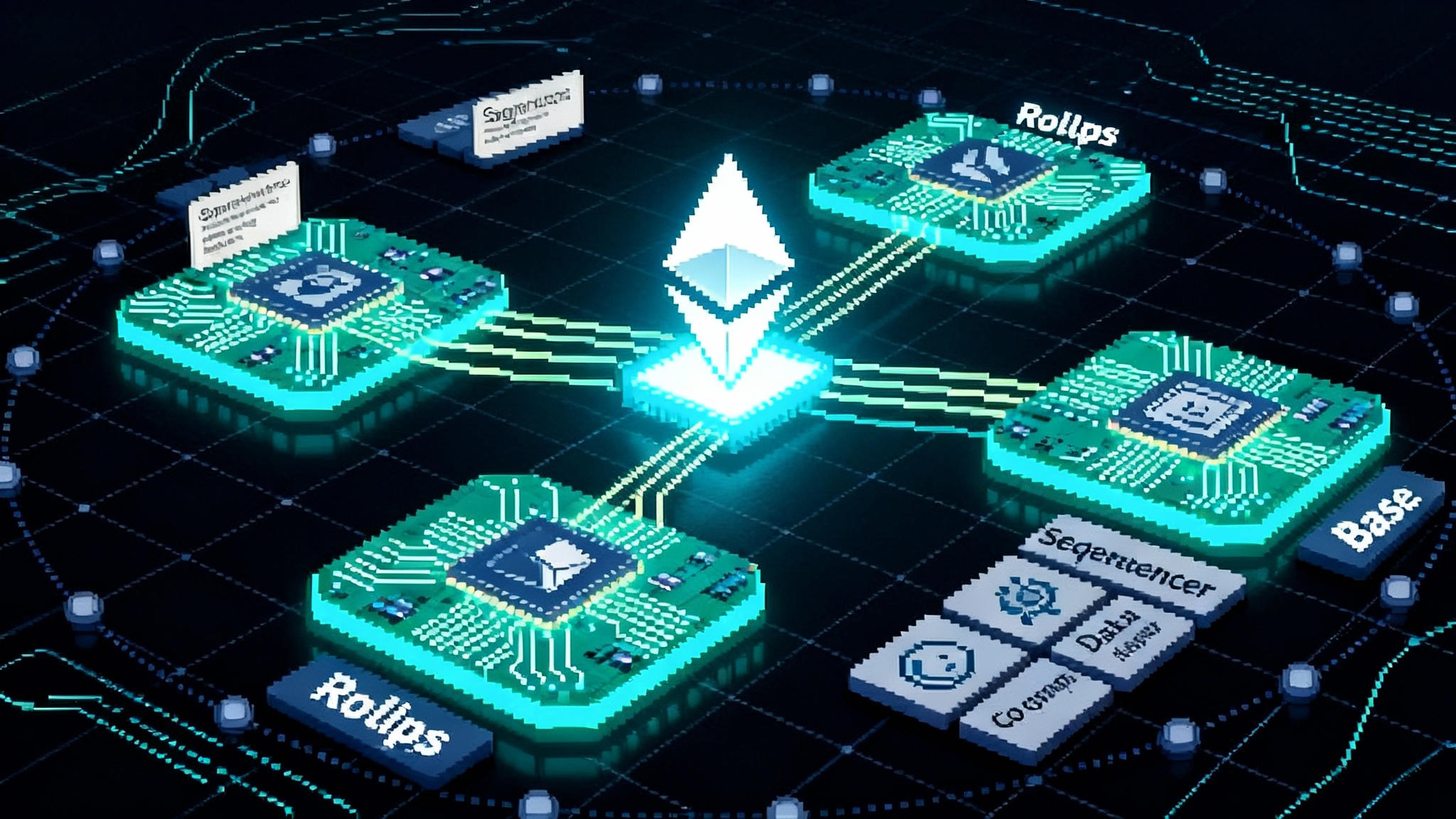

Alpenglow is a redesign of Solana’s consensus built around two low‑latency components, Votor and Rotor. The team targets finality in a single round of voting under favorable conditions and sub‑second confirmation in the median case. For details, see Anza’s Alpenglow consensus overview.

Votor and Rotor in brief

- Votor collects votes through a fast path that can finalize a block in one round and a fallback path that takes two, keeping latency low without sacrificing liveness.

- Rotor reworks data dissemination so block data travels with fewer hops and better use of total bandwidth.
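The fast and fallback paths can be pictured as a stake-threshold check. This Python sketch uses the 80% (one round) and 60% (two rounds) figures from public Alpenglow descriptions as assumptions, and `votor_outcome` is a hypothetical helper, not Anza's code. It collapses the real protocol, which also handles timeouts, skip certificates, and concurrently running paths, into a single function.

```python
from enum import Enum

# Stake thresholds as publicly described for Alpenglow's Votor; treat them as
# assumptions and check the current specification before relying on them.
FAST_PATH_THRESHOLD = 0.80
SLOW_PATH_THRESHOLD = 0.60


class Outcome(Enum):
    FAST_FINALIZED = "finalized in one voting round"
    SLOW_FINALIZED = "finalized after a second voting round"
    NOT_FINALIZED = "not finalized"


def votor_outcome(first_round_stake: float, second_round_stake: float) -> Outcome:
    """Simplified view of Votor's two paths, given stake fractions in [0, 1].

    Ignores timeouts, skip certificates, and the fact that both paths run
    concurrently in the real protocol.
    """
    if first_round_stake >= FAST_PATH_THRESHOLD:
        return Outcome.FAST_FINALIZED
    if (first_round_stake >= SLOW_PATH_THRESHOLD
            and second_round_stake >= SLOW_PATH_THRESHOLD):
        return Outcome.SLOW_FINALIZED
    return Outcome.NOT_FINALIZED


print(votor_outcome(0.85, 0.0).value)   # finalized in one voting round
print(votor_outcome(0.70, 0.65).value)  # finalized after a second voting round
print(votor_outcome(0.50, 0.90).value)  # not finalized
```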

Why skip votes matter

Alpenglow brings skip votes to the foreground. If a validator cannot execute a block in time, it can explicitly vote to skip the slot instead of stalling progress. SIMD‑0370 builds on this: if a leader proposes a heavier block that only some validators can process before the deadline, slower validators vote to skip while faster ones execute it and vote for it. The chain keeps moving, and the heavy block finalizes once enough stake attests to it. Other safety limits, such as bounds on how much block data can be propagated per slot, remain in place to keep dissemination manageable.
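The skip rule fits in a few lines of Python. This is a toy model rather than SIMD‑0370's actual logic: the `Validator` type, `tally_heavy_block`, the throughput figures, the 400 ms deadline, and the 60% vote threshold are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple

SUPERMAJORITY_PCT = 60  # assumed vote threshold, in percent of total stake


@dataclass(frozen=True)
class Validator:
    name: str
    stake: int       # arbitrary stake units
    cu_per_ms: int   # hypothetical sustained execution throughput


def tally_heavy_block(
    validators: List[Validator], block_cu: int, deadline_ms: float
) -> Tuple[bool, int, List[str]]:
    """Each validator votes if it can execute the block before the deadline,
    otherwise it skips. Returns (block finalizes?, voting stake, skippers)."""
    voting_stake = 0
    skippers: List[str] = []
    for v in validators:
        execution_ms = block_cu / v.cu_per_ms
        if execution_ms <= deadline_ms:
            voting_stake += v.stake
        else:
            skippers.append(v.name)
    total_stake = sum(v.stake for v in validators)
    # Integer comparison avoids floating-point edge cases at the threshold.
    finalizes = voting_stake * 100 >= SUPERMAJORITY_PCT * total_stake
    return finalizes, voting_stake, skippers


cluster = [
    Validator("fast-a", stake=50, cu_per_ms=400_000),
    Validator("fast-b", stake=20, cu_per_ms=300_000),
    Validator("slow-c", stake=30, cu_per_ms=100_000),
]
print(tally_heavy_block(cluster, block_cu=100_000_000, deadline_ms=400))
# (True, 70, ['slow-c'])
```

Here the slow validator needs 1,000 ms for a 100M CU block and skips, but the two fast validators hold 70% of stake, so the block still clears the assumed threshold. Double the block size and every validator misses the deadline, so the block is skipped.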

From fixed ceilings to a performance flywheel

Think of Solana as a set of toll lanes. Until now, the toll gate has had a fixed opening that only lets a certain number of cars through per interval. Even if a lane could flow faster, the width was fixed. SIMD‑0370 widens the opening dynamically when there is demand and when the toll booth can handle it. If a particular booth is slower, cars divert to the booths that can process them faster.

That creates a simple but powerful incentive loop:

- Leaders pack more value. Producers earn more when they fit more valuable transactions into their slots, so they will use every legal inch of capacity their hardware and code can support.

- Validators upgrade to keep up. Operators who skip too many blocks lose rewards and influence, which motivates hardware upgrades, better tuning, and efficient client software.

- Capacity compounds. As more validators upgrade, leaders can safely propose larger blocks without widespread skips, increasing practical throughput and responsiveness.

What dynamic blocks mean on a busy day

Consider a volatile token launch or an airdrop. Under a fixed cap, blocks fill, pending queues stack up, and users see swaps fail because their transactions expire before inclusion or land after the price has moved past their slippage limit. Traders raise priority fees and widen slippage to get through, which drives up costs and degrades the experience.

With dynamic blocks in a post‑Alpenglow world, the leader tries to pack a heavier block. Fast validators execute it in time and vote; slower ones skip. Finality still arrives within a few hundred milliseconds because votes accumulate quickly. The expected result is more transactions cleared per second and fewer failed trades during the burst.
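A toy queue model shows why extra capacity during a burst reduces failures. It assumes identical 200k-CU transactions, a FIFO queue, and a one-slot expiry window standing in for real blockhash expiry (which is on the order of 150 blocks). The 120M CU "elastic" capacity is a hypothetical leader budget, not a figure from SIMD‑0370, and `simulate` is an illustrative helper.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Tuple


@dataclass(frozen=True)
class Pending:
    arrival_slot: int
    compute_units: int


def simulate(
    arrivals: List[List[int]], cu_per_slot: int, max_age_slots: int
) -> Tuple[int, int, int]:
    """FIFO queue model. arrivals[s] lists the CU cost of each transaction
    arriving in slot s. Returns (included, expired, still_pending)."""
    queue: Deque[Pending] = deque()
    included = expired = 0
    for slot, new_costs in enumerate(arrivals):
        queue.extend(Pending(slot, cu) for cu in new_costs)
        # Drop transactions that waited too long (stand-in for blockhash expiry).
        while queue and slot - queue[0].arrival_slot > max_age_slots:
            queue.popleft()
            expired += 1
        # Fill this slot's block in arrival order until the budget runs out.
        budget = cu_per_slot
        while queue and queue[0].compute_units <= budget:
            budget -= queue.popleft().compute_units
            included += 1
    return included, expired, len(queue)


burst = [[200_000] * 600, [200_000] * 600, [], []]  # two heavy slots, then calm
print(simulate(burst, cu_per_slot=60_000_000, max_age_slots=1))   # (900, 300, 0)
print(simulate(burst, cu_per_slot=120_000_000, max_age_slots=1))  # (1200, 0, 0)
```

Under the fixed cap, the backlog from the second heavy slot is still queued when its window closes, so 300 transactions expire; with double the per-slot capacity, the same burst clears with no expirations.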

New user experiences become practical

- Real‑time games. With near‑150 ms finality and elastic block size, stateful on‑chain tick loops can feel responsive. Multiplayer arenas can certify actions every few frames.

- On‑chain order books. Deep books with frequent cancels and edits are compute heavy. Larger blocks during peak moments let cancel storms clear faster and reduce failed placements.

- Social and creator apps. Rich interactions like per‑message tipping become less brittle when surges do not slam into a universal ceiling.

For rollup and sequencing parallels beyond Solana, see our guide to permissionless L2 sequencing trends.

The tradeoffs to watch

- Validator hardware pressure. If larger blocks directly boost leader rewards, well‑capitalized operators may upgrade first. Smaller operators could skip more often and see lower rewards. Expect community guidance on minimum specs and tuning.

- Skipped‑block dynamics. A leader who consistently overpacks could see more of its own blocks skipped if too little stake can execute them in time. Client teams will need heuristics that learn live headroom rather than maxing out every slot.

- Propagation and fairness. If a block is too large to disseminate to a supermajority in time, it gets skipped. That protects liveness but raises fairness questions for users whose transactions were in that block and must now wait for a later slot.

- Decentralization risk. If participation tilts toward a smaller set of high‑performance validators, effective supermajorities could become more concentrated, renewing focus on stake distribution and client diversity.
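One way to "learn live headroom" is the additive-increase / multiplicative-decrease (AIMD) rule familiar from TCP congestion control. This is a swapped-in technique for illustration, not something SIMD‑0370 specifies, and `BlockTargetController` and its parameters are hypothetical.

```python
from typing import List


class BlockTargetController:
    """Hypothetical AIMD controller for a leader's target block size in CU."""

    def __init__(self, start_cu: int, min_cu: int, max_cu: int,
                 step_cu: int, backoff_pct: int = 50) -> None:
        self.target_cu = start_cu
        self.min_cu = min_cu
        self.max_cu = max_cu
        self.step_cu = step_cu
        self.backoff_pct = backoff_pct

    def record(self, block_finalized: bool) -> int:
        """Update the target after seeing whether our last block finalized."""
        if block_finalized:
            # Probe for more headroom slowly.
            self.target_cu = min(self.max_cu, self.target_cu + self.step_cu)
        else:
            # Too heavy for enough of the cluster: back off sharply.
            self.target_cu = max(self.min_cu,
                                 self.target_cu * self.backoff_pct // 100)
        return self.target_cu


ctl = BlockTargetController(start_cu=60_000_000, min_cu=30_000_000,
                            max_cu=200_000_000, step_cu=5_000_000)
history: List[int] = [ctl.record(ok) for ok in (True, True, False, False, True)]
print(history)  # [65000000, 70000000, 35000000, 30000000, 35000000]
```

The asymmetry is the point: a leader creeps upward while its blocks finalize and retreats quickly after skips, so it stops overpacking without a fixed protocol ceiling.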

How fee markets could evolve

Fixed caps force simple scarcity games. When demand jumps above the line, users escalate priority fees and widen slippage, yet many still fail. In a dynamic regime, price discovery shifts to the interplay between the leader’s appetite to pack heavier blocks and the validator set’s ability to execute on time. For design patterns in adjacent ecosystems, review PeerDAS and blob fee design.
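Under today's fixed caps, escalating priority fees means raising the price per compute unit. As a minimal sketch of Solana's fee arithmetic (a base fee of 5,000 lamports per signature, plus the requested compute-unit limit times the compute-unit price in micro-lamports), the function below shows what a tenfold price bump costs for a 200k-CU swap. `transaction_fee` is a hypothetical helper, and rounding the priority fee up is an assumption of this sketch.

```python
LAMPORTS_PER_SIGNATURE = 5_000        # Solana base fee per signature
MICRO_LAMPORTS_PER_LAMPORT = 1_000_000


def transaction_fee(num_signatures: int, compute_unit_limit: int,
                    compute_unit_price_micro_lamports: int) -> int:
    """Total fee in lamports: base fee plus priority fee.

    The priority fee is the requested compute-unit limit times the price per
    CU (in micro-lamports); rounding up is an assumption of this sketch.
    """
    base_fee = num_signatures * LAMPORTS_PER_SIGNATURE
    micro = compute_unit_limit * compute_unit_price_micro_lamports
    priority_fee = -(-micro // MICRO_LAMPORTS_PER_LAMPORT)  # ceiling division
    return base_fee + priority_fee


print(transaction_fee(1, 200_000, 10_000))   # 7000
print(transaction_fee(1, 200_000, 100_000))  # 25000
```

Because the priority fee is charged on the requested limit rather than the units actually consumed, an oversized compute-unit request inflates the bill in exactly the moments when prices spike.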

A builder’s checklist for 2026‑level throughput

- Profile compute like a hawk

- Measure compute units per instruction, per transaction, and per user interaction. Track peak, p95, and p99. Minimize account write locks and restructure hot paths to avoid contention.

- Eliminate gratuitous syscalls and state writes. Favor smaller transactions that compose instead of a single giant transaction that monopolizes a slot.

- Engineer for sub‑second finality

- Assume confirmation around 100 to 200 milliseconds in the Alpenglow era. Design interfaces that do not block on multiple confirmations for low‑value actions. Show optimistic state and reconcile on finality.

- Use durable nonces and idempotent flows so retries do not double‑spend. Treat a skipped slot as normal.

- Stress test for burst capacity

- Replay historical spikes on devnet and testnet with realistic priority fees. Observe behavior when blocks are fuller and arrive faster.

- Add backpressure in client libraries so you do not flood your own queues when throughput expands.

- Tune fee strategy for dynamics

- Replace static tips with models that react to inclusion latency and effective compute cleared. Pay only for the inclusion speed you actually need.

- For traders, calibrate slippage to a moving picture of throughput. If bursts clear more transactions, tighten slippage tolerances during peaks.

- Build for partial participation

- Expect that not every validator will see or execute every heavy block on time. Keep apps resilient to temporary view differences across RPC providers.

- Use event streams that reconcile on finality rather than treating inclusion as final for high‑value actions.

- Lean into client diversity

- Track client releases from Anza (Agave) and Jump (Firedancer). Test against at least two clients and multiple hardware profiles.

- Operate a staging validator if your app drives significant on‑chain load to tighten the feedback loop.

- Prepare your data layer

- Indexers and analytics pipelines must handle higher write rates. Use batched ingestion, partition by account or program, and compress logs.

- Budget storage and network egress for larger block artifacts.
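Profiling compute also tells you what compute-unit limit to request. A minimal sketch, assuming you already collect per-transaction CU measurements (for example from the `unitsConsumed` field returned by the `simulateTransaction` RPC method): summarize peak, p95, and p99, then request p99 plus headroom instead of the maximum. The helper names and the 10% headroom are hypothetical choices.

```python
from typing import Dict, List

MAX_COMPUTE_UNIT_LIMIT = 1_400_000  # Solana's per-transaction compute ceiling


def percentile(samples: List[int], p: int) -> int:
    """Nearest-rank percentile for integer p in 1..100."""
    ordered = sorted(samples)
    rank = (p * len(ordered) + 99) // 100  # ceil(p / 100 * n) without floats
    return ordered[max(rank, 1) - 1]


def profile(samples: List[int]) -> Dict[str, int]:
    """Peak, p95 and p99 compute units for one instruction or transaction type."""
    return {"peak": max(samples), "p95": percentile(samples, 95),
            "p99": percentile(samples, 99)}


def compute_unit_limit(samples: List[int], headroom_pct: int = 10) -> int:
    """Request p99 plus some headroom, capped at the per-transaction maximum."""
    p99 = percentile(samples, 99)
    padded = (p99 * (100 + headroom_pct) + 99) // 100  # round up
    return min(MAX_COMPUTE_UNIT_LIMIT, padded)


# Hypothetical measurements: 100 swaps consuming 1k..100k CU.
samples = list(range(1_000, 101_000, 1_000))
print(profile(samples))             # {'peak': 100000, 'p95': 95000, 'p99': 99000}
print(compute_unit_limit(samples))  # 108900
```

Requesting a tight, measured limit keeps priority fees proportional to real usage and gives a leader packing a heavy block an accurate picture of your transaction's cost.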

What to watch between now and mainnet changes

- Protocol guardrails. Expect discussion of safe defaults such as shred caps, target block sizes, and adaptive leader heuristics.

- Governance signals. The vote approving Alpenglow showed an appetite for bold changes. Watch for follow‑up requests for comment, benchmarks, and staged rollouts tied to SIMD‑0370.

- Real‑world telemetry. After Alpenglow, track slots per second, finality distribution, and skip patterns. If skips cluster by hardware class or geography, expect adjustments.
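If you run your own telemetry, spotting skips that cluster by hardware class or geography is a simple group-by. The `(group, skipped)` record shape, the tier labels, and the 25% alert threshold below are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def skip_rates(observations: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """observations: (group, skipped) pairs, one per validator per slot.
    A group can be a hardware class, a region, or a client."""
    seen: Dict[str, int] = defaultdict(int)
    skipped: Dict[str, int] = defaultdict(int)
    for group, did_skip in observations:
        seen[group] += 1
        if did_skip:
            skipped[group] += 1
    return {g: skipped[g] / seen[g] for g in seen}


def flag_groups(rates: Dict[str, float], max_rate: float) -> List[str]:
    """Groups whose skip rate exceeds the alert threshold, worst first."""
    return sorted((g for g, r in rates.items() if r > max_rate),
                  key=lambda g: rates[g], reverse=True)


obs = ([("tier-a", False)] * 9 + [("tier-a", True)]
       + [("tier-b", True)] * 3 + [("tier-b", False)])
rates = skip_rates(obs)
print(rates)                     # {'tier-a': 0.1, 'tier-b': 0.75}
print(flag_groups(rates, 0.25))  # ['tier-b']
```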

The bottom line

Solana is moving from a world where a single number throttles everyone to a world where capacity is negotiated in real time by code, bandwidth, and silicon. Alpenglow aims to make that safe by finalizing quickly and routing around stragglers. Firedancer’s SIMD‑0370 applies that safety to throughput by removing the fixed cap and letting performance drive rewards.

For builders, the mandate is clear: assume sub‑second finality and variable block capacity, make your software lean, and design interfaces that thrive under volatility. The products that feel instant and reliable during the next frenzy will be the ones that build for this future today.