AgentOps Is the Moat: Inside Salesforce’s Agentforce 3

Salesforce’s June 23, 2025 Agentforce 3 release shifts the AI agent race from building to running at scale. Command Center telemetry, native MCP, and a curated marketplace turn governance, routing, and evals into the real competitive edge.

The next wave of AI agents is about running them, not building them

On June 23, 2025, Salesforce announced Agentforce 3, a release framed less as a new way to author agents and more as a way to govern and scale them in the enterprise. The update centers on Command Center for real-time observability, built-in support for the Model Context Protocol (MCP), and an expanded AgentExchange marketplace. The message is clear: the moat in enterprise AI is AgentOps, not another agent builder. See the official details in the Salesforce Agentforce 3 announcement.

For a broader view of the enterprise stack, compare this with our guide to the 2025 enterprise agent stack.

Why AgentOps becomes the moat

Enterprises do not lack use cases. They lack the operational scaffolding to run agents safely, efficiently, and predictably at scale. The gaps show up in four ways:

- Visibility gaps: Limited insight into agent actions, tool calls, and failure causes slows debugging.

- Governance gaps: Security, identity, and policy controls sit outside the agent surface, so risk teams cannot assert or certify behavior.

- Performance gaps: Latency varies by provider and region, costs drift with prompt growth and long traces, and failures cascade across upstream APIs.

- Trust gaps: Hallucinations, weak grounding, and inconsistent citations keep humans in the loop for too many tasks.

AgentOps turns those gaps into a managed system. The goal is simple to state and hard to deliver: instrument every action, enforce policy in real time, route on health and cost, and evaluate outputs continuously so the fleet gets better every week.

What Agentforce 3 changes for enterprises

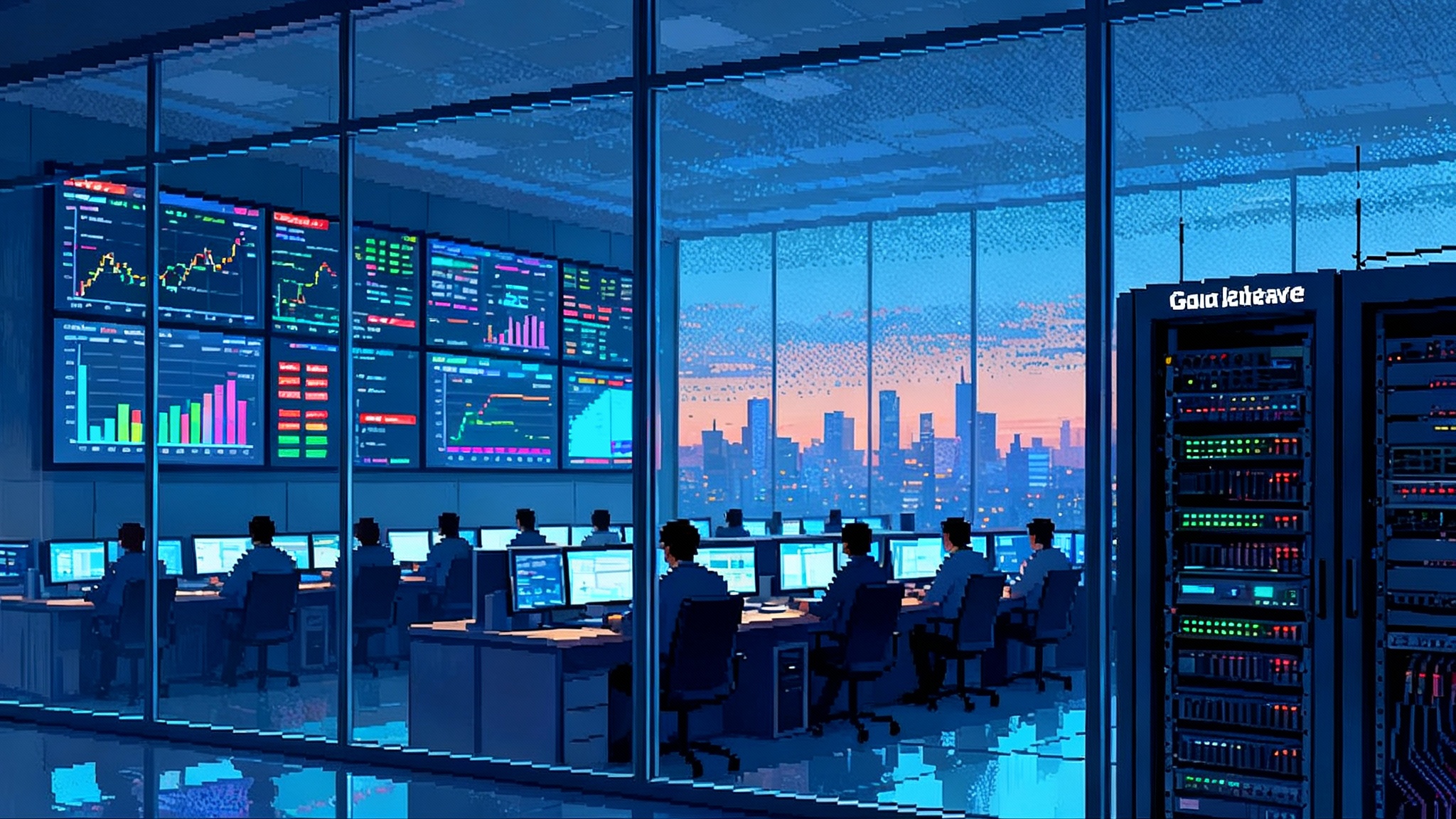

- Command Center unifies agent telemetry in a single pane of glass. Teams trace sessions, watch latency and error spikes, and drill into tool calls. Data lands in Salesforce Data Cloud and aligns with OpenTelemetry patterns, so logs can stream into existing monitoring tools.

- Native MCP support standardizes tool access. An MCP client can talk to any approved MCP server that exposes capabilities and resources. Security and identity teams get one consistent control plane.

- AgentExchange expands discovery and distribution. Partner MCP servers become productized capabilities with usage controls, audit trails, and policy enforcement at the gateway.

The architectural upgrades matter too: lower latency from streaming, better grounding through web search with inline citations, and automatic model failover. None of them is flashy on its own. Together they bias the platform toward reliable operations at scale.

A shared language: the AgentOps stack

- Observability and tracing
  - Session traces with spans for planner steps, tool calls, model calls, and human interventions
  - Cost and token accounting per span and per team
  - PII redaction on ingress, plus sensitivity labels
  - Events emitted to a central bus for analytics and alerting

- Governance and security
  - First-class agent identities with scoped credentials and rotation
  - Policy enforcement for allow lists, rate limits, and guardrails
  - Data boundaries with region routing, tenant isolation, and encryption
  - Compliance controls with audit trails and retention schedules

- Routing and failover
  - Model routing by domain, cost, and observed error rate or latency
  - Tool routing by queue depth and historical success rates
  - Fallback paths with safe handoff to humans when thresholds are breached

- Evals and optimization
  - Pre-deployment synthetic tests and replayed traces
  - Online canaries, red-team prompts, and slice-based checks
  - Feedback loops from human ratings and edit traces
  - Topic and scenario management for cohort comparisons

- Open tool access and interoperability
  - Standardized tool interface via MCP for capabilities and permissions
  - Registry of approved MCP servers with owners, scopes, and SLAs
  - Gateway that authenticates, logs, and enforces policy on every call

- Productivity layers
  - Studio and testing harness with version control for prompts, tools, and policies
  - Wallboards in the flow of work so supervisors see agent and human metrics together
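To make one stack item concrete, here is a minimal Python sketch of PII redaction on ingress, applied to an event before it reaches the central bus. The regex patterns, the placeholder format, and the `redact` helper are illustrative assumptions, not part of Agentforce; a production system would more likely call a vetted PII detection service than rely on two regular expressions.

```python
import re

# Illustrative patterns only (assumption); real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace PII matches with typed placeholders; return the text and the labels found."""
    labels = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            labels.append(label)
    return text, labels


# Redact a hypothetical span event before it is emitted to the bus.
event = {"span_id": "s1", "text": "Call me at +1 415 555 0100 or mail ana@example.com"}
event["text"], event["sensitivity_labels"] = redact(event["text"])
print(event)
```

The labels travel with the event, so downstream retention and access rules can key off them without re-scanning the text.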

For a concrete enterprise example, see the Citi 5,000 user agent pilot.

MCP in practice and why it matters now

The Model Context Protocol defines an open pattern for how AI systems request tools and resources. Servers expose capabilities and context in a standard way. Clients discover and call them with consistent security and telemetry. Over the past year MCP has become a practical path out of connector sprawl. Native platform support matters because it makes tool access more portable and governable. Technical readers can start with the Model Context Protocol spec.
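MCP messages use JSON-RPC 2.0 framing, and the spec defines `tools/list` for discovery and `tools/call` for invocation. The Python sketch below only builds those two request payloads. It assumes the client has already completed MCP's `initialize` handshake and leaves the transport (for example stdio) out; the tool name `lookup_order` and its arguments are hypothetical.

```python
import itertools
import json
from typing import Optional

# JSON-RPC request ids must be unique within a session; a simple counter suffices here.
_request_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request of the kind an MCP client sends."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Discover the server's tools, then call one of them.
list_request = jsonrpc_request("tools/list")
call_request = jsonrpc_request(
    "tools/call",
    {"name": "lookup_order", "arguments": {"order_id": "A-1001"}},  # hypothetical tool
)
print(list_request)
print(call_request)
```

Because every server speaks the same framing, a gateway can log and authorize these calls uniformly regardless of which server sits behind them.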

KPIs that separate pilots from production

Set explicit SLOs, publish them, and review weekly. The short list worth fighting over:

- Task success rate: Share of tasks completed against a defined acceptance test, sliced by topic and segment

- Safe handoff rate: Transfers to a human with full context when policy or confidence thresholds are not met, plus time to handoff

- Grounding and citation coverage: Portion of responses with verifiable citations to approved sources, tied to a hallucination catch rate

- Latency budget adherence: Percent of tasks completed within the end-to-end budget, broken down by planning, tool calls, and model time

- Cost per resolved task: Fully loaded cost divided by successful task count

- Escalation rate and fix time: Frequency and speed of incident detection and mitigation

- Policy violation rate: Blocks or flags per thousand tasks with root cause attribution

- Human edit distance: For drafting tasks, the proportion of machine content that humans rewrite

- Trace completeness: Sessions with required spans and labels, including tool call IDs and cost tags
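Several of these KPIs reduce to simple ratios over per-task records. The following Python sketch computes four of them. The `TaskRecord` fields and the choice to keep failed attempts in the cost numerator are assumptions for illustration, not a standard definition.

```python
from dataclasses import dataclass


@dataclass
class TaskRecord:
    succeeded: bool     # passed the task's acceptance test
    handed_off: bool    # transferred to a human with full context
    latency_ms: float   # end-to-end time for the task
    cost: float         # fully loaded cost of the attempt, in any single unit


def compute_kpis(tasks: list[TaskRecord], latency_budget_ms: float) -> dict[str, float]:
    """Return task success, safe handoff, latency adherence, and cost per resolved task."""
    if not tasks:
        raise ValueError("no tasks to score")
    n = len(tasks)
    successes = sum(t.succeeded for t in tasks)
    total_cost = sum(t.cost for t in tasks)
    return {
        "task_success_rate": successes / n,
        "safe_handoff_rate": sum(t.handed_off for t in tasks) / n,
        "latency_budget_adherence": sum(t.latency_ms <= latency_budget_ms for t in tasks) / n,
        # Failed attempts still cost money, so they stay in the numerator.
        "cost_per_resolved_task": total_cost / successes if successes else float("inf"),
    }


tasks = [
    TaskRecord(True, False, 4200, 5),
    TaskRecord(True, False, 7100, 8),
    TaskRecord(False, True, 3000, 3),
    TaskRecord(True, False, 5000, 4),
]
print(compute_kpis(tasks, latency_budget_ms=6000))
```

Slicing by topic or segment is then a matter of grouping records before calling `compute_kpis` on each group.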

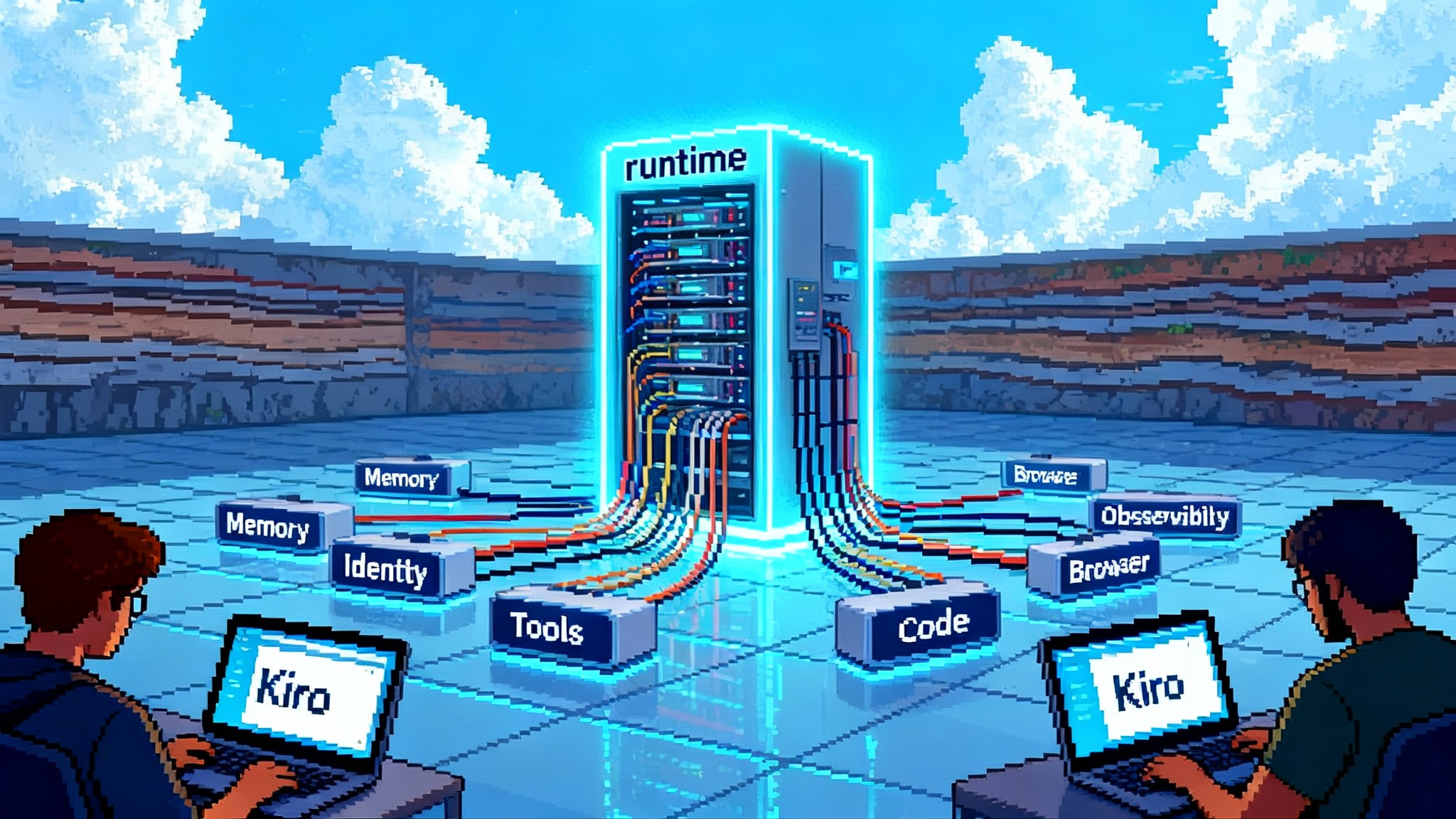

A reference architecture for governed agents

- Clients: Web, mobile, and system triggers initiate tasks with a trace ID

- Agent runtime: Planner, memory store, and skills emit spans with timing, model, and prompt version

- Tool layer: An MCP gateway authenticates agent identities and routes to approved MCP servers with scope and cost tags

- Observability bus: Events flow through redaction filters into a data lake and a time series store

- Command Center: Dashboards for health, topic performance, cost, and safety, plus wallboards for contact centers

- Control plane: Policies, allow lists, and rate limits managed by risk and platform teams with CI driven approvals

- Routing and failover: Health signals from models and tools drive routing tables and safe handoffs when SLAs are breached
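The routing and failover component can be sketched as a function over health signals: filter out routes that breach their SLOs, pick the cheapest survivor, and return nothing when no route qualifies so the caller performs a safe handoff. The route names, thresholds, and cheapest-healthy policy are assumptions; real routing tables often weigh domain fit and quality as well.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RouteHealth:
    name: str
    error_rate: float          # rolling error rate, 0.0 to 1.0
    p95_latency_ms: float      # rolling 95th percentile latency
    cost_per_1k_tokens: float


def choose_route(routes: list[RouteHealth], max_error: float, max_p95_ms: float) -> Optional[str]:
    """Return the cheapest route within SLO, or None to signal a handoff to a human."""
    healthy = [
        r for r in routes
        if r.error_rate <= max_error and r.p95_latency_ms <= max_p95_ms
    ]
    if not healthy:
        return None
    return min(healthy, key=lambda r: r.cost_per_1k_tokens).name


# Hypothetical routes and health snapshot.
routes = [
    RouteHealth("model-a", error_rate=0.01, p95_latency_ms=1800, cost_per_1k_tokens=0.50),
    RouteHealth("model-b", error_rate=0.08, p95_latency_ms=900, cost_per_1k_tokens=0.20),
    RouteHealth("model-c", error_rate=0.02, p95_latency_ms=2500, cost_per_1k_tokens=0.30),
]
print(choose_route(routes, max_error=0.05, max_p95_ms=2000))
```

Here `model-b` is the cheapest but is skipped because its error rate breaches the SLO, which is the point of routing on observed health rather than price alone.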

A rollout playbook that avoids chaos

- Start with a narrow, valuable task
  - Clear acceptance criteria and guardrails. Define what the agent cannot do.

- Put observability in first
  - Instrument session and tool spans from day one with a standard schema.

- Establish identity and policy
  - Least-privilege service principal, credential rotation, and a short allow list of MCP servers.

- Run pre-deployment evals
  - Synthetic tests plus replayed traces to measure success, handoff, and latency.

- Launch a supervised pilot
  - 5 to 10 percent of users, human approval for risky actions, and daily review of handoffs.

- Close the loop every week
  - Ship one improvement per week based on traces and publish a scorecard.

- Scale with routing and resiliency
  - Model routing by cost and performance, automatic failover, and a manual kill switch.

- Expand surface area responsibly
  - Add one tool or topic at a time, each with an owner, SLO, and test plan.
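For the supervised pilot step, a common technique is deterministic hash bucketing, so the same user always lands in or out of the 5 to 10 percent cohort across sessions. This Python sketch shows one way to do it; the salt string and the 10,000-bucket granularity are assumptions.

```python
import hashlib


def in_pilot(user_id: str, percent: float, salt: str = "agent-pilot-v1") -> bool:
    """Deterministically assign a user to the pilot cohort.

    Hashing a salted user ID gives a stable, roughly uniform bucket in 0..9999;
    users whose bucket falls below percent * 100 are in the pilot.
    Changing the salt reshuffles the cohort.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return bucket < percent * 100


users = [f"user-{i}" for i in range(20_000)]
share = sum(in_pilot(u, percent=5) for u in users) / len(users)
print(f"pilot share: {share:.3f}")
```

Raising `percent` from 5 to 10 keeps every existing pilot user in the cohort, because each user's bucket is fixed and only the threshold grows.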

A compact schema for agent telemetry

- trace_id

- parent_span_id and span_id

- agent_id and prompt_version

- tool_name, server_id, and scope

- model_name and provider

- cost_unit and cost_value

- latency_ms and tokens_in_out

- policy_decision and risk_flags

- handoff_type and handoff_latency_ms

- grounding_sources and citation_count

A consistent schema lets you instrument once and analyze everywhere, and it makes it easier to tie behavior to downstream outcomes like refunds issued or tickets closed.
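One way to pin the schema down is a typed record that every span serializes to. The Python dataclass below mirrors the fields listed above. The defaults, the `USD` cost unit, and representing `tokens_in_out` as an (input, output) pair are assumptions, and the IDs and names in the example are hypothetical.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class AgentSpan:
    trace_id: str
    span_id: str
    parent_span_id: Optional[str]
    agent_id: str
    prompt_version: str
    tool_name: Optional[str] = None
    server_id: Optional[str] = None
    scope: Optional[str] = None
    model_name: Optional[str] = None
    provider: Optional[str] = None
    cost_unit: str = "USD"                     # assumed unit
    cost_value: float = 0.0
    latency_ms: float = 0.0
    tokens_in_out: tuple[int, int] = (0, 0)    # assumed (input, output) pair
    policy_decision: str = "allow"
    risk_flags: list[str] = field(default_factory=list)
    handoff_type: Optional[str] = None
    handoff_latency_ms: Optional[float] = None
    grounding_sources: list[str] = field(default_factory=list)
    citation_count: int = 0


# A root span for the session and a child span for one MCP tool call.
root = AgentSpan(trace_id=uuid.uuid4().hex, span_id="s1", parent_span_id=None,
                 agent_id="support-agent", prompt_version="v12")
tool_span = AgentSpan(trace_id=root.trace_id, span_id="s2", parent_span_id=root.span_id,
                      agent_id=root.agent_id, prompt_version=root.prompt_version,
                      tool_name="lookup_order", server_id="orders-mcp", scope="orders:read",
                      latency_ms=310.0, cost_value=0.0004)
print(json.dumps(asdict(tool_span)))
```

The shared `trace_id` and the `parent_span_id` link are what let a dashboard reassemble a session and attribute cost to each step.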

Latency budgets that users can feel

Aim for under 2 seconds to first token and under 6 seconds end to end for common support tasks. Treat the budget like a contract and break it down:

- Planning: 10 to 20 percent

- Tool calls: 60 to 70 percent

- Model generation: 10 to 20 percent

If tool calls dominate, prioritize caching, batching, and faster servers. If planning dominates, simplify prompts or precompute lookups.
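The budget split above can be checked mechanically per trace. This Python sketch flags a task that exceeds the 6 second end-to-end budget and any phase whose share exceeds the upper end of its range; the phase names and the use of upper bounds only are assumptions.

```python
# Upper bound of each phase's share of end-to-end time, taken from the ranges above.
MAX_SHARE = {"planning": 0.20, "tool_calls": 0.70, "model_generation": 0.20}


def diagnose(phase_ms: dict[str, float], end_to_end_budget_ms: float = 6000) -> list[str]:
    """Return human-readable findings for one task's per-phase timings."""
    total = sum(phase_ms.values())
    if total == 0:
        return []
    findings = []
    if total > end_to_end_budget_ms:
        findings.append(f"over budget: {total:.0f} ms > {end_to_end_budget_ms:.0f} ms")
    for phase, cap in MAX_SHARE.items():
        share = phase_ms.get(phase, 0.0) / total
        if share > cap:
            findings.append(f"{phase} is {share:.0%} of total (target <= {cap:.0%})")
    return findings


print(diagnose({"planning": 1800, "tool_calls": 4200, "model_generation": 900}))
```

In this example the task misses its budget and planning is the phase out of range, which points toward simpler prompts or precomputed lookups rather than faster tool servers.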

Kill shadow agents with an MCP gateway

Shadow agents begin with good intentions and end with unreviewed secrets, unknown costs, and no audit trail. An MCP gateway and registry change the incentives:

- Easy discovery of approved servers by category and owner

- Helpful defaults that enforce scopes, tag costs, and add tracing headers

- Policy profiles so low risk read only servers flow with minimal review

- Centralized rollback that disables a misbehaving server without touching every agent
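The gateway behaviors above can be sketched in a few lines: look up the server in a registry, check its scope and enabled status, write an audit record tagged with the trace ID and owning team, and offer a single disable switch. The class and field names are hypothetical; this is not an Agentforce or MCP SDK API.

```python
from dataclasses import dataclass, field


@dataclass
class ServerEntry:
    owner: str                 # owning team, also used as the cost tag
    allowed_scopes: set[str]
    enabled: bool = True


@dataclass
class McpGateway:
    registry: dict[str, ServerEntry]
    audit_log: list[dict] = field(default_factory=list)

    def authorize(self, agent_id: str, server_id: str, scope: str, trace_id: str) -> bool:
        """Allow a call only for registered, enabled servers and approved scopes."""
        entry = self.registry.get(server_id)
        if entry is None:
            allowed, reason = False, "unregistered server"
        elif not entry.enabled:
            allowed, reason = False, "server disabled"
        elif scope not in entry.allowed_scopes:
            allowed, reason = False, "scope not allowed"
        else:
            allowed, reason = True, "ok"
        # Every decision is audited, including denied calls to shadow connectors.
        self.audit_log.append({
            "trace_id": trace_id, "agent_id": agent_id, "server_id": server_id,
            "scope": scope, "cost_tag": entry.owner if entry is not None else None,
            "allowed": allowed, "reason": reason,
        })
        return allowed

    def disable(self, server_id: str) -> None:
        """Centralized rollback: one switch blocks the server for every agent."""
        self.registry[server_id].enabled = False


gateway = McpGateway(registry={
    "orders-mcp": ServerEntry(owner="support-platform", allowed_scopes={"orders:read"}),
})
print(gateway.authorize("support-agent", "orders-mcp", "orders:read", trace_id="t1"))
```

Because agents never hold server credentials directly in this design, disabling one registry entry takes effect everywhere without redeploying any agent.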

For the infrastructure tailwind that makes all of this possible, see the OpenAI and Nvidia 10GW bet.

What great looks like after 90 days

- One or two tasks at or above 85 percent task success with weekly reviews

- Safe handoff rate tuned to policy, often 5 to 15 percent for frontline tasks

- Grounding and citation coverage above 80 percent with automated checks

- Latency budgets met 95 percent of the time with resilient routing

- No shadow connectors because all tool access flows through the MCP gateway and is visible in Command Center

- A backlog of partner servers in AgentExchange with owners and SLOs
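The 90-day targets translate directly into a pass/fail scorecard. This Python sketch encodes them as (minimum, maximum) bounds. The metric names are hypothetical, the 5 to 15 percent handoff band is one reading of "tuned to policy", and the inclusive comparisons simplify "above 80 percent".

```python
from typing import Optional

# (minimum, maximum) bounds per metric; None means unbounded on that side.
TARGETS: dict[str, tuple[Optional[float], Optional[float]]] = {
    "task_success_rate": (0.85, None),
    "safe_handoff_rate": (0.05, 0.15),
    "citation_coverage": (0.80, None),
    "latency_budget_adherence": (0.95, None),
    "unregistered_tool_calls": (None, 0),   # no shadow connectors
}


def scorecard(metrics: dict[str, float]) -> dict[str, bool]:
    """Return pass/fail per target; a missing metric counts as a failure."""
    results = {}
    for name, (low, high) in TARGETS.items():
        value = metrics.get(name)
        results[name] = (
            value is not None
            and (low is None or value >= low)
            and (high is None or value <= high)
        )
    return results


# Hypothetical week-12 numbers.
week_12 = {
    "task_success_rate": 0.87,
    "safe_handoff_rate": 0.09,
    "citation_coverage": 0.76,
    "latency_budget_adherence": 0.96,
    "unregistered_tool_calls": 0,
}
print(scorecard(week_12))
```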

People and process still decide outcomes

AgentOps is technology and culture. The best programs bring product, security, data, and frontline teams into one cadence. They run crisp postmortems, teach supervisors how to use dashboards, and reward better handoffs and citation quality, not only deflection.

The competitive edge now belongs to operators

Agentforce 3 is not the only way to build agents, but it is a credible attempt to make running them a first-class discipline. Command Center treats telemetry as a product. MCP support points to an open-standards path for tool access and multi-agent interoperability. The expanded marketplace offers a safer route to adopting partner capabilities without reinventing integration. If you instrument first, govern at the gateway, route on real signals, and evaluate continuously, your agents will get more reliable, safer, and cheaper over time.

For the original details, revisit the Salesforce Agentforce 3 announcement. For MCP fundamentals, consult the Model Context Protocol spec.