Meta's Ray-Ban Display and Neural Band make agents real

Meta’s new Ray-Ban Display smart glasses and EMG-based Neural Band move assistants from apps to ambient computing. Here is what the September 2025 launch enables, the constraints that could stall it, and how developers should build for face-first agents.

From apps in pockets to agents on your face

For a decade, AI assistants lived inside phones and speakers. You called them up, waited, and put them away. Meta’s September 2025 unveiling of Ray-Ban Display smart glasses paired with an EMG Neural Band changes that contract. The assistant does not just hear you. It sees what you see, answers in-lens, and acts on tiny wrist gestures that feel as natural as a twitch. On September 17, 2025, Mark Zuckerberg introduced Ray-Ban Display with a display built into the lens and a bundled Neural Band, with U.S. availability set for September 30 at $799. The price, product, and timing are captured in CNBC’s event report. Meta also previewed a developer path, its Wearables Device Access Toolkit, that opens visual and audio capabilities to third parties.

What ambient agents unlock when they live on your face

- Hands-free, step-by-step guidance: Assemble furniture, repair a sink, or learn a new camera with annotated overlays that advance on a subtle cue.

- Live translation and captions: Follow a conversation or lecture without looking down. Captions appear where your attention already is, and translation becomes a shared experience.

- Glanceable communications: Messages, navigation hints, and reminders arrive in-lens as short, private overlays. A nod or finger pinch can archive, reply, or expand.

- Context-aware answers: Ask about the monument in front of you, the ingredient on your counter, or the part in your hand. Vision plus audio gives the agent situational understanding that text alone cannot.

- Subtle action via the wrist: The Neural Band uses surface EMG to read the electrical signals your muscles produce at the wrist, picking up tiny gestures so you can scroll, confirm, or capture without breaking flow.

The through-line is continuity. Instead of starting an interaction, you remain in one, moving between seeing, asking, confirming, and acting without breaking focus.

The hard constraints that decide what ships versus what sticks

1) Latency makes or breaks the illusion

- Visual prompts must be immediate. If captions lag or overlays stall while you hold a screw, you will revert to your phone.

- The end-to-end path matters. Camera capture, on-device preprocessing, encryption, network hops, model inference, rendering, and the display driver all add up. Every millisecond counts.

- On-device versus cloud. Keep perception and short responses local, escalate richer tasks to the cloud, and design graceful fallbacks.
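
The budget arithmetic and the local-versus-cloud split above can be sketched in a few lines. This is a minimal illustration, not Meta's pipeline: the stage names mirror the list above, every millisecond figure is a placeholder assumption, and `path_ms` and `choose_target` are hypothetical helpers.

```python
# Illustrative per-stage latencies in milliseconds. Placeholder values for
# this sketch only; they are not measurements of any real device.
SHARED_MS = {
    "camera_capture": 15,
    "on_device_preprocessing": 10,
    "rendering": 8,
    "display_driver": 5,
}
LOCAL_ONLY_MS = {"local_inference": 40}
CLOUD_ONLY_MS = {"encryption": 3, "network_hops": 80, "cloud_inference": 60}


def path_ms(*stage_groups):
    """Sum every stage on a path; the wearer feels the total, not the parts."""
    return sum(ms for group in stage_groups for ms in group.values())


def choose_target(budget_ms, needs_rich_model, network_up):
    """Pick where to run a request (hypothetical policy for illustration)."""
    local = path_ms(SHARED_MS, LOCAL_ONLY_MS)
    cloud = path_ms(SHARED_MS, CLOUD_ONLY_MS)
    if needs_rich_model and network_up and cloud <= budget_ms:
        return "cloud"
    if local <= budget_ms:
        return "local"    # short answer on device; also the offline fallback
    return "degrade"      # nothing fits the budget, e.g. answer by audio later


print(path_ms(SHARED_MS, LOCAL_ONLY_MS), path_ms(SHARED_MS, CLOUD_ONLY_MS))
print(choose_target(250, needs_rich_model=True, network_up=False))
```

Writing the budget down per stage makes the trade-off visible: under these assumed numbers the network hop alone costs more than the entire local path, which is why short responses are worth keeping on the device.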

2) Battery versus brains

- Form factor fights compute. Glasses want to be light and cool while NPUs want power and thermal headroom.

- Tiered compute is likely. Low-power local models handle wake words, gesture detection, wake-on-look, and safety checks. Burstier tasks like long translations or video summaries escalate on demand or while docked.

- Charging rituals matter. Adaptive brightness, context-aware sensor duty cycles, and transparent per-feature power budgets determine whether the glasses last a full day between charges.
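
A per-feature power budget is simple arithmetic: average draw is each feature's power multiplied by the fraction of time it is active, and runtime is capacity divided by that average. The sketch below assumes a hypothetical battery capacity and made-up draw figures purely to show the calculation; none of the numbers describe the actual hardware.

```python
# Assumed battery capacity (milliwatt-hours) and per-feature draw while
# active (milliwatts). Illustrative placeholders, not device specifications.
BATTERY_MWH = 900.0
FEATURE_DRAW_MW = {
    "always_on_sensing": 20.0,   # wake word, gesture detection, safety checks
    "display": 200.0,            # in-lens overlay
    "camera_vision": 500.0,      # bursty perception tasks
}


def average_draw_mw(duty_cycles):
    """Average power given the fraction of time each feature is active."""
    total = 0.0
    for feature, duty in duty_cycles.items():
        if not 0.0 <= duty <= 1.0:
            raise ValueError(f"duty cycle for {feature} must be in [0, 1]")
        total += FEATURE_DRAW_MW[feature] * duty
    return total


def hours_of_use(duty_cycles):
    """Estimated runtime on one charge for a given usage pattern."""
    return BATTERY_MWH / average_draw_mw(duty_cycles)


typical_day = {"always_on_sensing": 1.0, "display": 0.10, "camera_vision": 0.04}
heavy_day = {"always_on_sensing": 1.0, "display": 0.30, "camera_vision": 0.20}
print(round(hours_of_use(typical_day), 1), round(hours_of_use(heavy_day), 1))
```

Under these assumptions the always-on tier is cheap per hour but never stops, while the bursty tiers dominate as soon as their duty cycle climbs. That is the case for escalating heavy work on demand or while docked.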

3) Privacy by design for continuous sensing

- Ambient does not mean always recording. Default to ephemeral processing for glance and guidance, with clear capture indicators.

- On-device redaction is table stakes. Blur faces and screens, and drop bystander speech from any recording, unless the wearer takes an explicit, visible action.

- Consent in public needs help. LEDs for camera use, a distinct start chime, and a visible capture history in the companion app set expectations.
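
Ephemeral processing can be approximated with a buffer that forgets by default. The following is a minimal sketch, assuming a hypothetical `EphemeralBuffer` class and an arbitrary retention window; it is not drawn from any Meta API.

```python
import time
from collections import deque


class EphemeralBuffer:
    """Holds recent items (e.g. caption lines) and drops them after a TTL."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so the behavior is testable
        self._items = deque()        # (timestamp, payload), oldest first

    def _evict_expired(self):
        cutoff = self._clock() - self._ttl
        while self._items and self._items[0][0] <= cutoff:
            self._items.popleft()

    def add(self, payload):
        self._evict_expired()
        self._items.append((self._clock(), payload))

    def snapshot(self):
        """Return only the payloads that are still inside the TTL window."""
        self._evict_expired()
        return [payload for _, payload in self._items]

    def purge(self):
        """User-triggered wipe: nothing survives, regardless of age."""
        self._items.clear()


captions = EphemeralBuffer(ttl_seconds=30.0)   # 30 s is an assumed window
captions.add("Where is the train station?")
print(captions.snapshot())
```

Nothing in this sketch writes to disk, so anything that should persist has to go through a separate, explicit capture path. That is where an LED, chime, or capture log would attach.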

4) Safety, accessibility, and ergonomics

- Manage visual load. Rate limit overlays and prioritize based on motion, noise, and task complexity.

- Accessibility first. Live captions, adjustable font sizing, high contrast modes, and conversation focus can make the device indispensable.

- Fit and fatigue. Weight distribution, nose pads, and prescription options determine whether the device is worn all day or left on a desk.
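
Rate limiting overlays by context can be expressed as two checks: a priority floor that rises with the wearer's load, and a minimum gap between overlays. The sketch below is one possible policy; the thresholds, the 0 to 3 priority scale, and the names `Context`, `min_priority`, and `OverlayLimiter` are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Context:
    speed_m_s: float       # how fast the wearer is moving
    noise_db: float        # ambient noise level
    task_complexity: int   # 0 = idle, 1 = light task, 2 = demanding task


def min_priority(ctx):
    """Priority floor from 0 (show anything) to 3 (critical only)."""
    floor = 0
    if ctx.speed_m_s > 1.5:        # assumed threshold: brisk walk or faster
        floor += 1
    if ctx.noise_db > 75:          # assumed threshold: loud street
        floor += 1
    if ctx.task_complexity >= 2:
        floor += 1
    return floor


class OverlayLimiter:
    def __init__(self, min_gap_s):
        self.min_gap_s = min_gap_s
        self._last_shown = None

    def allow(self, priority, ctx, now):
        """Return True if an overlay of this priority may be shown at `now`."""
        if priority < min_priority(ctx):
            return False
        if self._last_shown is not None and now - self._last_shown < self.min_gap_s:
            return False
        self._last_shown = now
        return True


limiter = OverlayLimiter(min_gap_s=10)
cycling = Context(speed_m_s=5.0, noise_db=80, task_complexity=2)
print(limiter.allow(priority=1, ctx=cycling, now=0.0))
```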

The platform shift for developers

If glasses become the default way to invoke the assistant, the unit of development changes from apps to agent skills.

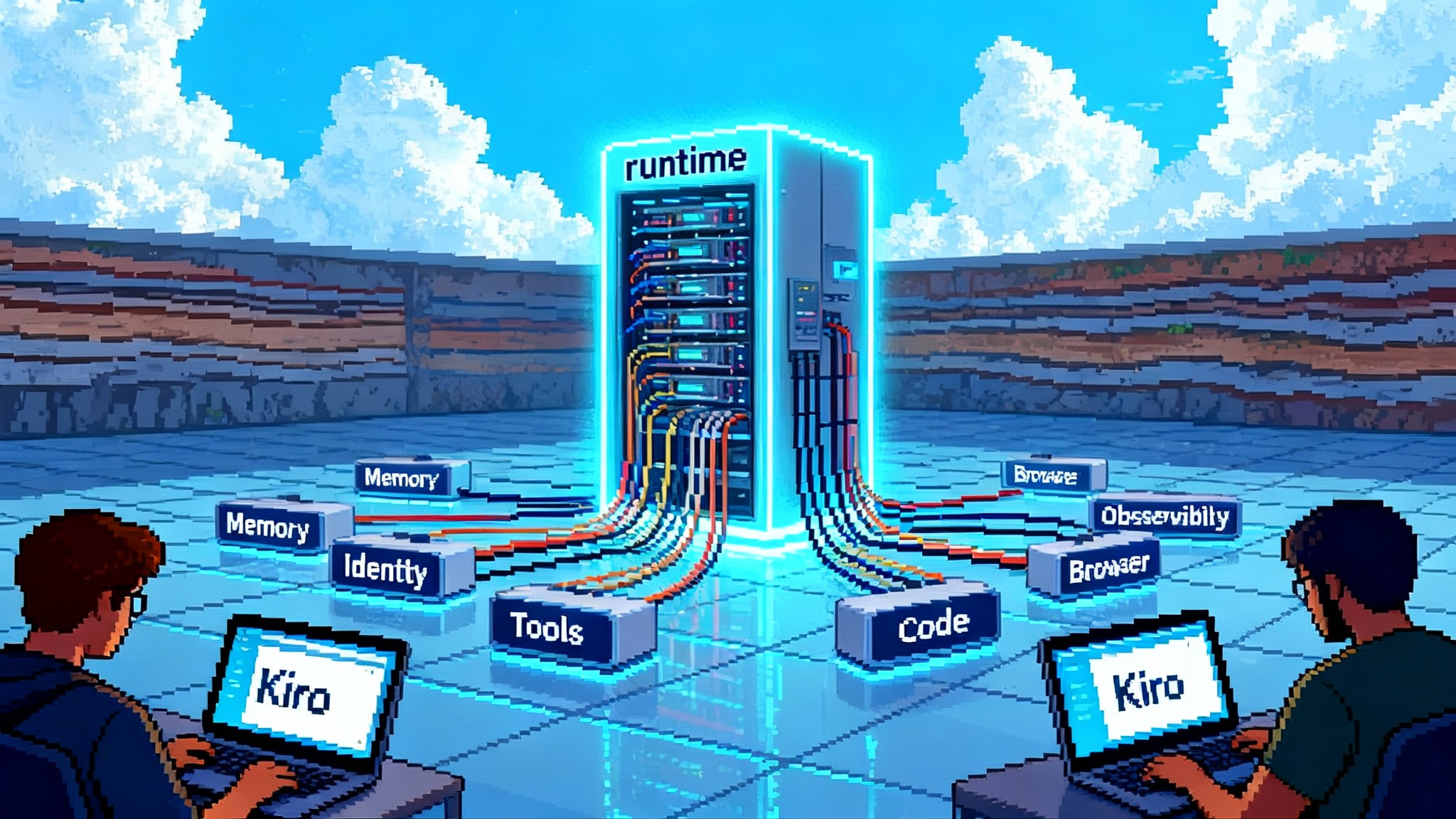

- Skills over screens. Define intents, capabilities, and event handlers that the agent composes based on context. For a deeper look at runtime design, see enterprise agent stack architecture.

- Multimodal inputs are first-class. The same skill may be triggered by voice, a gesture, a look at a tagged object, or a geofence event.

- Output is glanceable by default. Start with a three-word overlay, expand to a short paragraph, then hand off to the phone or desktop by choice.

- On-device versus cloud execution. Declare execution targets and fallbacks. A translation skill might run locally for short phrases and cloud-side for long passages.

- Evented life cycle. Skills are invoked and paused repeatedly as users move through the world. Keep state small, serializable, and privacy-scoped.
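
What a skill declaration might look like is easier to see in code. The sketch below is hypothetical: no published skill schema is assumed, and `Skill`, `SkillState`, `pick_target`, and the character threshold are invented to illustrate declared triggers, execution targets with fallbacks, and small serializable state.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Skill:
    name: str
    triggers: tuple        # e.g. ("voice", "gesture", "gaze", "geofence")
    targets: tuple         # where the skill is able to run
    local_max_chars: int   # longer inputs escalate to the cloud


def pick_target(skill, text, network_up):
    """Choose an execution target, falling back when the cloud is unreachable."""
    can_local = "on_device" in skill.targets
    if can_local and len(text) <= skill.local_max_chars:
        return "on_device"
    if network_up and "cloud" in skill.targets:
        return "cloud"
    return "on_device" if can_local else None   # degraded local fallback


@dataclass
class SkillState:
    skill: str
    step: int = 0

    def pause(self):
        """Serialize to a small JSON blob when the skill is interrupted."""
        return json.dumps(asdict(self))

    @classmethod
    def resume(cls, blob):
        return cls(**json.loads(blob))


translate = Skill("translate", ("voice", "gesture"), ("on_device", "cloud"), 80)
print(pick_target(translate, "¿Dónde está la estación?", network_up=False))
saved = SkillState("translate", step=3).pause()
print(SkillState.resume(saved))
```

Keeping state to a few JSON-safe fields is what makes repeated pause and resume cheap, and it also limits what a skill can retain about the wearer between invocations.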

What a face-first agent means for competitors

- Apple: Could deliver similar affordances via iPhone silicon, Watch, and AirPods, with privacy and integration as differentiators.

- Google: Strength in search and real-world knowledge, plus Android, Pixel cameras, and Gemini. Hardware consistency is the challenge.

- Amazon: Needs vision-aware skills and clearer monetization of agent actions beyond voice-first.

- Microsoft: Copilot spans devices. A consumer glasses partner or licensing play could bring a face-first Copilot to market.

- Snap and startups: Camera-first culture and creator tools remain a niche to defend. Rings, pins, and pendants may complement glasses.

For broader ecosystem moves, see how the browser becomes an agent across surfaces.

Experience patterns that will define everyday use

- Consent-forward capture: Every photo or video starts with an outward signal and a companion log that is easy to show.

- Glance, dwell, commit: A glance reveals a hint, dwelling confirms interest, and a small gesture commits to the action.

- Context cards, not windows: Small, edge-aligned, dismissible cards summarize one action, with a path to expand on the phone.

- Conversation focus modes: When you talk to someone, incoming content steps back and the person in front of you is amplified.

- Safety-aware transforms: When you are moving quickly, text grows, colors simplify, and attention-heavy tasks shift to audio.
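
The glance, dwell, commit pattern is a small state machine. Here is a minimal sketch; the event names, the `Stage` enum, and the dwell threshold are assumptions chosen for illustration, not values from any shipping interface.

```python
from enum import Enum


class Stage(Enum):
    IDLE = "idle"
    HINT = "hint"              # a glance revealed a hint
    CONFIRMED = "confirmed"    # dwelling confirmed interest
    COMMITTED = "committed"    # a small gesture committed the action


DWELL_SECONDS = 0.8   # assumed threshold for "dwelling"


def step(stage, event, gaze_seconds=0.0):
    """Advance one stage at a time; looking away always resets to IDLE."""
    if event == "look_away":
        return Stage.IDLE
    if stage is Stage.IDLE and event == "glance":
        return Stage.HINT
    if stage is Stage.HINT and event == "dwell" and gaze_seconds >= DWELL_SECONDS:
        return Stage.CONFIRMED
    if stage is Stage.CONFIRMED and event == "pinch":
        return Stage.COMMITTED
    return stage   # any other event is ignored, so no stage can be skipped


stage = Stage.IDLE
for event, seconds in [("glance", 0.0), ("dwell", 1.0), ("pinch", 0.0)]:
    stage = step(stage, event, seconds)
print(stage)
```

Because a pinch is ignored unless the card is already confirmed, an accidental gesture cannot commit an action the wearer never looked at.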

Data, privacy, and policy

- Capability sandboxes: Each sensing modality runs in its own sandbox with explicit grants. Defaults should recognize objects, not identities.

- Context permissions: Move beyond raw sensor toggles to place and activity-based permissions.

- Data minimization and deletion: Keep only short-term buffers for captions and translation, with clear controls to purge and export. Long-term memory is opt-in and scoped to specific skills.

- Bystander protections: Automatic blur for faces and screens, plus warnings or overrides for sensitive scenes.

- Model provenance and transparency: Indicate whether responses came from local or cloud models and which third-party skills were invoked.
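
Capability sandboxes and context permissions combine naturally into a default-deny check: a skill may use a capability only if an explicit grant covers the current place and activity. The sketch below assumes a hypothetical `Grant` record and made-up capability names such as `camera.objects`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    capability: str         # e.g. "camera.objects", "microphone.captions"
    places: frozenset       # where the grant applies
    activities: frozenset   # what the wearer is doing


def is_allowed(grants, capability, place, activity):
    """Default deny: allow only when some grant matches all three fields."""
    return any(
        g.capability == capability
        and place in g.places
        and activity in g.activities
        for g in grants
    )


# Object recognition is granted at home for repairs and cooking.
# Identity recognition is never granted, so it is denied everywhere.
HOME_GRANTS = [
    Grant(
        "camera.objects",
        frozenset({"home", "workshop"}),
        frozenset({"repair", "cooking"}),
    ),
]

print(is_allowed(HOME_GRANTS, "camera.objects", "home", "repair"))
print(is_allowed(HOME_GRANTS, "camera.identities", "home", "repair"))
```

Scoping grants to a place and an activity, rather than to a raw sensor toggle, means turning on the camera for a sink repair does not silently enable it at the office.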

The economics of an agent platform

- Monetization flows to outcomes. Pay for rebooking a flight, completing a purchase, or drafting and sending an email, not for taps.

- Distribution depends on trust. Badges for privacy posture, on-device execution, and accessibility quality will matter.

- Tooling must collapse the stack. A single project should target on-device models, cloud fallbacks, and companion escalation with profiling for latency, battery, and data flows. For the compute backdrop enabling this, see OpenAI Nvidia 10GW bet unlocks agents.

Anchored to September 2025, eyes on what is next

The September 30, 2025 U.S. availability date matters because it turns a research demo into a public baseline. From that moment, expectations change. People will compare every phone notification to an in-lens glance. They will expect hands-free step-by-step help in kitchens, garages, and studios, and they will ask why language barriers persist when captions and translation can travel with them.

To reach mainstream use, a face-first agent ecosystem will likely require:

- Rich capability sandboxes with visible controls and clear defaults

- Context permissions mapped to places and activities

- New UX patterns for glanceable cards, dwell confirmation, and safe escalation

- A unified developer surface for skills, models, and privacy contracts

- Strong accessibility affordances that are core, not add-ons

- Social norms supported by hardware signals that make capture, translation, and assistance visible to everyone nearby

Agents that see what you see, answer where you look, and act when you gesture feel less like software and more like a sense you put on each morning. Whether that becomes normal will depend on the work that follows, from silicon to policy to the subtle choreography of a nod that means yes.