AIDR arrives: CrowdStrike crystallizes agent security

CrowdStrike’s September 2025 Pangea acquisition and Fal.Con launch of an Agentic Security Platform mark the arrival of AI Detection and Response. Learn how agentic workflows reshape risk, a 90-day AIDR rollout plan, and what to ask vendors before you buy.

The week AIDR became a category

CrowdStrike just put a stake in the ground. On September 16, 2025, the company announced a deal to acquire Pangea and unveiled what it calls an Agentic Security Platform at its Fal.Con conference, positioning the combined offering as complete AI Detection and Response. The move gives a name to an urgent need enterprises have been feeling for the past year, securing the messy real world of agentic AI that uses tools, acts as a first-class identity in SaaS, and takes instructions through prompts. It also clarifies the starting line for a market that will shape every security program over the next 12 to 24 months. The CrowdStrike release on Pangea acquisition frames the moment well and sets expectations for how platforms will compete.

This piece makes the case that AIDR is more than a marketing label. It is the missing security stack for AI agents. We will unpack the new attack surface, explain why prompt injection works so reliably, sketch a pragmatic AIDR reference architecture, compare AIDR to EDR and XDR, and give CISOs a 90-day rollout plan. We close with implications for buyers and vendors as agent security becomes table stakes. For additional context on building blocks, see our overview of the enterprise agent stack architecture.

What changed when AI became agentic

Agentic workflows turn language models into actors that do real work through external tools and systems. Three shifts matter most:

- Tool use at machine speed. Agents call functions, run code, browse, and operate SaaS APIs. That turns every tool into a potential blast multiplier.

- SaaS agent identities. Agents log in as service principals, app registrations, or delegated users. They own tokens, secrets, and permissions. They can laterally move through an estate like any other identity.

- The prompt layer as a trust boundary. Prompts, retrieved content, and hidden instructions converge in one place. That makes the prompt pipeline a new control plane, not just a UI.

These shifts expand both the opportunities and the obligations for security teams. For a view into operational readiness, see AgentOps as the moat.

How the attack surface expands with agents

When agents act, four categories of risk emerge.

- Tool misuse and overreach

- Unsafe function exposure. A tool that can write files, send email, or move money is a powerful capability. Without scopes and policies, agents can chain tools in unintended ways.

- TOCTOU in tool orchestration. An agent reads a file, a second tool mutates it, and a third tool acts on the stale assumption. Adversaries can wedge attacks in these races.

- Supply chain and payload confusion. Tools pull dependencies, fetch remote content, and deserialize payloads. That adds classic software risk to the agent loop.

- Identity and access for agents

- Credential sprawl. Agents accumulate API keys, OAuth tokens, and cookies. Shadow agents appear when teams prototype with personal tokens.

- Permission drift. Privileged scopes are granted for a quick demo and never revoked. Least privilege erodes across dozens of SaaS tenants.

- Lateral movement by design. If an agent acts as a service principal with broad scopes, it becomes a stepping stone into finance systems, data lakes, or CI/CD.

- Data egress and leakage

- Accidental exfiltration. Prompts and context windows mix sensitive data with public content. Outputs can echo or transform secrets and send them to external endpoints.

- Model and tenant boundary confusion. A multi-model or multi-tenant setup can route requests to the wrong provider or region if guardrails are not explicit.

- The prompt pipeline as an attack surface

- Direct and indirect prompt injection. Hidden instructions in web pages, PDFs, emails, or vector stores can hijack agent behavior.

- Cross-domain trust collapse. Agents treat retrieved content as relevant, so semantic proximity can override security intent. Content becomes command.

Why prompt injection keeps working

Prompt injection works because data and instructions share the same channel. Most models do not have an inherent concept of trust boundaries or policy context, and most agent frameworks merge user input, system prompts, tool outputs, and retrieved documents into a single context window.

What makes it effective in practice:

- Instruction commingling. A single string contains the rules, the question, and the data. The model optimizes for helpfulness, not policy isolation.

- Indirect delivery paths. Instructions embedded in third-party content, HTML attributes, CSS, or comments ride along with legitimate data. A browsing or RAG tool will dutifully feed the whole bundle to the model.

- Tool authority escalation. If an injected instruction triggers a tool call that has real permissions, the attack leaves the chat window and touches live systems.

- Human detection is weak. Invisible text, homoglyphs, or benign-looking sentences that carry imperative intent slip through reviews.

Security teams should treat prompt injection like a new class of input validation failure that spans retrieval pipelines, agent planners, and tool call dispatch. OWASP now ranks prompt injection as the top LLM application risk, which matches field experience. See the concise overview in OWASP LLM01 Prompt Injection.

AIDR explained: from EDR and XDR to agent defense

EDR secured endpoints by giving defenders deep telemetry and rapid response at the host level. XDR broadened that lens across endpoints, identity, email, and cloud. AIDR does something parallel for AI agents.

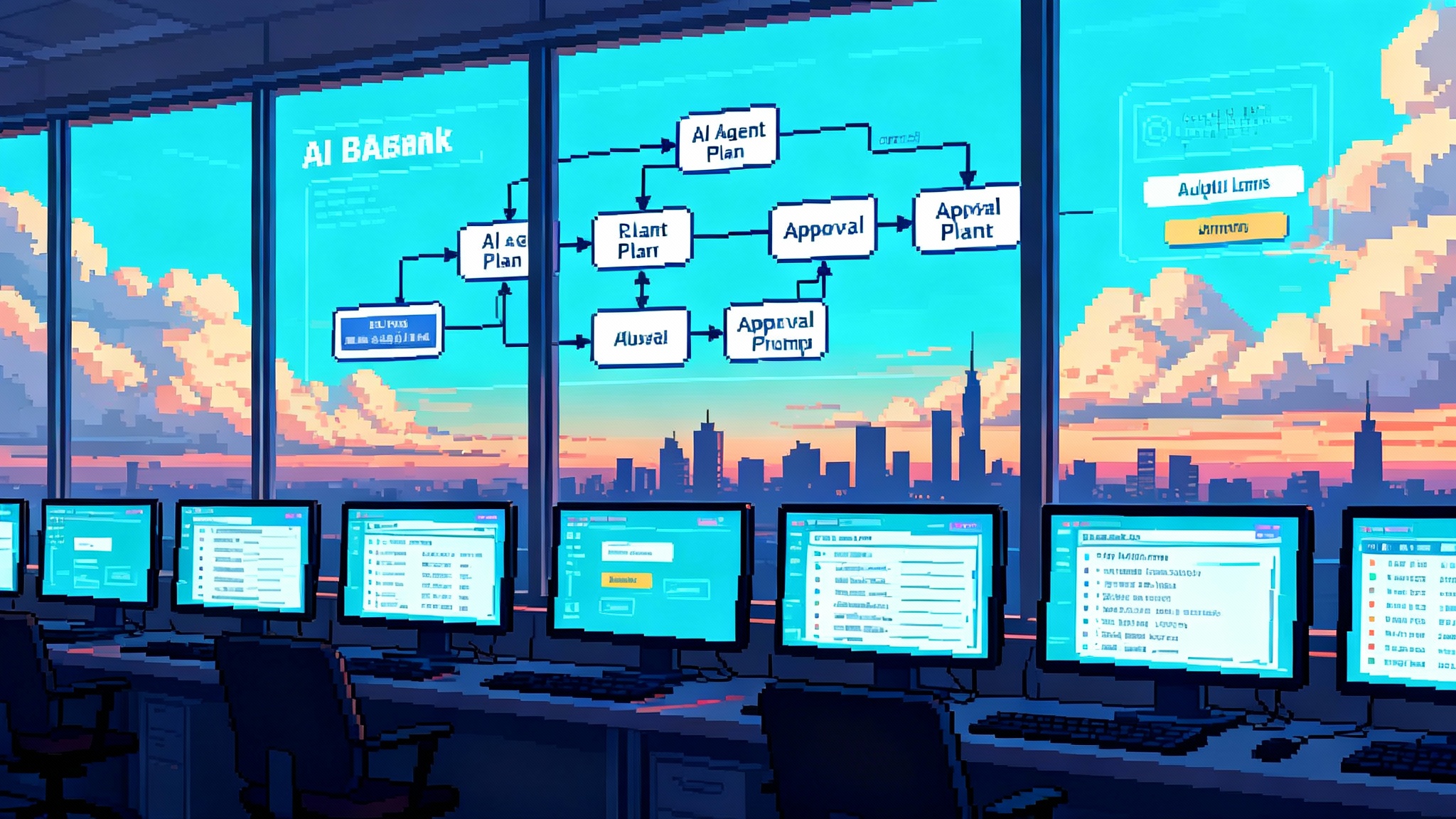

- What is AIDR. AIDR is a control and analytics plane that observes prompts, tool calls, agent identities, and data flows, and then enforces policy in real time.

- What detection looks like. Signals include jailbreak attempts, instruction override patterns, anomalous tool sequences, data egress attempts, and provenance gaps in retrieved content.

- What response looks like. Interventions include policy-based blocking of tool calls, quarantine of agent sessions into a sandbox, credential revocation for agent identities, and automated feedback into prompts or planners to replan safely.

- What success looks like. Lower injection success rate, fewer policy-violating tool calls, and fast containment when an agent is misled.

AIDR is not a replacement for EDR or XDR. It complements them by securing the distinct layers where agents live, decide, and act.

A reference architecture for AIDR

A practical AIDR stack aligns with how agents are built and run. Use this reference architecture as a blueprint.

- Inventory and governance of agents

- Central registry of GPTs, custom agents, and workflows. Track owner, purpose, model, data access, and environments.

- Software bill of behavior. Record the tools each agent can call, with allowed arguments and side effects.

- Policyable tool permissions

- Fine-grained scopes. Tools declare verbs and resources in a manifest, with default deny. Examples: email.send to finance@company.com only, storage.write to a specific bucket path, calendar.read future events only.

- Parameter guards. Static and dynamic validation of arguments before dispatch. Reject wildcards, unbounded queries, or off-domain requests.

- Sandboxed computer and browsing use

- Isolated execution for OS-level actions. File system and network namespaces with allow-lists. Ephemeral sandboxes per session.

- Safe browsing. Headless browser inside a controlled enclave with content sanitization and strict egress rules.

- Prompt and context firewall

- Structured prompting. Separate system rules, user intent, and retrieved data into distinct channels with labels and priorities.

- Content filters and detectors. Identify hidden instructions, jailbreak markers, and risky patterns before they reach the model. Rewrite or drop tainted segments.

- Attested context construction. Record exactly which documents and snippets entered the context window, with hashes and source provenance.

- Data egress controls for AI traffic

- Policy at the AI gateway. Enforce what data classes can leave the enterprise via AI calls, at what granularity, and to which providers or regions.

- Output validation. Scan agent outputs for secrets, regulated data, and sensitive patterns before rendering or forwarding to tools.

- Identity for agents

- First-class lifecycle. Issue, rotate, and revoke credentials for agents. Map agents to service principals with least privilege.

- Human in the loop for escalation. Require approval for scopes that cross financial, privacy, or safety thresholds.

- Governance over GPTs and custom agents

- Review and publish process. Threat model every agent before production. Require sign-off from security and the business owner.

- Drift management. Detect changes to prompts, tools, or model versions and re-run automated evaluations.

- Red-team evaluations and continuous testing

- Injection test suites. Curated adversarial prompts for direct and indirect injection, RAG attacks, and tool abuse.

- Scenario drills. Simulate a malicious invoice PDF, a poisoned wiki, or a compromised partner SaaS app and measure containment.

- Telemetry, audit, and forensics

- High-fidelity logs. Capture prompts, context composition metadata, tool call attempts, responses, and policy decisions with timestamps and request IDs.

- Replayable sessions. Allow investigators to reconstruct an agent run deterministically where possible.

- Response automation and playbooks

- Prebuilt actions. Kill switch for an agent, revoke tokens, disable a tool, isolate a sandbox, notify owners, and create a case in the SIEM.

- Learning loop. Feed detections back into prompt templates, retrieval filters, and tool policies.

A 90-day rollout plan for CISOs

AIDR does not need a year-long program to start paying off. Use this 30-30-30 plan.

Days 0 to 30: establish control and visibility

- Create an agent registry. Inventory all GPTs, copilots, automation scripts, and in-house agents. Tag owners and business processes.

- Default deny for tools. Wrap agent tool calls in a policy engine with allow-listed verbs, resources, and parameter limits.

- Centralize logging. Route prompts, tool attempts, and model responses to your SIEM with a minimal schema.

- Guard the exits. Add an AI gateway in front of model providers with data classification based egress rules.

- Build a safe sandbox. Run browsing and computer use in disposable containers with strict network egress.

Days 31 to 60: harden and test

- Red-team your top workflows. Use curated injection batteries against three high value use cases. Capture Injection Success Rate and top failure modes.

- Lock down identities. Put all agent credentials in a managed secrets store. Rotate keys and set short token lifetimes.

- Sign and verify tool manifests. Treat tool definitions like code. Use code review and CI to publish updates.

- Add output scanning. Secret scanners and data classifiers on every agent output before it leaves the platform or touches a downstream system.

Days 61 to 90: operationalize and measure

- Create SOC playbooks. Define containment actions for prompt injection, tool abuse, and data egress violations. Automate the top three.

- Add approval flows. For agents with financial or privacy impact, require human approval for sensitive tool calls.

- Continuous evaluation. Run weekly adversarial tests and track trend lines. Fail open never. If the evaluation fails, the agent rolls back or pauses.

- Tabletop an incident. Simulate an indirect injection via a vendor knowledge base and walk through detection to remediation with stakeholders.

How AIDR compares to EDR and XDR

- Scope. EDR watches processes and files. XDR watches multiple control points. AIDR watches prompts, tools, and agent identities.

- Signals. EDR sees system calls and memory behavior. XDR sees alerts across domains. AIDR sees instruction overrides, anomalous tool sequences, and data provenance gaps.

- Enforcement. EDR kills processes, quarantines files, and isolates hosts. XDR automates response across domains. AIDR blocks tool calls, rewrites prompts, quarantines agent sessions, and revokes agent credentials.

- Integration. AIDR should feed XDR with detections and receive context back, such as known compromised accounts or risky devices.

In practice, you will run all three. AIDR fills the blind spots created by agent behavior that EDR and XDR cannot observe.

What to ask vendors now

- Visibility. Can you show complete traces of prompts, context construction, tool calls, and policy decisions with low latency and reasonable storage cost?

- Policy model. Do tools and agents support fine-grained scopes, parameter constraints, and human approvals? How are policies versioned and tested?

- Indirect injection defense. How do you sanitize retrieved content and prevent hidden instructions from reaching the model? Can you prove reduction in injection success rate on a standard corpus?

- Identity hygiene. How are agent credentials issued, rotated, and revoked? Can you enforce least privilege at the tool and API level?

- Sandboxing. What isolation exists for browsing and OS actions? Can you detonate untrusted content safely?

- Open telemetry. Can I stream all key events into my SIEM and data lake without losing semantics?

- Response automation. What prebuilt containment actions exist, and can I compose new ones without writing glue code?

Metrics that matter

Pick a small set of KPIs and track them weekly.

- Injection Success Rate. Percentage of runs where a known injection causes a policy violation or unsafe behavior.

- Tool Policy Violation Rate. Percentage of attempted tool calls that breach defined scopes or parameter limits.

- Sensitive Egress Attempts. Count of outputs blocked by data loss or secret scanners.

- Mean Time to Detect and Contain. From first unsafe event to enforced containment.

- Drift Events. Changes to prompts, tools, or model versions that require re-evaluation.

Market implications

For buyers

- Budgets will shift toward AIDR platforms that integrate with existing EDR and XDR. Expect early consolidation, but do not wait. Start with minimal viable controls and an agent registry. Real-world traction like the Citi agent pilot playbook shows how quickly value emerges.

- Proof beats promises. Ask for evaluation harnesses and published metrics on injection defense, not just demos.

- Plan for data gravity. Prompt and tool telemetry will be high volume. Choose platforms that let you keep logs in your lake or SIEM on your terms.

For vendors

- Agent-aware telemetry becomes non-negotiable. Platforms must capture prompts, context composition, tool calls, and policy decisions.

- Policy and identity are core. Vendors that treat tool permissions and agent credentials as first-class will pull ahead.

- Red-team rigor will differentiate. Independent evaluations and shared test suites will matter more than feature lists.

- Consolidation is coming. EDR and XDR leaders will fold AIDR into their platforms, and best-of-breed specialists will compete on depth and time to value.

What good looks like by year end

- Every agent in production has an owner, a registry entry, scoped tool permissions, a tested prompt template, and a defined data perimeter.

- Browsing and OS actions run only inside sandboxes with strict egress and ephemeral storage.

- A prompt firewall intercepts and rewrites risky inputs before they reach the model. Retrieved content is sanitized and tagged with provenance.

- Agent outputs pass through secret and data classifiers. Violations are blocked or redacted automatically.

- SOC playbooks exist for injection, tool abuse, and data egress, with automated containment for the most likely scenarios.

- Weekly adversarial evaluations run on top workflows. The organization tracks trend lines and treats regressions as release blockers.

The bottom line

Agentic AI is powerful precisely because it can act. That power creates a new security surface that does not fit neatly into legacy controls. With the Pangea acquisition and an Agentic Security Platform narrative, CrowdStrike has helped crystallize a category. AIDR is the right mental model and the right stack for what comes next. Start with visibility, lock down tools and identities, put a firewall in front of prompts, and rehearse the worst day. Do that in 90 days, then keep iterating. Agent security will be table stakes sooner than most teams expect, and those who get there early will ship AI faster with fewer surprises.